The Rack2Cloud Method: A Strategic Guide to Kubernetes Day 2 Operations

Why Your Cluster Keeps Crashing: The 4 Laws of Kubernetes Reliability

This is the Strategic Pillar of the Rack2Cloud Diagnostic Series. It synthesizes the lessons from our technical deep dives into a unified operational framework.

Day 0 is easy. You run the installer, the API server comes up, and you feel like a genius. Day 1 is magic. You deploy Hello World, and it scales. Day 2 is a hangover.

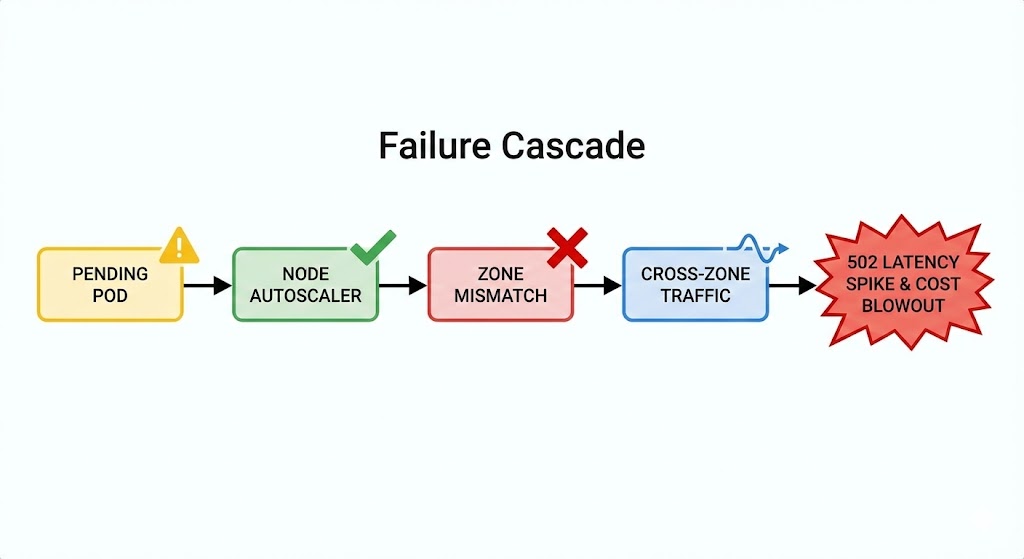

On Day 2, the pager rings. A Pending Pod triggers a node scale-up, which triggers a cross-zone storage conflict, which saturates a NAT Gateway, causing 502 errors on the frontend.

Most teams treat these incidents as “random bugs.” They are not. Kubernetes failures are never random.

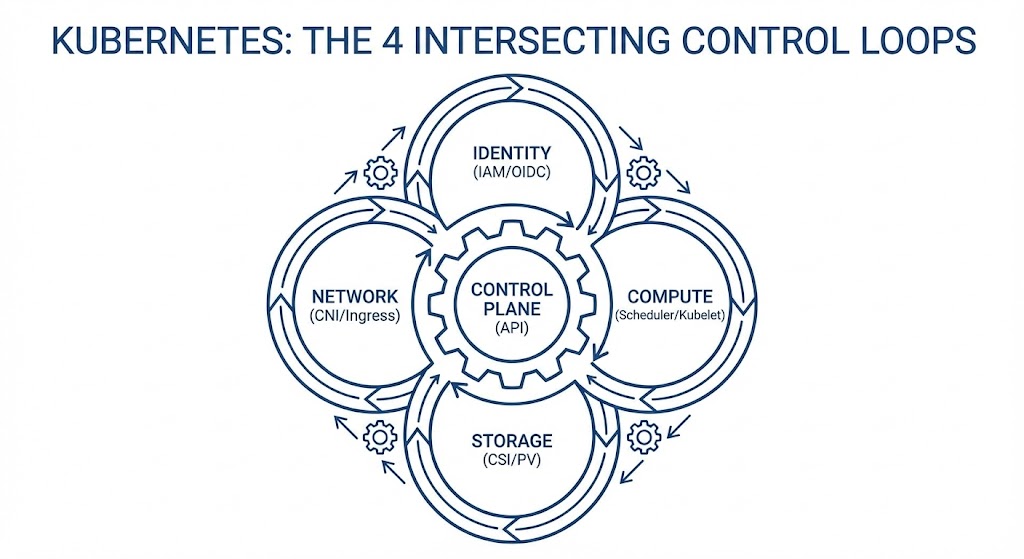

Every production incident comes from violating the physics of Four Intersecting Control Loops:

- Identity (The API & IAM)

- Compute (The Scheduler)

- Network (The CNI & Overlay)

- Storage (The CSI & Physics)

If you treat Kubernetes like a collection of VMs, you will fail. If you treat it like an eventual-consistency engine, you will thrive.

This is the Rack2Cloud Method for surviving Day 2.

The System Model: 4 Intersecting Loops

We need to fix your mental model. Kubernetes is not a hierarchy; it is a mechanism. Incidents happen at the seams where these loops grind against each other.

- Identity Loop: Authenticates the request (ServiceAccount → AWS IAM).

- Compute Loop: Places the workload (Scheduler → Kubelet).

- Storage Loop: Provisions the physics (CSI → EBS/PD).

- Network Loop: Routes the packet (CNI → iptables → Ingress).
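The “eventual-consistency engine” framing can be made concrete with a toy reconcile loop. A controller compares desired state with observed state and acts only on the difference, in bounded steps, until the two converge.

This is a minimal Python sketch under simplifying assumptions. It tracks a single replica count, and a hypothetical `max_step` stands in for rate limits and capacity. It is not how any real controller is implemented.

```python
def reconcile(desired: int, observed: int) -> int:
    """Return the replica delta to act on (positive = create, negative = delete)."""
    return desired - observed


def run_until_converged(desired: int, observed: int,
                        max_step: int = 2, max_iterations: int = 10) -> list:
    """Repeatedly reconcile, changing at most max_step replicas per pass.

    Returns the observed replica count after each pass that changed something.
    """
    history = []
    for _ in range(max_iterations):
        delta = reconcile(desired, observed)
        if delta == 0:
            break  # converged: desired and observed state agree
        # Clamp the correction to simulate a loop that cannot fix everything at once.
        step = max(-max_step, min(max_step, delta))
        observed += step
        history.append(observed)
    return history


print(run_until_converged(desired=5, observed=0))  # [2, 4, 5]
```

No single pass has to succeed; a loop that keeps re-reading state closes the gap over time. The flip side is that a constraint owned by another loop can stop it from converging at all.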

When you see a “Networking Error” (like a 502), it is often a Compute decision (scheduling on a full node) colliding with a Storage constraint (zonal lock-in).

The Domino Effect: A Real-World Escalation

Here is why you need to understand the whole system.

- 09:00 AM: A Pod goes Pending. (Compute Issue).

- 09:01 AM: Cluster Autoscaler provisions a new Node in us-east-1b.

- 09:02 AM: The Pod lands on the new Node.

- 09:03 AM: The Pod tries to mount its PVC. Fails. The disk is in us-east-1a. (Storage Issue).

- 09:05 AM: The app tries to connect to the database. Because of the zonal split, traffic crosses the AZ boundary.

- 09:10 AM: Latency spikes. The NAT Gateway gets saturated. (Network Issue).

- Result: A “Storage” constraint manifested as a “Network” outage.

Pillar 1: Identity is Not a Credential

The Law of Access

On Day 1, you hardcode AWS keys into Kubernetes Secrets. By Day 365, this is a security breach waiting to happen.

- The Mistake: Long-lived static credentials.

- The Symptom: ImagePullBackOff and broken permission handshakes.

- The Strategy: Identity must be ephemeral.
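A quick way to find the Day 1 mistake is to scan Secret manifests for values that look like long-lived AWS access key IDs. The sketch below assumes the Secret is already loaded as a Python dict, for example from `kubectl get secret <name> -o json`.

It relies on a heuristic: long-term IAM user key IDs start with `AKIA` and are 20 characters long. It is a rough detector, not a complete secret scanner. The Secret name is hypothetical.

```python
import base64
import re

# Heuristic: long-term IAM user access key IDs begin with "AKIA" followed by
# 16 uppercase letters or digits. Temporary (STS) keys use other prefixes.
STATIC_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def find_static_keys(secret: dict) -> list:
    """Return the Secret keys whose values look like static AWS access key IDs."""
    hits = []
    # `data` values are base64-encoded; `stringData` values are plain text.
    for name, encoded in (secret.get("data") or {}).items():
        decoded = base64.b64decode(encoded).decode("utf-8", errors="replace")
        if STATIC_KEY_ID.search(decoded):
            hits.append(name)
    for name, plain in (secret.get("stringData") or {}).items():
        if STATIC_KEY_ID.search(plain):
            hits.append(name)
    return hits


secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "app-credentials"},
    "data": {
        # AWS's documented example key ID, not a real credential.
        "AWS_ACCESS_KEY_ID": base64.b64encode(b"AKIAIOSFODNN7EXAMPLE").decode(),
        "REGION": base64.b64encode(b"us-east-1").decode(),
    },
}
print(find_static_keys(secret))  # ['AWS_ACCESS_KEY_ID']
```

A hit does not prove a breach. It means the key should be rotated and replaced with a role-based identity.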

The Production Primitives

- IAM Roles for Service Accounts (IRSA): Never put an AWS Access Key in a Pod. Map an IAM Role directly to a Kubernetes ServiceAccount via OIDC.

- ClusterRoleBinding: Audit these weekly. If you have too many cluster-admins, you have no security.
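Both primitives can be sketched as plain manifest data.

The ServiceAccount shows the IRSA pattern on Amazon EKS. The `eks.amazonaws.com/role-arn` annotation names an IAM role, and no key is stored anywhere. The account ID, role, and namespace are placeholders. The IAM side (OIDC provider and role trust policy) is not shown.

The helper is a hypothetical weekly-audit aid that lists every subject bound to the built-in `cluster-admin` ClusterRole. The sample bindings are made up. In practice they would come from the `items` array of `kubectl get clusterrolebindings -o json`.

```python
# IRSA on EKS: the ServiceAccount references an IAM role; no key is stored.
# Account ID, role name, and namespace are placeholders.
irsa_service_account = {
    "apiVersion": "v1",
    "kind": "ServiceAccount",
    "metadata": {
        "name": "billing-api",
        "namespace": "billing",
        "annotations": {
            "eks.amazonaws.com/role-arn": "arn:aws:iam::111122223333:role/billing-api-role",
        },
    },
}


def cluster_admin_subjects(bindings: list) -> list:
    """List subjects bound to the cluster-admin ClusterRole as 'Kind/namespace/name'."""
    found = []
    for binding in bindings:
        ref = binding.get("roleRef", {})
        if ref.get("kind") == "ClusterRole" and ref.get("name") == "cluster-admin":
            for subject in binding.get("subjects") or []:
                namespace = subject.get("namespace", "-")  # Users and Groups have no namespace
                found.append(f"{subject['kind']}/{namespace}/{subject['name']}")
    return found


rbac = "rbac.authorization.k8s.io"
sample_bindings = [
    {"metadata": {"name": "cluster-admin"},
     "roleRef": {"apiGroup": rbac, "kind": "ClusterRole", "name": "cluster-admin"},
     "subjects": [{"apiGroup": rbac, "kind": "Group", "name": "system:masters"}]},
    {"metadata": {"name": "ci-deployer-admin"},
     "roleRef": {"apiGroup": rbac, "kind": "ClusterRole", "name": "cluster-admin"},
     "subjects": [{"kind": "ServiceAccount", "name": "deployer", "namespace": "ci"}]},
    {"metadata": {"name": "dev-view"},
     "roleRef": {"apiGroup": rbac, "kind": "ClusterRole", "name": "view"},
     "subjects": [{"apiGroup": rbac, "kind": "Group", "name": "developers"}]},
]
print(cluster_admin_subjects(sample_bindings))
# ['Group/-/system:masters', 'ServiceAccount/ci/deployer']
```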

Pillar 2: Compute is Volatile

The Law of Capacity

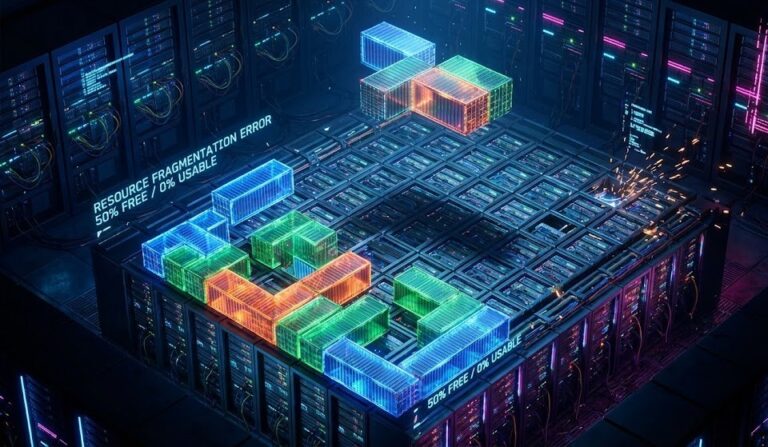

You think of Nodes as servers. Kubernetes thinks of Nodes as a pool of CPU/RAM liquidity. If you don’t define your “spend,” the Scheduler will freeze your assets.

- The Mistake: Deploying pods without Requests/Limits.

- The Symptom: Pending Pods & Scheduler Lock-up.

- The Strategy: Treat scheduling as a financial budget.
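“Treat scheduling as a budget” is easiest to see in the scheduler's filter step: a Pod fits only on a node whose free capacity covers every one of its requests.

The sketch below is a simplified first-fit model; the real kube-scheduler filters and then scores nodes. Node names and capacities are hypothetical, with CPU in millicores and memory in MiB. It shows two things:

- A Pod can stay Pending even when the cluster as a whole has enough free CPU.
- A Pod with no requests passes the filter everywhere.

```python
from typing import Optional

# Free (allocatable minus already-requested) capacity per node: CPU in millicores, memory in MiB.
nodes = {
    "node-a": {"cpu": 1500, "memory": 4096},
    "node-b": {"cpu": 1500, "memory": 4096},
    "node-c": {"cpu": 1000, "memory": 2048},
}


def fits(free: dict, request: dict) -> bool:
    """A node passes the filter only if every requested resource fits in its free capacity."""
    return all(free.get(resource, 0) >= amount for resource, amount in request.items())


def first_fit(nodes: dict, request: dict) -> Optional[str]:
    """Return the first node that fits the request, or None (the Pod stays Pending)."""
    for name, free in nodes.items():
        if fits(free, request):
            return name
    return None


def cluster_free(nodes: dict, resource: str) -> int:
    """Aggregate free capacity across all nodes (what dashboards usually show)."""
    return sum(free.get(resource, 0) for free in nodes.values())


big_pod = {"cpu": 2000, "memory": 1024}
print(cluster_free(nodes, "cpu"))   # 4000: "enough" CPU in aggregate...
print(first_fit(nodes, big_pod))    # None: ...but fragmented across nodes, so the Pod is Pending
print(first_fit(nodes, {}))         # node-a: no requests means the filter has nothing to check
```

The last line is the risky case. Without requests, the filter admits the Pod anywhere, and whether the node can actually carry it is left to chance.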

The Production Primitives

- Requests & Limits: Mandatory. If they are missing, the scheduler is guessing.

- PriorityClass: Define critical vs. batch. When the cluster is full, who dies first?

- PodDisruptionBudget (PDB): You must tell Kubernetes, “You can kill 1 replica, but never 2.”
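The two policy objects can be sketched as manifest data, together with the arithmetic behind them. The names (`critical`, `batch`, `checkout`) and priority values are hypothetical.

- `disruptions_allowed` approximates how a `maxUnavailable` budget is evaluated: currently healthy Pods minus the number that must stay healthy, floored at zero.
- `preemption_candidates` is a simplified view of preemption that ignores per-node placement and PDB checks.

```python
# Hypothetical tiers: when the cluster is full, lower-value Pods are preempted first.
priority_classes = [
    {"apiVersion": "scheduling.k8s.io/v1", "kind": "PriorityClass",
     "metadata": {"name": "critical"}, "value": 100000, "globalDefault": False},
    {"apiVersion": "scheduling.k8s.io/v1", "kind": "PriorityClass",
     "metadata": {"name": "batch"}, "value": 1000, "globalDefault": False},
]

# "You can kill 1 replica, but never 2."
checkout_pdb = {
    "apiVersion": "policy/v1",
    "kind": "PodDisruptionBudget",
    "metadata": {"name": "checkout-pdb"},
    "spec": {"maxUnavailable": 1, "selector": {"matchLabels": {"app": "checkout"}}},
}


def disruptions_allowed(expected_pods: int, current_healthy: int, max_unavailable: int) -> int:
    """How many voluntary evictions the budget permits right now."""
    desired_healthy = expected_pods - max_unavailable
    return max(0, current_healthy - desired_healthy)


def preemption_candidates(pods: list, incoming_priority: int) -> list:
    """Names of Pods with strictly lower priority than the incoming Pod, lowest first."""
    lower = [p for p in pods if p["priority"] < incoming_priority]
    return [p["name"] for p in sorted(lower, key=lambda p: p["priority"])]


print(disruptions_allowed(3, 3, 1))  # 1: one replica may be drained
print(disruptions_allowed(3, 2, 1))  # 0: a replica is already down, so drains must wait
print(preemption_candidates(
    [{"name": "report-job", "priority": 1000}, {"name": "checkout-0", "priority": 100000}],
    incoming_priority=100000,
))  # ['report-job']
```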

Pillar 3: The Network is an Illusion

The Law of Connectivity

Kubernetes networking is a stack of lies. It is an Overlay Network wrapping a Cloud Network wrapping a Physical Network.

- The Mistake: Trusting the overlay network blindly.

- The Symptom: 502 Bad Gateway & MTU Blackholes.

- The Strategy: Design for Eventual Consistency.
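MTU blackholes come down to arithmetic. Encapsulation adds bytes to every packet, so if the Pod interface keeps the underlay's MTU, full-size packets no longer fit. When the Don't Fragment bit is set and ICMP “fragmentation needed” replies are filtered, those packets are silently dropped.

The overhead figures below are the commonly cited IPv4-underlay values for VXLAN (50 bytes) and IP-in-IP (20 bytes). Other encapsulations and IPv6 underlays add different amounts, so check your CNI's documentation.

```python
# Per-packet encapsulation overhead in bytes, IPv4 underlay.
# VXLAN: outer IPv4 (20) + UDP (8) + VXLAN header (8) + inner Ethernet header (14) = 50.
# IP-in-IP: one extra IPv4 header = 20.
ENCAP_OVERHEAD = {"none": 0, "ipip": 20, "vxlan": 50}


def pod_mtu(underlay_mtu: int, encap: str) -> int:
    """Largest MTU the Pod interface can use without exceeding the underlay MTU."""
    return underlay_mtu - ENCAP_OVERHEAD[encap]


def exceeds_underlay(packet_size: int, underlay_mtu: int, encap: str) -> bool:
    """True if the encapsulated packet is too big for the underlay.

    With DF set and ICMP blocked, such a packet is silently dropped (a blackhole).
    """
    return packet_size + ENCAP_OVERHEAD[encap] > underlay_mtu


print(pod_mtu(1500, "vxlan"))                   # 1450
print(exceeds_underlay(1500, 1500, "vxlan"))    # True: Pod MTU left at 1500
print(exceeds_underlay(1450, 1500, "vxlan"))    # False: Pod MTU sized for the overlay
```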

The Production Primitives

- Readiness Probes: If these are wrong, the Load Balancer sends traffic to dead pods.

- NetworkPolicy: Default deny. Don’t let the frontend talk to the billing database directly.

- Ingress Annotations: Tune your timeouts (proxy-read-timeout) and buffers. Defaults are for demos, not production.
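Here are the three primitives as manifest fragments, written as Python dicts so they can be checked in code. Paths, ports, namespaces, and timeout values are hypothetical. The annotation keys assume the ingress-nginx controller; other controllers use different keys.

The default-deny policy selects every Pod in the namespace and declares the Ingress policy type with no allow rules. Nothing gets in until a more specific policy permits it. This only takes effect if the CNI enforces NetworkPolicy.

```python
# Readiness probe for a container: after failureThreshold consecutive failures the Pod
# is marked not ready and stops receiving Service traffic.
readiness_probe = {
    "httpGet": {"path": "/healthz", "port": 8080},
    "periodSeconds": 5,
    "failureThreshold": 3,
}

# Default deny: an empty podSelector matches every Pod in the namespace,
# and listing "Ingress" with no ingress rules allows no inbound traffic.
default_deny_ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "default-deny-ingress", "namespace": "billing"},
    "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
}

# ingress-nginx annotations; timeout values are in seconds, passed as strings.
ingress_annotations = {
    "nginx.ingress.kubernetes.io/proxy-read-timeout": "120",
    "nginx.ingress.kubernetes.io/proxy-send-timeout": "120",
    "nginx.ingress.kubernetes.io/proxy-buffer-size": "16k",
}


def is_default_deny_ingress(policy: dict) -> bool:
    """True if the policy selects all Pods and allows no inbound traffic.

    This sketch requires policyTypes to list "Ingress" explicitly.
    """
    spec = policy.get("spec", {})
    return (
        spec.get("podSelector") == {}
        and "Ingress" in spec.get("policyTypes", [])
        and not spec.get("ingress")
    )


print(is_default_deny_ingress(default_deny_ingress))  # True
```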

Pillar 4: Storage Has Gravity

The Law of Physics

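Compute can teleport. Data has mass. A 1TB zonal disk (an EBS volume or a zonal PD) cannot be attached to a node in another Availability Zone at all; moving it means a snapshot and a restore, not a millisecond reschedule.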

- The Mistake: Treating a Database Pod like a Web Server Pod.

- The Symptom: Volume Node Affinity Conflict.

- The Strategy: Respect Data Gravity.
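The Volume Node Affinity Conflict from the 09:03 AM step of the escalation is a label-matching check. A provisioned PersistentVolume carries required node affinity for its zone, and a Pod using it can only run on nodes whose labels satisfy that affinity.

The sketch makes a few assumptions:

- The volume is pinned with the well-known `topology.kubernetes.io/zone` label. Some CSI drivers use their own topology key.
- Only the `In` and `NotIn` operators are handled, and `matchFields` is ignored.
- As in Kubernetes node selector terms, terms are ORed and expressions within a term are ANDed.

The PV name and size are hypothetical.

```python
ZONE = "topology.kubernetes.io/zone"


def expression_matches(expr: dict, labels: dict) -> bool:
    """Evaluate one node selector requirement against a node's labels."""
    value = labels.get(expr["key"])
    if expr["operator"] == "In":
        return value in expr["values"]
    if expr["operator"] == "NotIn":
        return value not in expr["values"]
    raise ValueError(f"operator not handled in this sketch: {expr['operator']}")


def term_matches(term: dict, labels: dict) -> bool:
    exprs = term.get("matchExpressions") or []
    # An empty node selector term matches no nodes; expressions inside a term are ANDed.
    return bool(exprs) and all(expression_matches(e, labels) for e in exprs)


def volume_allows_node(pv: dict, node_labels: dict) -> bool:
    """True if the node satisfies the PV's required node affinity."""
    terms = (pv["spec"].get("nodeAffinity", {})
             .get("required", {})
             .get("nodeSelectorTerms", []))
    if not terms:
        return True  # no topology constraint recorded on the volume
    return any(term_matches(term, node_labels) for term in terms)  # terms are ORed


# A PV provisioned in us-east-1a.
pv = {
    "apiVersion": "v1",
    "kind": "PersistentVolume",
    "metadata": {"name": "pvc-data-postgres-0"},
    "spec": {
        "capacity": {"storage": "1Ti"},
        "nodeAffinity": {"required": {"nodeSelectorTerms": [
            {"matchExpressions": [{"key": ZONE, "operator": "In", "values": ["us-east-1a"]}]}
        ]}},
    },
}

print(volume_allows_node(pv, {ZONE: "us-east-1b"}))  # False: the new autoscaled node
print(volume_allows_node(pv, {ZONE: "us-east-1a"}))  # True
```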

The Production Primitives

- volumeBindingMode: WaitForFirstConsumer: The single most important setting for EBS/PD storage.

- topologySpreadConstraints: Force the scheduler to spread pods across zones before they bind storage.

- StatefulSet: Never use a Deployment for a database.
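The StorageClass and spread constraint might look like this as manifest data. The class name, the `app: postgres` label, and the gp3 volume type are hypothetical choices for the AWS EBS CSI driver (`ebs.csi.aws.com`).

`zone_is_allowed` is a simplified version of the `DoNotSchedule` check. Placing one more matching Pod in a zone must not push that zone more than `maxSkew` above the least-populated eligible zone.

```python
storage_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "gp3-wait"},
    "provisioner": "ebs.csi.aws.com",
    "parameters": {"type": "gp3"},
    # Delay provisioning until a Pod is scheduled, so the disk is created in that Pod's zone.
    "volumeBindingMode": "WaitForFirstConsumer",
}

# Goes in the StatefulSet's Pod template spec.
topology_spread = [{
    "maxSkew": 1,
    "topologyKey": "topology.kubernetes.io/zone",
    "whenUnsatisfiable": "DoNotSchedule",
    "labelSelector": {"matchLabels": {"app": "postgres"}},
}]


def zone_is_allowed(pods_per_zone: dict, zone: str, max_skew: int) -> bool:
    """Would one more matching Pod in `zone` keep skew within max_skew?"""
    global_min = min(pods_per_zone.values())
    return pods_per_zone[zone] + 1 - global_min <= max_skew


def allowed_zones(pods_per_zone: dict, max_skew: int) -> list:
    return sorted(z for z in pods_per_zone if zone_is_allowed(pods_per_zone, z, max_skew))


print(allowed_zones({"us-east-1a": 1, "us-east-1b": 1, "us-east-1c": 0}, max_skew=1))
# ['us-east-1c']
```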

The 5th Element: Observability

You cannot fix what you cannot see. Without observability, Kubernetes replaces simple outages with complex mysteries.

The Two Models (RED vs USE)

- The Services (RED): Rate, Errors, Duration. (Is the App happy?)

- The Infrastructure (USE): Utilization, Saturation, Errors. (Is the Node happy?)
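The RED side of that split can be computed from raw request records. This sketch assumes:

- Each record is a hypothetical `(status_code, duration_ms)` pair collected over a fixed window.
- 5xx responses count as errors.
- The p99 duration uses the nearest-rank method.

A metrics backend would normally derive these from histograms instead.

```python
def red_metrics(requests: list, window_seconds: float) -> dict:
    """Rate (req/s), error ratio (5xx share), and p99 duration (ms, nearest-rank)."""
    n = len(requests)
    if n == 0:
        return {"rate": 0.0, "error_ratio": 0.0, "p99_ms": 0.0}
    errors = sum(1 for status, _ in requests if status >= 500)
    durations = sorted(duration for _, duration in requests)
    rank = (99 * n + 99) // 100  # ceil(0.99 * n) using integer arithmetic
    return {
        "rate": n / window_seconds,
        "error_ratio": errors / n,
        "p99_ms": durations[rank - 1],
    }


sample = [(200, 12)] * 97 + [(200, 40), (502, 900), (504, 1200)]
print(red_metrics(sample, window_seconds=10))
# {'rate': 10.0, 'error_ratio': 0.02, 'p99_ms': 900}
```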

The Golden Rule of Logs: “Log parsing” is dead. You need Structured Logging. Every log line must include: trace_id, span_id, pod_name, node_name, namespace.
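Here is a minimal Python sketch of that rule, using only the standard `logging` and `json` modules. It makes two assumptions:

- The Pod, node, and namespace names are injected as environment variables via the Kubernetes Downward API (`fieldRef` paths `metadata.name`, `spec.nodeName`, and `metadata.namespace`). The variable names `POD_NAME`, `NODE_NAME`, and `POD_NAMESPACE` are conventions chosen here, not requirements.
- The caller passes `trace_id` and `span_id` from whatever tracing library is in use.

The order ID and trace values are placeholders.

```python
import json
import logging
import os

REQUIRED_FIELDS = ("trace_id", "span_id", "pod_name", "node_name", "namespace")


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per log line with the fields every line must carry."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "message": record.getMessage(),
            # Injected via the Downward API in the Pod spec (assumed variable names).
            "pod_name": os.environ.get("POD_NAME", "unknown"),
            "node_name": os.environ.get("NODE_NAME", "unknown"),
            "namespace": os.environ.get("POD_NAMESPACE", "unknown"),
            # Supplied per call through logging's `extra` mechanism.
            "trace_id": getattr(record, "trace_id", None),
            "span_id": getattr(record, "span_id", None),
        }
        return json.dumps(entry)


logger = logging.getLogger("billing")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("charge failed for order %s", "A-1042",
            extra={"trace_id": "4bf92f3577b34da6a3ce929d0e0e4736", "span_id": "00f067aa0ba902b7"})
```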

The Maturity Ladder: How to Level Up

Where is your team today? And how do you get to the next level?

| Stage | Behavior | Architecture Pattern | The Learning Path |
| --- | --- | --- | --- |
| Reactive | SSH into nodes to debug. | Manual YAML editing. | Start Here |
| Operational | Dashboards & Alerts. | Helm Charts & CI/CD. | Modern Infra & IaC Path |
| Architectural | Guardrails (OPA/Kyverno). | Policy-as-Code. | Cloud Architecture Path |
| Platform | “Golden Paths” for devs. | Internal Developer Platform (IDP). | Mastery |

Moving from Operational to Architectural:

If you are stuck fixing broken pipelines and debugging Terraform state, you need the Modern Infra & IaC Path.

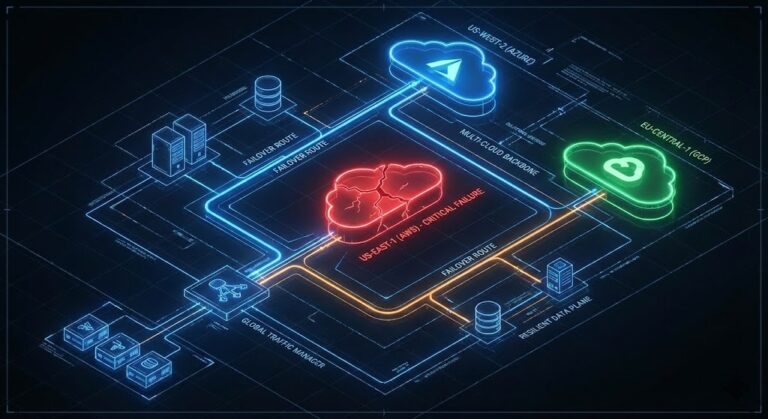

If you are ready to design resilient, multi-region control planes, you need the Cloud Architecture Path.

The Rack2Cloud Anti-Pattern Table

Share this with your team. If you see the Symptom, stop blaming the wrong cause.

| Symptom | What Teams Blame | The Real Cause |
| --- | --- | --- |
| ImagePullBackOff | The Registry / Docker | Identity (IAM/IRSA) |
| Pending Pods | “Not enough nodes” | Fragmentation & Missing Requests |
| 502 / 504 Errors | The Application Code | Network Translation (MTU/Headers) |
| Stuck StatefulSet | “Kubernetes Bug” | Storage Gravity (Topology) |

Conclusion: From Operator to Architect

Kubernetes is not a platform you install.

It is a system you operate.

The difference between a frantic team and a calm team isn’t the tools they use. It’s the laws they respect.

The Cluster Readiness Checklist:

Ready to survive Day 2? If you want to go deeper than blog posts and build these systems in a hands-on lab environment, join us in the Cloud Architecture Learning Path.

Frequently Asked Questions (Day 2 Ops)

A: Day 1 is about installation and deployment (getting the cluster running and shipping the first app). Day 2 is about lifecycle management (backups, upgrades, security patching, observability, and scaling). Day 2 is where the bulk of the engineering time is spent.

A: This is usually a Compute Loop failure. The Kubelet may be crashing due to resource starvation (missing Requests/Limits), or the CNI plugin (Network Loop) may have failed to allocate IP addresses. Check the Kubelet logs on the node itself.

A: This is a Storage Gravity issue. To fix it, you must use volumeBindingMode: WaitForFirstConsumer in your StorageClass. This forces the storage driver to wait until the Scheduler has picked a node before creating the disk, ensuring the disk and node are in the same Availability Zone.

A: In stateful workloads, Kubernetes effectively has two schedulers: the Compute Scheduler (which places pods based on CPU/RAM) and the Storage provisioner (which, under the default Immediate binding mode, creates the disk before the Pod is scheduled, without knowing where that Pod will land). If they don’t coordinate, you end up with a Pod in Zone A and a Disk in Zone B.

The Rack2Cloud Diagnostic Series (Full Library)

- Part 1: ImagePullBackOff is a Lie (Identity)

- Part 2: The Scheduler is Stuck (Compute)

- Part 3: It’s Not DNS, It’s MTU (Network)

- Part 4: Storage Has Gravity (Storage)

- Strategy: The Rack2Cloud Method (This Article)

Additional Resources

- The Google SRE Book (Reliability): The definitive guide on Service Level Objectives (SLOs) and eliminating toil.

- CNCF Cloud Native Definition: The official philosophy behind immutable infrastructure and declarative APIs.

- Kubernetes Production Best Practices: A community-curated checklist for hardening clusters.

Editorial Integrity & Security Protocol

This technical deep-dive adheres to the Rack2Cloud Deterministic Integrity Standard. All benchmarks and security audits are derived from zero-trust validation protocols within our isolated lab environments. No vendor influence.

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.