The “Lift and Shift” Cost Trap: A Sysadmin’s Guide to FinOps and Avoiding Cloud Sticker Shock

Introduction: The “Lift and Shift” Trap

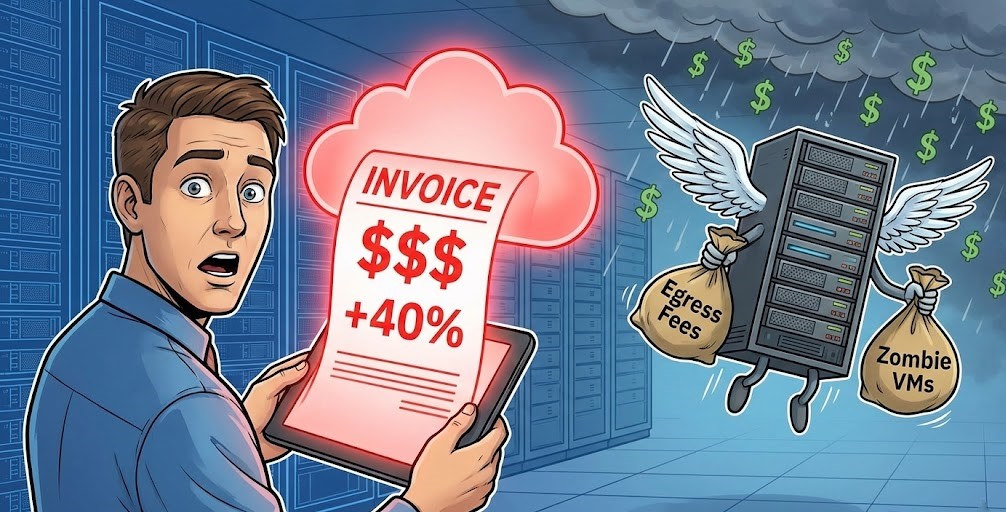

You’ve successfully migrated your first workload. The Terraform apply ran cleanly, the latency looks good, and the boss is happy. Then, 30 days later, the first bill arrives. It’s 40% higher than your estimate.

Welcome to the “Lift and Shift” trap.

For traditional sysadmins, “capacity” was a sunk cost. If you bought a Dell PowerEdge server with 1TB of RAM, it cost the same whether you used 1% or 99% of it. In the cloud, you are billed for whatever you provision for as long as it runs, so carrying that logic over is a financial death sentence.

This guide introduces FinOps—not as a buzzword for the finance team, but as a critical engineering skill. We will cover the silent killers of cloud budgets and how to architect for cost before you deploy.

What is FinOps? (It’s Not Just “Saving Money”)

FinOps (Financial Operations) is the practice of bringing financial accountability to the variable spend model of the cloud.

- Old Way: Finance approves a budget $\rightarrow$ IT buys hardware $\rightarrow$ Engineers deploy.

- Cloud Way: Engineers deploy $\rightarrow$ Finance gets a bill $\rightarrow$ Panic ensues.

FinOps bridges that gap. It divides the lifecycle into three phases: Inform (Visibility), Optimize (Efficiency), and Operate (Continuous Improvement).

The “Silent Killers” of Your First Cloud Bill

Most “sticker shock” comes from three specific engineering oversights:

1. Data Egress Fees (The Hidden Tax)

Ingress (putting data in) is usually free. Egress (taking data out) is billed per GB, and those charges add up quickly.

- The Mistake: Replicating backups from Cloud A to Cloud B without calculating the per-GB transfer fee.

- The Fix: Keep data processing in the same region as the storage. If you must move data, use a transfer appliance or dedicated connect circuits (like Direct Connect/ExpressRoute) for lower per-GB rates.
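To make the per-GB fee concrete, here is a minimal sketch of the arithmetic for a nightly cross-cloud backup. The two rates and the backup size are illustrative assumptions, not quoted prices; substitute the figures from your provider's current price list. Dedicated circuits also carry fixed port charges that this sketch does not model.

```python
def egress_cost_usd(gb_transferred: float, rate_per_gb: float) -> float:
    """Return the transfer charge for moving data out of a cloud region."""
    if gb_transferred < 0 or rate_per_gb < 0:
        raise ValueError("inputs must be non-negative")
    return gb_transferred * rate_per_gb


# Assumed, illustrative rates in USD per GB (check your provider's pricing page).
INTERNET_RATE = 0.09
DEDICATED_RATE = 0.02

# Hypothetical workload: a 500 GB backup replicated every night for 30 days.
nightly_backup_gb = 500
monthly_gb = nightly_backup_gb * 30

internet = egress_cost_usd(monthly_gb, INTERNET_RATE)
dedicated = egress_cost_usd(monthly_gb, DEDICATED_RATE)

print(f"Monthly transfer: {monthly_gb} GB")
print(f"Over the internet:        ${internet:,.2f}")
print(f"Over a dedicated circuit: ${dedicated:,.2f}")
```

Running the numbers before the replication job exists is the point: the fee scales linearly with volume, so a doubled backup size doubles the line item.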

2. Zombie Resources (Unattached EBS & IPs)

When you terminate an EC2 instance or VM, the storage volume (EBS/Managed Disk) and static IP often persist unless you explicitly flag them to delete on termination.

- The Cost: A 500GB SSD volume sitting unattached costs the same as one attached to a production database.

- The Fix: Implement “Tagging” policies immediately. If a resource lacks an `Owner` or `Project` tag, a script should flag it for deletion.
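A tag-policy check of this kind can be sketched as a pure function over a resource inventory. The inventory shape (`id` and `tags` keys), the function name `flag_for_review`, and the sample IDs are all hypothetical; in practice the list would come from your cloud provider's API or a billing export. The sketch only flags resources and leaves the actual deletion to a human.

```python
from typing import Iterable

# Tags every resource is expected to carry, per the policy described above.
REQUIRED_TAGS = ("Owner", "Project")


def flag_for_review(
    resources: Iterable[dict], required: tuple = REQUIRED_TAGS
) -> list[tuple[str, list[str]]]:
    """Return (resource_id, missing_tags) for each resource that is
    missing at least one required tag or has it set to an empty value."""
    flagged = []
    for res in resources:
        tags = res.get("tags") or {}
        missing = [t for t in required if not str(tags.get(t) or "").strip()]
        if missing:
            flagged.append((res["id"], missing))
    return flagged


# Hypothetical inventory; the IDs and tag values are made up for illustration.
inventory = [
    {"id": "vol-001", "tags": {"Owner": "dba-team", "Project": "billing"}},
    {"id": "vol-002", "tags": {"Owner": "alice"}},
    {"id": "eip-003", "tags": {}},
]

flagged = flag_for_review(inventory)
for resource_id, missing in flagged:
    print(f"{resource_id}: missing {', '.join(missing)}")
```

Flagging rather than deleting is a deliberate choice here: an untagged volume may still hold the only copy of someone's data.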

3. Over-Provisioning (The “Just in Case” Tax)

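On-prem, we provision for peak load plus a 20% buffer, because adding hardware later takes weeks. In the cloud, where you can resize in minutes and pay for every provisioned hour, this is wasteful.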

- The Fix: Right-sizing. Use CloudWatch or Azure Monitor to check actual CPU and RAM utilization (on AWS, memory metrics are not collected by default and require the CloudWatch agent). If your instance averages 10% CPU and its peaks leave headroom, cut the instance size in half. The principle is the same one you’d apply to on-prem sizing: understanding your workload’s actual needs is key. For a deeper look at sizing methodologies, check out our Hyper-V vs. Nutanix AHV Sizing Framework.
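The right-sizing rule above can be sketched as a small decision function over utilization samples. The thresholds are assumptions chosen for illustration: halving an instance roughly doubles its utilization percentage, so the sketch only recommends a downsize when the observed peak would still fit after doubling. The function name and sample data are hypothetical, and the samples would normally be exported from your monitoring tool.

```python
from statistics import mean


def rightsizing_hint(
    cpu_samples: list[float], low_avg: float = 10.0, peak_ceiling: float = 40.0
) -> str:
    """Suggest 'downsize' when average CPU is at or below low_avg percent
    AND the observed peak is at or below peak_ceiling percent; else 'keep'.

    Both thresholds are illustrative assumptions, not vendor guidance.
    """
    if not cpu_samples:
        raise ValueError("need at least one utilization sample")
    avg = mean(cpu_samples)
    peak = max(cpu_samples)
    if avg <= low_avg and peak <= peak_ceiling:
        return "downsize"
    return "keep"


# Hypothetical CPU percentages sampled over a billing period.
steady_idle = [8, 9, 12, 7, 11, 10, 9]
bursty = [2, 3, 2, 3, 60, 2, 3, 2, 3, 2]

print("steady_idle:", rightsizing_hint(steady_idle))
print("bursty:     ", rightsizing_hint(bursty))
```

The bursty case shows why the average alone is not enough: its mean is low, but a 60% spike on the current size would saturate an instance half as large.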

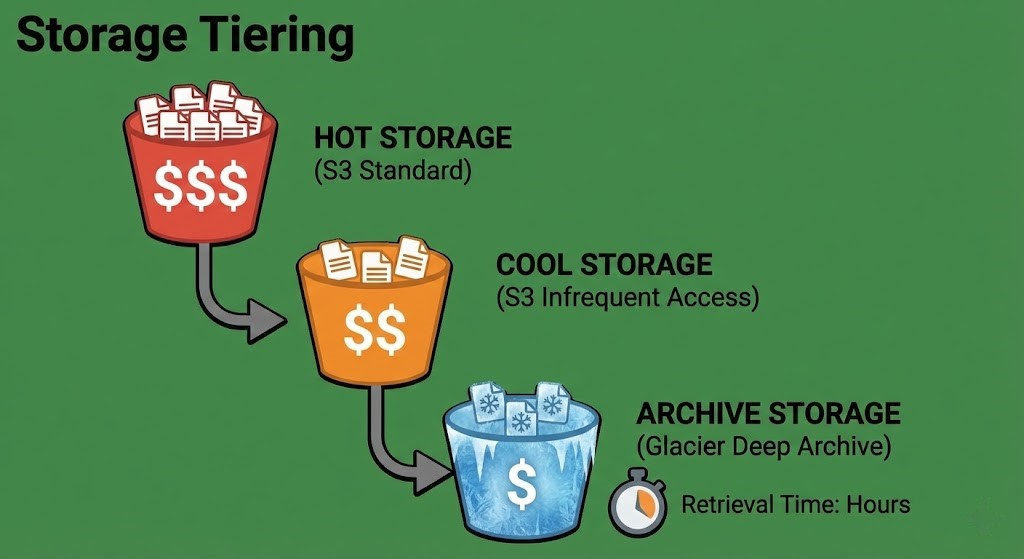

The Storage Tiering Opportunity

Perhaps the easiest “quick win” for engineers is storage optimization. Cloud storage isn’t just one bucket; it’s a ladder of tiers, from “Hot” (millisecond access) to “Deep Archive” (12+ hour retrieval).

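Moving 100TB of log data from S3 Standard to S3 Glacier Deep Archive can drop your monthly storage bill by over 90% without deleting a single byte. The trade-off is that archive tiers charge retrieval fees and enforce minimum storage durations, so this works best for data you rarely read.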
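As a rough check on that figure, the storage-only arithmetic can be sketched as follows. The per-GB-month prices are illustrative assumptions in the range of published list prices; they vary by region and change over time. Retrieval fees, request charges, and minimum-duration penalties are deliberately left out.

```python
# Assumed, illustrative prices in USD per GB-month (verify against current pricing).
STANDARD_PRICE = 0.023
DEEP_ARCHIVE_PRICE = 0.00099

GB_PER_TB = 1024


def monthly_storage_cost(tb_stored: float, price_per_gb_month: float) -> float:
    """Return the storage-only monthly cost for a given volume and tier price."""
    return tb_stored * GB_PER_TB * price_per_gb_month


log_data_tb = 100

hot_cost = monthly_storage_cost(log_data_tb, STANDARD_PRICE)
archive_cost = monthly_storage_cost(log_data_tb, DEEP_ARCHIVE_PRICE)
savings_pct = (1 - archive_cost / hot_cost) * 100

print(f"Standard tier:     ${hot_cost:,.2f}/month")
print(f"Deep archive tier: ${archive_cost:,.2f}/month")
print(f"Storage savings:   {savings_pct:.1f}%")
```

Under these assumed prices the storage line item falls by well over 90%, which matches the claim above; a full comparison would add the expected retrieval volume to the archive side.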

Conclusion: Cost is an Architecture Decision

In 2025, a cloud architect who can’t discuss costs is like a structural engineer who doesn’t understand material strengths. By adopting basic FinOps principles—tagging resources, right-sizing instances, and watching egress flows—you prevent the bill from becoming a surprise.

Your goal isn’t to spend zero; it’s to ensure every dollar spent returns value to the business. For those looking to transition their career and master these skills, our Cloud Engineer Roadmap 2025 provides a clear path forward.
