Beyond the Hyper-scaler: Why AI Inference is Moving to the Edge (and How to Architect It)

The ink is barely dry on the NVIDIA-Groq deal, and it confirms what many of us have suspected for the last eighteen months: The centralized cloud is struggling with inference.

Don’t get me wrong—I rely on the cloud for training. There is no substitute for spinning up a massive GPU cluster to process terabytes of data. However, asking a hyperscaler to handle real-time inference for thousands of devices is inefficient. It is like hiring a convoy of semi-trucks to deliver individual pizzas. It is expensive, it is overkill, and the delivery time will frustrate your customers.

If you are an Enterprise Architect planning for 2026, you need to stop treating the Edge as a collection of passive sensors. Instead, treat it as your primary compute layer. Here is how to make that decision and, more importantly, how to build it responsibly.

The Decision Framework: When to Leave the Cloud

We shouldn’t make architectural shifts based on hype. We must make them based on physics and economics.

If you are debating between hosting your model on AWS SageMaker versus deploying it to a local Kubernetes cluster, use this comparison guide.

| Factor | Stay in the Cloud (Hyperscaler) | Move to the Edge |
| --- | --- | --- |
| Data Gravity | Data originates in the cloud (e.g., web traffic, CRM logs). | Data originates on-premise (e.g., cameras, LIDAR, sensors). |
| Latency Needs | > 200 ms is acceptable (human interactions). | < 20 ms is mandatory (machine reactions). |
| Connectivity | High-bandwidth fiber is guaranteed. | Intermittent (maritime, oil & gas) or metered (5G/satellite). |
| Privacy | Data can be anonymized and moved. | Data must stay on-site (strict GDPR, HIPAA). |
| Updates | You retrain the model hourly. | You update the model weekly or monthly. |
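If you want to apply the table consistently across a portfolio of workloads, it can be encoded as a small checklist. The following is a minimal sketch, not a definitive rule: the `Workload` field names, the 3-of-5 voting threshold, and the two sample workloads are my own assumptions for illustration. Only the 20 ms cutoff and the weekly update cadence come from the table.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Answers to the five rows of the comparison table (field names are illustrative)."""
    data_originates_on_premise: bool  # cameras, LIDAR, sensors vs. web traffic, CRM logs
    required_latency_ms: float        # worst-case response time the use case tolerates
    connectivity_reliable: bool       # False for intermittent or metered links
    data_must_stay_on_site: bool      # strict data-residency requirement
    retrain_interval_hours: float     # how often a new model version ships


def edge_signals(w: Workload) -> list[str]:
    """Return the table rows that point toward the edge for this workload."""
    signals = []
    if w.data_originates_on_premise:
        signals.append("data gravity")
    if w.required_latency_ms < 20:            # "< 20 ms is mandatory"
        signals.append("latency")
    if not w.connectivity_reliable:
        signals.append("connectivity")
    if w.data_must_stay_on_site:
        signals.append("privacy")
    if w.retrain_interval_hours >= 24 * 7:    # weekly or slower
        signals.append("updates")
    return signals


def recommend(w: Workload) -> str:
    """Majority vote across the five rows; the 3-of-5 threshold is an assumption."""
    return "edge" if len(edge_signals(w)) >= 3 else "cloud"


# Two hypothetical workloads at opposite ends of the table.
factory_camera = Workload(True, 15, False, True, 24 * 30)
web_chatbot = Workload(False, 300, True, False, 1)

print(recommend(factory_camera), edge_signals(factory_camera))
print(recommend(web_chatbot), edge_signals(web_chatbot))
```

In practice a single row can be decisive on its own (a hard data-residency rule, for example), so treat the vote as a conversation starter rather than a verdict.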

Field Note: The biggest mistake I see teams make is underestimating the “Egress Tax.” I recently audited a manufacturing client streaming high-definition video to Azure for defect detection. They were spending $12,000/month on bandwidth to find just 3 defects. We moved the model to a local edge device (a $500 one-time cost), and their monthly operational costs dropped to near zero. Before you commit to a cloud-first strategy, consult our Field Guide to Cloud Egress and Data Gravity to understand the hidden costs of moving data.
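To size this cost for your own site before the invoice arrives, a back-of-the-envelope estimate is usually enough. The sketch below is a rough model under stated assumptions: the stream count, bitrate, and per-GB price are placeholders I made up for illustration, not the client's figures and not any provider's published rate.

```python
def monthly_upload_gb(streams: int, mbps_per_stream: float,
                      hours_per_day: float, days_per_month: int = 30) -> float:
    """Volume sent to the cloud per month, in decimal gigabytes."""
    seconds = hours_per_day * 3600 * days_per_month
    megabits = streams * mbps_per_stream * seconds
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


def monthly_transfer_cost(gb: float, usd_per_gb: float) -> float:
    """Bandwidth bill for that volume at a flat per-GB rate."""
    return gb * usd_per_gb


# Placeholder inputs, NOT real client or provider figures: replace with your own.
gb = monthly_upload_gb(streams=10, mbps_per_stream=4.0, hours_per_day=24)
print(f"{gb:,.0f} GB/month")
print(f"${monthly_transfer_cost(gb, usd_per_gb=0.05):,.2f}/month")
```

Real bills are rarely a flat rate (tiered pricing, metered carrier links, and storage charges all add up), so use this only to get the order of magnitude.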

The Financial Reality: CapEx vs. OpEx

This is the most important section to share with your finance leadership.

Let’s look at a real-world scenario: An Intelligent Retail store with 50 cameras analyzing foot traffic 12 hours a day.

Option A: Cloud Inference

- The Cost: You pay for bandwidth to upload 50 video streams. Then, you pay a “serverless” fee for every image processed.

- The Result: You will likely spend $15,000 – $20,000 per month. If your traffic spikes, your bill spikes.

Option B: Edge Inference

- The Cost (CapEx): You buy 5 Edge Servers with decent GPUs. This is a one-time purchase of roughly $10,000.

- The Cost (OpEx): You pay a small monthly fee for management software (like Nutanix or VMware Edge), roughly $500/month.

- The Result: At these figures, the hardware pays for itself in Month 1: $10,500 in total edge spend against at least $15,000 for the cloud. After that, inference costs only the $500 management fee plus electricity.
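The comparison between Option A and Option B is simple enough to hand to finance as a few lines of arithmetic. This sketch uses the dollar figures quoted above; the function names are mine, and the model deliberately ignores electricity, hardware refresh, and staff time, which you should add for a real business case.

```python
def cumulative_cloud(months: int, monthly_fee: float) -> float:
    """Total cloud spend after the given number of months."""
    return months * monthly_fee


def cumulative_edge(months: int, capex: float, monthly_opex: float) -> float:
    """Total edge spend: one-time hardware plus recurring management fee."""
    return capex + months * monthly_opex


def break_even_month(cloud_monthly: float, capex: float, edge_monthly: float,
                     horizon: int = 120):
    """First month where cumulative edge cost <= cumulative cloud cost, else None."""
    for m in range(1, horizon + 1):
        if cumulative_edge(m, capex, edge_monthly) <= cumulative_cloud(m, cloud_monthly):
            return m
    return None


CLOUD_LOW, CLOUD_HIGH = 15_000, 20_000  # USD/month, from Option A
EDGE_CAPEX, EDGE_OPEX = 10_000, 500     # USD, from Option B

print("break-even month:", break_even_month(CLOUD_LOW, EDGE_CAPEX, EDGE_OPEX))
for m in (1, 12, 36):
    print(m, cumulative_cloud(m, CLOUD_LOW), cumulative_edge(m, EDGE_CAPEX, EDGE_OPEX))
```

Swapping in your own quotes takes seconds, and `break_even_month` returns `None` when the edge never catches up within the horizon, which is the signal to stay in the cloud.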

Architect’s Warning: While serverless seems cheaper initially, there is always a tipping point where convenience becomes a liability. Run your numbers through our Low-Code vs Serverless: Refactoring Cliff Calculator to see exactly when you should switch from Pay-As-You-Go to fixed infrastructure.

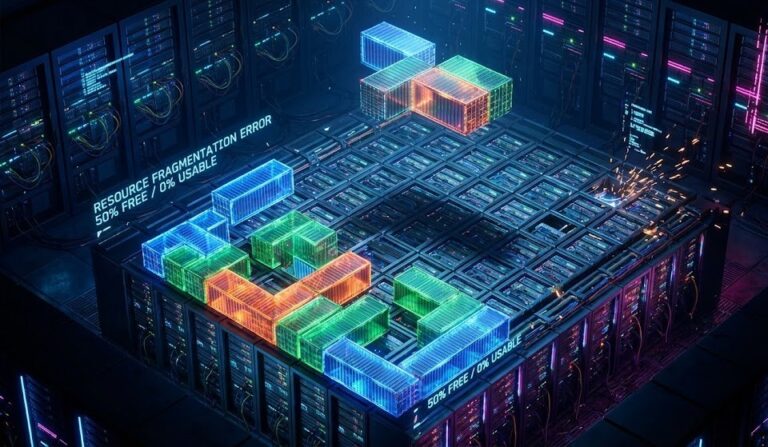

Architecture Pattern: The “Split-Brain” Pipeline

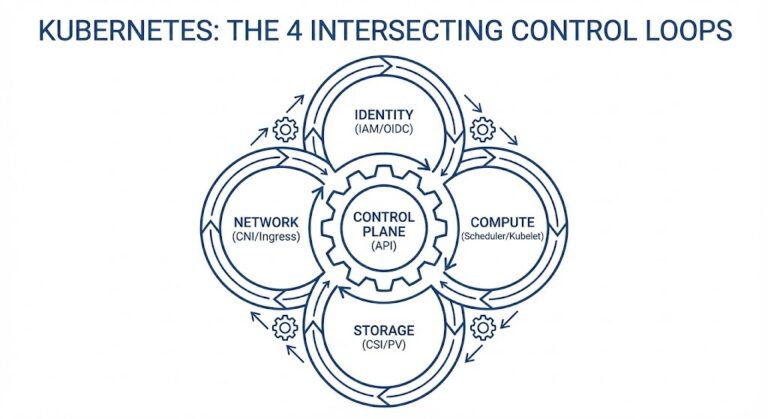

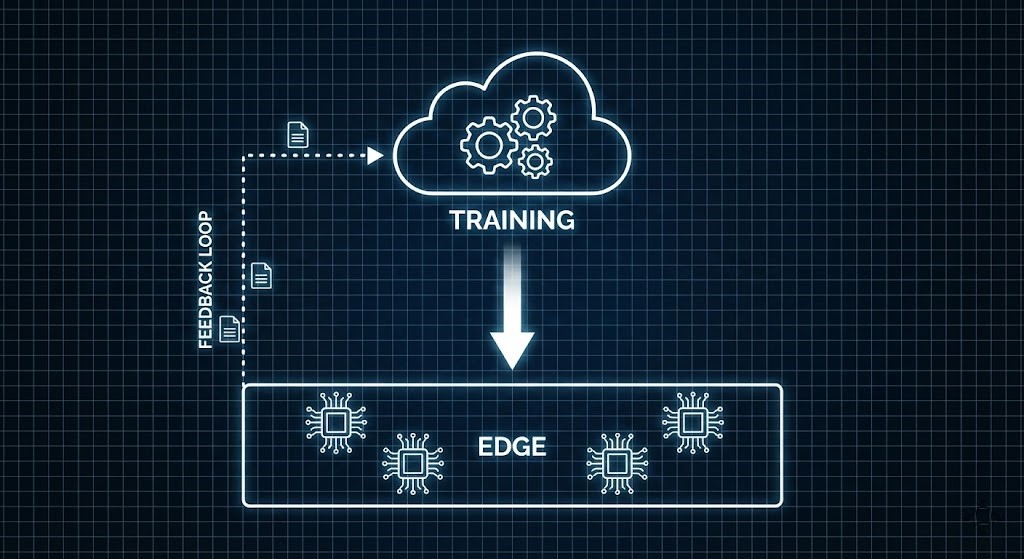

You do not need to abandon the cloud completely. You just need to specialize how you use it. The most robust architecture I have deployed splits the work between cloud and edge, then connects the two in a “Loop”:

- Core (Cloud): This is the training ground. Your massive datasets live here. You use high-power compute to build your models.

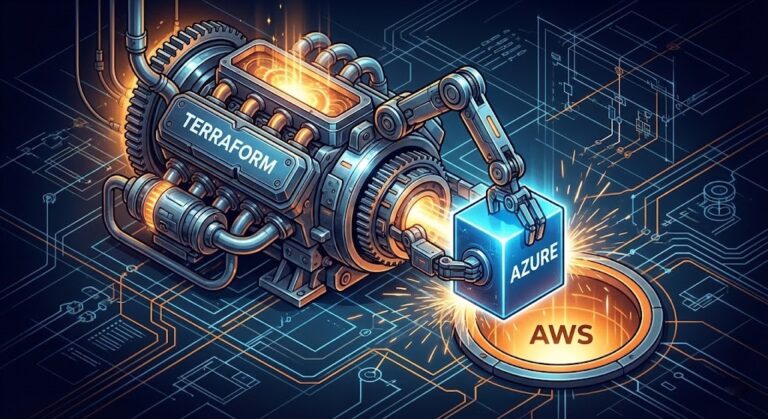

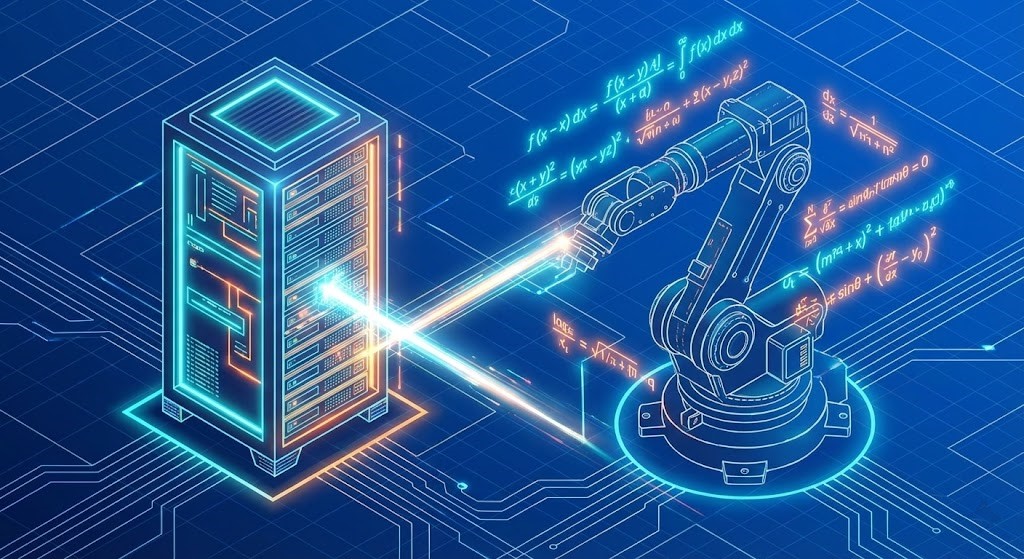

- The Bridge (CI/CD): Once a model is ready, we shrink it (quantization) and package it into a container (Docker). This often involves moving data between object storage and block storage at the edge—a process detailed in our Storage Learning Path.

- Edge (Inference): The container is pushed to the edge devices. The analysis happens locally.

- The Feedback Loop: We only upload “interesting” data—like low-confidence predictions or anomalies—back to the cloud to improve the model.
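The Feedback Loop step is where most of the bandwidth savings come from, so it is worth making concrete. Here is a minimal sketch of the filter that decides what counts as “interesting.” The `Prediction` type, the 0.6 confidence threshold, and the `"unknown"` anomaly label are assumptions for illustration; tune them to your own model.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    frame_id: str
    label: str
    confidence: float  # model's top-class probability, 0.0 to 1.0


def select_for_upload(preds, min_confidence=0.6,
                      anomaly_labels=frozenset({"unknown"})):
    """Keep only low-confidence or anomalous predictions for upload to the cloud."""
    return [p for p in preds
            if p.confidence < min_confidence or p.label in anomaly_labels]


# Hypothetical output from one batch of frames on an edge device.
batch = [
    Prediction("f1", "ok", 0.98),
    Prediction("f2", "defect", 0.41),   # low confidence: worth a human look
    Prediction("f3", "unknown", 0.90),  # anomaly label: always upload
    Prediction("f4", "ok", 0.75),
]

to_upload = select_for_upload(batch)
print([p.frame_id for p in to_upload])
```

Everything that fails the filter stays on the device, which is what keeps the uplink quiet while still feeding the training set in the Core.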

The Tech Stack Visualization:

| Layer | Technology Examples | Function |
| --- | --- | --- |
| Orchestration | K3s, Azure Arc, AWS Greengrass | Manages the software on the device. |
| Runtime | ONNX Runtime, TensorRT | Runs the AI model efficiently. |
| Compute | NVIDIA Jetson, Coral TPU, Groq LPU | Hardware that accelerates the math. |
| Messaging | MQTT, NATS.io | Lightweight communication back to the cloud. |
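A large part of what lets this stack fit on small devices is the quantization step from the Bridge stage: storing weights as 8-bit integers instead of 32-bit floats cuts their size by roughly 4x. The sketch below shows only the underlying arithmetic, using symmetric per-tensor int8 quantization in plain Python. It is an illustration I have added, not the pipeline described in this article; in a real build you would rely on the quantization tooling of your chosen runtime.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the integers."""
    return [v * scale for v in q]


# Hypothetical weights from a single layer.
weights = [0.50, -1.27, 0.031, 0.0, 1.0]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("scale:", scale)
print("worst-case rounding error:", max_error)
```

The rounding error per weight is bounded by half the scale, which is why quantized models usually lose little accuracy. Validate against your own test set before shipping, because some layers are more sensitive than others.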

Editorial Integrity & Security Protocol

This technical deep-dive adheres to the Rack2Cloud Deterministic Integrity Standard. All benchmarks and security audits are derived from zero-trust validation protocols within our isolated lab environments. No vendor influence.

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.