Architecting for Density: Why Your Choice of Container Runtime Limits Your Scale

The “Shim Tax” is Killing Your ROI

If you are running standard Kubernetes clusters on top of VMware or Cloud VMs, you are paying a hidden tax on every single pod you launch. It’s called the Shim Tax.

When you run 1,000 containers using the standard runc runtime (written in Go), you are also running up to 1,000 long-lived “Shim” processes to babysit them (containerd keeps roughly one containerd-shim per pod). Each shim consumes memory. In high-density environments, I’ve seen this overhead eat 2GB to 4GB of RAM per node just to keep the lights on.

That is RAM you are paying for but can’t use for your application. In a 100-node cluster, that’s 200GB to 400GB of wasted memory, or up to about $15,000/year in cloud costs purely for “overhead.”
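The arithmetic behind that estimate can be sketched in a few lines of Python. The price per GB-month below is an assumption, back-solved from the figures above ($15,000/year for 400GB); it is not a quoted cloud rate, so substitute your provider's real number.

```python
# Back-of-the-envelope cost of per-node runtime overhead.
# PRICE_PER_GB_MONTH is an assumption back-solved from the article's
# figures (400 GB ~ $15,000/year); replace it with your provider's rate.
PRICE_PER_GB_MONTH = 3.125


def wasted_memory_gb(nodes: int, overhead_gb_per_node: float) -> float:
    """Total RAM consumed by runtime overhead across the cluster."""
    return nodes * overhead_gb_per_node


def yearly_cost_usd(wasted_gb: float,
                    price_per_gb_month: float = PRICE_PER_GB_MONTH) -> float:
    """Annual spend on RAM that never reaches the application."""
    return wasted_gb * price_per_gb_month * 12


if __name__ == "__main__":
    # Low and high ends of the 2GB to 4GB per-node range, 100 nodes.
    for overhead in (2.0, 4.0):
        gb = wasted_memory_gb(100, overhead)
        cost = yearly_cost_usd(gb)
        print(f"{overhead:.0f} GB/node -> {gb:.0f} GB wasted, ~${cost:,.0f}/year")
```

Running both ends of the range makes it clear that the headline figure is the worst case, not the average.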

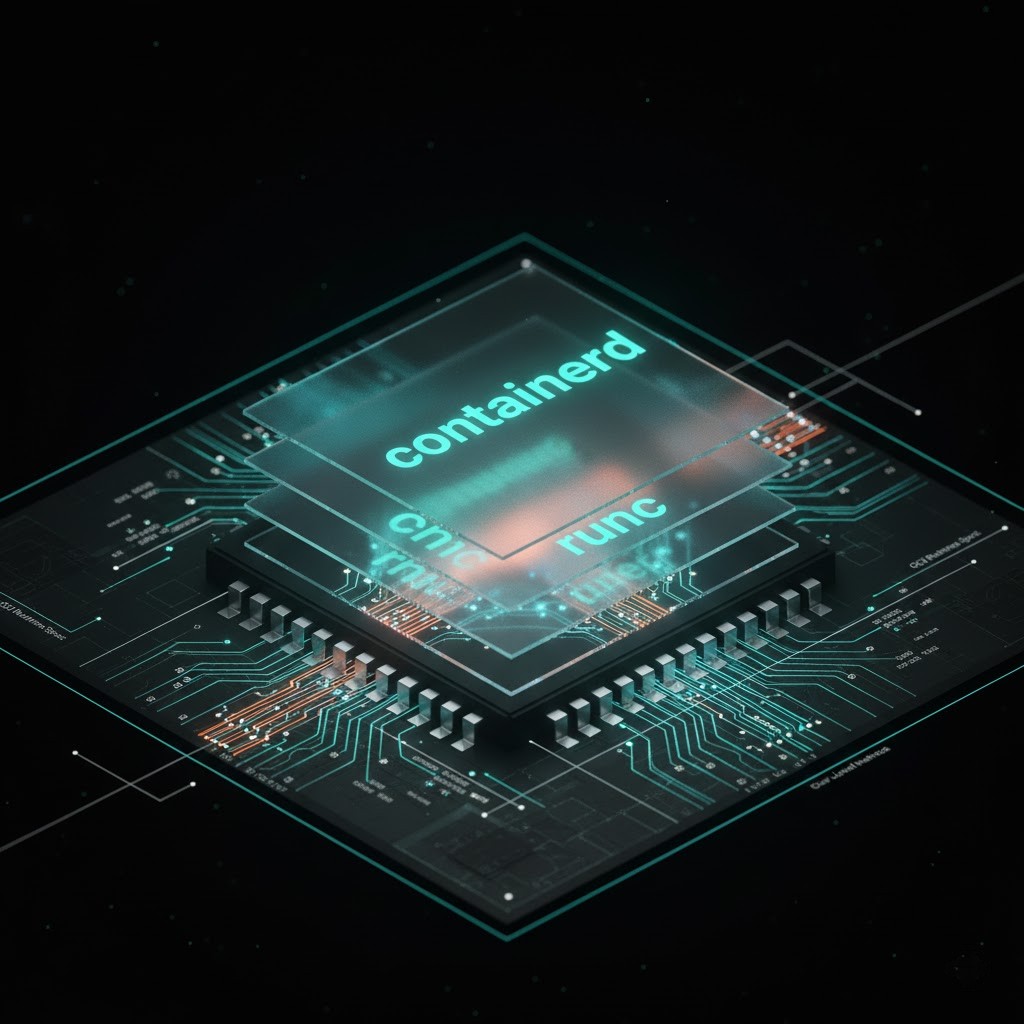

The Architecture: High-Level vs. Low-Level

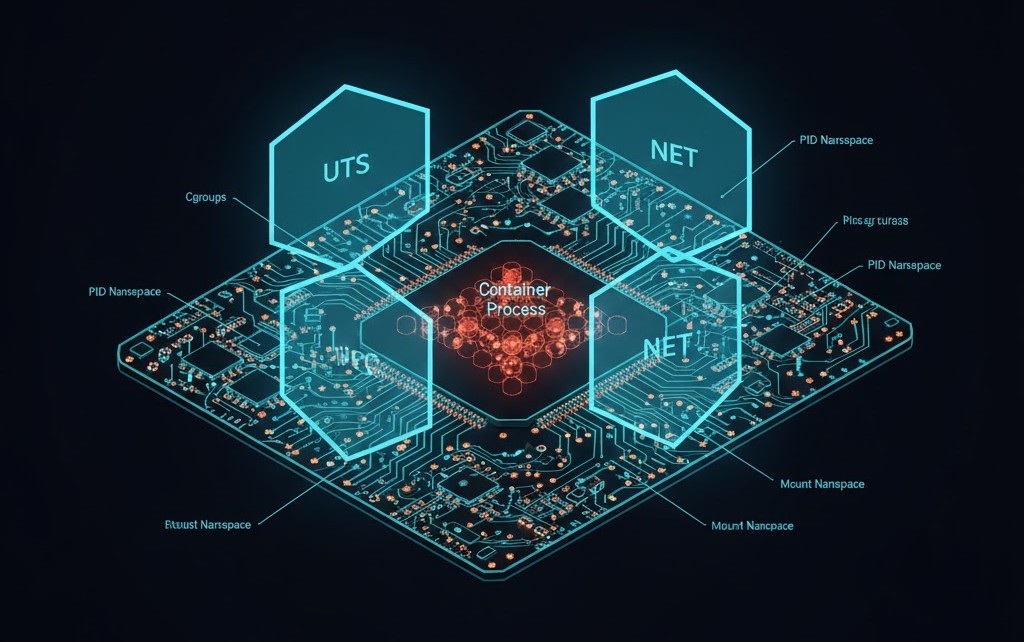

To fix this, you need to understand the plumbing. The Kubernetes runtime has two layers:

- The Manager (High-Level):
  - containerd or CRI-O.
  - This daemon pulls images and sets up networking. It’s the “General.”

- The Executor (Low-Level):
  - runc (Standard, written in Go).
  - crun (Optimized, written in C).
  - This process actually talks to the Linux Kernel to create namespaces. It’s the “Soldier.”

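The Problem: runc is the default, but it is heavy. Every invocation starts a Go runtime, and containerd’s shim, also written in Go, stays resident for the life of the pod.

The Fix: Switch to crun. It is written in C, so it has no garbage collector or language runtime to initialize, and it starts faster with a smaller memory footprint. Paired with CRI-O, the per-container monitor (conmon) is written in C as well.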

The Financial Math: runc vs. crun

In 2026, with VMware’s mandatory per-core licensing, density is the only metric that matters. If you can fit 50% more pods on a node, you avoid buying the next license tier.
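To make the density argument concrete, here is a minimal sketch of how pods per node translates into node count and licensed cores. The 40 and 60 pods-per-node figures are the ones used in the action plan at the end of this article; the workload size and cores per host are hypothetical placeholders, and real license tiers will depend on your contract.

```python
import math


def nodes_needed(total_pods: int, pods_per_node: int) -> int:
    """Smallest number of nodes that can hold total_pods."""
    return math.ceil(total_pods / pods_per_node)


def licensed_cores(nodes: int, cores_per_node: int) -> int:
    """Cores that fall under per-core licensing for a given node count."""
    return nodes * cores_per_node


if __name__ == "__main__":
    total_pods = 2400      # hypothetical workload size
    cores_per_node = 32    # hypothetical host size

    for density in (40, 60):
        nodes = nodes_needed(total_pods, density)
        cores = licensed_cores(nodes, cores_per_node)
        print(f"{density} pods/node -> {nodes} nodes, {cores} licensed cores")
```

With these placeholder inputs, a 50% density gain removes a third of the nodes, which is where the licensing savings come from.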

| Metric | Legacy (runc + Docker) | Modern (crun + CRI-O) |
| --- | --- | --- |
| Language | Go (Memory Heavy) | C (Lightweight) |
| Per-Pod Overhead | ~15MB – 35MB | ~1MB – 2MB |
| Overhead at 100 Pods | ~1.5GB – 3.5GB RAM | ~0.1GB – 0.2GB RAM |
| Cold Start Time | ~150ms | ~5ms |
| VMware Impact | Hits RAM limit early | Maximizes Core Usage |

The Result: At around 100 pods per node, switching to crun allows you to reclaim up to ~3GB of RAM per node. That is enough space to run 20 more pods per node without buying a single extra CPU license.
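The numbers in the table and the result above can be reproduced with a short sketch. The 150MB pod footprint is an assumption, back-solved from "~3GB buys 20 more pods"; your own pods' memory requests will give a different answer.

```python
def overhead_mb(pods: int, per_pod_mb: int) -> int:
    """Runtime overhead for a node, in MB."""
    return pods * per_pod_mb


def extra_pods(reclaimed_mb: int, pod_footprint_mb: int) -> int:
    """Whole pods that fit into the reclaimed memory."""
    return reclaimed_mb // pod_footprint_mb


if __name__ == "__main__":
    pods = 100
    # Upper ends of the per-pod ranges from the table.
    legacy = overhead_mb(pods, 35)   # runc + Docker
    modern = overhead_mb(pods, 2)    # crun + CRI-O
    reclaimed = legacy - modern

    # Assumed average pod footprint (hypothetical, see lead-in).
    pod_footprint_mb = 150

    print(f"legacy overhead:  {legacy} MB")
    print(f"modern overhead:  {modern} MB")
    print(f"reclaimed:        {reclaimed} MB")
    print(f"extra pods/node:  {extra_pods(reclaimed, pod_footprint_mb)}")
```

Working in whole megabytes keeps the arithmetic exact and avoids floating-point rounding in the floor division.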

War Story: The “Zombie” Node

I once consulted for a Fintech firm seeing massive node instability at scale. They were using a legacy Docker setup where the daemon had become a Single Point of Failure.

When the Docker daemon crashed (which it did under load), it effectively took every container on the node with it. The processes were technically still running, but the kubelet lost track of them and could no longer manage them. We called them “Zombie Nodes.”

The Fix: We migrated them to CRI-O + crun. By decoupling the management layer (CRI-O) from the execution layer (crun), we removed the SPOF. Now, if the management daemon restarts, the containers keep humming along. That is the difference between an amateur setup and a resilient architecture.

Engineer’s Action Plan

- Audit Your Overhead: Run `top` on a worker node. If you see hundreds of `containerd-shim` processes eating 20MB each, you have a density problem.

- Switch the Runtime: Configure your container manager (containerd or CRI-O) to use crun as its OCI runtime. The kubelet only talks to that daemon over the CRI, so the low-level runtime is selected there. crun is fully OCI compliant and a drop-in replacement for `runc`.

- Recalculate Licensing: Use our VMware Core Calculator to see how much you save by increasing pod density from 40 to 60 per node.
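For the audit step, eyeballing `top` gets tedious on a busy node. A minimal sketch that tallies shim memory from `ps` output follows. It assumes a Linux node with procps `ps`, and that the shim shows up under the process name `containerd-shim` (or `conmon` on CRI-O); the function name and the prefix list are illustrative, not part of any tool. RSS counts shared pages once per process, so treat the total as an upper bound.

```python
import subprocess
from collections import defaultdict

# Process names to tally. The kernel's short process name is limited to
# 15 characters, so containerd's shim typically appears as "containerd-shim".
# "conmon" is CRI-O's monitor. Adjust for your own setup.
MONITOR_PREFIXES = ("containerd-shim", "conmon")


def summarize_rss(ps_output: str, prefixes=MONITOR_PREFIXES) -> dict:
    """Return {prefix: (process_count, total_rss_mib)} from 'rss comm' lines."""
    counts = defaultdict(int)
    rss_kib = defaultdict(int)
    for line in ps_output.splitlines():
        parts = line.split(None, 1)
        if len(parts) != 2 or not parts[0].isdigit():
            continue  # skip blank or malformed lines
        rss, comm = int(parts[0]), parts[1].strip()
        for prefix in prefixes:
            if comm.startswith(prefix):
                counts[prefix] += 1
                rss_kib[prefix] += rss  # ps reports RSS in KiB
                break
    return {p: (counts[p], rss_kib[p] / 1024) for p in counts}


if __name__ == "__main__":
    # Separate -o options with empty headers give header-free, two-column output.
    out = subprocess.run(
        ["ps", "-e", "-o", "rss=", "-o", "comm="],
        capture_output=True, text=True, check=True,
    ).stdout
    for name, (count, mib) in summarize_rss(out).items():
        print(f"{name}: {count} processes, {mib:.1f} MiB RSS total")
```

Run it on a worker node before and after the runtime switch to compare the totals against the per-pod figures quoted earlier.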

Stop paying for the container wrapper. Pay for the application inside it.

Additional Resources:

- The OCI Runtime Spec – Deep dive into the standards.

- Cgroup v2 Documentation – Understanding resource isolation.

- Project Atomic: The Origin of CRI-O – Context on why a lean K8s runtime was built.
