Why Serverless Isn’t Dead for GenAI — It’s Just Misunderstood

Debunking the myth that AWS Lambda can’t power real GenAI workloads by redefining the boundary between the “Brain” and the “Nerves.”

Busting that myth means redrawing a single boundary.

Not a boundary of technology, but of anatomy.

The line between the Brain and the Nerves.

I recently ignited a firestorm on Reddit with a post titled “Serverless is Dead for GenAI” (you may have seen the discussion go viral).

The reaction was immediate and polarized. Half the comments dismissed Lambda as a toy; the other half claimed I was using it incorrectly. But as the dust settled on that thread, I realized something important. We weren’t arguing about technology; we were arguing about context.

I wrote that post because I was watching teams—including my own—burn $12,000 a month on idle GPU clusters. We were building “AI Agents” that spent 90% of their time waiting for API responses, yet we provisioned heavy iron as if they were training a model 24/7.

We swung the pendulum from “Serverless First” to “Heavy Iron Only” based on the fear that Lambda couldn’t handle the load. That fear stems from a fundamental misunderstanding of Compute Physics and where the processing actually happens in a modern AI stack.

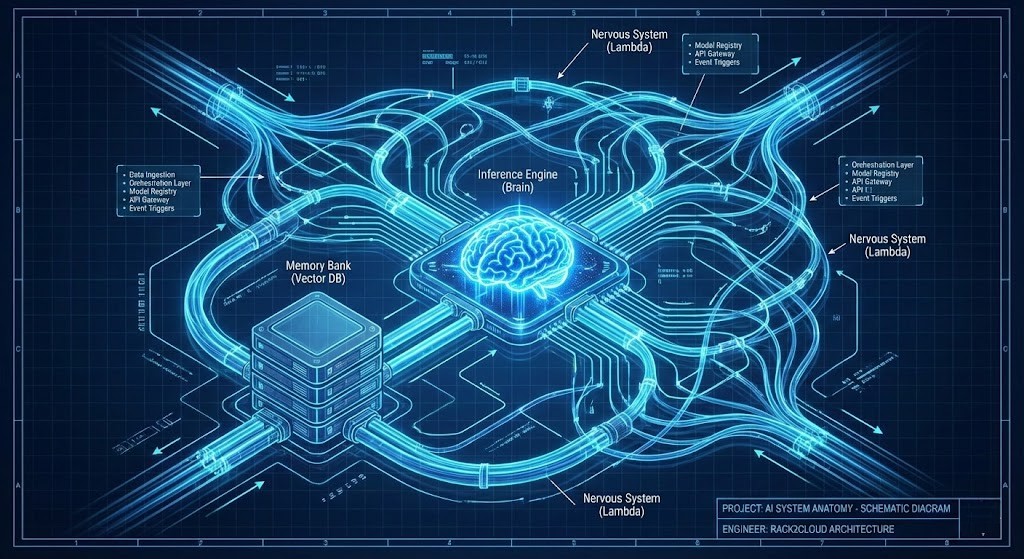

The Anatomy of Modern AI: Brain vs. Nerves

To build cost-effective AI at scale, we must decouple the components. We need to treat the architecture like biological anatomy.

1. The Brain (Inference)

- Role: Heavy lifting, stateful, expensive.

- Tech: SageMaker, Bedrock, persistent ECS clusters.

- Reference: See our AI Architecture Path for handling LLM Ops.

2. The Memory (Context)

- Role: Where state lives.

- Tech: Vector Databases (Pinecone, OpenSearch), Redis.

3. The Nervous System (Orchestration)

- Role: Senses, routes, decides, triggers.

- Tech: AWS Lambda.
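To make the Nervous System idea concrete, here is a minimal sketch of a Lambda-style handler that senses (validates input), routes (fetches context) and triggers (delegates to the model) without doing any thinking itself. It assumes an API Gateway proxy-style event with a JSON `body`. The `brain` and `memory` callables are hypothetical stand-ins for a Bedrock or SageMaker client and a vector-store query; they are injected so the handler runs and tests without AWS.

```python
import json
from typing import Callable, List

# Hypothetical interfaces for the other two tiers. In production, Brain would wrap a
# Bedrock or SageMaker call and Memory a vector-store query; here they are injected.
Brain = Callable[[str, List[str]], str]
Memory = Callable[[str], List[str]]


def make_handler(brain: Brain, memory: Memory):
    """Build a Lambda-style handler(event, context) that routes work but never 'thinks'."""

    def handler(event, context=None):
        body = json.loads(event.get("body") or "{}")
        question = str(body.get("question", "")).strip()

        # Sense: reject bad input cheaply, before any model time is spent.
        if not question:
            return {"statusCode": 400, "body": json.dumps({"error": "missing question"})}

        # Route: pull supporting context from Memory...
        chunks = memory(question)
        # ...then trigger the Brain and relay its answer unchanged.
        answer = brain(question, chunks)
        return {"statusCode": 200, "body": json.dumps({"answer": answer})}

    return handler


if __name__ == "__main__":
    # Stand-in tiers so the sketch runs locally without AWS credentials.
    handler = make_handler(
        brain=lambda q, ctx: f"answer to {q!r} using {len(ctx)} chunks",
        memory=lambda q: ["chunk-1", "chunk-2"],
    )
    print(handler({"body": json.dumps({"question": "What is our SLA?"})}))
```

Keeping the model and the vector store behind plain callables is the point of the design: the handler stays small, cheap and stateless, and swapping the Brain never touches the Nerves.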

The mistake isn’t using Lambda; the mistake is trying to make the Nervous System do the thinking.

Deep Dive: If you want to explore the specific Step Functions patterns that make this “Nervous System” possible, we break down the code in our companion piece: AWS Lambda GenAI Architecture Guide.
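The companion guide covers the author's own patterns; the sketch below is not taken from it. It is an assumed, minimal Amazon States Language definition, built as a Python dict, that chains three Lambda tasks (sanitize, infer, format) through the `arn:aws:states:::lambda:invoke` integration with retries on transient Lambda errors. The function names and ARNs are hypothetical placeholders using AWS's documentation account ID.

```python
import json
from typing import Optional

# Hypothetical function ARNs; substitute your own.
SANITIZE_FN = "arn:aws:lambda:us-east-1:123456789012:function:sanitize-input"
INFER_FN = "arn:aws:lambda:us-east-1:123456789012:function:call-model"
FORMAT_FN = "arn:aws:lambda:us-east-1:123456789012:function:repair-json"

# Retry transient Lambda errors with exponential backoff (2s, 4s, 8s).
LAMBDA_RETRY = [{
    "ErrorEquals": ["Lambda.ServiceException", "Lambda.TooManyRequestsException"],
    "IntervalSeconds": 2,
    "MaxAttempts": 3,
    "BackoffRate": 2.0,
}]


def lambda_task(function_arn: str, next_state: Optional[str]) -> dict:
    """One Task state that invokes a Lambda function via the optimized integration."""
    state = {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke",
        "Parameters": {"FunctionName": function_arn, "Payload.$": "$"},
        "OutputPath": "$.Payload",  # pass on only the function's return value
        "Retry": LAMBDA_RETRY,
    }
    if next_state:
        state["Next"] = next_state
    else:
        state["End"] = True
    return state


STATE_MACHINE = {
    "Comment": "Nerves: sanitize, then infer, then post-process",
    "StartAt": "Sanitize",
    "States": {
        "Sanitize": lambda_task(SANITIZE_FN, "Infer"),
        "Infer": lambda_task(INFER_FN, "Format"),
        "Format": lambda_task(FORMAT_FN, None),
    },
}

if __name__ == "__main__":
    # Paste the printed JSON into the Step Functions console or your IaC tool.
    print(json.dumps(STATE_MACHINE, indent=2))
```

Step Functions also offers a direct Bedrock integration, which avoids paying for a Lambda function to sit idle while the model responds; check the Step Functions documentation for its parameters before swapping it in for the `Infer` task.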

Decision Matrix: The Architect’s Boundary

This is the boundary I enforce in production. No guesswork.

| Workload Characteristic | Recommended Compute | The Strategic “Why” |
| --- | --- | --- |
| Agent Orchestration | AWS Lambda | Agents are reactive. Paying for idle containers while an agent waits for a user trigger is OpEx waste. |
| Pre/Post Processing | AWS Lambda | Sanitization (PII masking) and formatting (JSON repair) are CPU tasks. Don’t waste GPU cycles on regex. |
| Long Context Caching | ECS / EKS | If you need to keep 10GB of context hot in RAM for immediate access, Lambda is the wrong tool: memory tops out at 10,240 MB and execution environments are recycled, so the cache cannot be relied on. |
| Heavy Inference | SageMaker / EC2 | You need raw metal and CUDA cores. Lambda has no GPUs, and its layers cannot support multi-gigabyte dependencies efficiently. |
| WebSockets / Streaming | ECS / Fargate | While Lambda can do WebSockets, maintaining connection state for long streams is often cheaper in a lightweight container. |
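The Pre/Post Processing row is the easiest to picture in code. A minimal sketch, assuming regex-based masking is acceptable for your data: `mask_pii` and `repair_json` are hypothetical helper names, and two regexes are nowhere near complete PII coverage. Both run comfortably on plain CPU.

```python
import json
import re

# Illustrative patterns only: production PII detection needs far more than two
# regexes (a managed option is Amazon Comprehend's PII entity detection).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_PHONE_RE = re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b")


def mask_pii(text: str) -> str:
    """Pre-processing: replace emails and US-style phone numbers before the prompt leaves."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return US_PHONE_RE.sub("[PHONE]", text)


def repair_json(raw: str) -> dict:
    """Post-processing: extract the outermost {...} from a model reply and parse it."""
    # Models often wrap JSON in chatty prose or markdown fences; keep only the braces.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model output")
    candidate = raw[start : end + 1]
    # Naively drop trailing commas before a closing brace/bracket, a common LLM slip.
    # (This would also alter a string literal that happens to contain ", }".)
    candidate = re.sub(r",\s*([}\]])", r"\1", candidate)
    return json.loads(candidate)


if __name__ == "__main__":
    print(mask_pii("Contact bob@example.com or 555-123-4567"))
    print(repair_json('Sure! Here you go: {"status": "ok", "items": [1, 2,],} Done.'))
```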

The Financial Reality: CapEx vs. OpEx

Here is where the argument usually ends for my clients: Cost.

If you run a containerized agent fleet, you are paying for capacity availability. If you run a serverless orchestration layer, you are paying for capacity utilization.
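One rough way to see the availability/utilization split is to price both models side by side. This sketch assumes a flat hourly rate per container or GPU instance (with AWS's usual 730-hour month) and Lambda's billing structure of GB-seconds of duration plus a per-request fee. Every price is an input you should take from current AWS pricing; none are figures from this article. One caveat: Lambda bills wall-clock duration, including time spent waiting on a model API, so the saving comes from paying nothing between requests, not from free waiting.

```python
HOURS_PER_MONTH = 730.0  # AWS's conventional average month


def provisioned_monthly_cost(hourly_rate: float, instances: int,
                             hours: float = HOURS_PER_MONTH) -> float:
    """Paying for availability: every instance bills every hour, busy or idle."""
    return hourly_rate * instances * hours


def per_use_monthly_cost(invocations: int, avg_seconds: float, memory_gb: float,
                         price_per_gb_second: float,
                         price_per_million_requests: float) -> float:
    """Paying for utilization: billed only for invocations and the compute they consume."""
    compute = invocations * avg_seconds * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests
```

The provisioned figure stays flat as traffic drops; the per-use figure falls with it. That asymmetry is why idle-heavy agent workloads favor the serverless side.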

In my own “bad old days” of that $12,000/mo GPU cluster, we eventually refactored the orchestration logic out of the cluster and into Step Functions + Lambda. All that remained for heavy compute was the raw inference calls, which we served through Bedrock.

- Previous Bill: $12,000/mo (mostly idle GPU time).

- New Bill: $4,500/mo (Bedrock tokens + minimal Lambda costs).
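The arithmetic behind those two figures:

```latex
\text{Savings} = \$12{,}000 - \$4{,}500 = \$7{,}500 \text{ per month},
\qquad
\frac{7{,}500}{12{,}000} = 62.5\%
```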

The savings didn’t come from cheaper models — they came from eliminating idle capacity. This aligns directly with the “Cloud Economics & Cost Physics” module in our Cloud Architecture Learning Path.

A Note on Scaling Costs

However, there is a ceiling. If your transaction volume is massive (millions of inference calls a day), public cloud inference can become prohibitively expensive. Past that point, owning the hardware (CapEx) can beat cloud OpEx.
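A simple payback model shows where that threshold sits. This is an assumed, deliberately naive formula, not the method behind the Cloud Repatriation ROI Estimator: it divides the upfront hardware cost by the monthly gap between the cloud bill and on-prem running costs (power, space, staff), and ignores depreciation, hardware refresh, and financing. The function name is hypothetical.

```python
import math


def capex_break_even_months(hardware_capex: float, onprem_monthly_opex: float,
                            cloud_monthly_cost: float) -> float:
    """Months until owned hardware beats cloud inference; math.inf if it never does."""
    monthly_delta = cloud_monthly_cost - onprem_monthly_opex
    if monthly_delta <= 0:
        # On-prem running costs alone match or exceed the cloud bill.
        return math.inf
    return hardware_capex / monthly_delta
```

If the result is shorter than the hardware's useful life, you have likely crossed the threshold; if it is longer, the cloud is still the cheaper option.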

If you are staring at a cloud bill that seems to be spiraling, it might be time to check if you’ve crossed that threshold. We use our Cloud Repatriation ROI Estimator to calculate exactly when the “Cloud Tax” exceeds the cost of owning the hardware.

The Hidden Cost: The “Refactoring Cliff”

This is where many serverless discussions break down: not cost, but complexity.

The pro-container crowd in my Reddit thread did have one valid point. Writing a monolithic Python script that runs in a container is easy. Breaking that script into 15 discrete, event-driven Lambda functions coordinated by a state machine is hard. It requires a different level of engineering maturity.

You have to ask yourself: are the OpEx savings of serverless worth the engineering hours required to refactor the application?

Before you commit to a full refactor, I recommend modeling the break-even point. We built the Refactoring Cliff Calculator for this exact scenario—to help you decide if the effort of refactoring pays off against the technical debt and operational cost of maintaining the monolith.
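A back-of-the-envelope version of that break-even, assuming a one-off refactor cost (hours times a blended hourly rate) that is repaid by monthly OpEx savings plus any monolith upkeep you stop paying. This is a hypothetical sketch, not the Refactoring Cliff Calculator's model.

```python
import math


def refactor_payback_months(engineering_hours: float, hourly_rate: float,
                            monthly_opex_savings: float,
                            monthly_upkeep_savings: float = 0.0) -> float:
    """Months of savings needed to repay a one-off refactor; math.inf if never."""
    monthly_gain = monthly_opex_savings + monthly_upkeep_savings
    if monthly_gain <= 0:
        return math.inf  # nothing is saved, so the refactor never pays back
    return engineering_hours * hourly_rate / monthly_gain
```

For example, a 600-hour refactor at $150/hour set against the $7,500/month saving from the case study above pays back in 12 months; the hours and rate are illustrative, not measured.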

Conclusion: Don’t Be a Purist

The future of GenAI architecture isn’t Serverless OR Containers. It is Serverless AND Containers, deliberately composed.

If you tell me you are “ditching Serverless” because it can’t run Llama-3-70B, I assume you don’t understand distributed systems.

Use GPUs for thinking. Use databases for memory. And if you care about your OpEx budget, use Lambda for the nerves.

Editorial Integrity & Security Protocol

This technical deep-dive adheres to the Rack2Cloud Deterministic Integrity Standard. All benchmarks and security audits are derived from zero-trust validation protocols within our isolated lab environments. No vendor influence.
