The Hangover After the Boom: Why AI Is Forcing an On-Prem Infrastructure Reckoning


For a decade, “Cloud First” wasn’t just a strategy; it was dogma. If you weren’t aiming for 100% public cloud, you were viewed as “legacy.” Buying servers felt retro. Then came the Generative AI boom, and with it, a harsh physical and economic reality check.

As we settle into 2026, enterprises are facing an “AI Infrastructure Reckoning.” CIOs and Architects are realizing that the architecture designed for a successful 3-month Proof of Concept (POC) becomes economically and operationally lethal at production scale—because inference is always-on, data is immovable, and GPUs are never idle. Bringing workloads back on-premises—specifically for AI—is no longer an act of nostalgia for the data center era. It is a calculated, strategic move forced by the need to regain control over margins, latency, and data sovereignty.

Key Takeaways:

- The Scaling Cliff: POC economics collapse under always-on inference when “pay-per-minute” becomes “pay-forever.”

- Physics Wins: Data gravity makes cloud-first AI architectures nonviable at PB scale; moving data to the model is technical suicide.

- The New Stack: Repatriation now means Kubernetes, high-performance object storage, and GPU slicing — not SANs and ticket queues.

The “POC Trap” and the Scaling Cliff

When AI ROI stalls in the enterprise, the failure usually isn’t in the models; it’s in the economics.

We need to be honest about how these projects start. It is incredibly easy to swipe a corporate credit card, spin up a powerful H100 instance in AWS or Azure, and test a new model. It’s fast, it’s impressive, and for a few weeks, the bill is negligible. This is the Proof of Concept (POC) Trap.

The trap snaps shut when you move from “innovation” to “production.”

Architects often assume AI workloads behave like traditional web applications—bursty, stateless, and elastic. They don’t. AI workloads—specifically training and high-volume RAG (Retrieval-Augmented Generation) inference—are insatiable compute-hogs that run hot 24/7.

The Economic Reality Check

You can rent a Ferrari for a weekend road trip. It’s fun, and for 48 hours, it makes financial sense. But you do not rent a Ferrari to commute to work every single day. If your workload is baseload, your infrastructure must be owned — not rented.

Yet renting the Ferrari for the daily commute is exactly what I see enterprises doing. They use premium, elastic rental infrastructure for baseload, always-on demand. As the FinOps Foundation recently noted, unit cost visibility, not performance, is now the primary barrier to cloud sustainability.

The “Scaling Cliff” Scenario

This is the moment when architects stop optimizing for velocity and start optimizing for survivability.

- Month 1 (Dev): $2,000. (One engineer experimenting). Status: Hero.

- Month 3 (Pilot): $15,000. (Small internal user group). Status: Optimistic.

- Month 6 (Production): $180,000/month. (Public rollout, constant inference). Status: CFO Emergency Meeting.

Architectural Decision Point: Before you commit to a hyperscaler for a production rollout, you need to calculate the “break-even point”—the month where owning the hardware becomes cheaper than renting.
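
A minimal sketch of that break-even math, in Python. The $180,000 monthly cloud figure comes from the Month 6 scenario above; the hardware capex and the on-prem monthly operating cost are hypothetical placeholders, not quotes, so substitute your own numbers. The model is deliberately crude: it assumes flat monthly costs and ignores depreciation, financing, and refresh cycles.

```python
import math
from typing import Optional


def break_even_month(capex: float,
                     onprem_monthly_opex: float,
                     cloud_monthly: float) -> Optional[int]:
    """First month in which cumulative on-prem cost is at or below
    cumulative cloud cost. Returns None if renting is never more expensive."""
    monthly_saving = cloud_monthly - onprem_monthly_opex
    if monthly_saving <= 0:
        return None  # owning never pays back under these inputs
    return math.ceil(capex / monthly_saving)


# Cloud bill from the Month 6 scenario in the article.
CLOUD_MONTHLY = 180_000
# Hypothetical inputs: GPU cluster purchase price, and monthly
# power + colocation + staff cost to run it.
HYPOTHETICAL_CAPEX = 1_200_000
HYPOTHETICAL_OPEX = 30_000

print(break_even_month(HYPOTHETICAL_CAPEX, HYPOTHETICAL_OPEX, CLOUD_MONTHLY))
```

With these placeholder inputs the hardware pays for itself in month 8. The useful part is not the number but the sensitivity: rerun it with your real quotes and watch how quickly the break-even moves as the cloud bill grows.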

Run the numbers on our Cloud Egress Calculator to model the true cost of moving datasets to cloud inference endpoints vs. keeping them local.

Data Gravity is Undefeated

Beyond the financials, there is the stubborn reality of physics.

For the last ten years of the “Cloud Era,” the paradigm was simple: Code was heavy. Data was light. We moved the data to the cloud because that’s where the compute lived, and the datasets were manageable.

AI reverses this paradigm. Data Gravity has won.

Deep Learning models and Vector Databases require massive, localized context to function. We are talking about Petabytes of training data.

The Latency Tax: I recently reviewed an architecture for a manufacturing client. They had 5 Petabytes of proprietary machine logs, high-res images, and historical sensor data sitting in a secure on-prem object store. Their initial plan? Pipe that data over a WAN link to OpenAI’s public API for every single inference request to detect defects.

This is technical insanity.

- Latency: The round-trip time (RTT) alone killed the “real-time” requirement.

- Transfer Costs: Pushing that volume of data out of their data center over the WAN would have cost more than the engineering team’s combined salaries.

- Security: Sending proprietary IP across the public internet would have expanded the attack surface.
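
To put rough numbers on the physics, here is a back-of-envelope sketch in Python. The 5 PB figure is from the client example above; the 10 Gbps link speed and the 1,000 km distance are assumptions chosen for illustration, and the functions ignore protocol overhead, congestion, and retries, so real-world results would be worse.

```python
SECONDS_PER_DAY = 86_400
# Light in optical fibre covers roughly 200 km per millisecond
# (about two-thirds of its speed in a vacuum).
FIBRE_KM_PER_MS = 200.0


def transfer_days(dataset_bytes: float, link_gbps: float) -> float:
    """Days needed to push a dataset through a fully saturated link."""
    bits = dataset_bytes * 8
    seconds = bits / (link_gbps * 1e9)
    return seconds / SECONDS_PER_DAY


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time imposed by propagation delay alone."""
    return 2 * distance_km / FIBRE_KM_PER_MS


five_petabytes = 5e15  # decimal petabytes, from the client example
print(f"{transfer_days(five_petabytes, link_gbps=10):.1f} days")  # assumed 10 Gbps WAN
print(f"{min_rtt_ms(1_000):.1f} ms")                              # assumed 1,000 km path
```

Under these assumptions, moving the full dataset once takes about 46 days of a saturated link, and every request to a region 1,000 km away pays at least 10 ms of round-trip delay before any processing happens.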

The Rule of Proximity: If you want AI to be responsive, secure, and cost-effective, the compute must come to the data, not the other way around.

If your “Crown Jewel” data lives on a mainframe or a secure on-prem SAN, your AI inference engine needs to live in the rack next to it. We explored the specific architecture for this in our AI Infrastructure Strategy Guide, detailing the “Data-First” topology.

Decision Framework: Where Does This Workload Belong?

| Variable | Public Cloud AI | On-Prem / Edge AI |
| --- | --- | --- |
| Data Volume | Low / Cached (TB scale) | Massive / Dynamic (PB scale) |
| Data Sensitivity | Public / Low Risk | PII / HIPAA / Trade Secrets |
| Connection State | Tolerant of Jitter/Disconnects | Requires <5ms Deterministic Latency |
| Throughput | Bursty (Scale to Zero) | Constant (Baseload) |
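
One way to make the framework above repeatable is to encode it as a checklist. The sketch below is illustrative only: the table does not say how to weigh the four variables against each other, so the `min_signals` threshold and the 1,000 TB cutoff for “PB scale” are assumptions, and every name here is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Workload:
    data_volume_tb: float
    regulated_or_secret: bool      # PII / HIPAA / trade secrets
    needs_sub_5ms_latency: bool    # deterministic latency requirement
    constant_throughput: bool      # baseload rather than bursty


def on_prem_signals(w: Workload, pb_scale_tb: float = 1000.0) -> List[str]:
    """List the table rows on which this workload lands in the on-prem column."""
    signals = []
    if w.data_volume_tb >= pb_scale_tb:
        signals.append("data volume")
    if w.regulated_or_secret:
        signals.append("data sensitivity")
    if w.needs_sub_5ms_latency:
        signals.append("connection state")
    if w.constant_throughput:
        signals.append("throughput")
    return signals


def suggest_placement(w: Workload, min_signals: int = 2) -> str:
    """Assumed rule: two or more on-prem signals tips the decision."""
    if len(on_prem_signals(w)) >= min_signals:
        return "on-prem / edge"
    return "public cloud"


# Roughly the manufacturing example: 5 PB, proprietary, real-time, always-on.
factory = Workload(5000, True, True, True)
print(suggest_placement(factory), on_prem_signals(factory))
```

Treat the output as a conversation starter for the architecture review, not a verdict. A single hard constraint, such as a regulatory requirement, can reasonably override the count.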

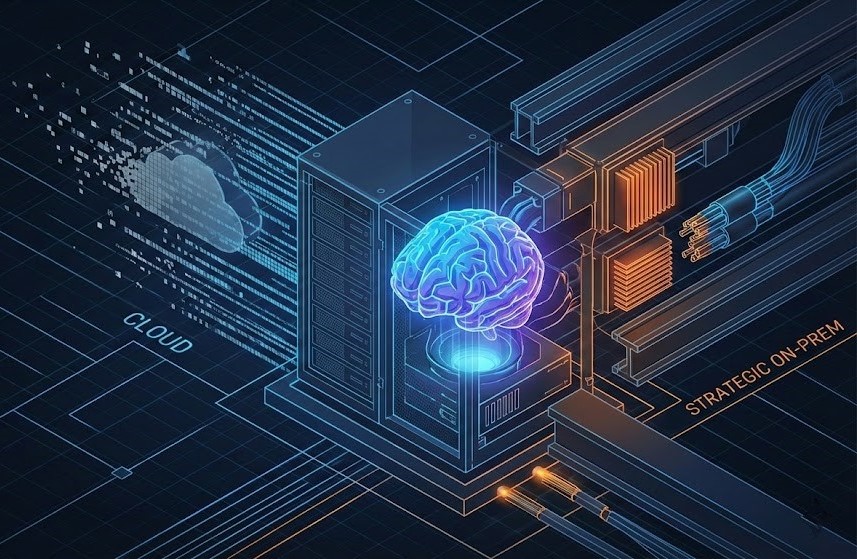

The New “Strategic” On-Prem Stack

The biggest misconception hindering the repatriation discussion is the idea that “going back on-prem” means returning to the brittle, monolithic architectures of 2010.

Repatriation without modernization is not strategy — it’s regression.

I’ve sat in meetings where the mere mention of on-premises infrastructure conjures up nightmares of waiting six weeks for a LUN to be provisioned on a SAN. If that is your definition of on-prem, stay in the cloud. You aren’t ready.

But strategic repatriation is not about nostalgia. It is about modernization. The new on-prem AI stack is fundamentally different. It is “Cloud Native, Locally Hosted.”

1. Kubernetes is the New Hypervisor

In the legacy stack, the atomic unit of compute was the Virtual Machine (VM). In the AI stack, the atomic unit is the Container. While you might still run VMs for isolation, the orchestration layer must be Kubernetes. This allows your data science teams to deploy training jobs using the same Helm charts and pipelines they used in the cloud.
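
As an illustration of what “the same pipelines” means in practice, the sketch below builds a Kubernetes Job manifest that requests GPUs and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. It assumes the cluster runs NVIDIA’s device plugin, which is what exposes the `nvidia.com/gpu` resource; the job name and image are hypothetical placeholders.

```python
import json


def gpu_training_job(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal batch/v1 Job that asks the scheduler for GPUs."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "backoffLimit": 0,  # do not retry a failed training run
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": "trainer",
                            "image": image,
                            # Extended resources such as GPUs are requested
                            # under limits; assumes the NVIDIA device plugin.
                            "resources": {"limits": {"nvidia.com/gpu": gpus}},
                        }
                    ],
                }
            },
        },
    }


# Hypothetical name and image, for illustration only.
manifest = gpu_training_job("finetune-demo", "registry.example.internal/train:latest")
print(json.dumps(manifest, indent=2))
```

The same manifest works whether the node sits in a hyperscaler region or in your own rack, which is the portability argument in a nutshell.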

2. The Death of the SAN / The Rise of High-Performance Object Storage

Traditional block storage (SAN) struggles with the massive, unstructured datasets required for AI training. The new stack relies on high-performance, S3-compatible object storage (like MinIO or Nutanix Objects) running over 100GbE or InfiniBand.

The War Story: I recently audited a firm trying to run RAG off a legacy NAS. The latency killed the project. By switching to a local NVMe-based object store, they reduced query times from 4 seconds to 300 ms, the difference between “interesting” and “usable.”

3. Sliced Compute (NVIDIA MIG)

In the cloud, you pay a premium for flexibility. On-prem, you used to pay for idle capacity. That changed with technologies like NVIDIA’s Multi-Instance GPU (MIG). We can now slice a single H100 GPU into as many as seven smaller, isolated instances, each with its own dedicated compute and memory. This mimics the “T-shirt sizing” of cloud instances.

The Architect’s Note: MIG solves utilization — not thermal density or power draw. That math still matters.
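
The utilization argument reduces to simple arithmetic, sketched below in Python. It assumes every service fits inside the smallest MIG slice, which will not hold for larger models, and the count of 20 services is a made-up example.

```python
import math

# Seven instances per GPU is the H100 figure cited in this section.
MAX_MIG_SLICES_PER_GPU = 7


def gpus_needed(num_services: int, use_mig: bool) -> int:
    """Physical GPUs required when each service gets one GPU or one MIG slice."""
    per_gpu = MAX_MIG_SLICES_PER_GPU if use_mig else 1
    return math.ceil(num_services / per_gpu)


services = 20  # hypothetical number of small inference services
print(gpus_needed(services, use_mig=False))  # one whole GPU per service
print(gpus_needed(services, use_mig=True))   # services packed into slices
```

For 20 small services, that is 20 physical GPUs without slicing versus 3 with it. Each of those 3 cards still needs its full power and cooling budget, so slicing shrinks the rack plan without removing it.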

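Licensing Warning: If you are retrofitting existing virtualized environments (VMware Cloud Foundation or vSphere Foundation, VCF/VVF), Broadcom’s subscription model is licensed per core, so it punishes inefficiency. Before you order hardware, verify your licensing density: how many licensed cores each new node adds.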

Run the numbers on our VMware Core Calculator to ensure your new high-density AI nodes don’t accidentally double your virtualization renewal costs.

Table: Modernizing the Stack – This table summarizes why “on-prem” in 2026 does not resemble “on-prem” in 2016.

| Feature | Legacy On-Prem (The “Nostalgia” Stack) | AI-Ready On-Prem (The “Strategic” Stack) |
| --- | --- | --- |
| Compute Unit | Static Virtual Machines (VMs) | Containers & GPU Slices (MIG) |
| Storage Access | Block/File (SAN/NAS) via Fibre Channel | S3-Compatible Object Store via 100GbE |
| Provisioning | Manual Tickets (Days/Weeks) | API-Driven / IaC (Seconds) |
| Scale Model | Scale-Up (Bigger Servers) | Scale-Out (HCI / Linear Nodes) |
| Primary Cost | Maintenance & Power | GPU Density & Cooling Efficiency |

Additional Resources:

- FinOps Foundation: State of FinOps Report – Reference for cloud unit economics challenges.

- Deloitte: State of Generative AI in the Enterprise – Reference for infrastructure misalignment.

- NVIDIA: Multi-Instance GPU (MIG) User Guide – Reference for GPU slicing architecture.

- NIST: AI Risk Management Framework (AI RMF) – Reference for governance and secure data architecture standards.

- Andreessen Horowitz (a16z): The Cost of Cloud, a Trillion Dollar Paradox – Reference for the Repatriation thesis.
