GPU Cluster Architecture: Engineering the Hardware Stack for Private LLM Training

Private AI infrastructure is systems engineering, not optimization. If you treat a GPU cluster like a standard virtualization farm, you will fail. I have seen deployments where millions of dollars in H100s sat idle 40% of the time because the architect underestimated the network fabric or the storage controller’s ability to swallow a checkpoint.

This guide strips away the marketing noise to focus on the physical realities of training large language models (LLMs). If your GPUs are waiting, your architecture is failing.

Key Takeaways

- The “NVLink Tax” is Mandatory for Training: PCIe GPUs are fine for inference, but for training large models, the SXM form factor is the only way to avoid interconnect bottlenecks.

- Networking is the New CPU: You will spend as much time tuning RoCEv2 congestion control as you will managing CUDA drivers. InfiniBand is easier to tune but harder to manage.

- Storage Throughput > Capacity: Checkpointing performance dictates your training efficiency. If your storage can’t swallow 4TB of RAM in under 60 seconds, you are burning GPU cycles.

- Power Density is the Limit: 10kW per chassis is the new normal. Standard 42U racks are now effectively 15U racks unless you have rear-door heat exchangers or DLC (Direct Liquid Cooling).

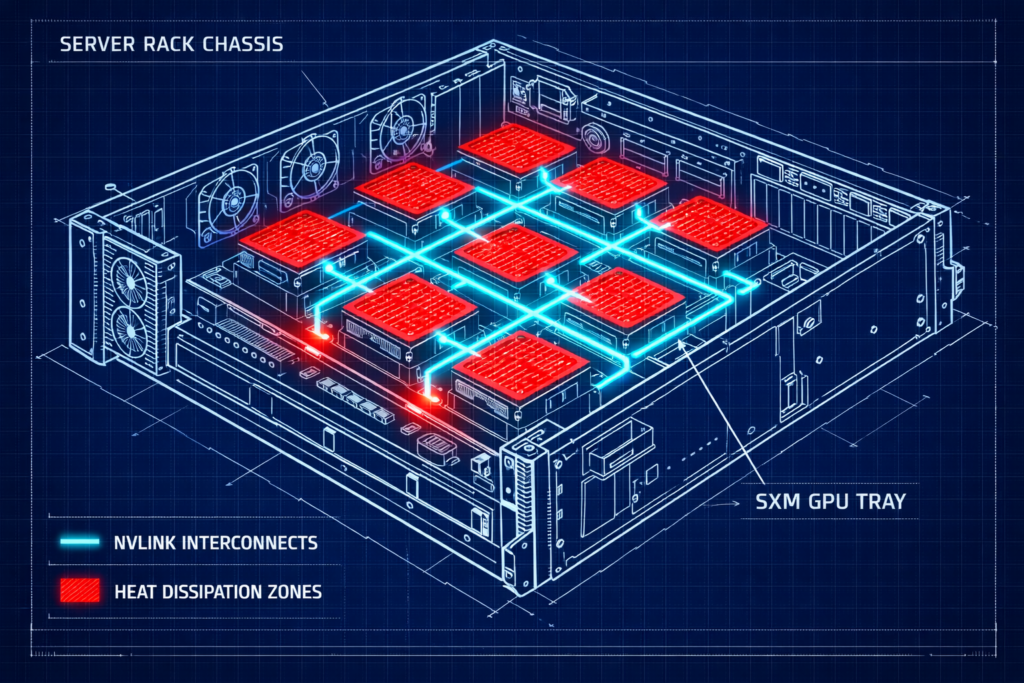

The Compute Layer: SXM vs. PCIe

The first question every CFO asks is, “Why can’t we buy the PCIe version? It’s cheaper and fits in our existing Dell R760s.”

As an architect, your job is to explain why that is a trap for training workloads.

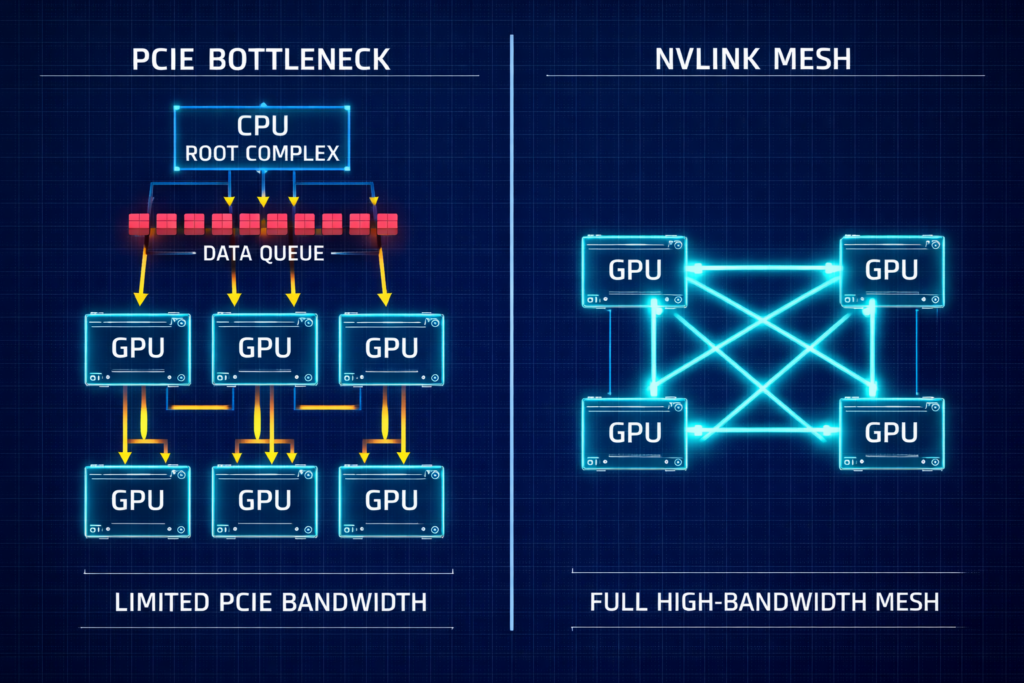

The Interconnect Bottleneck

In distributed training, GPUs do not work in isolation. They spend a significant percentage of every cycle syncing gradients (All-Reduce operations).

- PCIe Gen5: Caps out at ~128 GB/s bidirectional on an x16 link.

- NVLink (SXM5): Delivers ~900 GB/s of GPU-to-GPU bandwidth inside the node (NVIDIA HGX H100 Architecture).

For 70B+ parameter models, the time spent pushing gradients across PCIe can become the dominant factor in your total training time. You aren’t paying for compute; you are paying for your expensive GPUs to wait for data to traverse a bottleneck.
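To put rough numbers on that gap, here is a minimal sketch that estimates one gradient all-reduce at the two bandwidth figures quoted above. It assumes a ring all-reduce, 2-byte gradients, and 8 GPUs, and it ignores latency, sharding, and compute/communication overlap, so treat the result as a bandwidth-only lower bound rather than a prediction. The function name is illustrative.

```python
def ring_allreduce_seconds(payload_bytes: float, n_gpus: int, link_gb_per_s: float) -> float:
    """Bandwidth-only lower bound for one ring all-reduce.

    In a ring all-reduce each GPU sends (and receives) 2 * (N - 1) / N times
    the payload, so the time is that traffic divided by the link bandwidth.
    """
    if n_gpus < 2:
        return 0.0
    traffic_bytes = 2 * (n_gpus - 1) / n_gpus * payload_bytes
    return traffic_bytes / (link_gb_per_s * 1e9)


# Assumption: 70B parameters with 2-byte (FP16/BF16) gradients.
GRADIENT_BYTES = 70e9 * 2
N_GPUS = 8  # assumption: a single 8-GPU node

# Bandwidth figures are the ones quoted in the text, used as-is.
pcie_s = ring_allreduce_seconds(GRADIENT_BYTES, N_GPUS, 128)
nvlink_s = ring_allreduce_seconds(GRADIENT_BYTES, N_GPUS, 900)

print(f"PCIe Gen5: {pcie_s:.2f} s per all-reduce")
print(f"NVLink:    {nvlink_s:.2f} s per all-reduce")
print(f"Ratio:     {pcie_s / nvlink_s:.1f}x")
```

Real frameworks shard and overlap this traffic, so absolute times will differ; the ratio between the two interconnects is the part that tends to carry over.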

The Verdict:

- Use SXM5 (HGX) for foundation model training or heavy fine-tuning.

- Use PCIe for inference fleets or lightweight LoRA fine-tuning.

The Fabric: RoCEv2 vs. InfiniBand

InfiniBand (The “Pure” Choice)

If you have the budget and the specialized talent, InfiniBand is the gold standard. It offers native credit-based flow control (meaning effectively zero packet loss), extremely low deterministic latency, and superior collective communication via NCCL and SHARP.

- Drawback: It is an “island.” It requires specialized tooling, and engineers who understand it are rare and expensive.

RoCEv2 (The “Pragmatic” Choice)

RDMA over Converged Ethernet (RoCEv2) runs on standard Ethernet switches (Spectrum-4, Arista, Cisco). This is what I recommend for most enterprise builds because your existing network team can support it.

The Failure Mode: RoCEv2 requires perfect tuning of PFC (Priority Flow Control) and ECN (Explicit Congestion Notification).

Field Note: I have seen a single silent switch buffer overflow stall a training run for 40% of its duration. The network looked “up,” but the pause frames were choking the throughput. Monitoring priority pause frames is mandatory, not optional.
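One way to watch for this is to sample the NIC's pause counters and alert on growth. The sketch below parses the text printed by `ethtool -S <interface>` and reports which pause counters increased between two samples. Counter names are driver-specific; the `rx_prio3_pause` style names in the sample are an assumption, so check what your NICs actually expose.

```python
import re


def parse_pause_counters(ethtool_stats: str) -> dict[str, int]:
    """Extract every counter whose name contains 'pause' from `ethtool -S` text."""
    counters = {}
    for line in ethtool_stats.splitlines():
        match = re.match(r"\s*([\w.\-]+):\s*(\d+)\s*$", line)
        if match and "pause" in match.group(1).lower():
            counters[match.group(1)] = int(match.group(2))
    return counters


def pause_deltas(before: dict[str, int], after: dict[str, int]) -> dict[str, int]:
    """Return only the pause counters that grew between two samples."""
    deltas = {}
    for name, value in after.items():
        growth = value - before.get(name, 0)
        if growth > 0:
            deltas[name] = growth
    return deltas


# Hypothetical samples taken a few seconds apart (counter names are assumed).
SAMPLE_T0 = """NIC statistics:
     rx_packets: 1000
     rx_prio3_pause: 10
     tx_prio3_pause: 4
"""
SAMPLE_T1 = """NIC statistics:
     rx_packets: 9000
     rx_prio3_pause: 250
     tx_prio3_pause: 4
"""

growing = pause_deltas(parse_pause_counters(SAMPLE_T0), parse_pause_counters(SAMPLE_T1))
print(growing)
```

In practice you would feed this from a periodic `ethtool -S` call per interface and export the deltas to whatever monitoring system you already run, alongside the equivalent counters on the switch side.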

Storage: The Checkpoint Problem

LLM training crashes are inevitable. You survive them by writing checkpoints (snapshots of model weights) every 30–60 minutes. This isn’t just about saving data; it’s about saving progress.

- The Math: A 175B model checkpoint is ~2.5TB (weights + optimizer states + metadata).

- The Target: You need this write to finish in < 60 seconds to minimize GPU idle time.

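- The Requirement: Your storage subsystem must sustain ~40 GB/s of aggregate write throughput (2.5 TB in 60 seconds works out to about 42 GB/s), shared across every node writing its shard of the checkpoint.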
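The arithmetic behind those three bullets can be sketched as follows. The 14 bytes per parameter figure is an assumption (2-byte weights plus FP32 master weights and two FP32 Adam moments) chosen because it lands close to the ~2.5TB quoted above; your optimizer and precision settings may differ. The idle estimate also assumes a synchronous checkpoint that blocks the GPUs for the whole write.

```python
def checkpoint_bytes(n_params: float, bytes_per_param: float = 14.0) -> float:
    """Approximate checkpoint size. bytes_per_param=14 is an assumption:
    2 (FP16 weights) + 4 (FP32 master weights) + 4 + 4 (Adam moments)."""
    return n_params * bytes_per_param


def required_write_gb_per_s(size_bytes: float, window_s: float) -> float:
    """Aggregate write throughput needed to finish within window_s seconds."""
    return size_bytes / window_s / 1e9


def idle_fraction(write_s: float, interval_s: float) -> float:
    """Share of wall-clock time GPUs sit idle if checkpoints are synchronous."""
    return write_s / (interval_s + write_s)


size = checkpoint_bytes(175e9)
print(f"Checkpoint size:     {size / 1e12:.2f} TB")
print(f"Needed for 60 s:     {required_write_gb_per_s(size, 60):.1f} GB/s")
print(f"Idle at 60 s/30 min: {idle_fraction(60, 30 * 60):.1%}")
print(f"Idle at 600 s/30 min: {idle_fraction(600, 30 * 60):.1%}")
```

The last two lines show why the write window matters: the same checkpoint interval costs far more GPU time when the storage takes ten minutes instead of one.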

Rack2Cloud Tool Tip: Storage Profiling for Checkpoints

Before you sign the PO for GPUs, simulate checkpoint bursts on your storage array.

- Benchmark I/O: Use fio to simulate 2–4TB sequential writes.

- Test Concurrency: Run the simulation across multiple nodes simultaneously.

- Check Tail Latency: Ensure metadata storms don’t throttle your throughput.

- Evaluate Topology: Consider Local NVMe scratch + Parallel FS / object store.
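A minimal sketch of the benchmark step, wrapping fio from Python so the same job can be launched on several nodes at once. The job name, directory, job count, and sizes are all placeholder assumptions; 8 jobs of 256G each puts the total in the 2TB range. The parser assumes fio's JSON output reports write bandwidth under `jobs[].write.bw` in KiB/s, which is worth confirming against the fio version you run.

```python
import json


def build_fio_command(directory: str, size_per_job: str = "256G", num_jobs: int = 8,
                      block_size: str = "1M", io_depth: int = 32) -> list[str]:
    """Build a large sequential-write fio job that mimics a checkpoint burst."""
    return [
        "fio",
        "--name=checkpoint-burst",      # hypothetical job name
        f"--directory={directory}",     # mount point of the storage under test
        "--rw=write",                   # sequential writes
        f"--bs={block_size}",
        f"--size={size_per_job}",       # per job, so total = size * numjobs
        f"--numjobs={num_jobs}",
        "--ioengine=libaio",
        f"--iodepth={io_depth}",
        "--direct=1",                   # bypass the page cache
        "--end_fsync=1",                # include the final flush in the timing
        "--group_reporting",
        "--output-format=json",
    ]


def write_gb_per_s(fio_json: str) -> float:
    """Sum write bandwidth across reported jobs (assumes `bw` is in KiB/s)."""
    report = json.loads(fio_json)
    kib_per_s = sum(job["write"]["bw"] for job in report["jobs"])
    return kib_per_s * 1024 / 1e9


# "/mnt/checkpoint-test" is a placeholder path, not a real recommendation.
command = build_fio_command("/mnt/checkpoint-test")
print(" ".join(command))
```

To run it, pass `command` to `subprocess.run(..., capture_output=True, text=True)` and feed the captured stdout to `write_gb_per_s`. Start the same command on every node at the same moment, then add up the per-node results to see whether the array holds its throughput under concurrent load.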


Decision Framework: The “Build” Tiers

| Tier | Use Case | GPU Spec | Network | Storage |
| --- | --- | --- | --- | --- |
| Sandbox | Prototyping, <13B models | 4x H100 NVL (PCIe) | 400GbE (Single Rail) | Local NVMe RAID |
| Enterprise | Fine-tuning 70B, RAG | 8x H100 SXM5 (1 Node) | 800GbE RoCEv2 | All-Flash NAS (NetApp/Pure) |
| Foundry | Training from scratch | 32+ H100 SXM5 (4+ Nodes) | 3.2Tb InfiniBand/Spectrum-X | Parallel FS (Weka/VAST) |

Facilities: The Silent Killer

You cannot treat these systems like standard 2U servers.

- Power: Expect ~10.2 kW per chassis.

- Cooling: If your ambient inlet temp exceeds 25°C, your GPUs will throttle.

- Rack Density: Without rear-door heat exchangers or Direct Liquid Cooling (DLC), a standard 42U rack is effectively a 15U rack. You simply cannot evacuate the heat fast enough.
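The rack density point can be sanity-checked with a few lines of arithmetic. In the sketch below only the ~10.2 kW per chassis comes from the figures above; the rack power budgets and the 8U chassis height are hypothetical inputs, so substitute the numbers from your own facility and vendor spec sheets.

```python
def chassis_per_rack(rack_power_kw: float, chassis_kw: float,
                     rack_units: int = 42, chassis_units: int = 8) -> int:
    """Chassis that fit in a rack, limited by power/cooling or by space,
    whichever runs out first. chassis_units=8 is an assumed height."""
    by_power = int(rack_power_kw // chassis_kw)
    by_space = rack_units // chassis_units
    return min(by_power, by_space)


CHASSIS_KW = 10.2   # per-chassis draw from the text
CHASSIS_UNITS = 8   # assumption: chassis height in rack units

# Hypothetical rack budgets: an air-cooled rack vs. one with rear-door or liquid cooling.
for label, budget_kw in [("air-cooled (25 kW)", 25.0), ("liquid-assisted (55 kW)", 55.0)]:
    count = chassis_per_rack(budget_kw, CHASSIS_KW, chassis_units=CHASSIS_UNITS)
    print(f"{label}: {count} chassis, {count * CHASSIS_UNITS}U of 42U used")
```

Under these assumed inputs the air-cooled rack runs out of power and cooling long before it runs out of space, which is the same effect the "42U is effectively 15U" rule of thumb describes.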

Architect’s Verdict

Private AI infrastructure is a discipline of failure management. Failures usually come from:

- Network misconfigurations.

- Storage bottlenecks.

- Power & cooling underestimation.

- Scheduler/topology ignorance.

Mandatory for Production LLM Training:

- SXM-class GPUs.

- Lossless or near-lossless fabric.

- Checkpoint-capable storage.

- Facility-grade power/cooling.

- Scheduler-aware topology.

Anything less is not an architecture—it’s a gamble.

Additional Resources

- NVIDIA HGX Platform (AI Compute Infrastructure)

- NVIDIA Certified Systems (Reference Architectures)

- InfiniBand Networking (NVIDIA)

- NVIDIA Spectrum‑X Ethernet Fabric

- NCCL (NVIDIA Collective Communications Library)

- VAST Data – AI Storage Overview

- WekaIO Parallel File System (WekaFS)

- Lustre Parallel File System

- NetApp AI Infrastructure & Solutions

- Pure Storage + NVIDIA AI Integration (Certifications & Case Studies)

- fio (Flexible I/O Tester) — Official Documentation
