Resource Pooling Physics: Mastering CPU Wait Time and Memory Ballooning in High-Density Clusters

I’ve spent 25 years watching infrastructure fail, and here’s what I’ve learned: most outages don’t kick off with some dramatic meltdown. They creep in quietly. You get a bit of scheduler pressure, some memory reclaim, and no one’s dashboard even notices.

Your CPU looks fine at 40%. There’s a 30% buffer left on memory. Still, the helpdesk queue keeps growing with people complaining about “sluggish” apps. Tail latencies shoot up. Suddenly, your recovery windows are way too long for comfort. This isn’t some mystery. It’s just physics at work. If you want a solid grounding in virtualization, start with the Modern Virtualization Learning Path.

So let’s get into the nuts and bolts—the resource pooling stuff that actually causes production to break: run queue depth, memory ballooning, NUMA collapse. This isn’t about tuning for the sake of tuning. The goal is to engineer clear, predictable limits, so you’re not getting paged at 3 AM.

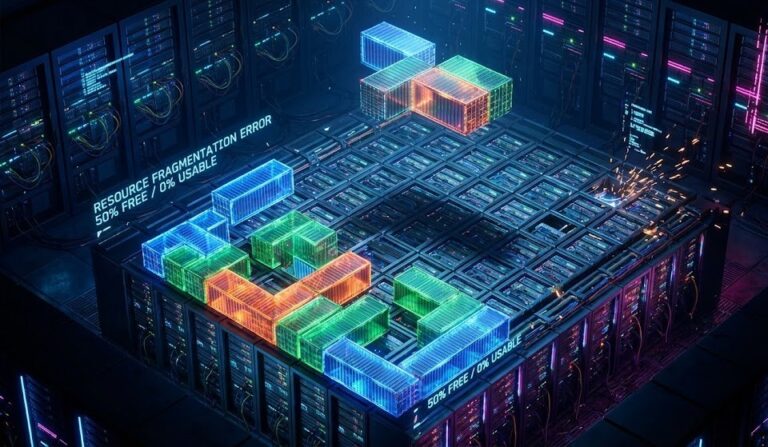

The Illusion of Utilization

Your dashboard says “CPU: 40%.” Your users say “The app is frozen.” Both are true.

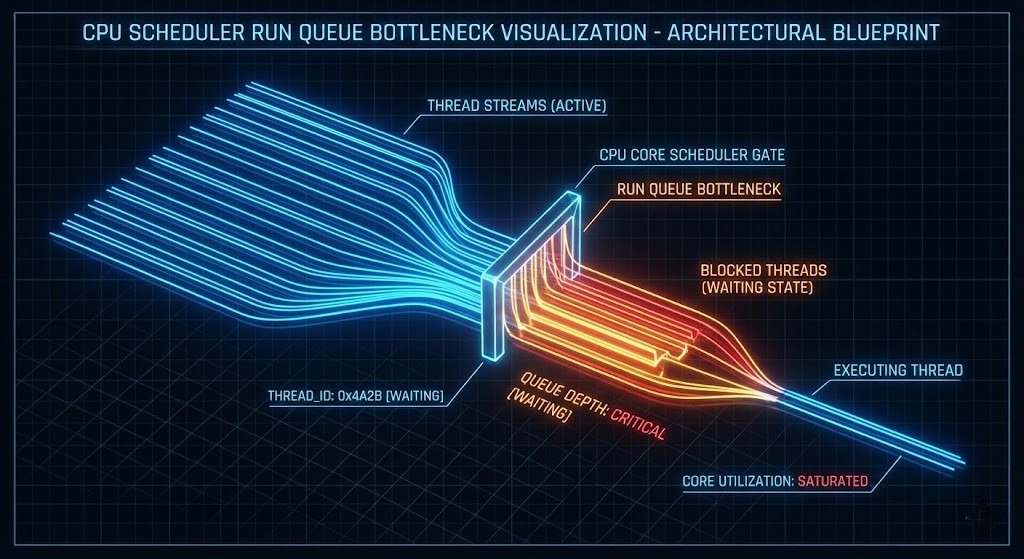

In high-density HCI environments, utilization is a vanity metric. Contention is the reality. When a VM freezes for 500ms, it isn’t because the CPU is full; it’s because the hypervisor’s CPU scheduler hasn’t given that VM’s vCPU a turn on a physical core yet.

This wait time (%RDY in esxtop on vSphere, or steal time, %st, as seen from inside a Linux guest) is the silent killer of transactional databases and real-time AI inference. It is also the primary reason we advocate for Kernel Hardening for Architects: a congested scheduler is also a vulnerable one.

| Metric | What It Measures | Why It Matters |
|---|---|---|
| Run Queue Depth | How many processes are waiting for CPU | Shows scheduler congestion: the real bottleneck. |
| Memory Reclaim Rate | How often memory is forcibly taken | Indicates ballooning, swapping, or working set eviction. |

CPU Scheduling Physics: When Cores Lie

The Run Queue Reality

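The run queue is simply the line of runnable tasks begging for CPU cycles. If you have 32 physical cores and a run queue that regularly climbs above 32, some of those tasks are waiting rather than running, and latency inflation is guaranteed. It doesn’t matter if your utilization says 50%: an averaged utilization graph smooths short bursts of saturation into a comfortable-looking number.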
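A minimal sketch of that comparison, assuming a Linux host or guest whose /proc/stat exposes the procs_running counter (tasks runnable at the instant the file is read). The helper name check_run_queue is hypothetical. nproc reports logical CPUs, so on hyperthreaded hardware pass the physical core count explicitly as the second argument.

```bash
#!/usr/bin/env bash
# check_run_queue (hypothetical helper): compare runnable tasks with a core count.
#   $1: stat file to read (default /proc/stat)
#   $2: core count (default: nproc, which counts logical CPUs, not physical cores)
check_run_queue() {
  local stat_file="${1:-/proc/stat}"
  local cores="${2:-$(nproc)}"
  local running
  # procs_running = tasks runnable when the file was read; on a live system
  # this includes the awk process doing the reading, so treat it as approximate.
  running=$(awk '$1 == "procs_running" { print $2 }' "$stat_file")
  if [ -z "$running" ]; then
    echo "UNKNOWN: procs_running not found in $stat_file" >&2
    return 2
  fi
  if [ "$running" -gt "$cores" ]; then
    echo "SATURATED runnable=$running cores=$cores"
  else
    echo "OK runnable=$running cores=$cores"
  fi
}
```

One reading is a snapshot, not a trend. To test “consistently,” sample in a loop, for example `for i in 1 2 3 4 5; do check_run_queue /proc/stat 32; sleep 1; done`.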

This happens when:

- Oversubscription: You stacked too many vCPUs on the host.

- Broken NUMA: The scheduler is fighting to find local memory.

- Noisy Neighbors: The control plane and data plane are fighting for the same cycles.

Architectural Design Rule: Never mix Control Plane (latency-sensitive) with Batch/AI workloads. They have opposing scheduling needs. This is why our Day-2 Reality of Nutanix AHV guide emphasizes isolating CVM resources from heavy guest workloads.

Memory Physics: Ballooning Is Controlled Starvation

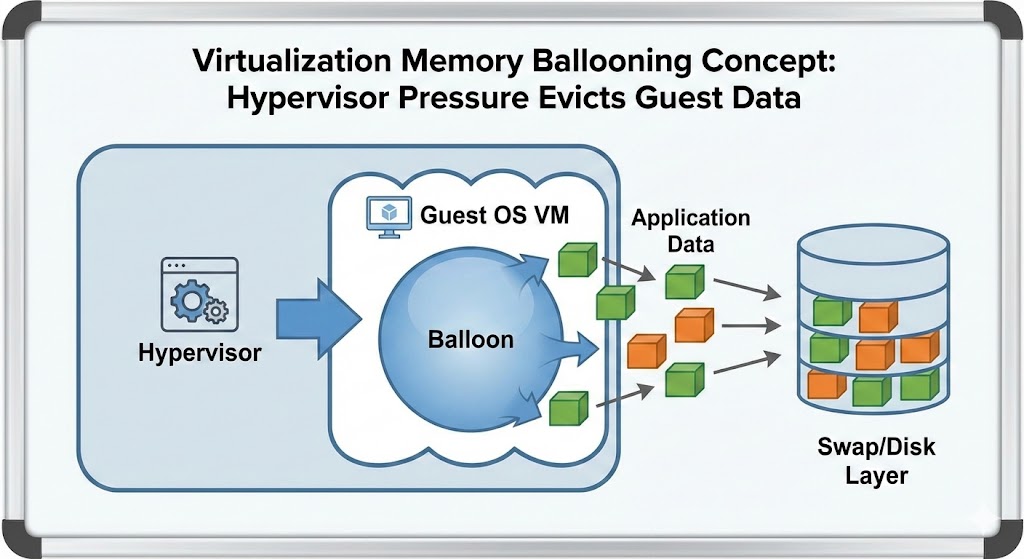

Let’s get one thing straight: Memory ballooning is not an optimization feature. It is forced eviction.

When the host hits memory pressure, it tells the balloon driver inside the guest OS to inflate. The driver allocates pages from the guest, and the host reclaims the physical memory behind them. To satisfy that allocation, the guest kernel starts dropping cache or swapping to disk.

Why Ballooning kills performance:

- It’s invisible: Unlike swap, ballooning often doesn’t trigger standard alerts until it’s too late.

- It destroys caching: The first thing a guest OS dumps is the file system cache—the exact thing keeping your I/O performance acceptable.

- The Feedback Loop: Guest drops cache -> Disk reads increase -> I/O wait rises -> CPU wait rises.
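The feedback loop can be watched from inside a Linux guest with vmstat. The sketch below is a hypothetical helper, detect_cache_feedback, that reads vmstat output on stdin, finds the cache, bi (blocks read in) and wa (I/O wait %) columns by header name, skips the first data row (vmstat’s since-boot average), and compares the first interval sample with the last. It flags only the pattern described above: cache shrinking while disk reads and I/O wait both rise. Two points make a crude trend, so treat it as a triage aid rather than an alert.

```bash
#!/usr/bin/env bash
# detect_cache_feedback (hypothetical helper): read vmstat DELAY COUNT output
# on stdin and report whether cache fell while bi (blocks in) and wa (I/O wait %) rose.
detect_cache_feedback() {
  awk '
    # Header row: map each column name (r, b, swpd, free, buff, cache, ...) to its position
    $1 == "r" && $2 == "b" {
      for (i = 1; i <= NF; i++) col[$i] = i
      next
    }
    # Data rows start with a number
    ("cache" in col) && $1 ~ /^[0-9]+$/ {
      n++
      if (n == 1) next   # first row holds averages since boot, skip it
      c = $(col["cache"]) + 0; b = $(col["bi"]) + 0; w = $(col["wa"]) + 0
      if (n == 2) { c0 = c; b0 = b; w0 = w }
      c1 = c; b1 = b; w1 = w
    }
    END {
      if (n < 3) { print "INSUFFICIENT_SAMPLES"; exit 2 }
      if (c1 < c0 && b1 > b0 && w1 > w0)
        printf "FEEDBACK_LOOP cache=%d->%d bi=%d->%d wa=%d->%d\n", c0, c1, b0, b1, w0, w1
      else
        print "NO_LOOP"
    }'
}
```

For example: `vmstat 5 12 | detect_cache_feedback`.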

If you are seeing ballooning during a migration, stop. Consult the vSphere to AHV Migration Strategy immediately to resize your target containers before you crash the source.

NUMA Locality: The Hidden Tax

High-density clusters are where NUMA locality goes to die. If a VM’s vCPU is on Socket 0, but its memory pages are on Socket 1, every memory access has to traverse the interconnect (QPI/UPI/Infinity Fabric).

- Result: Each remote access pays extra latency, up to roughly double that of a local access.

- Symptom: “Random” application slowness during peak hours.

NUMA drift isn’t a tuning issue; it’s an architectural density failure.
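One way to see NUMA drift on a KVM host is to check where a VM’s QEMU process actually holds its memory. /proc/<pid>/numa_maps lists each mapping with N<node>=<pages> fields (pages resident on that node) and a kernelpagesize_kB= field. The sketch below, a hypothetical numa_locality helper, totals those into kB per node so huge pages are weighted correctly. numastat -p <pid> from the numactl package gives a similar per-node breakdown.

```bash
#!/usr/bin/env bash
# numa_locality (hypothetical helper): total a process's resident memory per NUMA node.
#   $1: a numa_maps file, e.g. /proc/<qemu-pid>/numa_maps (reading another
#       process's numa_maps usually requires root)
numa_locality() {
  local maps="${1:?usage: numa_locality /proc/PID/numa_maps}"
  awk '
    {
      ps = 4   # fallback page size in kB if kernelpagesize_kB is missing
      for (i = 1; i <= NF; i++)
        if ($i ~ /^kernelpagesize_kB=/) { split($i, kv, "="); ps = kv[2] + 0 }
      # N<node>=<pages> fields: pages of this mapping resident on that node
      for (i = 1; i <= NF; i++)
        if ($i ~ /^N[0-9]+=/) {
          split(substr($i, 2), kv, "=")
          kb[kv[1]] += kv[2] * ps
          total += kv[2] * ps
        }
    }
    END {
      if (total == 0) { print "NO_PAGES"; exit 1 }
      for (node in kb)
        printf "node%s %d kB %.1f%%\n", node, kb[node], 100 * kb[node] / total
    }' "$maps" | sort
}
```

If a VM’s vCPUs are pinned to Socket 0 but most of its memory reports against node1, most of its memory accesses are crossing the interconnect.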

The Scheduler Health Toolkit

If you can’t see run queues and ballooning, you’re flying blind. Here is how I check for the physics of failure across different platforms.

1. Platform-Agnostic Quick Checks (Linux Hosts)

CPU Scheduler Health

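```bash
uptime        # 1/5/15-minute load averages (on Linux these also count tasks in uninterruptible sleep)
vmstat 1 5    # five 1-second samples; the first line is an average since boot
```

- Watch `r`: if the run queue stays above the core count, you are saturated.
- Watch `st`: sustained steal time above 0 means the hypervisor is running other work while your vCPUs wait.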
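vmstat’s st column is derived from the steal counter in /proc/stat. For a figure you can log or graph, the sketch below computes steal as a percentage of total CPU time between two snapshots of the aggregate cpu line. The helper name steal_pct is hypothetical; it assumes the usual field order (user, nice, system, idle, iowait, irq, softirq, steal, guest, guest_nice) and leaves guest/guest_nice out of the total because the kernel already counts them inside user/nice.

```bash
#!/usr/bin/env bash
# steal_pct (hypothetical helper): steal time as a % of CPU time between two
# snapshots of /proc/stat.
#   $1: earlier snapshot   $2: later snapshot
steal_pct() {
  local before="$1" after="$2"
  awk '
    # Aggregate line: cpu user nice system idle iowait irq softirq steal guest guest_nice
    $1 == "cpu" {
      total = 0
      # Sum user..steal ($2..$9); guest/guest_nice are already inside user/nice
      for (i = 2; i <= 9; i++) total += $i
      if (FNR == NR) { t0 = total; s0 = $9 } else { t1 = total; s1 = $9 }
    }
    END {
      dt = t1 - t0
      if (dt <= 0) { print "NO_DELTA"; exit 1 }
      printf "%.1f\n", 100 * (s1 - s0) / dt
    }' "$before" "$after"
}
```

For example: `cat /proc/stat > /tmp/stat.0; sleep 5; cat /proc/stat > /tmp/stat.1; steal_pct /tmp/stat.0 /tmp/stat.1`.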

Memory Pressure

```bash
grep -E '^(MemFree|MemAvailable|Buffers|Cached):' /proc/meminfo
```

- Red Flag: If `MemAvailable` is collapsing while `Buffers`/`Cached` is near zero, the kernel has no cache left to reclaim and you are thrashing.
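The same check can be scripted. This hypothetical mem_pressure helper reads a meminfo file (default /proc/meminfo), reports MemAvailable as a percentage of MemTotal alongside Cached and Buffers, and flags PRESSURE below a threshold. The 15% default simply mirrors the Prometheus rule later in this article; it is an assumption, not a kernel constant.

```bash
#!/usr/bin/env bash
# mem_pressure (hypothetical helper): MemAvailable as a % of MemTotal, plus cache.
#   $1: meminfo file (default /proc/meminfo)
#   $2: threshold in percent (default 15, an assumption, not a kernel constant)
mem_pressure() {
  local meminfo="${1:-/proc/meminfo}"
  local threshold="${2:-15}"
  awk -v thr="$threshold" '
    $1 == "MemTotal:"     { total = $2 }
    $1 == "MemAvailable:" { avail = $2 }
    $1 == "Buffers:"      { buffers = $2 }
    $1 == "Cached:"       { cached = $2 }   # exact match, so SwapCached is ignored
    END {
      if (total == 0) { print "UNKNOWN"; exit 2 }
      pct = 100 * avail / total
      printf "%s available=%.1f%% cached_kB=%d buffers_kB=%d\n",
        (pct < thr ? "PRESSURE" : "OK"), pct, cached, buffers
    }' "$meminfo"
}
```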

2. Nutanix AHV / KVM

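```bash
virsh list --all                      # every defined VM, running or shut off
virsh domstats <vm-name> --balloon    # balloon.current and balloon.maximum, in KiB
```

- Red Flag: If `balloon.current` is below `balloon.maximum`, the balloon is inflated and memory is being reclaimed from the guest (unless the VM was deliberately configured to run with less than its maximum).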
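To sweep every VM on a host at once, the hypothetical balloon_check helper below parses `virsh domstats --balloon` output (a `Domain: 'name'` line followed by indented key=value pairs) and marks a domain INFLATED when balloon.current is below balloon.maximum. The same caveat applies: a VM configured to boot with less than its maximum memory will also show up, so confirm intent before treating it as reclaim.

```bash
#!/usr/bin/env bash
# balloon_check (hypothetical helper): read virsh domstats --balloon output on
# stdin and flag domains whose balloon.current is below balloon.maximum (both KiB).
balloon_check() {
  awk '
    /^Domain:/ { dom = $2; gsub("\047", "", dom) }   # strip the quotes around the name
    $1 ~ /^balloon\.current=/ { split($1, kv, "="); cur[dom] = kv[2] + 0 }
    $1 ~ /^balloon\.maximum=/ { split($1, kv, "="); max[dom] = kv[2] + 0 }
    END {
      for (d in cur)
        if (d in max)
          printf "%s %s current_KiB=%d maximum_KiB=%d\n", d,
            (cur[d] < max[d] ? "INFLATED" : "OK"), cur[d], max[d]
    }' | sort
}
```

For example: `virsh domstats --balloon | balloon_check`.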

3. VMware ESXi (The Classic)

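Run esxtop in the ESXi shell (press c for the CPU view and m for the memory view).

- %RDY (Ready): > 5% per vCPU means scheduler contention. esxtop sums %RDY across all of a VM’s vCPUs, so expand the VM with e or divide by its vCPU count before comparing.

- %CSTP (Co-Stop): > 3% means the VM has more vCPUs than the scheduler can keep co-scheduled (SMP scheduling collapse).

- MEMCTL: > 0 means ballooning is active (MEMCTL/MB in the memory view header; MCTLSZ per VM).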
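esxtop shows %RDY directly, but vCenter performance charts report CPU Ready as a summation in milliseconds per sample interval (20 seconds for real-time charts). A sketch of the usual conversion, ready% = ready_ms / (interval_s × 1000) × 100, divided by the vCPU count so it can be compared with the 5% %RDY guideline on a per-vCPU basis. cpu_ready_pct is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# cpu_ready_pct (hypothetical helper): convert a CPU Ready summation (ms) from a
# performance chart into a per-vCPU percentage.
#   $1: ready time in ms for the sample
#   $2: sample interval in seconds (20 for real-time charts)
#   $3: vCPU count (default 1)
cpu_ready_pct() {
  local ready_ms="$1" interval_s="$2" vcpus="${3:-1}"
  awk -v r="$ready_ms" -v i="$interval_s" -v v="$vcpus" 'BEGIN {
    if (i <= 0 || v <= 0) { print "invalid interval or vCPU count" > "/dev/stderr"; exit 2 }
    # ready% = ready_ms / (interval_s * 1000) * 100, then spread across vCPUs
    printf "%.2f\n", r / (i * 1000) * 100 / v
  }'
}
```

For example, `cpu_ready_pct 4000 20 4` prints 5.00: 4,000 ms of ready time in a 20-second sample is 20% for the VM, or 5% per vCPU across four vCPUs.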

4. Kubernetes Nodes

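Check Pressure Stall Information (PSI), the modern way to see contention on Linux 4.20 and later kernels.

```bash
cat /proc/pressure/cpu
cat /proc/pressure/memory
```

- Red Flag: A sustained non-zero `avg10`/`avg60` on the `some` line means tasks are stalling for lack of the resource; a non-zero `full` line for memory means every non-idle task stalled at once. (The `total` field is a cumulative counter, so it is almost always above 0.)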
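A sketch for scripting the PSI check, assuming the standard two-line format (some/full, each with avg10, avg60, avg300 and total fields). The helper name psi_check and its 10% default threshold on avg10 are hypothetical; pick a threshold from your own baseline. cgroup v2 exposes the same format in its cpu.pressure and memory.pressure files, so the helper can also be pointed at a pod’s cgroup.

```bash
#!/usr/bin/env bash
# psi_check (hypothetical helper): report the avg10 stall share from a PSI file.
#   $1: /proc/pressure/cpu, /proc/pressure/memory, /proc/pressure/io, or a cgroup v2
#       *.pressure file (same format)
#   $2: avg10 threshold in percent (default 10, an assumption; tune from your baseline)
psi_check() {
  local psi_file="${1:?usage: psi_check PSI_FILE [THRESHOLD]}"
  local threshold="${2:-10}"
  awk -v thr="$threshold" '
    $1 == "some" || $1 == "full" {
      avg10 = 0
      for (i = 2; i <= NF; i++)
        if ($i ~ /^avg10=/) { split($i, kv, "="); avg10 = kv[2] + 0 }
      printf "%s avg10=%.2f %s\n", $1, avg10, (avg10 > thr ? "STALLING" : "OK")
    }' "$psi_file"
}
```

For example: `psi_check /proc/pressure/memory 5`.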

Prometheus Alert Rules

Don’t wait for the phone to ring. Alert on the physics.

CPU Scheduler Saturation

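```yaml
- alert: SchedulerRunQueueSaturation
  # count without (cpu, mode) over the idle series yields one CPU count per node,
  # carrying the same instance/job labels as node_load1, so the ratio is computed per node
  expr: node_load1 / count without (cpu, mode) (node_cpu_seconds_total{mode="idle"}) > 1
  for: 2m
  labels:
    severity: warning
  annotations:
    summary: "Run queue exceeds core count: Scheduler saturation detected"
```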

Memory Reclaim / Ballooning

```yaml
- alert: MemoryPressureDetected
  expr: node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes < 0.15
  for: 2m
  labels:
    severity: critical
  annotations:
    summary: "Available Memory < 15%: Critical Risk of Ballooning"
```

Architectural Design Rules (Non-Negotiable)

These rules are part of the Modern Infrastructure & IaC Learning Path—because you should be enforcing them with code, not hope.

- Separate Resource Pools: Never mix Control Plane (latency-sensitive) with Batch/AI workloads. They have opposing scheduling needs.

- Enforce Hard Memory Floors: Use reservations for production. Disable ballooning for databases. Treat swap as a failure state.

- Cap vCPU Oversubscription:
  - Stateful: 1.5:1 max.
  - Stateless: 4:1 max.
  - AI/Batch: Dedicated (1:1).

Anything beyond this is gambling with scheduler determinism.
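The memory floor and vCPU cap rules lend themselves to the “enforce with code” approach. The sketch below has two hypothetical helpers: check_oversub compares allocated vCPUs against physical cores using the caps listed above, and balloon_disabled checks a libvirt domain XML for `<memballoon model='none'/>`, the libvirt setting that removes the balloon device. It assumes a generic libvirt/KVM host; on Nutanix AHV, VM definitions are managed by the Acropolis control plane (Prism or acli), so treat it as read-only inspection there.

```bash
#!/usr/bin/env bash
# check_oversub (hypothetical helper): vCPU:pCPU ratio against this article's caps.
#   $1: tier (stateful | stateless | ai | batch)
#   $2: total vCPUs allocated on the host   $3: physical cores (not hyperthreads)
check_oversub() {
  local tier="$1" vcpus="$2" pcores="$3" cap
  case "$tier" in
    stateful)  cap=1.5 ;;
    stateless) cap=4 ;;
    ai|batch)  cap=1 ;;
    *) echo "unknown tier: $tier" >&2; return 2 ;;
  esac
  awk -v v="$vcpus" -v p="$pcores" -v c="$cap" -v t="$tier" 'BEGIN {
    if (p <= 0) { print "physical core count must be > 0" > "/dev/stderr"; exit 2 }
    ratio = v / p
    printf "%s ratio=%.2f:1 cap=%s:1 %s\n", t, ratio, c, (ratio > c ? "EXCEEDS" : "OK")
  }'
}

# balloon_disabled (hypothetical helper): inspect libvirt domain XML (file or stdin)
# for <memballoon model='none'/>, which removes the balloon device.
balloon_disabled() {
  local xml
  xml=$(cat "${1:--}")   # read once, so piped input can be checked twice
  if printf '%s\n' "$xml" | grep -Eq "<memballoon[[:space:]]+model=['\"]none['\"]"; then
    echo "DISABLED"
  elif printf '%s\n' "$xml" | grep -q "<memballoon"; then
    echo "ENABLED"
  else
    echo "NO_DEVICE_DEFINED"
  fi
}
```

For example, `check_oversub stateful 64 32` prints `stateful ratio=2.00:1 cap=1.5:1 EXCEEDS`, and `virsh dumpxml db01 | balloon_disabled` (db01 being a placeholder name) prints DISABLED, ENABLED or NO_DEVICE_DEFINED.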

Closing Principle

Utilization is a statistic. Contention is physics.

Architecture must obey physics, or you will be punished by it. If you want deterministic performance, you must engineer deterministic scheduling. Everything else is just observability theater.

Additional Resources

- Linux Kernel Documentation: Pressure Stall Information (PSI) – The definitive source on tracking resource contention on modern Linux kernels.

- VMware Docs: vSphere Resource Management Guide – Official documentation on Memory Ballooning and Swapping mechanics.

- Nutanix Bible: AHV Architecture & Resource Scheduling – Deep dive into how Acropolis handles VM placement and NUMA.

- Brendan Gregg: Linux Load Averages: Solving the Mystery – Essential reading for understanding why load average is a demand metric, not a usage metric.

Editorial Integrity & Security Protocol

This technical deep-dive adheres to the Rack2Cloud Deterministic Integrity Standard. All benchmarks and security audits are derived from zero-trust validation protocols within our isolated lab environments. No vendor influence.

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.