Kubernetes ImagePullBackOff: It’s Not the Registry (It’s IAM)

The Lie

By 2026, when your pod hits an ImagePullBackOff, the registry is usually fine. The image tag is there, the repo is up—nothing is wrong on that end.

But here’s the kicker: your Kubernetes node is leading you on.

ImagePullBackOff is just Kubernetes’ way of saying, “I tried to pull the image, it didn’t work, and now I’m gonna wait longer before I try again.” It doesn’t tell you what really happened. The real issue? Your token died quietly in the background.

So you burn hours checking Docker Hub, thinking it’s down. Meanwhile, the actual problem is that your node’s IAM role can’t talk to the cloud provider’s authentication service.

What You Think Is Happening

You type kubectl get pods. You see the error and your mind jumps to the usual suspects:

- Maybe the image tag is off (was it v1.2 or v1.2.0?).

- Maybe the registry is down.

- Maybe Docker Hub is rate-limiting me.

But nope. If the registry were down, you’d see connection timeouts. If you are seeing ImagePullBackOff, it usually means the connection worked, but the authentication handshake failed.

What’s Actually Going On

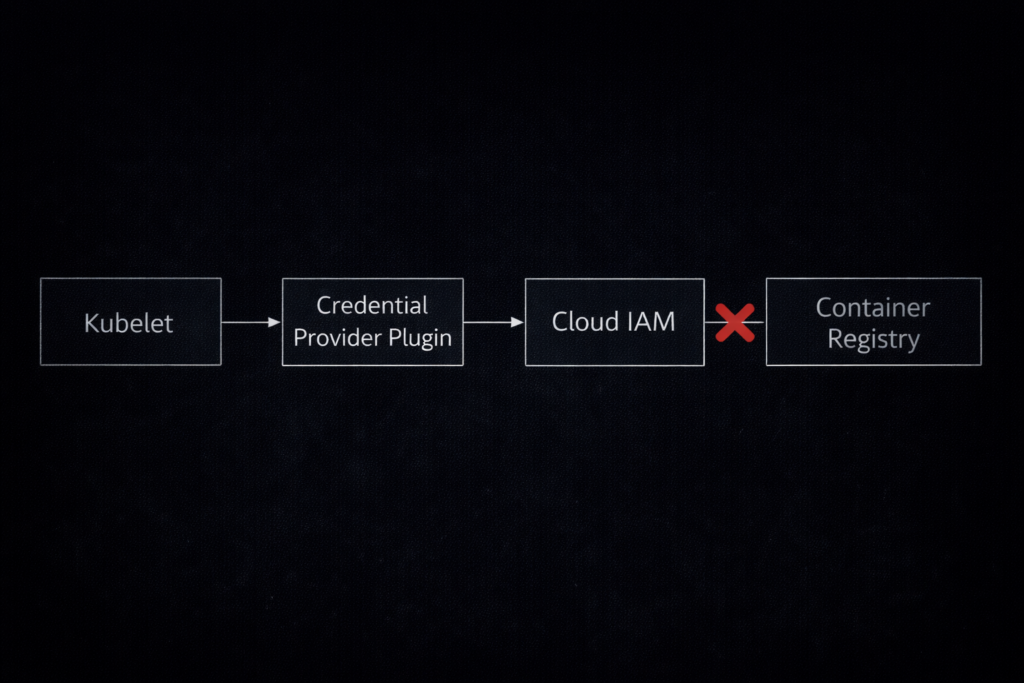

Forget the network. The problem lives in the Credential Provider.

Ever since Kubernetes kicked out the in-tree cloud providers (the “Great Decoupling”), kubelet doesn’t know how to talk to AWS ECR or Azure ACR by itself. Now it leans on an external helper: the Kubelet Credential Provider.

Here’s what happens when you pull an image:

- Request: Kubelet spots your image: 12345.dkr.ecr.us-east-1.amazonaws.com/app:v1.

- Exchange: It asks the Credential Provider plugin for a short-lived auth token from the cloud (think AWS IAM or Azure Entra ID).

- Validation: The cloud checks if your Node’s IAM Role is legit.

- Pull: With a valid token, kubelet passes it to the container runtime, which presents it to the registry.

But if the Validation step fails (maybe the token is expired, the clock is out of sync, or the instance metadata service is down), the registry throws back a 401 Unauthorized. Kubelet sees “pull failed” and gives you the generic error.
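A quick sanity check on that flow is whether kubelet was even started with a credential provider. The sketch below scans a kubelet command line for the two flags kubelet uses to locate the plugin, --image-credential-provider-config and --image-credential-provider-bin-dir. The helper names (has_flag, check_credential_provider) are hypothetical, and it assumes kubelet runs as a plain process whose arguments you can read with ps.

```shell
#!/usr/bin/env bash
# Sketch only: has_flag and check_credential_provider are illustrative names.

has_flag() {
  # $1 = full kubelet command line, $2 = flag name without its value.
  # Matches both "--flag=value" and "--flag value" forms.
  case " $1 " in
    *" $2="*|*" $2 "*) return 0 ;;
    *) return 1 ;;
  esac
}

check_credential_provider() {
  # Prints one line per flag; returns non-zero if either flag is absent.
  local cmdline="$1" missing=0 flag
  for flag in --image-credential-provider-config --image-credential-provider-bin-dir; do
    if has_flag "$cmdline" "$flag"; then
      echo "present: $flag"
    else
      echo "missing: $flag"
      missing=1
    fi
  done
  return "$missing"
}

# On a node (assumption: procps ps is available):
#   check_credential_provider "$(ps -o args= -C kubelet)"
```

If either flag is missing, kubelet has no plugin to ask, and every private pull on that node depends on imagePullSecrets alone.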

The 5-Minute Diagnostic Protocol

Stop guessing. Here’s how you get to the root of it fast.

Step 1: Look for Real Error Strings

Forget the status column. You want the actual error message.

Bash

kubectl describe pod <pod-name>

Keep an eye out for:

- The Smoking Gun: rpc error: code = Unknown desc = failed to authorize: failed to fetch anonymous token: unexpected status: 401 Unauthorized

  Translation: “I reached the registry, but my credentials didn’t work.”

- The “No Auth” Error: no basic auth credentials

  Translation: Kubelet didn’t even try to authenticate. Maybe your imagePullSecrets or ServiceAccount setup is missing.
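If you have more than a couple of pods to triage, you can bucket the event messages instead of reading them one by one. This is a rough sketch: classify_pull_error is a hypothetical helper, and apart from the two strings quoted above, the substrings it matches are assumptions about what runtimes commonly print, not an exhaustive list.

```shell
#!/usr/bin/env bash
# Sketch only: the substrings below are assumptions, extend them as needed.

classify_pull_error() {
  # $1 = one event message, e.g. from `kubectl describe pod`
  case "$1" in
    *"401 Unauthorized"*|*"403 Forbidden"*) echo "auth" ;;
    *"no basic auth credentials"*)          echo "no-credentials" ;;
    *"i/o timeout"*|*"connection refused"*|*"no such host"*) echo "network" ;;
    *"manifest unknown"*|*"not found"*)     echo "missing-image" ;;
    *) echo "unknown" ;;
  esac
}

# Usage against a live pod (POD is a variable you set yourself):
#   kubectl get events --field-selector involvedObject.name="$POD" \
#     -o jsonpath='{range .items[*]}{.message}{"\n"}{end}' |
#     while IFS= read -r msg; do classify_pull_error "$msg"; done
```

Anything that lands in "auth" or "no-credentials" is an identity problem; only "network" and "missing-image" justify looking at the registry itself.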

Step 2: Test Node Identity Directly

If kubectl isn’t clear, skip Kubernetes and SSH into the node.

You’re likely running containerd (dockershim was removed in Kubernetes 1.24), so skip docker pull. Use crictl instead. (See our guide on Kubernetes Node Density for why containerd matters).

Bash

# SSH into the node

crictl pull <your-registry>/<image>:<tag>

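- If crictl works: The node’s IAM setup is fine. The problem is in your Kubernetes ServiceAccount or Secret.

- If crictl fails: The node itself is misconfigured; it could be IAM or network.

One caveat: crictl talks to the runtime directly and never calls the kubelet credential provider. Against a private registry, pass credentials minted from the node’s own identity with --creds; a bare pull that fails with an auth error proves nothing on its own.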
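For ECR, one way to run that test with the node’s own identity is to mint a registry password with the AWS CLI and hand it to crictl. This is a sketch, not the only approach: ecr_region and pull_with_node_identity are hypothetical helpers, it assumes the AWS CLI is installed on the node, and the hostname parsing only handles the standard <account>.dkr.ecr.<region>.amazonaws.com form.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are illustrative; assumes aws and crictl are on PATH.

ecr_region() {
  # Extract the region from <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>
  local host="${1%%/*}"
  case "$host" in
    *.dkr.ecr.*.amazonaws.com)
      host="${host#*.dkr.ecr.}"
      echo "${host%.amazonaws.com}"
      ;;
    *) return 1 ;;
  esac
}

pull_with_node_identity() {
  # $1 = full image reference. Uses whatever AWS credentials the node exposes
  # (normally the instance profile) to obtain a short-lived ECR password.
  local image="$1" region
  region="$(ecr_region "$image")" || { echo "not an ECR image: $image" >&2; return 2; }
  crictl pull --creds "AWS:$(aws ecr get-login-password --region "$region")" "$image"
}

# Usage on the node:
#   pull_with_node_identity 12345.dkr.ecr.us-east-1.amazonaws.com/app:v1
```

If get-login-password itself fails, you have your answer before the pull even starts: the node cannot obtain a token.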

Step 3: Check Containerd Logs

If crictl fails, dig into the runtime logs. That’s where you’ll find the real error details.

Bash

journalctl -u containerd --no-pager | grep -i "failed to pull"

Step 4: Double-Check IAM Policies

Make sure your node’s IAM role really has permission to read from the registry.

- AWS: Look for ecr:GetAuthorizationToken, ecr:BatchGetImage, and ecr:GetDownloadUrlForLayer.

- Azure: Make sure the AcrPull role is assigned to the Kubelet Identity.

Cloud-Specific Headaches

AWS EKS: The Instance Profile Trap

You get random 401s on some nodes, but not others.

- The Cause: Usually, the node’s Instance Profile is missing the AmazonEC2ContainerRegistryReadOnly policy.

- The Fix: Attach it, and you’re good.
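To confirm the diagnosis before attaching anything, list the managed policies on the node role and look for the name. A minimal sketch: has_ecr_readonly is a hypothetical helper, and NODE_ROLE stands for your node group’s IAM role name, which you have to look up yourself.

```shell
#!/usr/bin/env bash
# Sketch only: has_ecr_readonly is an illustrative name.

has_ecr_readonly() {
  # Reads whitespace-separated policy names on stdin; succeeds if the
  # AWS-managed ECR read-only policy is among them.
  tr -s '[:space:]' '\n' | grep -qx 'AmazonEC2ContainerRegistryReadOnly'
}

# Check (NODE_ROLE is an assumption, set it to your node role name):
#   aws iam list-attached-role-policies --role-name "$NODE_ROLE" \
#     --query 'AttachedPolicies[].PolicyName' --output text | has_ecr_readonly \
#     && echo "policy attached" || echo "policy missing"
#
# Fix:
#   aws iam attach-role-policy --role-name "$NODE_ROLE" \
#     --policy-arn arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly
```

Run the check once per node group: "some nodes but not others" usually means the groups use different roles.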

Azure AKS: Propagation Delay

You spin up a cluster with Terraform, deploy right away, and it fails.

- The Cause: Azure role assignments (AcrPull) can take up to 10 minutes to show up everywhere.

- The Fix: Just wait, or verify the identity manually:

Bash

az aks show -n <cluster> -g <rg> --query "identityProfile.kubeletidentity.clientId"

Google GKE: Scope Mismatch

You’re sure the Service Account is right, but you still get 403 Forbidden.

- The Cause: If the VM was made with the default Access Scopes (Storage Read Only), it literally can’t talk to the Artifact Registry API.

- The Fix: You need Workload Identity, or you need to recreate the node pool with the cloud-platform scope.
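You can read the scopes a node actually has from the metadata server instead of trusting the Terraform. A small sketch, run from the node: has_cloud_platform_scope is a hypothetical helper, and it assumes the node uses the "default" service account entry on the metadata server.

```shell
#!/usr/bin/env bash
# Sketch only: has_cloud_platform_scope is an illustrative name.

has_cloud_platform_scope() {
  # Reads one scope URL per line on stdin, as the metadata server returns them.
  grep -qx 'https://www.googleapis.com/auth/cloud-platform'
}

# On the GKE node:
#   curl -s -H 'Metadata-Flavor: Google' \
#     'http://metadata.google.internal/computeMetadata/v1/instance/service-accounts/default/scopes' \
#     | has_cloud_platform_scope && echo "cloud-platform scope present" \
#     || echo "restricted scopes"
```

Scopes are fixed when the node pool is created, which is why the fix is a new pool rather than an edit.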

The 2026 Failure Pattern: Token TTL & Clock Drift

This is where senior engineers get tripped up. Cloud credentials don’t last long anymore.

- AWS: ECR authorization tokens expire after 12 hours.

- GCP: Metadata server access tokens expire after about 1 hour.

Now, if your node’s clock drifts (maybe NTP broke), or if the Instance Metadata Service (IMDS) gets swamped, kubelet can’t refresh the token.

Suddenly, a node that’s been fine for 12 hours starts rejecting new pods with ImagePullBackOff.

How do you catch it? Keep an eye on node-problem-detector for NTP and IMDS issues.
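If you don’t have node-problem-detector wired up yet, you can spot-check a suspect node by hand. The sketch below compares the node clock with a reference time and flags drift beyond a tolerance. The function names are hypothetical and the 300-second default is an assumption, so pick whatever your provider actually enforces.

```shell
#!/usr/bin/env bash
# Sketch only: helper names and the 300 s default tolerance are assumptions.

clock_skew_seconds() {
  # $1 = local epoch seconds, $2 = reference epoch seconds; prints |$1 - $2|
  local d=$(( $1 - $2 ))
  echo "${d#-}"
}

skew_ok() {
  # $3 = tolerated skew in seconds (defaults to 300)
  [ "$(clock_skew_seconds "$1" "$2")" -le "${3:-300}" ]
}

# On the node (systemd hosts): is NTP in sync at all?
#   timedatectl show -p NTPSynchronized --value
#
# On AWS: is IMDS answering? (IMDSv2 session token request)
#   curl -s -X PUT 'http://169.254.169.254/latest/api/token' \
#     -H 'X-aws-ec2-metadata-token-ttl-seconds: 60' --max-time 2
#
# Compare against a reference you trust (REF_EPOCH is yours to supply):
#   skew_ok "$(date +%s)" "$REF_EPOCH" || echo "clock drift beyond tolerance"
```

A node that fails either check will look healthy until its cached token runs out, which is exactly the delayed failure described above.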

The Private Networking Trap

If your IAM is perfect but pulls still fail, check your network setup.

A lot of architects lock down outbound internet traffic. As we discussed in Vendor Lock-In Happens Through Networking, if you are using AWS PrivateLink or Azure Private Endpoints, a misconfigured VPC Endpoint policy might just be silently dropping your traffic.

The Test: Run this from the node:

Bash

curl -v https://<your-registry-endpoint>/v2/

- Result: Hangs / Timeout → Networking Issue. (Security Group / PrivateLink missing).

- Result: 401 Unauthorized → IAM Issue. (Network is fine, Auth is wrong).

- Result: 200 OK → The registry is reachable and answered without an auth challenge. (You likely have a typo in the image name or tag).
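The same three-way split is easy to script so you can run it across a fleet. A minimal sketch: classify_registry_probe and probe_registry are hypothetical names, the 10-second timeout is an assumption, and treating 403 the same as 401 is my addition to the table above.

```shell
#!/usr/bin/env bash
# Sketch only: helper names and the timeout value are assumptions.

classify_registry_probe() {
  # $1 = HTTP status code as printed by curl's %{http_code}
  case "$1" in
    000)     echo "network" ;;    # curl prints 000 when no HTTP response arrived
    401|403) echo "iam" ;;        # reached the registry, credentials rejected
    200)     echo "reachable" ;;  # registry answered; check image name and tag
    *)       echo "other" ;;
  esac
}

probe_registry() {
  # $1 = registry hostname, e.g. 12345.dkr.ecr.us-east-1.amazonaws.com
  local code
  code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 10 "https://$1/v2/" || true)"
  classify_registry_probe "$code"
}

# Usage on the node:
#   probe_registry 12345.dkr.ecr.us-east-1.amazonaws.com
```

Keeping the classification separate from the curl call means you can feed it status codes collected by other tooling as well.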

Production Hardening Checklist

Don’t just fix it. Proof it.

Final Thought

ImagePullBackOff is rarely a “Docker” problem. It is almost always an “Identity” problem.

If you are debugging this by staring at the Docker Hub UI, you are looking at the wrong map. Stop checking the destination. Start auditing the handshake.

Series Context

- Part 1: The Dependency Trap (Why shared services kill availability).

- Part 2: Identity is the New Firewall (Why IAM is the root of all failures).

- Part 3: Vendor Lock-In Happens Through Networking (Why private endpoints trap you).

- Current: Kubernetes ImagePullBackOff (The invisible IAM failure).

Additional Resources

- Kubernetes Docs: Pull an Image from a Private Registry (Official spec on imagePullSecrets).

- AWS Containers: Amazon ECR Interface VPC Endpoints (The networking requirements).

- Azure Engineering: Authenticate with ACR from AKS (Troubleshooting Managed Identities).
