It’s Not DNS (It’s MTU): Debugging Kubernetes Ingress

This is Part 3 of the Rack2Cloud Diagnostic Series, where we debug the silent killers of Kubernetes reliability.

- Part 1: ImagePullBackOff: It’s Not the Registry (It’s IAM)

- Part 2: The Scheduler is Stuck: Debugging Pending Pods

- Part 3: It’s Not DNS (It’s MTU): Debugging Ingress (You are here)

- Part 4: Storage Has Gravity (Debugging PVCs)

You deploy your application. The pods are Running. kubectl port-forward works perfectly. You open the public URL, expecting success.

502 Bad Gateway.

Or maybe:

- 504 Gateway Timeout

- upstream prematurely closed connection

- connection reset by peer

- no healthy upstream

Welcome to the Networking Illusion. In Kubernetes, “Network Connectivity” is a stack of lies. The error message you see is rarely the error that happened.

This is the Rack2Cloud Guide to debugging the space between the Internet and your Pod.

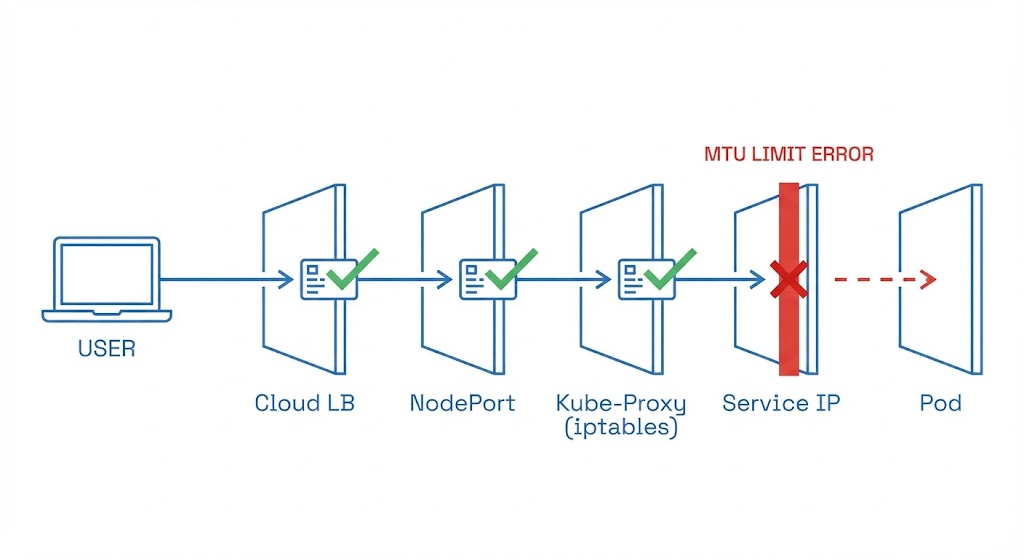

The False Reality Stack (The “Bridge” Lie)

Engineers often think of Ingress as a simple bridge: Traffic hits the Load Balancer and goes to the Pod.

Wrong. Ingress is five different networks pretending to be one. If any single handshake fails, the whole chain collapses, and the Load Balancer returns a generic 502.

The Real Path:

- Client → Cloud Load Balancer (AWS ALB/Google GLB)

- LB → NodePort (The VM’s physical port)

- NodePort → kube-proxy / iptables (The Linux Kernel routing)

- iptables → Service IP (Virtual Cluster IP)

- Service → Pod (The Overlay Network)

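If port-forward works, you know the Pod itself is fine. port-forward tunnels through the API server and the kubelet straight into the Pod, skipping the Load Balancer, NodePort, kube-proxy, and Service hops. The problem is somewhere in the hops it skipped.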

Health Check Mismatch (The #1 Cause of Outages)

Your app is running, but the Load Balancer thinks it’s dead.

The Trap: In NodePort (instance) mode, Cloud Load Balancers do not check your Pod. They check the NodePort on each node. The Kubernetes readinessProbe checks the Pod from inside the cluster. These are two different universes.

The Failure Scenario:

- Pod: Returns 200 OK at /health/api.

- Ingress Controller: Configured to check /.

- Result: The App returns 404 at /. The Load Balancer sees 404, marks the backend as “Unhealthy,” and stops routing traffic to it.

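The Fix: Ensure your livenessProbe, readinessProbe, and the Load Balancer’s health check path all point to the same endpoint that returns a 200. Where that path is configured depends on the controller: for example, the alb.ingress.kubernetes.io/healthcheck-path annotation on AWS, or the healthCheck.requestPath field of a BackendConfig on GKE.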
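To see the mismatch from the Load Balancer’s point of view, a minimal sketch like the one below requests several paths from the same backend and reports what a health checker would conclude. It assumes the Load Balancer counts only HTTP 200 as healthy (a common default; AWS ALB, for one, lets you widen the accepted codes), and lb_verdict and check_paths are hypothetical helper names, not part of any tool.

```bash
#!/usr/bin/env bash
# Hypothetical helpers, not part of any tool.
# Assumption: the Load Balancer counts only HTTP 200 as healthy.
lb_verdict() {
  if [ "$1" = "200" ]; then
    echo "Healthy"
  else
    echo "Unhealthy ($1)"
  fi
}

# Request each path from the same backend and print the verdict for it.
# curl writes 000 as the status code when no HTTP response arrives at all.
# Usage: check_paths <base-url> <path>...
check_paths() {
  local base="$1" path code
  shift
  for path in "$@"; do
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "${base}${path}") || true
    echo "${path}: $(lb_verdict "$code")"
  done
}

# Example against a NodePort (placeholders), comparing the app's path with the LB's path:
# check_paths http://<node-ip>:<node-port> /health/api /
```

In the failure scenario above, you would expect /health/api to come back Healthy and / to come back Unhealthy (404).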

The Host Header Trap (The Classic Engineer Mistake)

You try to debug by curling the Load Balancer IP directly. It fails. You panic.

The Command You Ran:

Bash

curl http://35.192.x.x

> 404 Not Found

The Reality: Your Ingress Controller (likely NGINX) is a “Virtual Host” router. It looks at the Host header to decide where to send traffic. If you hit the IP directly, the Host header is just the IP, no rule matches it, and the request falls through to the default backend, which returns 404.

The Fix: You must spoof the header to test the route:

Bash

curl -H "Host: example.com" http://35.192.x.x

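If this works, the network path and the Ingress routing are fine. What remains is a DNS problem: a missing record, a record pointing at the wrong IP, or a change that has not propagated yet.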
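The Host header trick has a blind spot with HTTPS: -H changes only the HTTP header, not the TLS SNI name, so the controller may present the wrong certificate or fail the handshake. curl’s --resolve option pins a hostname to a chosen IP for a single request, which keeps the Host header and SNI consistent. A minimal sketch, assuming the Ingress serves HTTPS on port 443; resolve_arg and curl_via_lb are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; assumption: the Ingress serves HTTPS on port 443.

# Build a curl --resolve entry in its host:port:address format.
resolve_arg() {
  printf '%s:%s:%s\n' "$1" "$2" "$3"
}

# Send the request to a specific Load Balancer IP while keeping the real hostname
# in the URL, so both the Host header and the TLS SNI match the Ingress rule.
# Usage: curl_via_lb <host> <lb-ip> [path]
curl_via_lb() {
  local host="$1" lb_ip="$2" path="${3:-/}"
  curl -sv --resolve "$(resolve_arg "$host" 443 "$lb_ip")" "https://${host}${path}"
}

# Example (placeholder IP):
# curl_via_lb example.com 35.192.x.x /
```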

The MTU Blackhole (The Silent Killer)

This is the differentiator between a junior admin and a Senior SRE. Everything works for small requests (login, health checks). But a large JSON response hangs forever.

The Cause: Many Kubernetes CNI plugins build an Overlay Network (like VXLAN or Geneve). These protocols wrap your data in extra headers: 50 bytes for VXLAN over IPv4, and at least as much for Geneve.

- Standard Ethernet MTU: 1500 bytes.

- Overlay Packet: 1550 bytes.

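When this fat packet tries to leave the node, the physical network says “Too Big.” Normally, the packet would be fragmented, or the sender would be told to use smaller packets via an ICMP “Fragmentation Needed” message (Path MTU Discovery). But many cloud networks and firewalls drop fragments or filter those ICMP messages, so the oversized packet silently disappears.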
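The arithmetic behind these numbers is worth spelling out, because the same subtraction gives both the safe Pod MTU and the payload size to use when probing the path with a Don’t Fragment ping (ping -M do -s on Linux). A minimal sketch, assuming VXLAN over an IPv4 underlay with a 1500-byte MTU; inner_mtu and ping_payload are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Assumption: VXLAN over an IPv4 underlay with a standard 1500-byte MTU.
# Outer IPv4 header (20) + outer UDP header (8) + VXLAN header (8)
# + the encapsulated inner Ethernet header (14) = 50 bytes of overhead.
VXLAN_OVERHEAD=$((20 + 8 + 8 + 14))
UNDERLAY_MTU=1500

# Largest IP packet a Pod can send without the encapsulated packet exceeding the underlay MTU.
inner_mtu() {
  echo $(( $1 - $2 ))
}

# ICMP payload size that yields an IPv4 packet of exactly <mtu> bytes
# (minus the 20-byte IPv4 header and the 8-byte ICMP header), for use with ping -s.
ping_payload() {
  echo $(( $1 - 28 ))
}

POD_MTU=$(inner_mtu "$UNDERLAY_MTU" "$VXLAN_OVERHEAD")
echo "VXLAN overhead: ${VXLAN_OVERHEAD}"
echo "Safe Pod MTU: ${POD_MTU}"
echo "ping -s for a ${UNDERLAY_MTU}-byte packet: $(ping_payload "$UNDERLAY_MTU")"
echo "ping -s for a ${POD_MTU}-byte packet: $(ping_payload "$POD_MTU")"
```

The same subtraction works for other encapsulations; only the overhead changes (Geneve, for example, adds its options on top of the same 50 bytes).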

The Symptom:

curl works for small data. curl times out for large data.

The Test:

Bash

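# Probe the path with the Don't Fragment bit set (Linux iputils ping):
# 1472 bytes of payload + 28 bytes of ICMP/IPv4 headers = a 1500-byte packet
ping -M do -s 1472 -c 3 <remote-pod-ip>

# Small response: completes even when large packets are being dropped
curl -sS -o /dev/null -w '%{http_code}\n' https://site.com

# Large response: hangs until the timeout if large packets are blackholed
curl -v --max-time 15 https://site.com/large-json-endpoint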

The Fix: Lower the MTU on your CNI (Calico/Flannel/Cilium) to 1450 (1500 minus the 50-byte VXLAN overhead), or enable “Jumbo Frames” on your VPC so the underlay has room for the extra headers.
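Before changing the CNI, it helps to confirm what MTU the Pods actually have. A minimal sketch, assuming it runs inside a Pod’s network namespace where /sys/class/net is readable; list_mtus and check_overlay_mtu are hypothetical helper names, and the optional directory argument exists only so the logic can be tried against a fake sysfs tree.

```bash
#!/usr/bin/env bash
# Hypothetical helpers. Print "<interface> <mtu>" for every interface under a sysfs net directory.
list_mtus() {
  local root="${1:-/sys/class/net}" dev
  for dev in "$root"/*; do
    [ -r "$dev/mtu" ] || continue
    printf '%s %s\n' "${dev##*/}" "$(cat "$dev/mtu")"
  done
}

# Flag Pod interfaces whose MTU leaves no room for the encapsulation overhead.
# Assumption: run inside the Pod; on the node itself the physical NIC
# legitimately uses the full underlay MTU and would be flagged.
# Usage: check_overlay_mtu <underlay-mtu> <overhead> [sysfs-net-dir]
check_overlay_mtu() {
  local max=$(( $1 - $2 )) root="${3:-/sys/class/net}" name mtu
  list_mtus "$root" | while read -r name mtu; do
    [ "$name" = "lo" ] && continue   # loopback traffic never leaves the Pod
    if [ "$mtu" -gt "$max" ]; then
      echo "TOO_BIG ${name} ${mtu} > ${max}"
    else
      echo "OK ${name} ${mtu}"
    fi
  done
}

# Quick manual equivalent: kubectl exec <pod> -- cat /sys/class/net/eth0/mtu
# For VXLAN on a 1500-byte underlay: check_overlay_mtu 1500 50
```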

TLS Termination Mismatch

You see this error in your Ingress Controller logs: SSL routines:ssl3_get_record:wrong version number

The Cause: You are trying to speak HTTPS to an application that expects HTTP (or vice versa).

- Scenario A: The Load Balancer terminates TLS (decrypts it) and sends plain HTTP to the pod. But your Pod is configured to expect HTTPS.

- Scenario B: Your Pod serves HTTPS (“Double Encryption”), but the Ingress annotation that tells the controller to speak HTTPS to the backend is missing, so it sends plain HTTP.

The Fix: Check your Ingress annotations. For NGINX:

YAML

nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
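To find out which protocol the backend really speaks, probe it directly from inside the cluster with both schemes. A minimal sketch: probe and classify_backend are hypothetical helper names, -k is used because Pod certificates are often self-signed, and the classification relies on curl reporting 000 when no HTTP response comes back (for example, when a TLS handshake hits a plain-HTTP port).

```bash
#!/usr/bin/env bash
# Hypothetical helpers.
# Print the HTTP status for a URL, or 000 if no HTTP response was received.
probe() {
  curl -sk -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

# Decide which backend-protocol the Ingress should use, given both probe results.
# Usage: classify_backend <http-status> <https-status>
classify_backend() {
  local http_code="$1" https_code="$2"
  if [ "$https_code" != "000" ]; then
    echo "HTTPS"        # TLS handshake succeeded: set backend-protocol to "HTTPS"
  elif [ "$http_code" != "000" ]; then
    echo "HTTP"         # TLS failed but plain HTTP answered: keep the default (HTTP)
  else
    echo "UNREACHABLE"  # Neither answered: go back to the Service and its endpoints
  fi
}

# Example from a debug pod (placeholder address and port):
# classify_backend "$(probe http://10.2.4.5:8443/)" "$(probe https://10.2.4.5:8443/)"
```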

Advanced: Connection Tracking (Conntrack) Exhaustion

This is for the high-traffic war rooms. You see random 502 errors during peak load, but CPU/Memory are fine.

Linux uses a table called conntrack to track connections, including the NAT entries kube-proxy relies on. If you have massive numbers of short-lived connections (like PHP/Node.js without keep-alive), this table fills up and new connections are dropped.

The Log:

Bash

dmesg | grep conntrack

> nf_conntrack: table full, dropping packet

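The Fix: Raise the limit with sysctl (net.netfilter.nf_conntrack_max) or enable HTTP Keep-Alive to reuse connections. On Kubernetes nodes, kube-proxy can set this limit itself at startup (see its --conntrack-max-per-core and --conntrack-min flags), so check its configuration as well as the node sysctl.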
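To catch the table filling up before the kernel starts dropping packets, compare the live entry count with the limit. A minimal sketch, assuming it runs on the node (or in a Pod with access to the host’s /proc); conntrack_status is a hypothetical helper, and the 75% and 90% thresholds are arbitrary illustration values, not kernel defaults.

```bash
#!/usr/bin/env bash
# Hypothetical helper: classify conntrack usage from the entry count and the table limit.
# The 75% / 90% thresholds are illustrative assumptions.
# Usage: conntrack_status <count> <max>
conntrack_status() {
  local count="$1" max="$2" pct
  pct=$(( count * 100 / max ))
  if [ "$pct" -ge 90 ]; then
    echo "CRITICAL ${pct}% (${count}/${max})"
  elif [ "$pct" -ge 75 ]; then
    echo "WARNING ${pct}% (${count}/${max})"
  else
    echo "OK ${pct}% (${count}/${max})"
  fi
}

# On a node, read the live values from procfs:
# conntrack_status "$(cat /proc/sys/net/netfilter/nf_conntrack_count)" \
#                  "$(cat /proc/sys/net/netfilter/nf_conntrack_max)"
```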

The Rack2Cloud Diagnostic Protocol

Networking debugging is usually panic-driven. Stop guessing. Run these five phases in order. Every phase removes one possible universe of failure.

Phase 1: Inside the Cluster (The Control)

Goal: Prove the application is actually running and reachable internally.

Bash

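# Replace <debug-pod> with any pod that has curl installed

kubectl exec -it <debug-pod> -- curl -v http://<service-name>

# No debug pod? Start a throwaway one (deleted on exit):

kubectl run curl-client --rm -it --restart=Never --image=curlimages/curl -- curl -v http://<service-name>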

- ✅ Works? Your App and Service Discovery (DNS) are fine. Move to Phase 2.

- ❌ Fails? Stop looking at the Ingress. Your application is dead, or your Service is misconfigured.
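A common Phase 1 false alarm is calling a Service by its short name from a debug pod in a different namespace: the short name only resolves inside the Service’s own namespace. A minimal sketch that builds the fully qualified name instead; svc_fqdn and svc_url are hypothetical helpers, and cluster.local is an assumption (it is the usual default cluster domain, but clusters can be configured differently).

```bash
#!/usr/bin/env bash
# Hypothetical helpers.
# Build the fully qualified DNS name of a Service.
# Assumption: the cluster domain is cluster.local unless a third argument overrides it.
# Usage: svc_fqdn <service> <namespace> [cluster-domain]
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "${3:-cluster.local}"
}

# Build an http:// URL for the Service, with an optional Service port (not the targetPort).
# Usage: svc_url <service> <namespace> [port]
svc_url() {
  local host
  host=$(svc_fqdn "$1" "$2")
  if [ -n "${3:-}" ]; then
    printf 'http://%s:%s\n' "$host" "$3"
  else
    printf 'http://%s\n' "$host"
  fi
}

# Example from a debug pod in any namespace (placeholders):
# kubectl exec -it <debug-pod> -- curl -v "$(svc_url <service-name> <namespace>)"
```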

Phase 2: The Service Glue

Goal: Prove Kubernetes knows where your pods are.

Bash

kubectl get endpoints <service-name>

- ✅ Result: You see IP addresses listed (e.g., 10.2.4.5:80).

- ❌ Result: <none> or empty.

- The Fix: Your Service selector labels do not match your Pod labels, or the matching Pods are not Ready.
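If you would rather have the check fail loudly than eyeball an empty column, it can be scripted. A minimal sketch: endpoint_ips and check_endpoints are hypothetical helpers that read only the ready addresses through kubectl’s JSONPath output and, when there are none, print the two commands that expose a selector mismatch.

```bash
#!/usr/bin/env bash
# Hypothetical helpers built on standard kubectl JSONPath output.
# Print the ready endpoint IPs of a Service (space-separated; empty if none).
endpoint_ips() {
  kubectl get endpoints "$1" -o jsonpath='{.subsets[*].addresses[*].ip}'
}

# Usage: check_endpoints <service-name>
check_endpoints() {
  local svc="$1" ips
  ips=$(endpoint_ips "$svc")
  if [ -z "$ips" ]; then
    echo "FAIL: ${svc} has no ready endpoints. Compare the selector with the Pod labels:"
    echo "  kubectl get svc ${svc} -o jsonpath='{.spec.selector}'"
    echo "  kubectl get pods --show-labels"
    return 1
  fi
  echo "OK: ${svc} -> ${ips}"
}
```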

Phase 3: The NodePort (The Hardware Interface)

Goal: Prove traffic can enter the VM.

Bash

# Get the NodePort first

kubectl get svc <service-name>

# Get a node IP (INTERNAL-IP / EXTERNAL-IP columns)

kubectl get nodes -o wide

# Curl the Node IP directly

curl -v http://<node-ip>:<node-port>

- ✅ Works? kube-proxy and iptables are working.

- ❌ Fails? Your Cloud Firewall (AWS Security Group) is blocking the port, or kube-proxy has crashed.
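Phase 3 needs two values that are easy to mix up: the Service’s nodePort and a node address. A minimal sketch that pulls both with kubectl JSONPath queries; nodeport_url is a hypothetical helper, and it assumes a NodePort or LoadBalancer Service whose first port is the one you care about, plus a node whose InternalIP is reachable from where you run curl (query the ExternalIP type instead when testing from outside the VPC).

```bash
#!/usr/bin/env bash
# Hypothetical helper: build http://<node-ip>:<node-port> for a Service.
# Assumptions: the Service's first port is the relevant one, and the first
# node's InternalIP is reachable from this machine.
# Usage: nodeport_url <service-name>
nodeport_url() {
  local port ip
  port=$(kubectl get svc "$1" -o jsonpath='{.spec.ports[0].nodePort}')
  ip=$(kubectl get nodes -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')
  if [ -z "$port" ] || [ -z "$ip" ]; then
    echo "no nodePort or node IP found (is the Service type NodePort or LoadBalancer?)" >&2
    return 1
  fi
  echo "http://${ip}:${port}"
}

# Example:
# curl -v "$(nodeport_url <service-name>)"
```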

Phase 4: The Load Balancer (The Host Trap)

Goal: Prove the Ingress Controller is routing correctly.

Bash

# Do NOT just curl the IP. You must spoof the Host header.

curl -H "Host: example.com" http://<load-balancer-ip>

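- ✅ Works? The Ingress Controller is healthy.

- ❌ Fails (404/503)? A 404 means your Ingress host or path rules are wrong: the Controller received the packet but didn’t know who it was for. A 503 from ingress-nginx usually means a rule matched but the backend Service has no ready endpoints, so go back to Phase 2.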
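When the spoofed Host header still gets a 404, check it against the host rules the Ingress actually declares, including wildcard semantics: in Kubernetes, a rule like *.example.com matches exactly one extra DNS label, so it covers api.example.com but not example.com or a.b.example.com. A minimal sketch of that matching logic; host_matches and routed_by are hypothetical helper names, and path matching is left out.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; path rules are not checked.
# Succeed if <host> is matched by an Ingress rule's host field.
# Usage: host_matches <host> <rule-host>
host_matches() {
  local host="$1" rule="$2" suffix prefix
  case "$rule" in
    "") return 0 ;;                  # no host: the rule applies to every host
    '*.'*)
      suffix="${rule:2}"             # "*.example.com" -> "example.com"
      case "$host" in
        *".$suffix") ;;
        *) return 1 ;;
      esac
      prefix="${host%".$suffix"}"    # the part the wildcard has to cover
      # The wildcard covers exactly one non-empty label (no dots).
      [ -n "$prefix" ] && [ "${prefix#*.}" = "$prefix" ]
      ;;
    *) [ "$host" = "$rule" ] ;;
  esac
}

# Check a Host header against every rule host of a live Ingress.
# Note: rules without a host field (which match everything) do not show up in this query.
# Usage: routed_by <ingress-name> <host>
routed_by() {
  local -a rules
  local rule
  read -r -a rules <<< "$(kubectl get ingress "$1" -o jsonpath='{.spec.rules[*].host}')"
  for rule in "${rules[@]}"; do
    if host_matches "$2" "$rule"; then
      echo "matched rule host: ${rule}"
      return 0
    fi
  done
  echo "no host rule matches $2"
  return 1
}
```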

Phase 5: The Internet (The Silent Killers)

Goal: Prove the pipe is big enough.

Bash

# Test for MTU issues (Small header works?)

curl --head https://example.com

# Test for Fragmentation (Large body hangs?)

curl https://example.com/large-asset

- ❌ Fails only here? This is MTU (Packet drop), WAF (blocking request), or DNS propagation.
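To make the Phase 5 comparison repeatable, give both requests a hard timeout and compare curl’s exit codes: curl exits with 28 when --max-time expires, which is exactly what a blackholed large response looks like. A minimal sketch; run_probe and diagnose_pipe are hypothetical helpers, and the 15-second timeout is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Hypothetical helpers.
# Run a request with a hard timeout and print curl's exit code (0 = success, 28 = timed out).
# Usage: run_probe <curl-args...>
run_probe() {
  local rc=0
  curl -s -o /dev/null --max-time 15 "$@" || rc=$?
  echo "$rc"
}

# Interpret the small (headers only) and large (full body) results.
# Usage: diagnose_pipe <small-exit-code> <large-exit-code>
diagnose_pipe() {
  local small="$1" large="$2"
  if [ "$small" -ne 0 ]; then
    echo "Small request failed (curl exit ${small}): revisit Phase 4"
  elif [ "$large" -eq 0 ]; then
    echo "Both requests completed: the pipe looks fine"
  elif [ "$large" -eq 28 ]; then
    echo "Large request timed out: suspect an MTU blackhole"
  else
    echo "Large request failed (curl exit ${large}): check WAF rules and DNS"
  fi
}

# Example (placeholder URLs):
# diagnose_pipe "$(run_probe --head https://example.com)" "$(run_probe https://example.com/large-asset)"
```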

Summary: Networking Failures are Location Problems

When you see a 502, do not blame the code. Trace the packet.

- Check the Host Header.

- Check the MTU.

- Check the Conntrack table.

Next Up in Rack2Cloud: We finish the Diagnostic Series with Part 4: Storage Has Gravity—why your PVC is bound, but your pod is stuck in the wrong Availability Zone.



Editorial Integrity & Security Protocol

This technical deep-dive adheres to the Rack2Cloud Deterministic Integrity Standard. All benchmarks and security audits are derived from zero-trust validation protocols within our isolated lab environments. No vendor influence.

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.