Storage Has Gravity: Debugging PVCs & AZ Lock-in

Why Your Pod is Pending in Zone B When Your Disk is Stuck in Zone A

This is Part 4 of the Rack2Cloud Diagnostic Series, where we debug the silent killers of Kubernetes reliability.

- Part 1: ImagePullBackOff: It’s Not the Registry (It’s IAM)

- Part 2: The Scheduler is Stuck: Debugging Pending Pods

- Part 3: It’s Not DNS (It’s MTU): Debugging Ingress

- Part 4: Storage Has Gravity (You are here)

You have a StatefulSet—maybe it’s Postgres, Redis, or Jenkins. You drain a node for maintenance. The Pod tries to move somewhere else. And then… nothing. It just sits in Pending. Forever.

The error message might be the classic one:

1 node(s) had volume node affinity conflict

But at 3 AM, you might also see these terrors:

pod has unbound immediate PersistentVolumeClaims

persistentvolumeclaim "data-postgres-0" is not bound

failed to provision volume with StorageClass

Multi-Attach error for volume "pvc-xxxx": Volume is already used by node

Welcome to Cloud Physics.

We spend so much time treating containers like “cattle” (disposable, movable) that we forget one basic fact:

Data is not a container.

You can move a microservice in the blink of an eye. You cannot move a 1TB disk in the blink of an eye.

Today’s subject: Data Gravity.

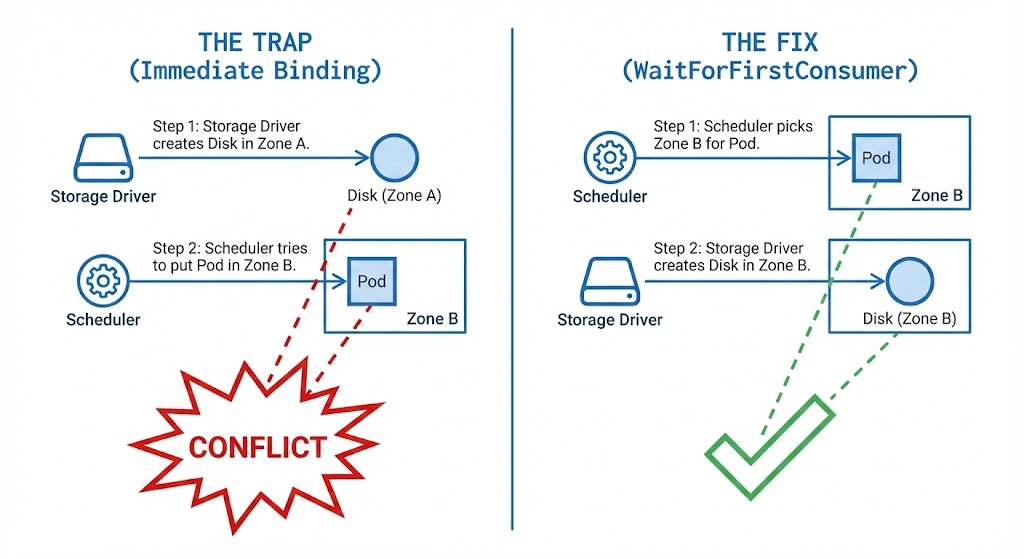

The Mental Model: The “Double Scheduler”

Most engineers think Kubernetes schedules a Pod once. Wrong. A Stateful Pod is effectively scheduled twice:

- Storage Scheduling: Where should the disk exist?

- Compute Scheduling: Where should the code run?

If these two decisions happen independently, you get a deadlock. The Storage Scheduler picks us-east-1a (because it has free disk space). The Compute Scheduler picks us-east-1b (because it has free CPU). Result: The Pod cannot start because the cable doesn’t reach.

The Trap: “Immediate” Binding

The #1 cause of storage lock-in is the StorageClass setting volumeBindingMode: Immediate, which is the default when the field is left unset.

Why does this even exist?

It exists for legacy reasons, and for storage that every node can reach (such as NFS) or single-zone clusters, where location doesn’t matter. But in a multi-zone cloud, it is a trap.

The Failure Chain:

- You create the PVC.

- The Storage Driver wakes up immediately. It thinks, “I need to make a disk. I’ll pick us-east-1a.”

- The Disk is created in 1a. It is now physically anchored there.

- You deploy the Pod.

- The Scheduler sees 1a is full of other apps, but 1b has free CPU.

- The Conflict: The Scheduler wants to put the Pod in 1b. But the disk is locked in 1a.

The Scheduler cannot move the Pod to the data (no CPU in 1a).

The Cloud Provider cannot move the data to the Pod (EBS volumes do not cross zones).
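To check whether your cluster is exposed to this trap, you can list the StorageClasses that still bind immediately. This is a minimal sketch that assumes kubectl is pointed at the right cluster; the function name `list_immediate_storageclasses` is hypothetical. It also treats a missing value as Immediate, since that is what the API defaults to.

```bash
# Hypothetical helper: print the names of StorageClasses whose
# volumeBindingMode is Immediate (or unset, which defaults to Immediate).
list_immediate_storageclasses() {
  kubectl get storageclass \
    -o custom-columns='NAME:.metadata.name,MODE:.volumeBindingMode' \
    --no-headers |
  awk '$2 == "Immediate" || $2 == "<none>" { print $1 }'
}
```

Any class it prints will provision a disk the moment a PVC is created, before the Scheduler has picked a zone for the Pod.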

The Fix: WaitForFirstConsumer

You have to teach the storage driver some patience.

Do not make the disk until the Scheduler picks a spot for the Pod.

This is what WaitForFirstConsumer does.

How it works:

- You create the PVC.

- Storage Driver: “I see the request, but I’m waiting.” (Status: Pending).

- You deploy the Pod.

- Scheduler: “I’m picking Node X in us-east-1b. Plenty of CPU there.”

- Storage Driver: “Alright, Pod’s going to 1b. I’ll make the disk in 1b.”

- Result: Success.
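In the StorageClass itself, the fix is a single field. The sketch below creates a new class from a heredoc so it stays in shell. The name `gp3-wffc` is hypothetical, and the `ebs.csi.aws.com` provisioner and `type: gp3` parameter assume the AWS EBS CSI driver; substitute your own driver's values.

```bash
# Hypothetical helper: create a StorageClass that delays provisioning
# until a Pod using the PVC has been scheduled.
# Assumes the AWS EBS CSI driver; the class name gp3-wffc is hypothetical.
apply_wffc_storageclass() {
  kubectl apply -f - <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp3-wffc
provisioner: ebs.csi.aws.com
parameters:
  type: gp3
volumeBindingMode: WaitForFirstConsumer
EOF
}
```

Call `apply_wffc_storageclass`, then reference `gp3-wffc` in the PVC's `storageClassName` (or in a StatefulSet's `volumeClaimTemplates`). volumeBindingMode cannot be changed on an existing StorageClass, which is why this creates a new one; volumes that already exist stay in the zone they were created in.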

The Hidden Trap:

This only works once.

WaitForFirstConsumer delays the creation of the disk. Once the disk is created in us-east-1b, gravity wins forever. If you later drain nodes for an upgrade and 1b is out of capacity, your Pod will not fail over to 1a. It will sit in Pending until capacity frees up in 1b.

The Multi-Attach Illusion (RWO vs RWX)

Engineers often assume, “If a node dies, the pod will just restart on another node immediately.”

This is the Multi-Attach Illusion.

Most cloud block storage (AWS EBS, Google PD, Azure Disk) is ReadWriteOnce (RWO).

This means the disk can only attach to one node at a time.

| Mode | What Engineers Think | The Reality |
| --- | --- | --- |
| RWO (ReadWriteOnce) | “My pod can move anywhere.” | Single-node lock (and, for cloud block disks, a zonal lock). The disk must detach from Node A before it can attach to Node B. |
| RWX (ReadWriteMany) | “Like a shared drive.” | Usually a network filesystem (NFS/EFS). Slower, but can be mounted by multiple nodes. |
| ROX (ReadOnlyMany) | “Read Replicas.” | Rarely used in production databases. |

If your node crashes hard, the cloud control plane might still think the disk is “attached” to the dead node.

Result: Multi-Attach error. The new Pod cannot start because the old node hasn’t released the lock.
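Kubernetes tracks the attachment behind this lock as a VolumeAttachment object, so you can ask which node still holds a disk. A minimal sketch, assuming the volume is attached through a CSI driver; `show_attachments` is a hypothetical helper name.

```bash
# Hypothetical helper: show which node(s) a PersistentVolume is attached to,
# according to the VolumeAttachment objects in the cluster.
# Usage: show_attachments <pv-name>
show_attachments() {
  local pv="$1"
  kubectl get volumeattachments \
    -o custom-columns='PV:.spec.source.persistentVolumeName,NODE:.spec.nodeName,ATTACHED:.status.attached' \
    --no-headers |
  awk -v pv="$pv" '$1 == pv { print $2, $3 }'
}
```

`show_attachments pvc-xxxx` prints the node name and attached flag for each VolumeAttachment that references that PV. If it names a node that is gone or NotReady, you have found the stale lock.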

The “Slow Restart” (Why DBs Take Forever)

You rescheduled the Pod. The node is in the right zone. But the Pod is stuck in ContainerCreating for 5 minutes.

Why?

The “Detach/Attach” dance is slow.

- Kubernetes tells the Cloud API: “Detach volume vol-123 from Node A.”

- Cloud API: “Working on it…” (Wait 1–3 minutes).

- Cloud API: “Detached.”

- Kubernetes tells the Cloud API: “Attach vol-123 to Node B.”

- Linux Kernel: “I see a new device. Mounting filesystem…”

- Database: “Replaying journal logs…”

This is not a bug. This is physics.
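You can watch this dance happen by pulling the Pod's volume events in time order. A minimal sketch; `volume_timeline` is a hypothetical name, and it keeps only events whose reason mentions attaching or mounting (such as SuccessfulAttachVolume, FailedAttachVolume or FailedMount). Events expire (after one hour by default), so run it soon after the restart.

```bash
# Hypothetical helper: list attach/mount events for one Pod, oldest first.
# The awk filter keeps rows whose REASON column mentions attaching or mounting.
# Usage: volume_timeline <pod-name> [namespace]
volume_timeline() {
  local pod="$1" ns="${2:-default}"
  kubectl get events -n "$ns" \
    --field-selector "involvedObject.kind=Pod,involvedObject.name=${pod}" \
    --sort-by=.lastTimestamp \
    -o custom-columns='TIME:.lastTimestamp,REASON:.reason,MESSAGE:.message' \
    --no-headers |
  awk '$2 ~ /AttachVolume|Mount/'
}
```

The gap between the Scheduled event and the attach event is the detach/attach time; anything after that is mount and database recovery.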

The Rack2Cloud Storage Triage Protocol

If your stateful pod is pending, stop guessing. Run this sequence to isolate the failure domain.

Phase 1: The Claim State

Goal: Is Kubernetes even trying to provision storage?

```bash
kubectl get pvc <pvc-name>
```

- ✅ Result: Status is Bound. Move to Phase 2.

- ❌ Result: Status is Pending.

- The Fix: Describe the PVC (kubectl describe pvc <name>). Look for “waiting for a volume to be created, either by external provisioner...” or cloud quota errors. If the StorageClass uses WaitForFirstConsumer, Pending is expected until a Pod that uses the claim is scheduled; the events will say “waiting for first consumer to be created before binding”.
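The two checks in Phase 1 can be combined so a Pending claim is read against its StorageClass binding mode. A minimal sketch; `check_claim` is a hypothetical helper, and it assumes the PVC names its StorageClass explicitly in `storageClassName`.

```bash
# Hypothetical helper: show a PVC's phase next to its StorageClass's
# volumeBindingMode, so a Pending claim can be read correctly.
# Usage: check_claim <pvc-name> [namespace]
check_claim() {
  local pvc="$1" ns="${2:-default}" phase sc mode
  phase="$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.status.phase}')"
  sc="$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.storageClassName}')"
  # StorageClasses are cluster-scoped, so no namespace flag here.
  mode="$(kubectl get storageclass "$sc" -o jsonpath='{.volumeBindingMode}' 2>/dev/null)"
  echo "pvc=${pvc} phase=${phase} storageclass=${sc:-none} mode=${mode:-unknown}"
  if [ "$phase" = "Pending" ] && [ "$mode" = "WaitForFirstConsumer" ]; then
    echo "Expected: the claim binds once a Pod that uses it is scheduled."
  elif [ "$phase" = "Pending" ]; then
    echo "Investigate: kubectl describe pvc ${pvc} -n ${ns} (provisioner or quota errors)."
  fi
}
```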

Phase 2: The Attachment Lock

Goal: Is an old node holding the disk hostage?

```bash
# The Events section is at the end of the describe output
kubectl describe pod <pod-name> | grep -A 20 Events
```

- ✅ Result: Normal scheduling events, eventually pulling image.

- ❌ Result: Multi-Attach error or Volume is already used by node.

- The Fix: The control plane thinks the disk is still attached to a dead node. Wait 6–10 minutes for the detach timeout to expire, or force-delete the old Pod on the dead node (kubectl delete pod <old-pod> --grace-period=0 --force) to break the lock.
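If you take the force-delete route, it is worth guarding it, because force deletion skips graceful shutdown: if the old node is actually alive, two Pods could end up writing to the same data. A minimal sketch; `force_delete_if_node_down` is a hypothetical helper that refuses unless the old Pod's node reports a Ready status other than True. Force deletion only removes the Pod object, and depending on your Kubernetes version the disk may still not be released until the detach timeout expires.

```bash
# Hypothetical helper: force-delete a Pod only if its node is not Ready.
# If the node is Ready, the Pod may still be running there, so refuse.
# Usage: force_delete_if_node_down <pod-name> [namespace]
force_delete_if_node_down() {
  local pod="$1" ns="${2:-default}" node ready
  node="$(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.nodeName}')"
  if [ -z "$node" ]; then
    echo "Pod ${pod} has no node assigned; nothing to release." >&2
    return 1
  fi
  ready="$(kubectl get node "$node" -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}')"
  if [ "$ready" = "True" ]; then
    echo "Node ${node} is Ready; refusing to force-delete ${pod}." >&2
    return 1
  fi
  kubectl delete pod "$pod" -n "$ns" --grace-period=0 --force
}
```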

Phase 3: The Zonal Lock (The Smoking Gun)

Goal: Are the physics impossible?

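```bash
# 1. Get the Pod's assigned node (if it made it past scheduling)
kubectl get pod <pod-name> -o wide

# 2. Get that node's zone
kubectl get node <node-name> -L topology.kubernetes.io/zone

# 3. Get the Volume's physical location (CSI-provisioned PVs usually
#    record the zone in nodeAffinity rather than in labels)
kubectl get pv <pv-name> -o jsonpath='{.spec.nodeAffinity.required.nodeSelectorTerms[*].matchExpressions[*].values[*]}'
```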

- ✅ Result: The Node and the PV are in the same zone (e.g., both us-east-1a).

- ❌ Result: Mismatch. The PV is in us-east-1a, but the Pod is trying to run on a Node in us-east-1b.

- The Fix: Deadlock. The Pod cannot run on that Node. You must drain the wrong node to force rescheduling, or ensure capacity exists in the correct zone.
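The Phase 3 comparison can be scripted so the two zones are checked side by side. A minimal sketch; `check_zones` is a hypothetical helper. It reads the PV's zone from nodeAffinity and, because the key differs between drivers (for example topology.ebs.csi.aws.com/zone for the AWS EBS CSI driver), it takes the first match expression whose key contains "zone". The node's zone comes from the standard topology.kubernetes.io/zone label.

```bash
# Hypothetical helper: compare the zone a PV is pinned to with the zone
# of the node its Pod was assigned to.
# Usage: check_zones <pod-name> <pv-name> [namespace]
check_zones() {
  local pod="$1" pv="$2" ns="${3:-default}" pv_zone node node_zone
  # Print each nodeAffinity match expression as key=values, then keep the
  # values of the first key that mentions "zone" (key names vary by driver).
  pv_zone="$(kubectl get pv "$pv" -o jsonpath='{range .spec.nodeAffinity.required.nodeSelectorTerms[*].matchExpressions[*]}{.key}{"="}{.values[*]}{"\n"}{end}' |
    awk -F= '$1 ~ /zone/ { print $2; exit }')"
  node="$(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.nodeName}')"
  if [ -z "$node" ]; then
    echo "pod=${pod} is unscheduled; pv=${pv} is pinned to ${pv_zone:-unknown}"
    return 0
  fi
  node_zone="$(kubectl get node "$node" -o jsonpath='{.metadata.labels.topology\.kubernetes\.io/zone}')"
  if [ "$pv_zone" = "$node_zone" ]; then
    echo "OK: node ${node} and pv ${pv} are both in ${node_zone}"
  else
    echo "MISMATCH: pv ${pv} is in ${pv_zone:-unknown}, node ${node} is in ${node_zone:-unknown}"
    return 1
  fi
}
```

If the Pod is still unscheduled, the helper just reports the PV's zone; take that zone straight into Phase 4.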

Phase 4: Infrastructure Availability

Goal: Does the required zone even have servers?

```bash
# Replace with the zone where your PV is stuck
kubectl get nodes -l topology.kubernetes.io/zone=us-east-1a
```

- ✅ Result: Nodes are listed and status is Ready.

- ❌ Result: No resources found, or all nodes are NotReady/SchedulingDisabled.

- The Fix: Your cluster has lost capacity in that specific zone. Check Cluster Autoscaler logs or cloud provider health dashboards for that AZ.
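For Phase 4, a plain count of usable nodes is often easier to read than the full node list. A minimal sketch; `ready_nodes_in_zone` is a hypothetical helper that counts nodes in the zone whose Ready condition is True and that are not cordoned (spec.unschedulable is not true).

```bash
# Hypothetical helper: count nodes in a zone that are Ready and not cordoned.
# Each line is "name unschedulable ready"; unschedulable is empty when unset.
# Usage: ready_nodes_in_zone us-east-1a
ready_nodes_in_zone() {
  local zone="$1"
  kubectl get nodes -l "topology.kubernetes.io/zone=${zone}" \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.unschedulable}{" "}{.status.conditions[?(@.type=="Ready")].status}{"\n"}{end}' |
  awk '$NF == "True" && $2 != "true" { n++ } END { print n + 0 }'
}
```

A result of 0 means the Pod has nowhere to go until capacity returns to that zone.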

Production Hardening Checklist

Don’t just fix it today. Prevent it tomorrow.

Summary: Respect the Physics

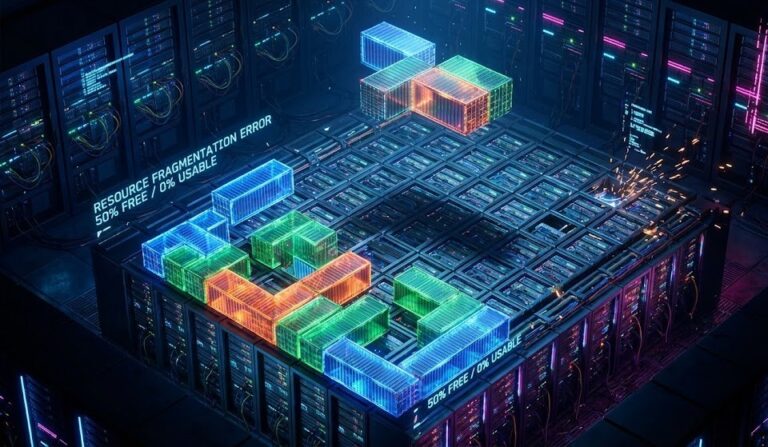

Stateless apps? They’re like water—they flow wherever there’s room. Stateful apps? Anchors. Once they drop, that’s where they stay.

- Use WaitForFirstConsumer to prevent “Day 1” fragmentation.

- Ensure your Auto Scaling Groups cover all zones, so there’s always a node available wherever your data lives.

- And please, never treat a database Pod like a web server.

This concludes the Rack2Cloud Diagnostic Series. Now that your Identity, Compute, Network, and Storage are solid, you’re ready to scale.

Next, we’ll zoom out and talk strategy: How do you survive Day 2 operations without drowning in YAML?

The Rack2Cloud Diagnostic Series (Complete)

- Part 1: ImagePullBackOff is a Lie: Debugging IAM Handshakes

- Part 2: Your Cluster Isn’t Full: Debugging the Scheduler

- Part 3: It’s Not DNS (It’s MTU): Debugging Ingress

- Part 4: Storage Has Gravity (This Article)

Additional Resources

- Kubernetes Storage Classes (Official Docs)

- Allowed Topologies & Volume Binding Mode

- AWS EBS CSI Driver Documentation

Editorial Integrity & Security Protocol

This technical deep-dive adheres to the Rack2Cloud Deterministic Integrity Standard. All benchmarks and security audits are derived from zero-trust validation protocols within our isolated lab environments. No vendor influence.

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.