Azure Landing Zone Refactors: The Hub-and-Spoke Reality Check

This technical deep-dive has passed the Rack2Cloud 3-Stage Vetting Process: Lab-Validated, Peer-Challenged, and Document-Anchored. No vendor marketing influence. See our Editorial Guidelines.

A landing zone built for day one rarely survives day 500.

Refactoring to hub-and-spoke can be zero-downtime, provided you treat network and identity as migrations, not rebuilds. In practice, Azure Policy drift, Private Link sprawl, and custom role creep are usually the first visible symptoms of landing zone entropy.

And here’s the uncomfortable truth:

The “right” refactor depends entirely on your subscription governance model. Blindly copying Microsoft’s reference templates is how you end up with a beautifully documented failure.

Before touching anything, you must model CapEx vs OpEx. Managed firewalls, routing layers, and security services become long-term OpEx anchors if you’re not intentional.

Key Takeaways

- Entropy Is Inevitable: Policy drift, Private Link chaos, and custom RBAC sprawl are early warning signs.

- Zero-Downtime Is Possible: Treat network and identity as migrations, not rebuilds.

- Context Beats Templates: The Cloud Adoption Framework (CAF) is a baseline, not a blueprint.

- Cost Matters: Managed hubs and security layers are architectural and financial commitments.

The Problem You Don’t See Until Scale

I’ve lost count of how many times I’ve walked into an environment where the “cloud foundation” was a single resource group doing all the heavy lifting. One client proudly said they had a “complete landing zone.”

In reality? A hub VNet with peered spokes, no enforced naming policy, and 40 public IPs under developers’ personal accounts.

That’s not a landing zone — that’s technical debt waiting for an audit.

Refactoring in Azure isn’t glamorous. It’s like replacing your car’s wiring harness while driving 70 mph. But with a solid cloud strategy, you can realign governance, routing, and identity without outages or redeployments.

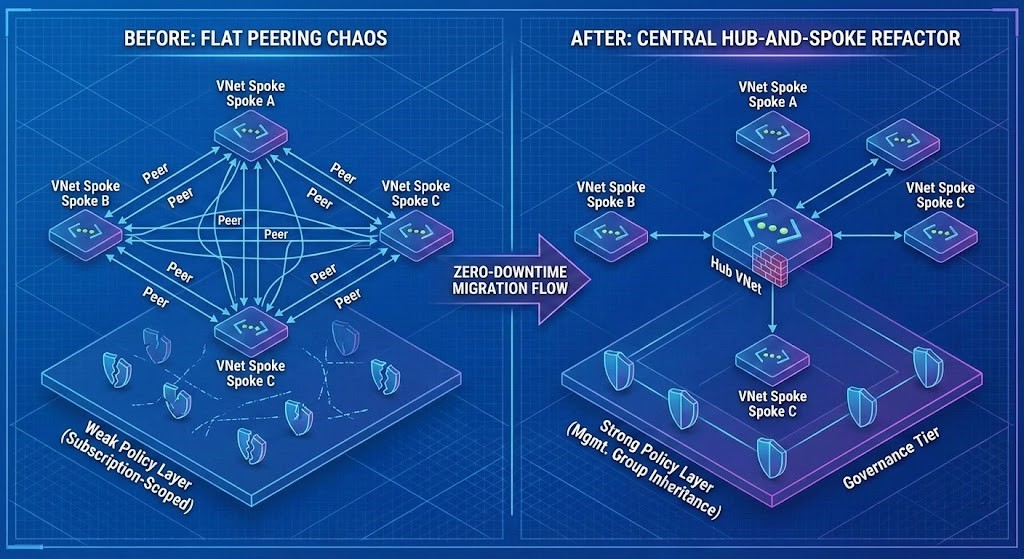

Network Refactor: Flattening the Spaghetti

Organic growth creates asymmetric peering, overlapping address spaces, orphaned DNS zones, and route collisions. True hub-and-spoke compliance means re-anchoring connectivity through centralized control points and clean subscription scoping.
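Overlapping address spaces are the easiest of these problems to catch before a refactor, because Azure refuses to peer VNets whose ranges overlap. The sketch below uses only Python's standard `ipaddress` module; the VNet names and prefixes are hypothetical, and in practice the inventory would come from your IaC state or an Azure Resource Graph export.

```python
import ipaddress
from itertools import combinations


def find_overlaps(vnets: dict[str, list[str]]) -> list[tuple[str, str, str, str]]:
    """Return (vnet_a, prefix_a, vnet_b, prefix_b) for every overlapping
    prefix pair found between two different VNets."""
    parsed = {
        name: [ipaddress.ip_network(p) for p in prefixes]
        for name, prefixes in vnets.items()
    }
    overlaps = []
    # Compare each pair of VNets once; sorting keeps the output order stable.
    for (a, nets_a), (b, nets_b) in combinations(sorted(parsed.items()), 2):
        for na in nets_a:
            for nb in nets_b:
                if na.overlaps(nb):
                    overlaps.append((a, str(na), b, str(nb)))
    return overlaps


if __name__ == "__main__":
    # Hypothetical inventory, not taken from a real tenant.
    inventory = {
        "vnet-hub": ["10.0.0.0/22"],
        "vnet-app1": ["10.1.0.0/24"],
        "vnet-app2": ["10.1.0.128/25", "10.2.0.0/24"],
        "vnet-legacy": ["10.0.2.0/24"],
    }
    for a, pa, b, pb in find_overlaps(inventory):
        print(f"{a} {pa} overlaps {b} {pb}")
```

With this inventory the sketch reports two collisions: `vnet-app1`/`vnet-app2` and `vnet-hub`/`vnet-legacy`. Both have to be re-addressed before either pair can share a hub.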

Flat Peering vs. Compliant Hub-and-Spoke

| Metric | Flat Peering Model | Compliant Hub-and-Spoke | Why It Matters |
| --- | --- | --- | --- |
| Connectivity Control | Peer-to-peer | Central via Firewall/Hub | Enables deterministic routing & inspection |
| DNS Propagation | Manual zones | Central Private Resolver | Reduces resolution drift |
| Policy Compliance | Subscription-scoped | Org-level inheritance | Prevents shadow policy assignments |
| Cost Model | Low CapEx, high OpEx | Higher upfront, predictable OpEx | Hub costs amortized across tenants |
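The connectivity-control row comes down to simple arithmetic. VNet peering is not transitive, so a flat model that needs any-to-any reachability tends toward a full mesh of n(n-1)/2 peered pairs. Hub-and-spoke needs one peering per spoke and sends spoke-to-spoke traffic through the hub via UDRs. A minimal sketch (each Azure peering is configured from both sides, so the resource count is double the pair count):

```python
def full_mesh_pairs(vnet_count: int) -> int:
    """Peered VNet pairs needed for direct any-to-any reachability."""
    return vnet_count * (vnet_count - 1) // 2


def hub_spoke_pairs(spoke_count: int) -> int:
    """Peered VNet pairs needed when every spoke peers only with the hub."""
    return spoke_count


def peering_resources(pairs: int) -> int:
    """Each peered pair is two peering objects, one on each VNet."""
    return 2 * pairs


if __name__ == "__main__":
    for n in (5, 10, 20):
        mesh = full_mesh_pairs(n)
        hub = hub_spoke_pairs(n)
        print(f"{n} workload VNets: mesh={mesh} pairs ({peering_resources(mesh)} objects), "
              f"hub-and-spoke={hub} pairs ({peering_resources(hub)} objects)")
```

At 20 workload VNets the mesh needs 190 pairs against 20 for hub-and-spoke. That gap is why the hub becomes the only sane place to put inspection.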

Real-world example:

In a July 2025 engagement with a medical SaaS provider, we replaced 18 peerings with a single secured transit hub. Downtime was under 20 seconds, because we pre-established user-defined routes (UDRs) and flipped DNS only after validation.
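Pre-staging UDRs works because of how Azure picks a route. It uses the longest prefix match first. Among routes with the same prefix, a user-defined route beats a BGP route, which in turn beats a system route. The sketch below models only that selection rule and ignores the special cases Azure documents for virtual network and peering system routes. The next-hop labels are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

# When prefixes tie, Azure prefers user-defined routes, then BGP, then system routes.
SOURCE_PRIORITY = {"user": 0, "bgp": 1, "system": 2}


@dataclass(frozen=True)
class Route:
    prefix: str
    source: str    # "user", "bgp" or "system"
    next_hop: str  # hypothetical label, e.g. "fw-legacy-hub"


def select_route(routes: list[Route], destination: str) -> Optional[Route]:
    """Pick the effective route: longest matching prefix, then source priority."""
    dest = ipaddress.ip_address(destination)
    candidates = [r for r in routes if dest in ipaddress.ip_network(r.prefix)]
    if not candidates:
        return None
    return min(
        candidates,
        key=lambda r: (-ipaddress.ip_network(r.prefix).prefixlen, SOURCE_PRIORITY[r.source]),
    )


if __name__ == "__main__":
    system_default = Route("0.0.0.0/0", "system", "Internet")
    before = [system_default, Route("0.0.0.0/0", "user", "fw-legacy-hub")]
    # The cutover swaps in a pre-built route table pointing at the new hub firewall.
    after = [system_default, Route("0.0.0.0/0", "user", "fw-shadow-hub")]
    for label, table in (("before", before), ("after", after)):
        print(label, select_route(table, "203.0.113.10").next_hop)
```

Because the replacement route table is fully built and validated before it is associated, the "flip" reduces to a single association change per subnet.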

Identity Refactor: From “Just-in-Time” Access to Governance Guardrails

Developers love service principals because they’re fast. But at scale, they multiply like weeds. The fix isn’t deletion. It’s correlation (tying each principal to an owner and to its role assignments) and boundary enforcement.

Identity Refactor Patterns

- Identity Boundary: One Entra ID boundary per environment (Prod / Non-Prod / Regulated).

- Management Groups: Move producer subscriptions under governance management groups.

- Conditional Access: Separate admin tier controls from service tier controls.

- Drift Detection: Detect cross-tenant role inheritance and orphaned permissions.

If you can’t answer who has access to what and why, your landing zone isn’t a foundation — it’s a liability.
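Correlation can start as a simple join between principals, their owners, and role assignments. The sketch below assumes you have already exported those three lists, for example from Microsoft Graph and Azure RBAC. The data classes and field names are hypothetical, not an SDK's types. The sketch flags assignments whose principal no longer exists, principals with no owner, and principals whose owners have all left.

```python
from dataclasses import dataclass, field


@dataclass
class ServicePrincipal:
    object_id: str
    display_name: str
    owners: list[str] = field(default_factory=list)  # owner user IDs


@dataclass
class RoleAssignment:
    principal_id: str
    role: str
    scope: str


def correlate(principals, assignments, active_users):
    """Group identity findings into orphaned assignments, ownerless principals,
    and principals whose owners are no longer active."""
    known_ids = {sp.object_id for sp in principals}
    report = {"orphaned_assignments": [], "ownerless": [], "departed_owner": []}
    for ra in assignments:
        # Deleting a principal does not delete its role assignments.
        if ra.principal_id not in known_ids:
            report["orphaned_assignments"].append(ra)
    for sp in principals:
        if not sp.owners:
            report["ownerless"].append(sp.display_name)
        elif not any(owner in active_users for owner in sp.owners):
            report["departed_owner"].append(sp.display_name)
    return report


if __name__ == "__main__":
    sps = [
        ServicePrincipal("id-ci", "sp-ci-pipeline", ["alice"]),
        ServicePrincipal("id-etl", "sp-legacy-etl"),
        ServicePrincipal("id-rpt", "sp-reporting", ["bob"]),
    ]
    ras = [
        RoleAssignment("id-ci", "Contributor", "/subscriptions/sub-prod"),
        RoleAssignment("id-gone", "Owner", "/subscriptions/sub-prod"),
    ]
    for category, items in correlate(sps, ras, active_users={"alice"}).items():
        print(category, items)
```

Each bucket maps to a different action. Orphaned assignments can be removed outright. Ownerless and departed-owner principals need a new accountable owner before anyone touches their permissions.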

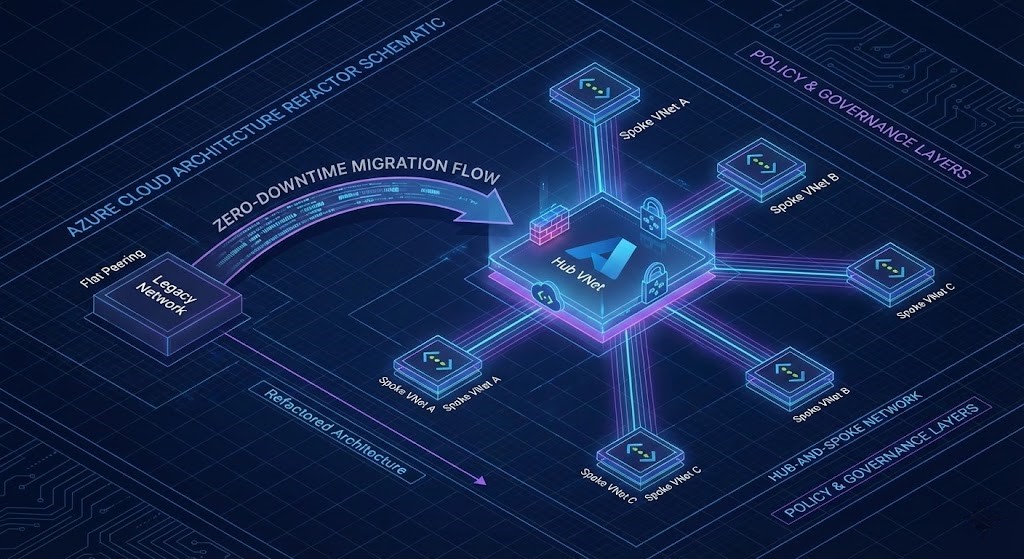

Zero-Downtime Refactor Workflow

This is where modern infrastructure and IaC discipline become non-negotiable.

Proven Sequence

- Map: Export current network graph, ARM templates, and Policy Insights.

- Shadow Hub: Deploy a parallel hub with mirrored address space and routes.

- Migrate: Attach spokes to shadow hub without cutover.

- DNS: Dual-register DNS zones across legacy + shadow hubs.

- Cutover: Atomic route flip, then decommission old peerings.

- Governance: Rebind policies at the management group layer.

Result: zero downtime, and in our engagements the Azure Security Benchmark posture (since renamed the Microsoft cloud security benchmark) typically improves by 15 to 22 points.
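The sequence only stays zero-downtime if each step is gated on validation, so the cutover cannot run before the DNS check passes. Below is a minimal sketch of that gating. It assumes each step is wrapped as an action plus a validation callable. The step names mirror the list above, and the lambdas are hypothetical stand-ins for real deployment and test calls.

```python
from typing import Callable, Optional

Step = tuple[str, Callable[[], None], Callable[[], bool]]


def run_refactor(steps: list[Step]) -> tuple[list[str], Optional[str]]:
    """Run steps in order and stop at the first failed validation.
    Returns (completed step names, name of the failed step or None)."""
    completed: list[str] = []
    for name, action, validate in steps:
        action()
        if not validate():
            return completed, name  # later steps, including cutover, never run
        completed.append(name)
    return completed, None


if __name__ == "__main__":
    # Hypothetical state: legacy and shadow resolvers still return different answers.
    state = {"dns_answers_match": False}

    def noop() -> None:
        pass

    steps: list[Step] = [
        ("map", noop, lambda: True),
        ("shadow-hub", noop, lambda: True),
        ("migrate", noop, lambda: True),
        ("dns", noop, lambda: state["dns_answers_match"]),
        ("cutover", noop, lambda: True),
        ("governance", noop, lambda: True),
    ]
    done, failed = run_refactor(steps)
    print("completed:", done, "halted at:", failed)
```

Halting before the route flip is what keeps a failed check from becoming an outage. The legacy hub keeps carrying traffic until the gate passes.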

Cost and Licensing: The Quiet Architecture Constraint

Azure’s managed hub components are operationally elegant — and financially loud.

| Component | Monthly Cost (per hub region) | Cost Type | Notes |
| --- | --- | --- | --- |
| Azure Firewall Premium | ~$1,200 USD | OpEx | Deployment hours + per-GB data processed |
| Private Resolver | ~$300 USD | OpEx | Hourly + query-based |
| ExpressRoute Gateway | ~$2,500 USD | CapEx + OpEx | Bandwidth adds variable cost |
| Hub NSGs / Policy | Negligible | OpEx | Scales with policy count |
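Because these are mostly fixed monthly charges, the per-spoke cost falls as more spokes share a hub. Below is a quick sketch using the approximate figures from the table above. It leaves out the negligible NSG/Policy line and all usage-based charges (firewall data processing, resolver queries, ExpressRoute bandwidth), so treat the result as a floor rather than a quote.

```python
# Approximate monthly USD per hub region, copied from the table above (not a price quote).
HUB_FIXED_MONTHLY_USD = {
    "Azure Firewall Premium": 1200,
    "Private Resolver": 300,
    "ExpressRoute Gateway": 2500,
}


def per_spoke_monthly(components: dict[str, float], spoke_count: int) -> float:
    """Spread fixed hub cost evenly across the spokes attached to it."""
    if spoke_count < 1:
        raise ValueError("a hub needs at least one spoke to amortize across")
    return sum(components.values()) / spoke_count


if __name__ == "__main__":
    for spokes in (4, 10, 18):
        cost = per_spoke_monthly(HUB_FIXED_MONTHLY_USD, spokes)
        print(f"{spokes:>2} spokes: ~${cost:,.2f} per spoke per month")
```

With these inputs the hub costs about $4,000 a month regardless of load. That is roughly $1,000 per spoke at 4 spokes and about $222 at 18.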

The choice between Azure-managed services and custom network virtual appliances (NVAs) is less a technical decision than a test of financial maturity.

- CapEx-leaning orgs often build NVAs to suppress long-term OpEx.

- FinOps-mature orgs prefer native services for predictable cost envelopes.
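One way to make that trade-off explicit is a break-even calculation: how many months of lower run cost it takes an NVA to recover its upfront spend. All inputs below are hypothetical placeholders. The operational staffing an NVA fleet needs is easy to under-count and belongs in `nva_monthly`.

```python
import math
from typing import Optional


def breakeven_months(nva_upfront: float, nva_monthly: float,
                     managed_monthly: float) -> Optional[int]:
    """Months until cumulative NVA cost is no higher than the managed service.
    Returns None if the NVA never catches up (its monthly cost is not lower)."""
    monthly_saving = managed_monthly - nva_monthly
    if monthly_saving <= 0:
        return None
    return math.ceil(nva_upfront / monthly_saving)


if __name__ == "__main__":
    # Hypothetical figures: licenses and build effort up front, VMs plus support monthly.
    print(breakeven_months(nva_upfront=9000, nva_monthly=700, managed_monthly=1200))
```

If the break-even lands beyond the expected life of the hub design, the CapEx-leaning case for NVAs weakens considerably.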

Avoiding the “CAF Trap”

CAF samples are a strong baseline — but copying them verbatim creates fragility.

Why?

- CAF assumes a greenfield deployment with perfect naming.

- Real environments require phased drift correction.

- CAF cost models often hide OpEx inside managed layers.

Instead: Map your current governance tier to CAF control categories and remediate incrementally. That’s how you restore compliance without rewriting your blueprint JSON from scratch.
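Here is a minimal sketch of that mapping: score each category by the share of its controls you already meet, then remediate the weakest categories first. The category names loosely follow CAF's landing zone design areas, but the controls and their status are hypothetical examples.

```python
from collections import defaultdict


def remediation_order(controls):
    """controls: iterable of (category, control, is_met).
    Returns [(category, coverage)] sorted weakest first."""
    total = defaultdict(int)
    met = defaultdict(int)
    for category, _control, is_met in controls:
        total[category] += 1
        met[category] += int(is_met)
    coverage = {cat: met[cat] / total[cat] for cat in total}
    # Lowest coverage first; ties broken alphabetically for a stable backlog.
    return sorted(coverage.items(), key=lambda item: (item[1], item[0]))


if __name__ == "__main__":
    current_state = [
        ("Network topology and connectivity", "central egress via hub firewall", False),
        ("Network topology and connectivity", "Private DNS zones linked to hub", False),
        ("Network topology and connectivity", "no overlapping address spaces", True),
        ("Identity and access management", "Conditional Access for admins", True),
        ("Identity and access management", "no ownerless service principals", True),
        ("Governance", "policies assigned at management group scope", True),
        ("Governance", "cost center tag enforced", False),
    ]
    for category, coverage in remediation_order(current_state):
        print(f"{coverage:6.0%}  {category}")
```

The output becomes an ordered backlog. Each iteration closes gaps in the weakest category without touching the ones that already comply.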

What Breaks at Scale (and How to See It Coming)

Common Failure Modes

- Private Link fragmentation: DNS and route table explosion.

- Inherited policy conflicts: Redundant assignments cause unpredictable deployment failures.

- Subscription sprawl: Teams create their own “mini landing zones.”

- Firewall bottlenecks: Throughput saturation under combined north-south + east-west traffic.

- Parallel team drift: Contributor roles erode security boundaries.
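You can catch redundant inherited assignments before they cause failures by checking whether a definition assigned at a child scope is already assigned at one of its ancestors. The sketch below simplifies scopes to slash-separated paths through your management group hierarchy. Real Azure scope IDs for management groups and subscriptions do not nest textually, so you would build these paths from the hierarchy first. The sketch also ignores assignment parameters, which can make a child assignment intentional.

```python
def redundant_assignments(assignments: list[tuple[str, str]]) -> list[tuple[str, str, str]]:
    """assignments: (scope_path, policy_definition) pairs, where scope_path looks
    like "root/landing-zones/corp/sub-app1". Returns (scope, definition, nearest
    ancestor that already assigns the same definition)."""
    assigned = set(assignments)
    findings = []
    for scope, definition in assignments:
        parts = scope.split("/")
        # Walk ancestors from nearest to farthest.
        for depth in range(len(parts) - 1, 0, -1):
            ancestor = "/".join(parts[:depth])
            if (ancestor, definition) in assigned:
                findings.append((scope, definition, ancestor))
                break
    return findings


if __name__ == "__main__":
    # Hypothetical hierarchy and policy names.
    current = [
        ("root", "require-costcenter-tag"),
        ("root/landing-zones", "deny-public-ip"),
        ("root/landing-zones/corp/sub-app1", "deny-public-ip"),
        ("root/platform/sub-connectivity", "require-costcenter-tag"),
        ("root/platform/sub-connectivity", "allowed-locations"),
    ]
    for scope, definition, ancestor in redundant_assignments(current):
        print(f"{definition} at {scope} is already inherited from {ancestor}")
```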

Proactive Detection

- Use Azure Resource Graph for drift queries (correlate resource ownership with management group placement).

- Deploy Defender for Cloud to visualize live network topology.

- Validate Azure Virtual Network Manager (AVNM) routing configurations quarterly.

- Track RBAC inheritance anomalies across tenants.
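Once the data is exported, the ownership-versus-placement correlation in the first bullet reduces to a join. The sketch assumes you have already pulled three inputs, for example from Azure Resource Graph results saved as JSON: resources with an owner tag, a subscription-to-management-group map, and an agreed owner-to-management-group map. The tag, team names, and mappings are hypothetical.

```python
def placement_drift(resources, subscription_mg, expected_mg_by_owner):
    """resources: dicts with "id", "subscription" and an optional "owner" tag value.
    Returns (resource id, reason) for every resource that breaks the placement rule."""
    drift = []
    for res in resources:
        owner = res.get("owner")
        if owner is None:
            drift.append((res["id"], "missing owner tag"))
            continue
        expected = expected_mg_by_owner.get(owner)
        actual = subscription_mg.get(res["subscription"], "unplaced")
        if expected is None:
            drift.append((res["id"], f"unknown owner '{owner}'"))
        elif actual != expected:
            drift.append((res["id"], f"in '{actual}', expected '{expected}'"))
    return drift


if __name__ == "__main__":
    subscription_mg = {"sub-payments": "mg-corp", "sub-sandbox-42": "mg-sandbox"}
    expected_mg_by_owner = {"team-payments": "mg-corp"}
    resources = [
        {"id": "vm-pay-01", "subscription": "sub-payments", "owner": "team-payments"},
        {"id": "vm-pay-02", "subscription": "sub-sandbox-42", "owner": "team-payments"},
        {"id": "pip-test", "subscription": "sub-sandbox-42"},
    ]
    for resource_id, reason in placement_drift(resources, subscription_mg, expected_mg_by_owner):
        print(resource_id, "->", reason)
```

Running it on a schedule and diffing the findings against the previous run turns a one-off audit into drift detection.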

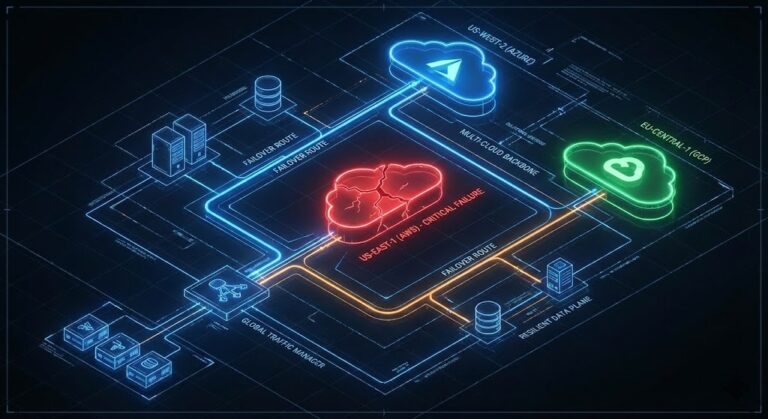

The “Optionality” Mindset

Even if you’re all-in on Azure today, build escape surfaces — architectural seams that preserve future mobility.

- Use AKS / containers for app layers, not tightly coupled PaaS services.

- Minimize reliance on region-pinned services (Automation Accounts, Log Analytics).

- Keep DNS and identity in cloud-agnostic layers.

Optionality is cheap insurance when regulatory, sovereignty, or business shifts force architectural change.

Final Architectural Checklist

| Category | Target State | Validation |
| --- | --- | --- |
| Network | Central Hub-and-Spoke w/ AVNM | Multi-region routing tested |
| Identity | Entra ID scoped RBAC | Conditional Access enforced |
| Policy | Org-level inheritance | 95%+ compliance baseline |
| Security | No unmanaged VMs | Defender + Policy alignment |
| Finance | Cost center tagging enforced | Verified via Cost Management exports |
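The numeric targets in the checklist can be turned into a repeatable gate. The sketch below assumes two inputs you already have: counts of compliant versus evaluated resources (for example from Azure Policy compliance data) and per-resource cost rows with tags (for example from a Cost Management export). The 95% compliance default comes from the table. The `CostCenter` tag name and the 100% tag-coverage default are hypothetical choices.

```python
def policy_compliance(compliant: int, evaluated: int) -> float:
    """Share of evaluated resources that are compliant (0.0 if nothing was evaluated)."""
    return compliant / evaluated if evaluated else 0.0


def tagged_cost_share(rows: list[tuple[float, dict[str, str]]], tag: str = "CostCenter") -> float:
    """rows: (cost, tags) pairs; share of total cost carrying a non-empty `tag` value."""
    total = sum(cost for cost, _tags in rows)
    tagged = sum(cost for cost, tags in rows if tags.get(tag))
    return tagged / total if total else 0.0


def checklist_gate(compliant, evaluated, cost_rows, compliance_target=0.95, tag_target=1.0):
    """Return pass/fail for the checklist rows that have a numeric target."""
    return {
        "policy": policy_compliance(compliant, evaluated) >= compliance_target,
        "finance": tagged_cost_share(cost_rows) >= tag_target,
    }


if __name__ == "__main__":
    rows = [(120.0, {"CostCenter": "cc-100"}), (30.0, {})]  # hypothetical export rows
    print(checklist_gate(compliant=962, evaluated=1000, cost_rows=rows))
```

Measuring tag coverage by cost rather than by resource count keeps a handful of untagged but expensive resources from hiding behind many small tagged ones.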

Conclusion

Every Azure architect eventually inherits someone else’s landing zone.

What separates a patch job from a professional refactor isn’t tooling — it’s control plane mastery and patience. You don’t need to rebuild. You need to realign.

Most organizations learn this after a failed audit or a data protection incident caused by Private Link chaos. The better path is thinking like an architect but acting like an engineer:

Build incrementally. Validate relentlessly. Measure financial impact per design move.

If your Azure foundation feels fragile, that’s not failure — it’s the moment where architecture finally becomes real engineering.

Additional Resources

- Microsoft Cloud Adoption Framework – Enterprise-Scale Landing Zone

- Azure Virtual WAN Pricing

- Azure Firewall Premium

- Azure Policy Documentation

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.