Breaking the HCI Silo: Nutanix Integration with Dell PowerFlex & Pure Storage

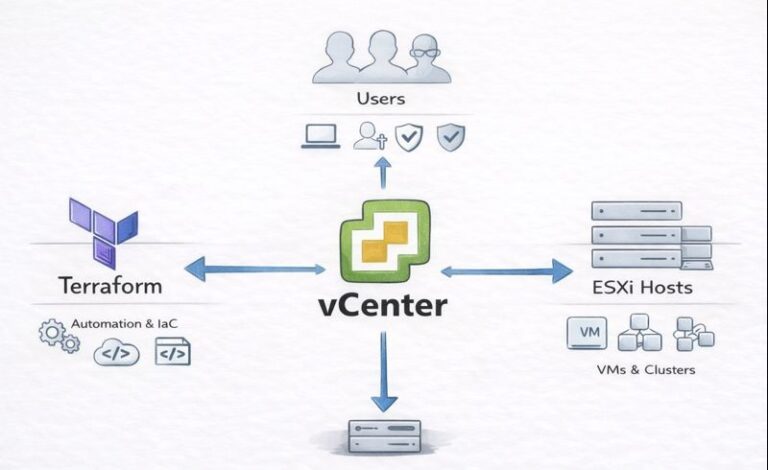

For over a decade, Nutanix’s mantra was “HCI or Death.” The philosophy was simple: storage and compute must live together in the same box to guarantee performance and simplicity.

However, the post-Broadcom VMware landscape has forced a market evolution. Enterprises want the freedom to keep their expensive Storage Area Networks (SANs) while migrating away from ESXi. In response, Nutanix has fundamentally altered its stack to support Compute-Only Nodes running AHV (Acropolis Hypervisor) that connect natively to external storage arrays.

This guide details how this integration works with Dell PowerFlex and Pure Storage, the supported hardware, and migration strategies.

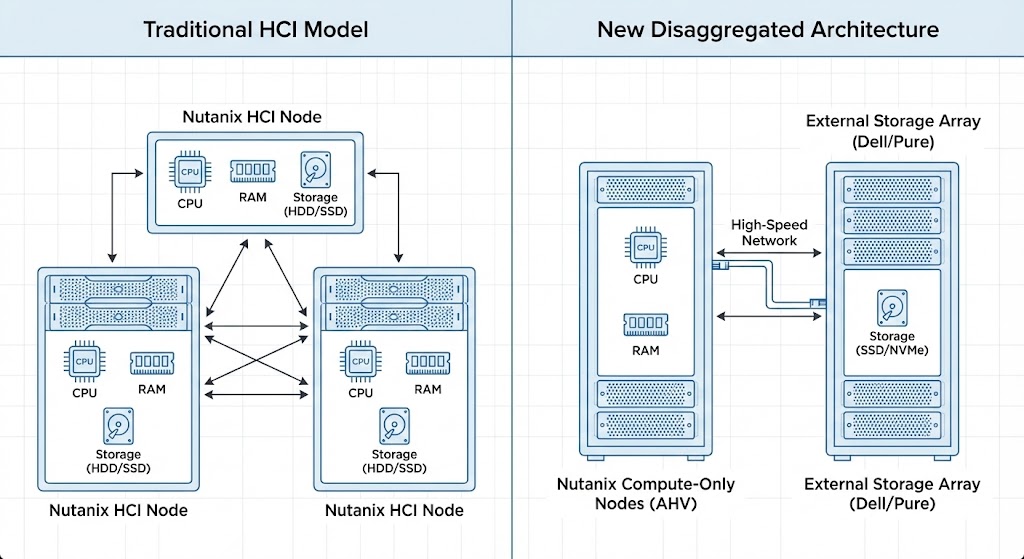

The Architecture Shift: Compute-Only Nodes

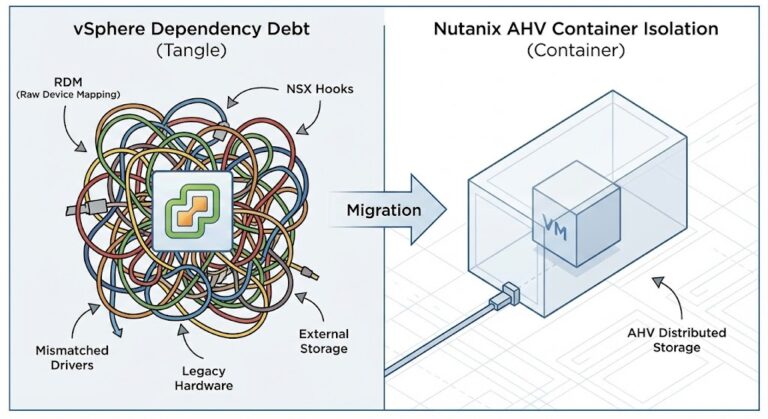

Traditionally, expanding a Nutanix cluster meant buying a node with both CPU and Disk, even if you only needed more CPU. The new Disaggregated Architecture allows you to run Nutanix AHV on server nodes that have no local storage capacity (other than a boot drive).

The fundamental shift from the integrated HCI model to the new Disaggregated Architecture allows for independent scaling of compute and storage resources.

- How it works: These “Compute Nodes” act as stateless hypervisors. They connect to external storage arrays over high-speed IP networks and store all Virtual Machine (VM) data there.

- The Benefit: You can scale compute (AHV nodes) and storage (Dell/Pure arrays) independently. This is critical for workloads like AI or massive databases where storage growth outpaces compute needs.
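
To make the independent-scaling argument concrete, here is a minimal sizing sketch. The per-node figures and the workload are hypothetical placeholders, not vendor specifications. The point is only that an HCI cluster buys whole nodes to satisfy whichever resource is scarcer, while a disaggregated design sizes compute on its own.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hypothetical per-node capacity; substitute your own figures."""
    cores: int
    usable_tb: float


def hci_nodes_needed(cores: int, capacity_tb: float, node: NodeSpec) -> int:
    """HCI: each node adds CPU and disk together, so the scarcer resource sets the count."""
    by_cores = math.ceil(cores / node.cores)
    by_capacity = math.ceil(capacity_tb / node.usable_tb)
    return max(by_cores, by_capacity)


def compute_only_nodes_needed(cores: int, node: NodeSpec) -> int:
    """Disaggregated: compute nodes are sized on CPU alone; capacity lives on the array."""
    return math.ceil(cores / node.cores)


# Illustrative storage-heavy workload: 256 cores, 800 TB.
node = NodeSpec(cores=64, usable_tb=40.0)
hci = hci_nodes_needed(256, 800.0, node)
compute_only = compute_only_nodes_needed(256, node)
print(f"HCI nodes: {hci}, compute-only nodes: {compute_only}")
```

With these made-up inputs the HCI cluster needs 20 nodes just to hold the data, even though 4 nodes cover the CPU demand. In the disaggregated case the 800 TB is sized on the external array instead.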

The Critical Limitation: No Local Storage for Data

While the disaggregated architecture offers immense flexibility, it comes with a strict architectural rule that can catch new architects by surprise: Compute-Only nodes cannot use their local disk capacity for the Nutanix storage cluster.

What This Means

If you buy a powerful server, like a Dell PowerEdge R760xd, which has 24 NVMe drive bays in the front, and you deploy it as a Nutanix Compute-Only node (NCI-C) connected to external Pure or Dell storage:

- Drive Bays Remain Empty: You cannot populate those 24 bays with drives and add them to a Nutanix storage pool. The Nutanix Distributed Storage Fabric (DSF) is disabled on these nodes.

- Wasted Potential: Any storage capacity physically present in the server is effectively unusable for VM data. It sits idle.

- Boot Drives Only: The only local storage used by the node is for the hypervisor boot OS (AHV) and logs. This is typically handled by a low-capacity, high-endurance M.2 SSD, BOSS card, or SATADOM.

The Design Implication

When spec’ing out hardware for this architecture, do not buy servers with high drive bay counts. You are wasting money on chassis capabilities you cannot use.

- Bad Choice: A 2U server with 24x 2.5″ drive bays (e.g., Dell R760xd).

- Good Choice: A 1U server designed for compute density, often with only 4-8 bays that will remain mostly empty (e.g., Dell R660 or Cisco UCS C220).

Crucial Takeaway: In a Disaggregated Nutanix environment, your server’s value is in its CPU cores and RAM density, not its storage slots. Plan your hardware purchases accordingly to avoid stranded capital.
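
The purchasing rule above can be expressed as a quick check. This is a sketch only: the `ServerOption` type and the 8-bay threshold are illustrative assumptions (the threshold is borrowed from the “4-8 bays” guidance above), not a Nutanix sizing rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerOption:
    """Hypothetical description of a candidate chassis."""
    model: str
    rack_units: int
    drive_bays: int


# Assumed threshold, taken from the "4-8 bays" guidance; adjust to taste.
MAX_SENSIBLE_BAYS = 8


def stranded_bays(server: ServerOption) -> int:
    """Bays beyond the threshold are chassis capability a compute-only node cannot use."""
    return max(0, server.drive_bays - MAX_SENSIBLE_BAYS)


def is_good_compute_only_choice(server: ServerOption) -> bool:
    return stranded_bays(server) == 0


candidates = [
    ServerOption("2U, 24-bay chassis", rack_units=2, drive_bays=24),
    ServerOption("1U, 8-bay chassis", rack_units=1, drive_bays=8),
]
for s in candidates:
    verdict = "ok" if is_good_compute_only_choice(s) else f"{stranded_bays(s)} stranded bays"
    print(f"{s.model}: {verdict}")
```

Here the 24-bay chassis is flagged with 16 stranded bays, while the 8-bay chassis passes.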

Dell PowerFlex Integration

Dell was the first major third-party vendor to integrate with Nutanix’s disaggregated model. This partnership combines Nutanix’s cloud platform management with Dell’s software-defined storage powerhouse, PowerFlex.

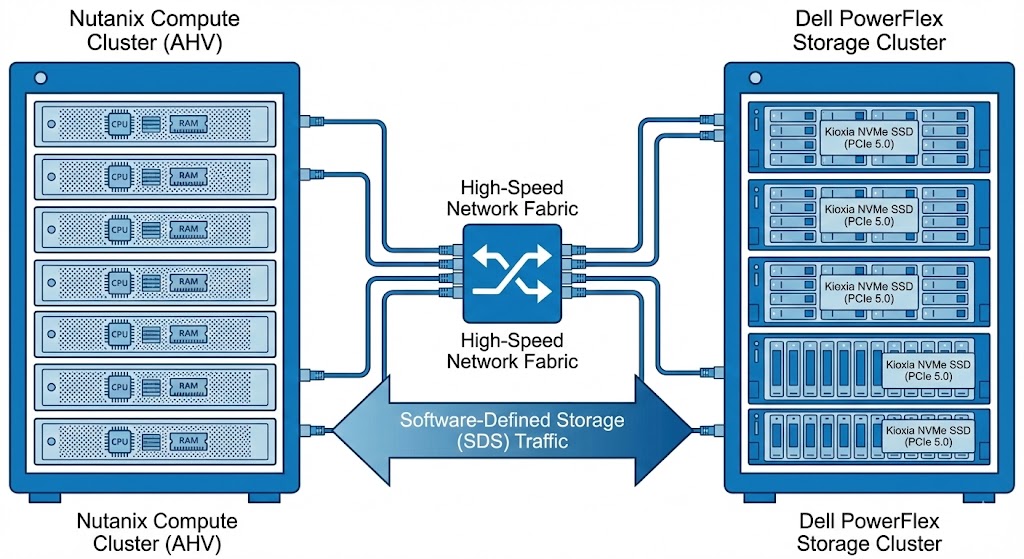

Nutanix Compute-Only Nodes connect to a Dell PowerFlex Storage Cluster via a high-speed network fabric, leveraging enterprise-grade Kioxia NVMe SSDs for extreme performance.

How It Works

- The Mechanism: This is more than a conventional iSCSI mount. In the initial implementation, compute nodes use network HBAs to mount virtual disks from the PowerFlex array. AHV sees these disks as if they were local, which lets Nutanix apply its data services to them.

- Performance: PowerFlex is renowned for linear scalability. As you add PowerFlex storage nodes, IOPS and bandwidth increase predictably.

- Use Case: This is ideal for massive high-performance databases (like Microsoft SQL Server) that require millions of IOPS and sub-millisecond latency, which might otherwise saturate a standard HCI mesh.

Key Feature: The solution utilizes enterprise-grade Kioxia NVMe SSDs (PCIe 5.0) to ensure the bottleneck moves away from storage and onto the network fabric.

Pure Storage Integration (The New Challenger)

Announced at .NEXT 2025, the partnership with Pure Storage allows Nutanix AHV to run directly on top of Pure’s FlashArray.

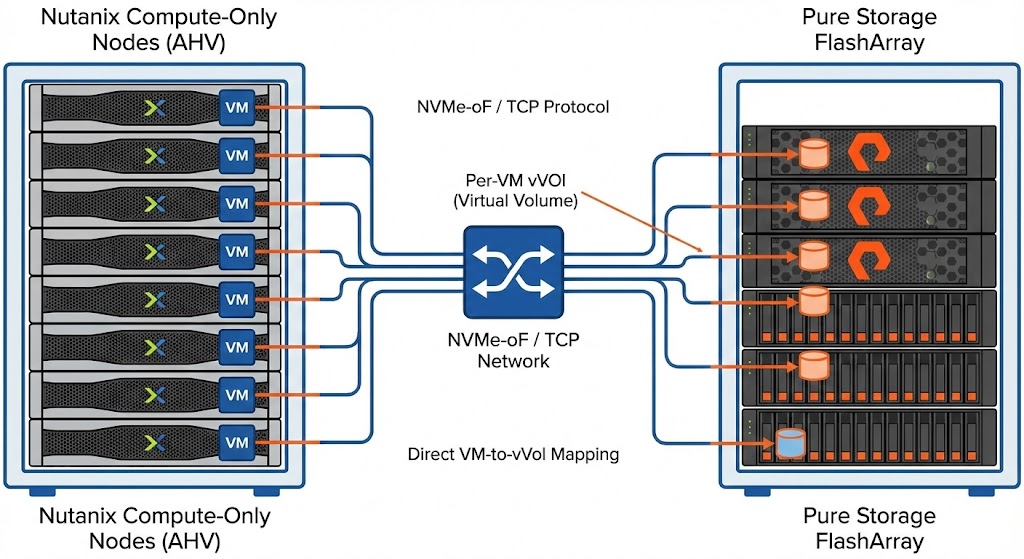

The Pure Storage integration uses the high-performance NVMe-oF/TCP protocol to map individual Nutanix VMs directly to their own vVols on the FlashArray.

Technical Specifics

- Supported Models: Currently limited to high-performance FlashArray X and FlashArray XL models. (Entry-level C-series and S-series are not supported at launch).

- Protocol: Exclusively uses NVMe over TCP (NVMe-oF/TCP). This was a deliberate choice to ensure low latency and high throughput, bypassing legacy protocols like iSCSI or NFS.

- Architecture: The integration is sophisticated. Each Nutanix VM receives its own dedicated Virtual Volume (vVol) on the Pure array. This means the array handles snapshots, replication, and data reduction at the VM level, rather than at a generic LUN level.
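
The practical effect of per-VM volumes is easiest to see by comparing what a single array snapshot captures under each layout. The sketch below uses hypothetical VM and volume names and a plain dictionary as the inventory. It does not call any Pure or Nutanix API.

```python
# Hypothetical inventories: which backing volume holds each VM's disks.
shared_lun_layout = {"vm-sql01": "lun-0", "vm-web01": "lun-0", "vm-web02": "lun-0"}
per_vm_layout = {"vm-sql01": "vol-sql01", "vm-web01": "vol-web01", "vm-web02": "vol-web02"}


def snapshot_scope(layout: dict[str, str], target_vm: str) -> set[str]:
    """Return every VM captured when the array snapshots the volume backing target_vm."""
    volume = layout[target_vm]
    return {vm for vm, vol in layout.items() if vol == volume}


print(sorted(snapshot_scope(shared_lun_layout, "vm-sql01")))
print(sorted(snapshot_scope(per_vm_layout, "vm-sql01")))
```

On the shared LUN, snapshotting the SQL VM also captures both web VMs. With one volume per VM, the snapshot covers only `vm-sql01`, and the same granularity applies to replication and restore.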

Limitations

- No “Native” Hardware: Surprisingly, you cannot use this feature with Nutanix’s own NX-series hardware. It is restricted to third-party compute nodes (Dell, Cisco, HPE, etc.).

- Strict Protocol: If your network infrastructure does not support NVMe-oF/TCP, you cannot utilize this integration.
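
A basic pre-flight test for the protocol requirement is to confirm that a compute node can open TCP connections to the array's NVMe/TCP ports. The sketch below assumes the array listens on the IANA-registered ports (8009 for discovery, 4420 for I/O), which you should confirm against your array's configuration. An open port shows only that the network path exists; it does not validate the full integration.

```python
import socket

# IANA-registered ports: 4420 for NVMe-oF I/O, 8009 for the NVMe/TCP discovery service.
NVME_TCP_IO_PORT = 4420
NVME_TCP_DISCOVERY_PORT = 8009


def tcp_port_open(host: str, port: int, timeout_s: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        return False


def preflight(array_ip: str) -> dict[int, bool]:
    """Check both well-known NVMe/TCP ports on one storage data interface."""
    ports = (NVME_TCP_DISCOVERY_PORT, NVME_TCP_IO_PORT)
    return {port: tcp_port_open(array_ip, port) for port in ports}


# Example call (192.0.2.10 is a documentation-only address; use your array's data IP):
#   preflight("192.0.2.10")
```

Run it from a host on the same storage VLAN as the planned AHV nodes, so that the result reflects the path the hypervisors will actually use.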

Supported Compute Hardware

Since Nutanix NX appliances are excluded from these specific external storage integrations, you must bring your own compute (BYOC) from supported OEM partners.

| Vendor | Platform | Key Features |
| --- | --- | --- |
| Dell | PowerEdge (XC Series) | The most mature ecosystem for Nutanix. Supports broad configuration options including high-density GPU nodes for AI workloads. |
| Cisco | UCS C-Series | Leverages Cisco Intersight for management. Popular in enterprises that want to reuse existing UCS Fabric Interconnect investments. |
| HPE | ProLiant (DX Series) | HPE’s DX series is factory-integrated with Nutanix software, offering a near-native experience similar to Nutanix NX. |

Important Note: The integration allows you to mix hardware generations (e.g., older Dell R740s with newer R760s) in the same compute cluster, provided every model appears on the AHV hardware compatibility list (HCL).

Migration Strategies: Nutanix Move

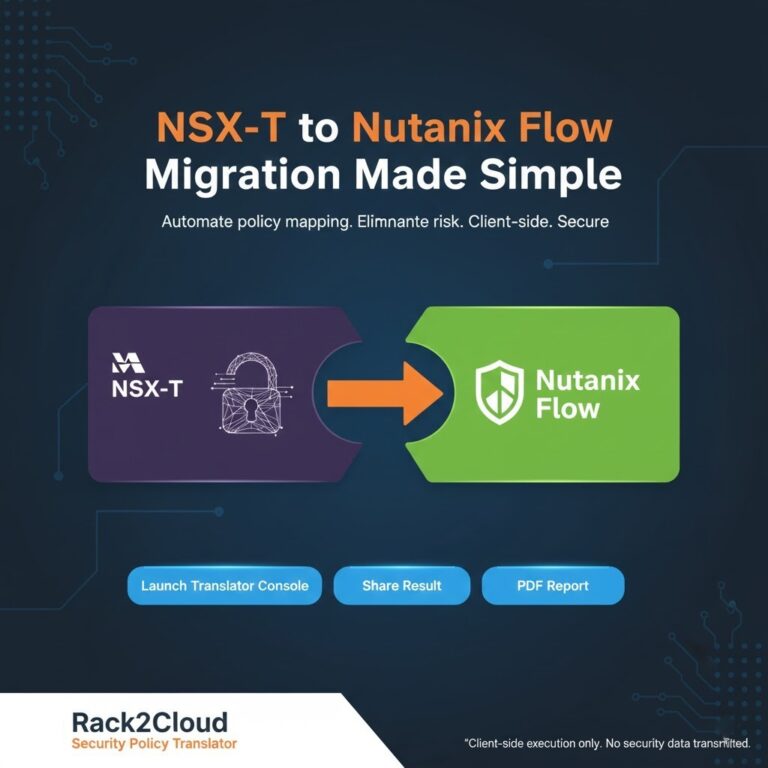

Switching to this new architecture often involves moving from VMware ESXi to Nutanix AHV. Nutanix Move is the dedicated free tool for this transition.

The “In-Place” Migration Strategy (Brownfield)

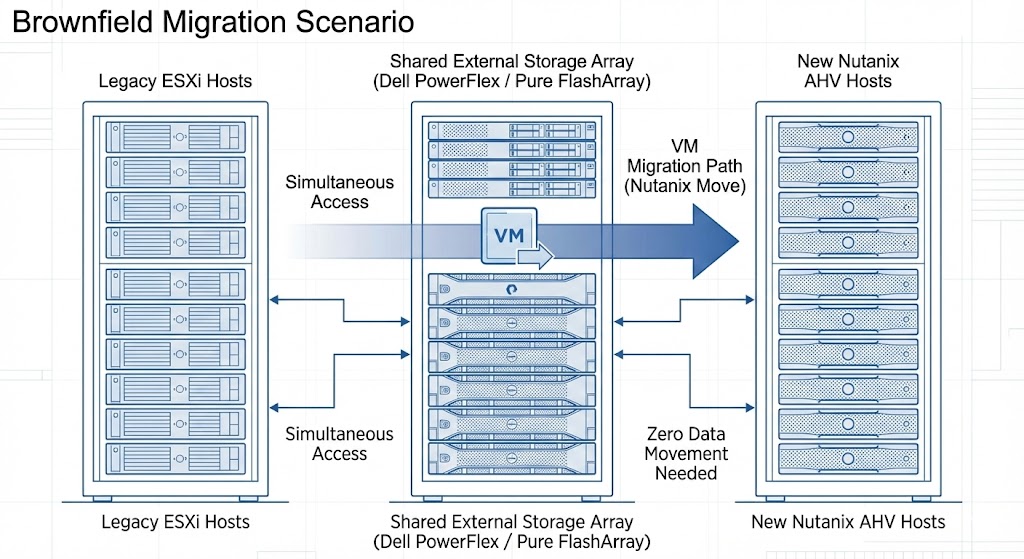

One of the biggest fears in migration is the “Forklift Upgrade”: buying a massive new storage array just to have somewhere to copy the data. If you already own a supported Pure or Dell array serving VMware, you can skip that purchase.

The Step-by-Step “In-Rack” Migration:

- Carve Out Capacity: On your existing array, create a new Storage Container for Nutanix alongside your existing VMFS Datastores.

- Dual-Connect: Connect the storage array to the old ESXi hosts (via FC/iSCSI) and the new AHV hosts (via NVMe-oF/TCP for Pure, or the Storage Data Client, SDC, for PowerFlex) at the same time.

- Execute the Move: Use Nutanix Move to migrate VMs. Since source and destination storage are on the same physical box, the data transfer is highly efficient.

- Decommission: Once moved, delete the old VMFS LUNs to reclaim space.
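
Because the old VMFS copy and the new Nutanix copy coexist on the array until the decommission step, free headroom limits how much can be moved at once. The sketch below groups VMs into waves that fit the available space. It is a planning aid under two loudly stated assumptions: each VM needs its full size on the destination (data reduction is ignored), and source space is reclaimed after every wave, which in practice requires the corresponding VMFS LUN to be empty. The VM names and sizes are hypothetical.

```python
def plan_waves(vm_sizes_tb: dict[str, float], free_tb: float) -> list[list[str]]:
    """Greedily group VMs into waves whose combined size fits in the free headroom.

    Assumes source space is reclaimed after each wave, so the same headroom
    is available again for the next one.
    """
    waves: list[list[str]] = []
    current: list[str] = []
    used = 0.0
    # Largest first, so an oversized VM is detected before any wave is planned.
    for name, size in sorted(vm_sizes_tb.items(), key=lambda kv: kv[1], reverse=True):
        if size > free_tb:
            raise ValueError(f"{name} ({size} TB) exceeds free headroom ({free_tb} TB)")
        if used + size > free_tb:
            waves.append(current)
            current, used = [], 0.0
        current.append(name)
        used += size
    if current:
        waves.append(current)
    return waves


# Hypothetical inventory and headroom.
vms = {"sql01": 6.0, "file01": 4.0, "web01": 1.0, "web02": 1.0}
print(plan_waves(vms, free_tb=8.0))
```

With 8 TB free, the 6 TB database moves alone in the first wave and the remaining three VMs follow in the second. A VM larger than the free headroom raises an error, which signals that capacity must be freed or added before the migration starts.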

Repurposing Existing Compute (vSAN Pivot)

If you have existing Dell or Cisco servers running VMware vSAN, you don’t need to throw them away.

- Evacuate & Wipe: Migrate the VMs off one ESXi host, then remove that host from your cluster.

- Install AHV: Re-image the bare metal server with Nutanix AHV (booting from a local BOSS card/M.2).

- Connect to Array: Connect this new compute node to your external storage array. Do not populate its drive bays for storage.
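
Before pulling a host out of the cluster, it helps to confirm that the remaining hosts can carry the running VMs. The sketch below checks memory only, using hypothetical host names and sizes. The 80% usable-RAM cap is an illustrative safety margin, not a Nutanix or VMware requirement, and the check does not cover evacuating the host's vSAN data.

```python
def can_evacuate(host_ram_gb: dict[str, int], used_ram_gb: int, host: str,
                 headroom: float = 0.8) -> bool:
    """True if the hosts left after removing `host` can hold the cluster's VM memory.

    `headroom` caps usable RAM on the surviving hosts (0.8 keeps 20% spare).
    """
    remaining = sum(ram for name, ram in host_ram_gb.items() if name != host)
    return used_ram_gb <= remaining * headroom


# Hypothetical four-host cluster with 512 GB per host.
cluster = {"esx01": 512, "esx02": 512, "esx03": 512, "esx04": 512}
print(can_evacuate(cluster, used_ram_gb=1100, host="esx01"))
print(can_evacuate(cluster, used_ram_gb=1300, host="esx01"))
```

The first call passes because 1100 GB fits within 80% of the remaining 1536 GB. The second fails, which means some VMs should move to AHV before that host is converted.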

Summary

The integration of Dell PowerFlex and Pure Storage marks Nutanix’s evolution from an “HCI vendor” to a “Cloud Platform provider.” By decoupling compute from storage, IT leaders can escape hypervisor lock-in without being forced to abandon their multi-million dollar investments in enterprise storage arrays. Just remember: in this new world, your compute nodes are for compute only—leave the storage bays empty.
