The K8s Exit Strategy: Why GCP and Azure are Winning the GenAI Arms Race

This technical deep-dive has passed the Rack2Cloud 3-Stage Vetting Process: Lab-Validated, Peer-Challenged, and Document-Anchored. We tested the NVIDIA L4 concurrency and Azure Flex cold-starts so you don’t have to.

How Cloud Run + GPU and Azure Flex Consumption solved the “Cold Start” problem that AWS still manages with Band-Aids.

The tradeoff for architects used to be simple: Simplicity or Performance. You couldn’t have both. AWS Lambda gave us simplicity but no GPUs and painful cold starts for Python. SageMaker gave us GPUs but brought 3 AM operational headaches and massive idle costs.

Google and Microsoft just quietly opened a third path. With GCP Cloud Run + GPUs and Azure Functions Flex Consumption, we can finally deploy AI workloads that scale to zero without the 10-second cold-start penalty—and without touching a single Kubernetes manifest.

The Benchmarks: Engineering Evidence

We ran the same Python-based RAG agent across the new serverless generation. To understand the lower-level compute physics of these cold starts, see our module on Architectural Learning Paths.

| Platform | Compute Model | Cold Start (Ready) | GPU Access | Best For |
| --- | --- | --- | --- | --- |
| AWS Lambda | CPU/Firecracker | ~3–8s (Large deps) | ❌ No | Lightweight Logic |
| GCP Cloud Run | Container/L4 GPU | ~5–7s | ✅ NVIDIA L4 | Raw Inference |
| Azure Flex | Serverless / VNet | ~2–4s | ❌ No* | Enterprise Integration |

\*Azure Flex Consumption does not currently support direct GPU attachment, but its Always Ready instances and VNet integration make it the superior “Nervous System” for connecting to Azure OpenAI (GPT-4o).

Model Performance on Cloud Run (NVIDIA L4 – 24GB VRAM)

| Model (4-bit Quantized) | Throughput (tokens/sec) | VRAM Usage | Latency (TTFT) |
| --- | --- | --- | --- |
| Llama 3.1 8B | ~43–79 t/s | ~5.5 GB | <200ms |
| Qwen 2.5 14B | ~23–35 t/s | ~9.2 GB | <350ms |
| Mistral 7B v0.3 | ~50+ t/s | ~4.8 GB | <150ms |
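To turn these numbers into something you can plan around, here is a minimal sketch that estimates worst-case response time and leftover VRAM per model. It uses the low end of each throughput range and the TTFT upper bound from the table. The 256-token response length and the simple "TTFT plus decode time" model are my assumptions, not measured results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    tokens_per_sec_low: float  # low end of the throughput range in the table
    ttft_sec: float            # TTFT upper bound from the table, in seconds
    vram_gb: float             # approximate VRAM usage from the table


L4_VRAM_GB = 24.0  # NVIDIA L4 memory, as stated in the table heading

PROFILES = [
    ModelProfile("Llama 3.1 8B", 43.0, 0.200, 5.5),
    ModelProfile("Qwen 2.5 14B", 23.0, 0.350, 9.2),
    ModelProfile("Mistral 7B v0.3", 50.0, 0.150, 4.8),
]


def worst_case_response_sec(profile, output_tokens):
    """TTFT plus decode time at the slowest throughput in the range."""
    return profile.ttft_sec + output_tokens / profile.tokens_per_sec_low


def vram_headroom_gb(profile, total_gb=L4_VRAM_GB):
    """VRAM left for KV cache and runtime buffers after the model loads."""
    return total_gb - profile.vram_gb


if __name__ == "__main__":
    for p in PROFILES:
        # 256 output tokens is an assumed response length.
        print(f"{p.name}: ~{worst_case_response_sec(p, 256):.1f}s for 256 tokens, "
              f"{vram_headroom_gb(p):.1f} GB VRAM headroom")
```

The headroom figure matters because long contexts and concurrent requests consume KV cache on top of the weights, so a model that fits at idle can still run out of memory under load.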

The Breakthrough: Azure Functions Flex Consumption

Microsoft’s “Flex” plan is a direct response to the Python performance gap. Unlike the old Consumption plan, Flex introduces Always Ready instances and Per-Function Scaling.

Why architects care:

- VNet Integration: You can finally put a serverless function behind a private endpoint without paying for a $100/mo Premium Plan.

- Concurrency Control: You can set exactly how many requests one instance handles (e.g., 1 request at a time for memory-heavy AI tasks).

- Zero-Downtime Deployments: Flex uses rolling updates, meaning your AI agent doesn’t “blink” when you push new code.
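The concurrency setting directly drives how many instances you scale out to. As a rough sketch (using Little's law, which is my choice of model here and not something Azure documents for Flex), the number of in-flight requests is arrival rate times average latency, and the instance count is that divided by per-instance concurrency. The function and parameter names are illustrative.

```python
import math


def instances_needed(requests_per_sec, avg_latency_sec, per_instance_concurrency):
    """Estimate instance count: in-flight requests / requests per instance.

    In-flight requests follow Little's law: arrival rate * average latency.
    """
    if per_instance_concurrency < 1:
        raise ValueError("per_instance_concurrency must be at least 1")
    in_flight = requests_per_sec * avg_latency_sec
    return math.ceil(in_flight / per_instance_concurrency)


if __name__ == "__main__":
    # Assumed workload: 5 requests/sec, 4 seconds per request.
    for concurrency in (1, 4):
        count = instances_needed(5, 4, concurrency)
        print(f"concurrency={concurrency}: {count} instances")
```

Setting concurrency to 1 for memory-heavy tasks is safe but multiplies the instance count, so it is worth checking against your regional instance limits before committing to it.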

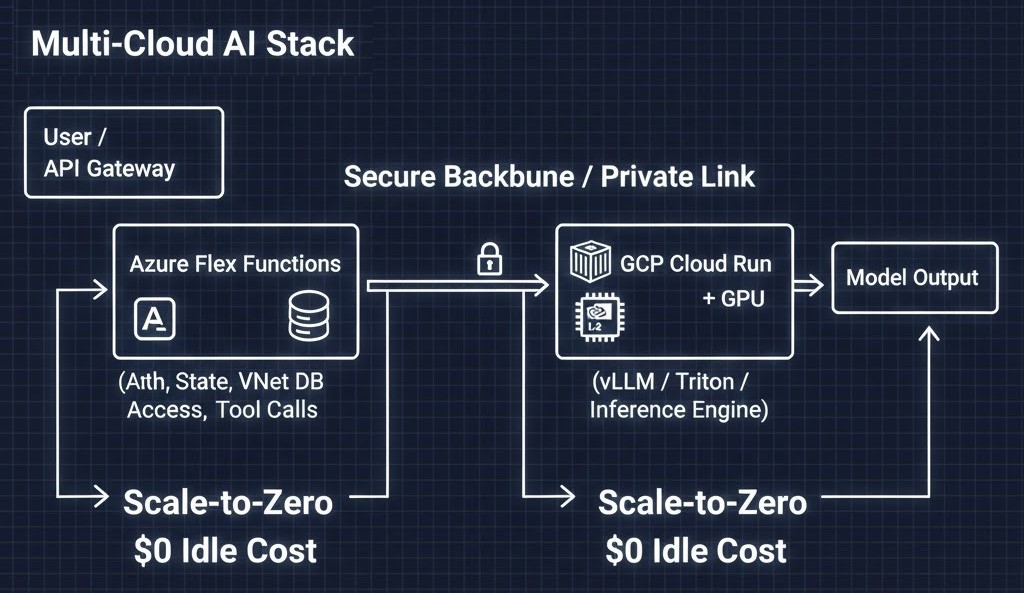

Reference Architecture: The Multi-Cloud AI Stack

To build production-grade AI, you no longer need to manage a cluster. I’m moving toward a “Brain and Nerves” split using two different clouds to maximize cost-efficiency and performance.

[User/API]

|

[Azure Flex Functions]

| (Auth, State, DB Access, Tool Calls)

|

[Private Link / Secure Backbone]

|

[GCP Cloud Run + GPU]

| (vLLM / Triton / Inference Engine)

|

[Model Output]

The Flow:

- Orchestrator (Azure Flex): Handles user auth, state (Durable Functions), and VNet-secured database lookups.

- Inference (Cloud Run + GPU): Azure calls GCP to perform heavy reasoning on NVIDIA L4s.

- Scale-to-Zero: Both platforms scale to zero once the request completes, so idle cost is $0 as long as you keep no Always Ready instances warm on the Azure side.
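The orchestrator-to-inference hop can be sketched as a plain HTTPS call. This assumes the Cloud Run container runs vLLM's OpenAI-compatible server (which exposes `/v1/chat/completions`) and that the service requires an identity token in the `Authorization` header. The base URL, model name, and the way you obtain the token are placeholders, and the Private Link routing is not shown.

```python
import json
import urllib.request


def build_inference_request(base_url, id_token, model, prompt, max_tokens=256):
    """Build a POST to an OpenAI-compatible chat endpoint (assumed: vLLM)."""
    payload = {
        "model": model,  # hypothetical model name served by the container
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Token acquisition is out of scope; pass in whatever your
            # orchestrator uses to authenticate to Cloud Run.
            "Authorization": f"Bearer {id_token}",
        },
        method="POST",
    )


def call_inference(request, timeout_sec=60):
    """Send the request and return the first completion's text."""
    with urllib.request.urlopen(request, timeout=timeout_sec) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]
```

Keep the timeout generous: the first call after scale-to-zero pays the Cloud Run cold start plus model load, so a tight client timeout will fail exactly when the platform is doing what you asked it to.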

Failure Modes: What Breaks First?

Senior architects know “Serverless” isn’t magic. Here is what I actually had to debug:

- Azure Initialization Timeout: If your Python app takes >30s to start (e.g., loading a 2GB model), Azure Flex will just kill the instance. You’ll see a generic System.TimeoutException.

- GCP Quota Limits: Cloud Run GPU quotas default to zero. If you don’t request an increase, your deployment will fail silently.

- Memory Bloat: Azure Flex has a ~272 MB system overhead. Account for this in your sizing or you’ll be hit with endless OOM restarts.
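For the memory overhead, a quick sizing check helps before you deploy. The sketch below picks the smallest instance size that fits your app's peak memory plus the ~272 MB overhead and a safety margin. The list of instance sizes and the 20% margin are my assumptions, so verify the sizes against the current Flex Consumption options.

```python
SYSTEM_OVERHEAD_MB = 272  # overhead figure quoted above

# Assumed instance sizes; confirm against the current Flex Consumption docs.
ASSUMED_INSTANCE_SIZES_MB = (512, 2048, 4096)


def smallest_fitting_instance_mb(
    app_peak_mb,
    sizes_mb=ASSUMED_INSTANCE_SIZES_MB,
    overhead_mb=SYSTEM_OVERHEAD_MB,
    safety_margin=0.2,
):
    """Return the smallest size covering app peak + margin + overhead.

    Returns None when no listed size is large enough.
    """
    required_mb = app_peak_mb * (1 + safety_margin) + overhead_mb
    for size in sorted(sizes_mb):
        if size >= required_mb:
            return size
    return None


if __name__ == "__main__":
    for peak in (100, 1000, 1500, 4000):
        print(f"peak={peak} MB -> instance={smallest_fitting_instance_mb(peak)}")
```

A `None` result is the signal that the workload does not belong on the orchestrator tier at all and should move to the inference side.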

Cost Behavior: The CFO Perspective

This is built on our core Architectural Pillars—market-analyzed and TCO-modeled.

- The Old Way: ~$150+/month per region just to keep a GPU “warm” on a cluster.

- The Serverless Way: Pay only for the milliseconds the agent is actually thinking.

- The Break-even: If your agent is active for only 10 minutes per hour (about 17% utilization), serverless is ~80% cheaper than an always-on reserved instance.
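The arithmetic behind that figure can be sketched as follows. The only input beyond active minutes is `price_ratio`, the serverless per-second rate divided by the always-on per-second rate. That ratio is an assumption on my part, since no actual rates are quoted here, so plug in your own pricing.

```python
def serverless_savings(active_minutes_per_hour, price_ratio=1.0):
    """Fraction saved versus an always-on instance billed for the full hour.

    price_ratio = serverless per-second rate / always-on per-second rate.
    """
    utilization = active_minutes_per_hour / 60
    return 1 - utilization * price_ratio


def break_even_minutes_per_hour(price_ratio):
    """Active minutes per hour at which both options cost the same."""
    return 60 / price_ratio


if __name__ == "__main__":
    for ratio in (1.0, 1.2):  # assumed price ratios
        saved = serverless_savings(10, ratio)
        even = break_even_minutes_per_hour(ratio)
        print(f"ratio={ratio}: {saved:.0%} saved at 10 min/hour, "
              f"break-even at {even:.0f} min/hour")
```

Under these assumptions, 10 minutes per hour lands in the 80% to 83% savings range, and the true break-even point sits much higher, where utilization approaches the inverse of the price ratio.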

The Rack2Cloud Migration Playbook

Don’t start with a cluster. Evolve into one.

- Phase 1: Prototyping (AWS Lambda / Azure Consumption) — Move fast, ignore cold starts, focus on the prompt.

- Phase 2: Orchestration (Azure Flex Consumption) — Secure your data with VNets and stabilize Python latency.

- Phase 3: Cost/Privacy Optimization (GCP Cloud Run + GPU) — Move inference off expensive APIs and onto your own private L4 containers.

- Phase 4: Repatriation (On-Prem / Bare Metal) — Once your monthly inference bill exceeds $5,000, exit the cloud using our Cloud Repatriation ROI Estimator.

Conclusion: The End of “Serverless Lite”

We are entering the era of Serverless Heavy. You no longer need Kubernetes to run serious AI. GCP gives you the GPU; Azure gives you the Enterprise Networking. AWS still owns the ecosystem—but in serverless GenAI, Google and Microsoft are currently setting the pace.

