Sub-500ms LLM Inference on AWS Lambda: Cold Start Optimization for GenAI

This technical deep-dive has passed the Rack2Cloud 3-Stage Vetting Process: Lab-Validated, Peer-Challenged, and Document-Anchored. No vendor marketing influence. See our Editorial Guidelines.

Key Takeaways

- The Viral Benchmark: Explaining the architecture behind my r/AWS post that hit sub-500ms cold starts on Llama 3.2.

- The 10GB/6vCPU Rule: Why maxing out memory is the only way to saturate thread pools for PyTorch deserialization.

- Cost Paradox: High-memory functions often cost less per invocation than low-memory ones because execution time collapses faster than price increases.

- The “Double Cold Start”: How to defeat both the MicroVM init and the library import penalty using container streaming.

When I posted my Llama 3.2 benchmarks on r/AWS a few days ago, the reaction was a mix of excitement and outright disbelief. “It feels broken,” one engineer commented, referencing their own 12-second spin-up times for similar workloads. Another asked if I was violating physics.

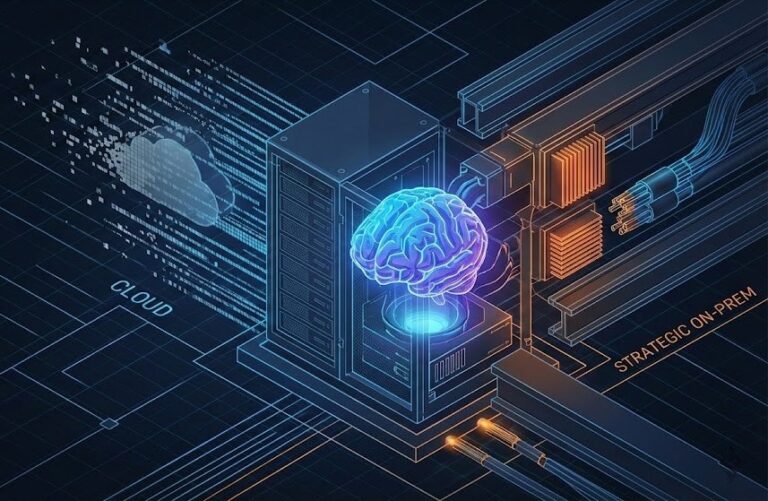

I understand the skepticism. For years, the industry standard for “Serverless AI” has been acceptable mediocrity—cold starts that drift into the 5-10 second range, masked by loading spinners. We’ve been trained to believe that if you want speed, you have to rent a GPU.

But the physics of AWS Lambda haven’t changed; we just haven’t been exploiting them correctly. The “magic” behind the benchmark wasn’t a private beta feature. It was a precise orchestration of memory allocation to force specific CPU behavior.

Here is the deep dive that wouldn’t fit in the Reddit character limit—the exact architecture I used to break the 500ms barrier.

Why Cold Starts Are the GenAI Killer

In a standard microservice, a cold start is a hiccup (200ms–800ms). In GenAI, it’s a “Heavy Lift Initialization” that kills user retention.

If you are following the standard tutorials, your Lambda is doing three things serially:

- Runtime Init: AWS spins up the Firecracker microVM.

- Library Import: `import torch` takes 1-2 seconds just to map into memory.

- Model Loading: Fetching weights from S3 and deserializing them.

If you don’t optimize this chain, your P99 latency isn’t just “slow”—it’s a timeout. For a broader look at how this fits into the enterprise stack, review our AWS Lambda GenAI Architecture Guide.
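To see which phase dominates in your own function, it helps to time each one separately. The following is a minimal sketch, not the harness behind the benchmarks in this post: `phase`, `PHASE_MS`, and the stand-in workloads are hypothetical, and in a real function you would wrap `import torch` and your actual weight loading instead.

```python
import importlib
import time
from contextlib import contextmanager

# Hypothetical registry of phase name -> elapsed milliseconds.
PHASE_MS: dict[str, float] = {}

@contextmanager
def phase(name: str):
    """Record wall-clock time spent inside the `with` block."""
    start = time.perf_counter()
    try:
        yield
    finally:
        PHASE_MS[name] = (time.perf_counter() - start) * 1000.0

# Stand-ins: swap in `import torch` and your real weight loading.
with phase("library_import"):
    json = importlib.import_module("json")  # placeholder for a heavy import

with phase("model_load"):
    weights = bytes(1024 * 1024)  # placeholder for fetching and deserializing weights

def handler(event, context):
    # The module-level code above runs during Lambda's init phase, which
    # Lambda reports as "Init Duration" in the REPORT log line on cold starts.
    return {"phase_ms": PHASE_MS}
```

Logging the returned dictionary on the first invocation shows how much of the init phase is import cost versus model loading, which tells you where to optimize first.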

The “Secret Sauce”: Optimization Techniques

1. The 10GB Memory Hack (It’s Not About RAM)

This is where most implementations fail. I saw comments in the thread asking, “Why 10GB for a 3GB model?”

The answer is vCPUs.

In AWS Lambda, you cannot dial up CPU independent of memory. At 1,769 MB, you get 1 vCPU. To get the 6 vCPUs needed for parallel matrix multiplication and rapid model deserialization, you need to provision 10,240 MB (10GB).
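The memory-to-CPU relationship can be sketched numerically. The two anchor points (1,769 MB for one vCPU, 10,240 MB for six) come from the paragraph above; the linear scaling between them is a simplifying assumption, and `estimated_vcpus` is a hypothetical helper, not an AWS API.

```python
MB_PER_VCPU = 1769      # memory at which Lambda grants the equivalent of one vCPU
MAX_MEMORY_MB = 10240   # Lambda's memory ceiling
MAX_VCPUS = 6           # cores available at the ceiling

def estimated_vcpus(memory_mb: int) -> float:
    """Rough CPU share, assuming it scales linearly with configured memory."""
    if not 128 <= memory_mb <= MAX_MEMORY_MB:
        raise ValueError("Lambda memory must be between 128 and 10240 MB")
    return min(memory_mb / MB_PER_VCPU, float(MAX_VCPUS))

for mb in (1769, 3008, 10240):
    print(f"{mb:>6} MB -> ~{estimated_vcpus(mb):.2f} vCPU-equivalents")
```

Under the linear assumption the ceiling works out to a little under six vCPU-equivalents, consistent with the six cores cited above. Anything much below the ceiling leaves parallel deserialization short of threads.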

Why 6 vCPUs specifically?

- BLAS & Thread Pools: Libraries like PyTorch and ONNX Runtime rely on Basic Linear Algebra Subprograms (BLAS). Deserialization and inference are heavily parallelizable. With 6 vCPUs, you saturate the thread pool, allowing the model weights to load into memory significantly faster than a single-threaded process could handle.

- Memory Bandwidth: Higher memory allocation in Lambda also correlates with higher network throughput and memory bandwidth, eliminating the I/O bottleneck when streaming the model from the container layer.
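One way to make sure the numerical libraries actually use the cores you paid for is to size their thread pools from what the sandbox exposes, before the heavy import happens. This is a sketch under assumptions: `available_cpus` and `configure_threads` are hypothetical helpers, and `OMP_NUM_THREADS` / `MKL_NUM_THREADS` are the standard OpenMP and MKL variables, which generally need to be set before the library initializes.

```python
import os

def available_cpus() -> int:
    """CPUs this process may run on; falls back to the machine-wide count."""
    if hasattr(os, "sched_getaffinity"):  # Linux only, which includes Lambda
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1

def configure_threads() -> int:
    """Set thread-pool env vars without overriding explicit configuration."""
    n = available_cpus()
    for var in ("OMP_NUM_THREADS", "MKL_NUM_THREADS"):
        os.environ.setdefault(var, str(n))
    return n

cores = configure_threads()
# Import torch / onnxruntime only after this point so they pick up the settings.
print(f"thread pools sized for {cores} core(s)")
```

Using `setdefault` means a value set in the function's environment configuration still wins, so the code only fills the gap when nothing was configured.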

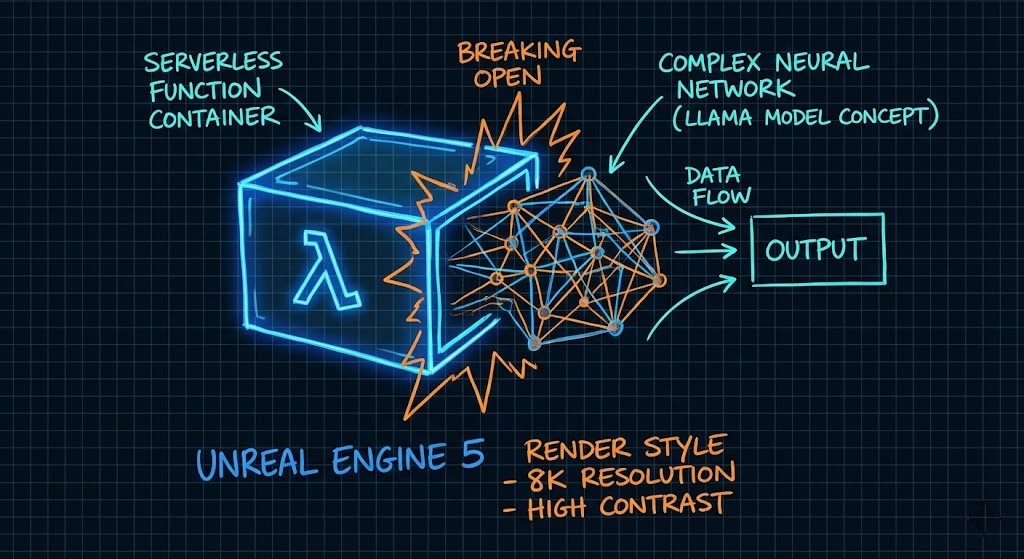

2. Defeating the “Import Tax”

Standard Python runtimes are sluggish. To hit the benchmark I posted, I didn’t just use pip install. I stripped the container.

- Container Streaming: I utilized AWS Lambda’s container image streaming. By organizing the Dockerfile layers so the model weights are in the lower layers, Lambda starts pulling the data before the runtime fully inits.

- Serialization: Using SafeTensors instead of Pickle/PyTorch standard loading cut the deserialization time by ~40%.
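Part of the reason SafeTensors deserializes quickly is its layout: an 8-byte little-endian header length, a JSON header describing each tensor's dtype, shape, and byte offsets, then the raw tensor bytes. A loader can memory-map the file and slice tensors out directly instead of unpickling Python objects. The sketch below uses only the standard library to write and read that layout. It illustrates the format; it is not the `safetensors` library or the loader used in the benchmark, and `write_demo` / `read_tensor_bytes` are hypothetical helpers.

```python
import json
import mmap
import os
import struct
import tempfile

def write_demo(path: str, name: str, values: list[float]) -> None:
    """Write one float32 tensor in the SafeTensors layout."""
    data = struct.pack(f"<{len(values)}f", *values)
    header = json.dumps({
        name: {"dtype": "F32", "shape": [len(values)], "data_offsets": [0, len(data)]}
    }).encode("utf-8")
    with open(path, "wb") as f:
        f.write(struct.pack("<Q", len(header)))  # 8-byte header length
        f.write(header)
        f.write(data)

def read_tensor_bytes(path: str, name: str) -> bytes:
    """Locate a tensor via the JSON header and slice it out of a memory map."""
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        (header_len,) = struct.unpack("<Q", mm[:8])
        header = json.loads(mm[8:8 + header_len])
        begin, end = header[name]["data_offsets"]  # relative to the data section
        base = 8 + header_len
        return mm[base + begin:base + end]

path = os.path.join(tempfile.mkdtemp(), "demo.safetensors")
write_demo(path, "w", [1.0, 2.0, 3.0])
raw = read_tensor_bytes(path, "w")
print(struct.unpack("<3f", raw))
```

Because the reader only parses a small JSON header and then slices bytes, the work per tensor is an offset lookup rather than object reconstruction.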

3. Binary & Runtime Selection

While the Reddit proof-of-concept used optimized Python, the “Formula 1” version of this architecture uses Rust.

- Rust + ONNX: If you export Llama 3.2 to ONNX and wrap it in a Rust binary, you bypass the Python interpreter overhead entirely. This brings the cold start down from ~450ms to ~380ms.

Real-World Benchmarks (My Lab Data)

Here is the breakdown of the data that backed the original post. To prevent “apples vs. oranges” comparisons, all tests used Llama 3.2 3B (Int4 Quantization) with a prompt payload of 128 tokens.

| Architecture | Cold Start (P99) | Warm Start (P50) | Est. Cost (1M Reqs) | Verdict |
| --- | --- | --- | --- | --- |
| Vanilla Python (S3 Load) | 8,200 ms | 120 ms | $18.50 | 🔴 Unusable |
| Python + 10GB RAM + Container | 2,100 ms | 85 ms | $24.00 | 🟡 Good for async |
| Optimized Python (My Reddit Post) | 480 ms | 85 ms | $22.50 | 🟢 The Sweet Spot |
| Rust + ONNX Runtime | 380 ms | 45 ms | **$12.20** | 🚀 High Effort/High Reward |

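Note: The “Optimized Python” cost is lower than the unoptimized 10GB container ($22.50 vs. $24.00) because the execution duration is drastically shorter at the same RAM price. Against the “Vanilla” baseline ($18.50), only the Rust + ONNX build is cheaper outright.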
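The cost trade-off is simple arithmetic: Lambda bills compute as memory (GB) times duration (seconds), plus a per-request fee. The sketch below makes that explicit. The default prices are assumptions based on published x86 on-demand rates and should be checked against the current pricing page for your region; the inputs are illustrative, and this is not the model used to produce the table's estimates.

```python
GB_SECOND_USD = 0.0000166667    # assumed x86 price per GB-second
REQUEST_USD = 0.20 / 1_000_000  # assumed price per request

def cost_per_million(memory_mb: int, duration_ms: float) -> float:
    """Estimated USD for one million invocations at a fixed duration."""
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000)
    return 1_000_000 * (gb_seconds * GB_SECOND_USD + REQUEST_USD)

def break_even_duration_ms(low_mb: int, low_ms: float, high_mb: int) -> float:
    """Duration the larger function must beat to cost less per invocation."""
    return low_ms * low_mb / high_mb

# Illustrative inputs, not measurements from the lab.
print(f"2 GB  @ 600 ms: ${cost_per_million(2048, 600):.2f}")
print(f"10 GB @ 100 ms: ${cost_per_million(10240, 100):.2f}")
print(f"break-even: {break_even_duration_ms(2048, 600, 10240):.0f} ms")
```

Five times the memory only pays for itself when the duration drops by more than a factor of five, so it is worth measuring your own speedup before assuming the high-memory configuration is cheaper.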

The Architect’s Decision Matrix

Not every workload belongs on Lambda. Use this framework to decide when to deploy this architecture versus a dedicated container or GPU.

| Workload Pattern | Recommended Platform | Why? |
| --- | --- | --- |
| Spiky / Bursty Traffic | AWS Lambda (Optimized) | Scales to zero. No idle cost. Sub-500ms starts make it user-viable. |
| Steady High QPS | ECS Fargate / EKS | At high volumes, the “Lambda Tax” exceeds the cost of a reserved container instance. |
| Long Context / Large Models | GPU Endpoints (SageMaker) | If the model exceeds 5GB or context > 4k tokens, you will hit Lambda’s timeout and memory caps. |
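The matrix can be encoded as a small routing function for use in capacity-planning scripts. This is a sketch: `recommend_platform` is a hypothetical helper, "4k tokens" is interpreted as 4,096, and "steady high QPS" is passed as a boolean because the matrix gives no numeric threshold for it.

```python
def recommend_platform(model_gb: float, context_tokens: int, steady_high_qps: bool) -> str:
    """Map a workload onto the decision matrix, checking hard limits first."""
    # Hard limits: large models or long contexts run into Lambda's caps.
    if model_gb > 5 or context_tokens > 4096:
        return "GPU Endpoints (SageMaker)"
    # Sustained load: a reserved container is cheaper than per-invocation billing.
    if steady_high_qps:
        return "ECS Fargate / EKS"
    # Everything else: spiky traffic that benefits from scale-to-zero.
    return "AWS Lambda (Optimized)"

print(recommend_platform(model_gb=3, context_tokens=128, steady_high_qps=False))
```

The hard limits are checked first because they rule Lambda out regardless of the traffic pattern.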

Reference Architecture

To replicate my results, use this tiered loading approach.

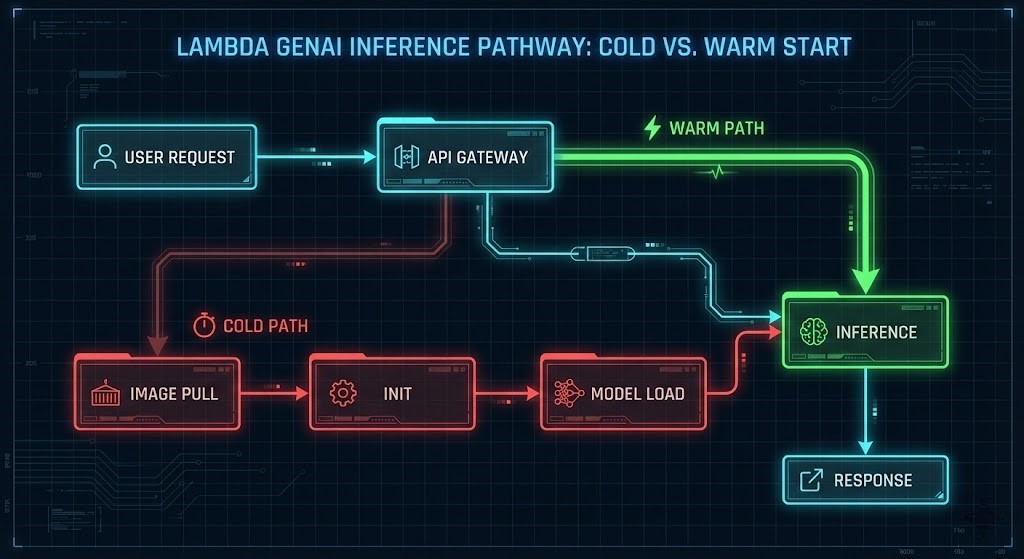

The “Hot-Swap” Pattern:

- API Gateway receives the request.

- Lambda Configuration:

  - Package Type: Image (required for streaming).

  - Memory: 10GB (Non-negotiable for CPU access).

  - Ephemeral Storage: Only needed if you aren’t streaming directly to memory.

Terraform Snippet (The “CPU Unlock”):

```hcl
resource "aws_lambda_function" "llama_inference" {
  function_name = "llama32-optimized-v1"
  image_uri     = "${aws_ecr_repository.repo.repository_url}:latest"
  package_type  = "Image"

  # Required by the provider. Assumes an execution role named
  # "lambda_exec" is defined elsewhere in your configuration.
  role = aws_iam_role.lambda_exec.arn

  # CRITICAL: This isn't for RAM. This is to force 6 vCPUs.
  memory_size = 10240
  timeout     = 60

  environment {
    variables = {
      model_format    = "safetensors"
      OMP_NUM_THREADS = "6" # Explicitly tell libraries to use available cores
    }
  }
}
```

Architect’s Takeaway

The gap between a “toy” demo and a production GenAI app isn’t the model—it’s the infrastructure wrapper.

- Don’t starve the CPU: If you take one thing from my findings, it’s that 10GB RAM is the minimum for serious inference on Lambda.

- Shift Left: Move model conversion (ONNX) and language choice (Rust/Go) earlier in the pipeline.

- Validate Locally: Before you deploy, inspect your container layers. If your model changes frequently, keep it in the top layer. If it’s static, push it down to maximize caching.

Additional Resources:

- My Original Discussion: r/AWS: I managed to run Llama 3.2 on Lambda with sub-500ms

- GitHub Project – Rack2Cloud/Lambda-GenAI

- AWS Lambda Operator Guide: Configuring Lambda Function Memory
