The Multi-Cloud AI Stack: Why I’m Done Looking for a “Swiss Army Cloud”

This strategic advisory has passed the Rack2Cloud 3-Stage Vetting Process: Market-Analyzed, TCO-Modeled, and Contract-Anchored. No vendor marketing influence. See our Editorial Guidelines.

For the first decade of my career, I chased the same goal every architect did: one provider, one control plane, one security model. It looked clean on a slide deck. It even worked—for a while.

Then 2025 happened.

We watched key AWS teams hollow out, turning incident response into 75-minute archaeology digs. We saw model availability flip overnight as OpenAI, Anthropic, Meta, and Google reshuffled access. Suddenly, that “single pane of glass” didn’t look like simplicity—it looked like fragility.

In 2026, the “one cloud to rule them all” dream is officially over. Not because any provider failed, but because no single cloud is optimized for every layer of a modern GenAI system. If you’re forcing high-throughput inference, regulated identity, and petabyte-scale storage into one provider for “consistency,” you’re trading outcomes for aesthetics.

The winning pattern isn’t loyalty. It’s orchestration.

Below is the real-world GenAI stack I see working—across enterprises, SaaS platforms, and regulated environments.

The Architectural Truth: Multi-Cloud is Specialization

Multi-cloud in 2026 isn’t about “insurance” or redundancy; it’s about exploiting the specialized primitives of each hyperscaler.

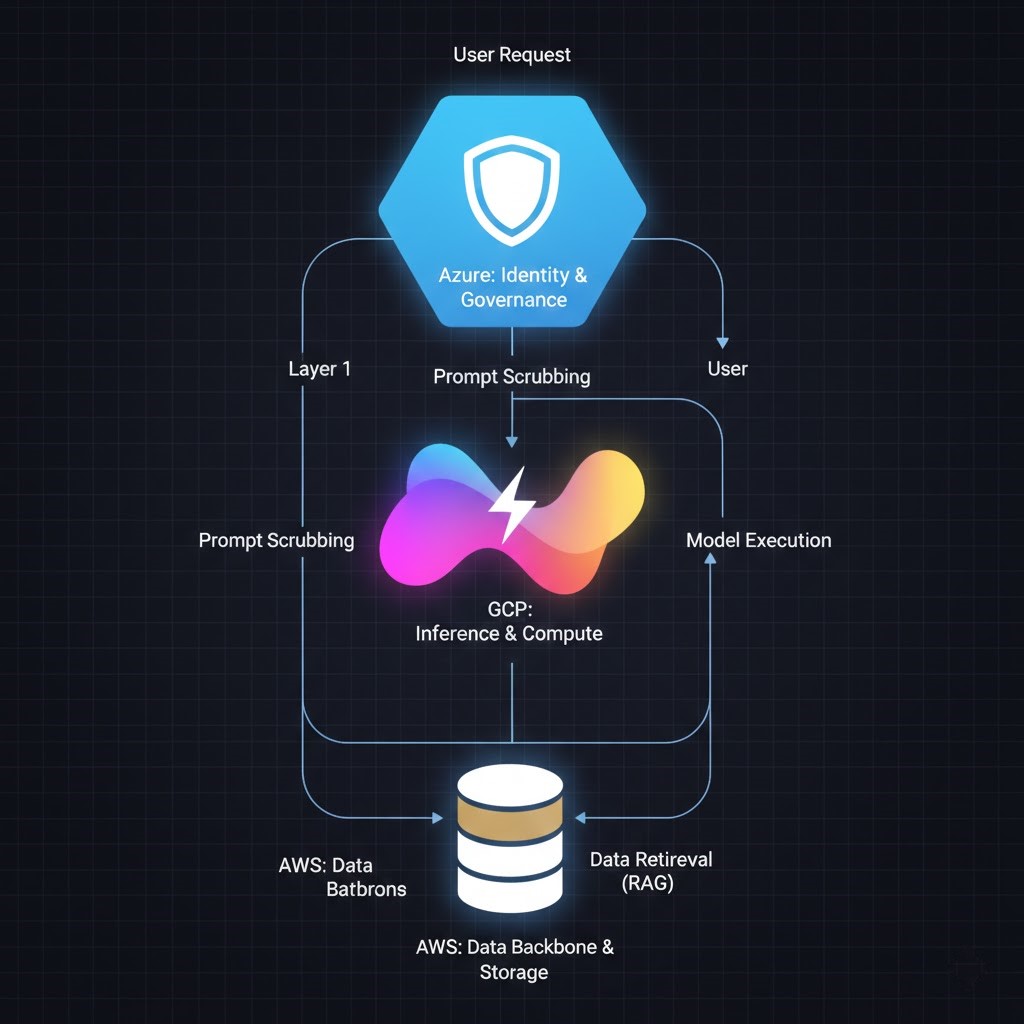

Azure: The “Adult in the Room” for Identity & Compliance

Azure may not win UX awards, but they’ve won the enterprise trust war. If I’m building for healthcare, finance, or government, I’m not sending raw prompts directly to a model endpoint. I’m routing them through Azure AI Foundry and Entra ID.

Why? Because Azure’s Content Safety, PII redaction, and compliance accelerators are currently unmatched when legal exposure matters.

- The Play: Use Azure as your Governance Control Plane. Use Entra ID (formerly Azure AD) for identity, and let Azure AI Foundry act as your “AI Firewall” to scrub prompts for toxic content or leaked credentials before they ever hit a model.

- The Arc Advantage: Use Azure Arc to manage your GCP and AWS resources. It allows you to apply Azure’s strict governance policies to containers running on other clouds, giving you a singular audit trail.
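To make the “AI Firewall” idea concrete, here is a rough sketch of a gate that redacts obvious secrets and PII from a prompt before it is forwarded to any model. It is a plain-regex stand-in, not the Azure AI Foundry or Content Safety API. The pattern set and the `scrub_prompt` name are assumptions for illustration, and a production gate would lean on the managed service instead.

```python
import re

# Hypothetical pattern set; a managed service would cover far more cases.
PATTERNS = {
    "AWS_ACCESS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scrub_prompt(prompt: str) -> tuple[str, list[str]]:
    """Redact matches and return (clean_prompt, labels_found) for the audit log."""
    found = []
    for label, pattern in PATTERNS.items():
        prompt, hits = pattern.subn(f"[REDACTED:{label}]", prompt)
        if hits:
            found.append(label)
    return prompt, found


clean, findings = scrub_prompt(
    "Summarize the ticket from jane@example.com, key AKIAABCDEFGHIJKLMNOP"
)
```

Returning the list of labels alongside the cleaned prompt is a deliberate choice: the audit trail records what was caught without storing the sensitive value itself.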

The Outcome: Enterprise-grade identity and auditable AI interactions. Azure isn’t where I run my heavy compute—it’s where I protect the business.

GCP: The High-Performance Inference Engine

Google quietly became the fastest place to run inference—not because of price, but because of Infrastructure Primitives. When I moved production inference to GCP, it was about Container Image Streaming.

If you’ve ever waited two minutes for a cold-start on AWS Fargate while model weights downloaded, you understand the problem.

- The Play: Run your models on Cloud Run with NVIDIA L4 GPUs. With GCP’s optimized streaming, large model containers (even 10 GB+) can boot in seconds, because the image is streamed while the container starts instead of being downloaded in full first.

- Model Weight Streaming: Using tools like the NVIDIA Run:ai Model Streamer, you can read model tensors from Cloud Storage concurrently and stream them straight into GPU memory. This cuts model loading times from minutes to seconds.
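The idea behind concurrent weight streaming can be sketched with the standard library alone. This is not the NVIDIA Run:ai Model Streamer API: `read_range` is a hypothetical stand-in for a ranged read against object storage, the weights are fake in-memory bytes, and the chunk size and worker count are arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor

# Stand-in for a weights object in Cloud Storage (assumption: in-memory bytes).
WEIGHTS = bytes(range(256)) * 4096  # 1 MiB of fake tensor data


def read_range(start: int, end: int) -> bytes:
    """Hypothetical ranged read; a real one would issue a ranged GET."""
    return WEIGHTS[start:end]


def stream_weights(total: int, chunk: int = 64 * 1024, workers: int = 8) -> bytes:
    """Fetch fixed-size byte ranges in parallel and reassemble them in order."""
    ranges = [(off, min(off + chunk, total)) for off in range(0, total, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so chunks come back in file order.
        parts = pool.map(lambda r: read_range(*r), ranges)
        return b"".join(parts)


loaded = stream_weights(len(WEIGHTS))
```

The win comes from overlapping many reads instead of waiting on one sequential download; a real streamer would also hand each chunk to the GPU as it arrives instead of joining everything in host memory first.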

The Outcome: Serverless elasticity with GPU performance. GCP is where I put the “brain” of the system.

AWS: The Data Nervous System (Still the Backbone)

AWS may be losing the AI hype cycle, but they still own the world’s data. Most enterprises already have petabytes in S3, event streams in Kinesis, and operational state in DynamoDB.

Moving that data just to run RAG elsewhere is usually a non-starter. It isn’t impossible, but egress fees and the operational risk of relocating live data (“Data Gravity”) kill the ROI.

- The Play: Leave your data where it lives. Use S3 for your primary data lake and Glue/Athena for preprocessing.

- The Bedrock Trick: Use Amazon Bedrock for “Model Redundancy.” Bedrock’s standardized API allows you to swap between Anthropic Claude, Meta Llama, and Mistral without rewriting a single line of infrastructure code. It’s the best “Model Mall” for staying within the AWS security boundary.
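A minimal sketch of the redundancy pattern: try model IDs in priority order behind one call signature. The `invoke` callable is an assumption standing in for whatever wraps your provider call (for Bedrock, a thin wrapper around its runtime client), and the model IDs here are placeholders, not real identifiers.

```python
from typing import Callable, Sequence


def invoke_with_fallback(
    prompt: str,
    model_ids: Sequence[str],
    invoke: Callable[[str, str], str],
) -> tuple[str, str]:
    """Return (model_id, reply) from the first model that answers."""
    errors = []
    for model_id in model_ids:
        try:
            return model_id, invoke(model_id, prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{model_id}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


def fake_invoke(model_id: str, prompt: str) -> str:
    """Hypothetical provider call; the primary model is simulated as down."""
    if model_id == "primary-model":
        raise TimeoutError("model unavailable")
    return f"[{model_id}] {prompt.upper()}"


used, reply = invoke_with_fallback(
    "hello", ["primary-model", "backup-model"], fake_invoke
)
```

Because the fallback list is just data, swapping or reordering models becomes a config change. In this run `used` ends up as `"backup-model"`, since the simulated primary times out.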

The Outcome: AWS is no longer my inference layer; it’s my Data Backbone.

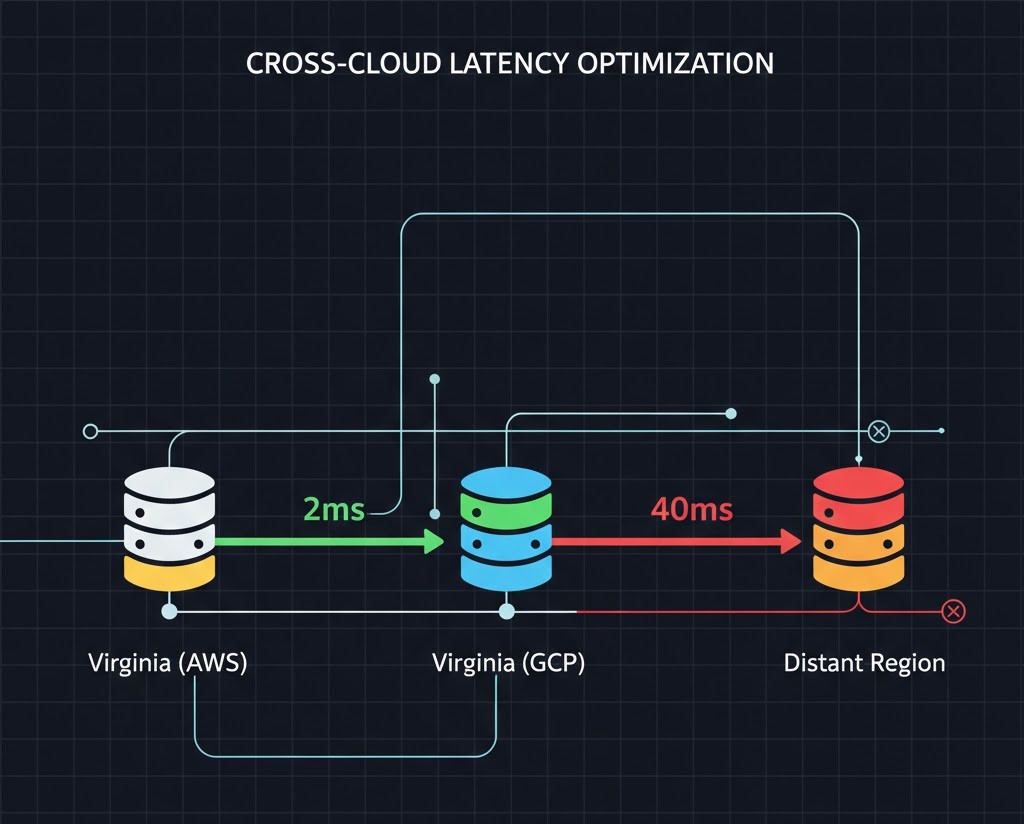

The Hidden Tax: Latency and Egress

Multi-cloud works until you ignore physics. A round trip between AWS us-east-1 and GCP us-central1 costs roughly 20–40 ms. If your app makes 50 sequential cross-cloud calls per user request, that adds 1 to 2 seconds of pure network wait, and you’ve just recreated dial-up latency.
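The arithmetic is worth writing down, using the 20 to 40 ms round-trip figure above. This is a back-of-the-envelope sketch that assumes the calls run serially and ignores processing time on either side; the three-call case is a hypothetical comparison point.

```python
def added_latency_ms(calls: int, rtt_ms: float) -> float:
    """Serial cross-cloud calls pay the round trip once per call."""
    return calls * rtt_ms


best = added_latency_ms(50, 20)     # 1000 ms
worst = added_latency_ms(50, 40)    # 2000 ms
trimmed = added_latency_ms(3, 40)   # 120 ms with only three hops
```

Even at the pessimistic round-trip time, cutting 50 hops down to three takes the network overhead from seconds to something a user will not notice.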

The Architect’s Rules:

- Minimize Payloads: Pass text tokens and JSON, never raw documents or unoptimized images between clouds.

- Avoid Chatty Flows: One call per layer. Batch your requests so you aren’t paying the latency tax 50 times per user request.

- Pair Geographically: Pair regions that are physically close, such as AWS us-east-1 (Virginia) with GCP us-east4 (Virginia).
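The first two rules can be sketched together: send compact JSON, and send it once. `CountingEndpoint` is a hypothetical stand-in for a cross-cloud service that simply counts round trips, and the payload shape (`inputs` / `outputs`) is an assumption made for the example.

```python
import json
from typing import Callable


class CountingEndpoint:
    """Hypothetical cross-cloud endpoint that counts round trips."""

    def __init__(self) -> None:
        self.round_trips = 0

    def __call__(self, body: str) -> str:
        self.round_trips += 1
        inputs = json.loads(body)["inputs"]
        return json.dumps({"outputs": [len(text) for text in inputs]})


def score_chatty(texts: list[str], call: Callable[[str], str]) -> list[int]:
    """Anti-pattern: one cross-cloud round trip per item."""
    return [
        json.loads(call(json.dumps({"inputs": [text]})))["outputs"][0]
        for text in texts
    ]


def score_batched(texts: list[str], call: Callable[[str], str]) -> list[int]:
    """One round trip carrying a compact JSON payload for all items."""
    return json.loads(call(json.dumps({"inputs": texts})))["outputs"]


texts = ["alpha", "be", "gamma"]
chatty, batched = CountingEndpoint(), CountingEndpoint()
chatty_result = score_chatty(texts, chatty)
batched_result = score_batched(texts, batched)
```

Both functions return the same result, but `chatty.round_trips` is 3 while `batched.round_trips` is 1; at cross-cloud round-trip times that difference is the whole latency budget.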

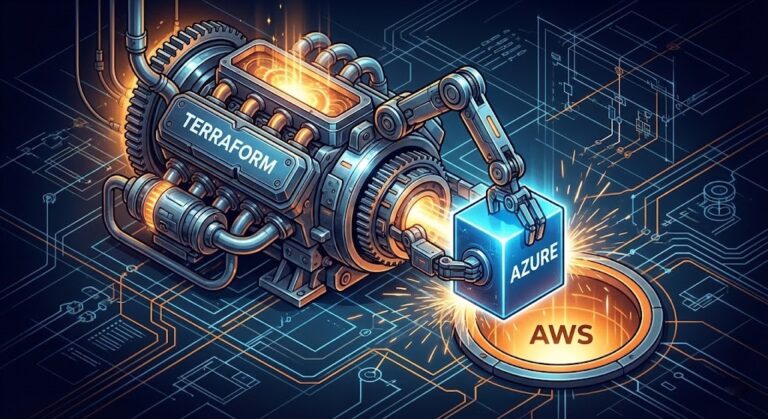

The 2026 Reference Architecture (“Thin Control Plane”)

Here is the pattern that is proving resilient for my clients this year:

- 🧠 Control Plane (Thin): Azure → Identity, RBAC, compliance, and PII safety.

- 📦 Data Plane: AWS → Storage, ingestion, and heavy preprocessing.

- ⚡ Compute Plane: GCP → Inference, high-speed model serving, and scaling.

- 📊 Observability: Datadog / OpenTelemetry → Unified tracing across all three clouds so you can see exactly where the “lag” is.
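For tracing to line up across three clouds, every hop has to carry the same trace ID. The sketch below builds and propagates a W3C Trace Context `traceparent` header by hand using only the standard library. In practice the OpenTelemetry SDK handles this for you; the function names here are illustrative assumptions, not part of any SDK.

```python
import re
import secrets

# W3C Trace Context: version-traceid-parentid-flags, all lowercase hex.
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent() -> str:
    """Start a trace at the edge (for example, the control plane)."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def child_traceparent(parent: str) -> str:
    """Keep the trace ID, mint a new span ID for the next hop."""
    match = TRACEPARENT.match(parent)
    if match is None:
        raise ValueError(f"malformed traceparent: {parent!r}")
    trace_id, _parent_span, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


edge = new_traceparent()                      # control plane
data_hop = child_traceparent(edge)            # data plane call
inference_hop = child_traceparent(data_hop)   # compute plane call
```

All three headers share one trace ID and differ only in span ID, which is what lets a tracing backend stitch the hops into a single timeline and show which cloud the time was spent in.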

When Multi-Cloud Isn’t the Answer

There are cases where single-cloud still wins:

- Early-stage startups optimizing for Speed to Market over long-term cost.

- Teams without the Operational Maturity to handle three different IAM models.

- Highly regulated environments that restrict all data movement outside a specific perimeter.

But once your inference costs exceed your compute budget or your security team demands centralized identity, single-cloud becomes a constraint, not a simplifier.

Conclusion: Be Outcome Obsessed

The job of a senior architect in 2026 isn’t to be “cloud agnostic.” That usually leads to lowest-common-denominator infrastructure where nothing performs well.

The job is to be outcome-obsessed. Use Azure for trust. Use GCP for speed. Use AWS for data gravity. Orchestrate—don’t consolidate. Stop waiting for one provider to fix their weaknesses; just use the best tool for each layer and move on to the next problem.

Additional Resources

- Performance Benchmarks: Why GCP Is Winning the 2026 Inference War

- Security Deep Dive: Azure AI Foundry vs. AWS Bedrock vs. GCP Vertex

- Networking Strategy: From Multiple Clouds to Multicloud: The 2026 Evolution
