Regulating Generative AI: Lessons from Indonesia’s Grok Ban and What Comes Next

The Grok Ban: What Happened and Why It Matters

Indonesia’s Communications and Digital Affairs Ministry temporarily blocked the AI chatbot Grok, developed by xAI and integrated into X, citing the AI’s ability to generate non-consensual sexual deepfake images, including disturbing depictions involving minors.

This isn’t a “social media quirk.” It’s a regulatory first: a sovereign state using digital safety laws to curb generative AI harms in the wild. Other regulators, such as Italy’s data protection authority, have issued warnings, and authorities from France to Malaysia are investigating similar behavior.

The core regulatory rationale Indonesia and others are leveraging:

- Human rights protections (privacy, dignity) against unauthorized image manipulation.

- National digital security frameworks that treat AI-generated explicit content as criminally actionable.

- Intergovernmental enforcement pressure on platforms and service providers to embed safety by design.

This is a wake-up call: any cloud-hosted generative AI service, wherever it was developed, is now subject to the digital safety and content liability laws of each market where it operates.

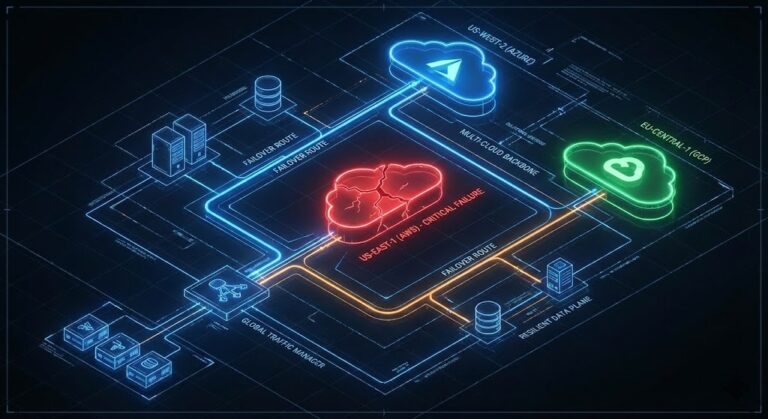

The Global Landscape: Moving Beyond Reactive Bans

Indonesia’s move sits within a wider regulatory pattern:

| Region | Approach | Key Focus |
|---|---|---|
| EU | Risk-based, comprehensive legislation | AI Act mandates conformity assessments for high-risk categories plus transparency requirements |
| China | Content labeling + identity verification | Requires deepfake content to be marked and traceable |
| US | Sectoral bills + proposed NO FAKES Act | Rights of publicity and civil liability |
| Australia | Criminal penalties under deepfake laws | Applies defamation and harassment penalties to synthetic media |
| Indonesia | Content blocking + direct enforcement | Cites digital safety and human rights violations |

Weigh these approaches against your enterprise risk tolerance before you architect a global deployment. The EU model mandates upfront risk assessments, while the US currently leans on existing statutes, so cloud teams must design for compliance before clear federal guardrails exist.

Decision Framework: How to Think About AI Governance in Practice

I’ve worked on regulated cloud programs where a single generative AI misstep tripled compliance costs overnight. Here’s how I approach these decisions:

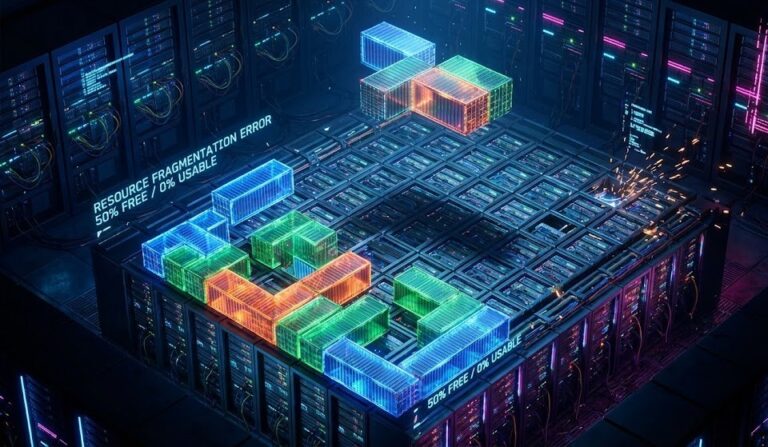

Risk Categorization

- Sort generative AI use cases into innovation vs. risk buckets.

- Treat anything touching PII, biometric data, or public safety as high risk.
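The two rules above can be sketched as a small classifier. This is a minimal, hypothetical example: the `UseCase` type and the category names (`pii`, `biometric`, `public_safety`) are illustrative assumptions, and a real program would pull these attributes from an intake questionnaire or data catalog.

```python
from dataclasses import dataclass, field

# Hypothetical data categories that mark a use case as high risk.
HIGH_RISK_DATA = {"pii", "biometric", "public_safety"}


@dataclass
class UseCase:
    name: str
    # Data categories the use case reads or produces (illustrative labels).
    data_categories: set[str] = field(default_factory=set)


def classify(use_case: UseCase) -> str:
    """Return 'high_risk' if any high-risk category is touched, else 'innovation'."""
    if use_case.data_categories & HIGH_RISK_DATA:
        return "high_risk"
    return "innovation"


portfolio = [
    UseCase("internal code assistant", {"source_code"}),
    UseCase("face-swap marketing tool", {"biometric", "pii"}),
]
for uc in portfolio:
    print(f"{uc.name}: {classify(uc)}")
# internal code assistant: innovation
# face-swap marketing tool: high_risk
```

A single intersection check keeps the rule auditable: anyone reviewing the portfolio can see exactly which data category pushed a use case into the high-risk bucket.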

Control Implementation

- Use managed inference platforms with fine-grained policy controls.

- Build audit trails and watermarking to satisfy rules such as the Cyberspace Administration of China’s (CAC) deep synthesis provisions or EU AI Act transparency requirements.
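An audit trail only helps with regulators if it is tamper-evident. Here is a minimal sketch, assuming a single-process, in-memory log (a production system would write to append-only or WORM storage): each entry stores the SHA-256 hash of the previous entry, so editing any earlier record breaks verification. The field names are hypothetical, and the sketch covers the audit-trail half only; it does not implement watermarking.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


class AuditLog:
    """Append-only log where each entry records the hash of the previous entry."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, user_id: str, prompt: str, decision: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            # Store a digest rather than the raw prompt to limit PII retention.
            "prompt_sha256": hashlib.sha256(prompt.encode()).hexdigest(),
            "decision": decision,
            "prev_hash": prev_hash,
        }
        body["hash"] = self._digest(body)
        self.entries.append(body)
        return body

    @staticmethod
    def _digest(body: dict) -> str:
        # Hash a canonical JSON encoding of every field except the hash itself.
        payload = {k: v for k, v in body.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry fails verification."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("user-42", "product photo on a white background", "allowed")
log.append("user-7", "edit this photo of a real person", "human_review")
print(log.verify())  # True
log.entries[0]["decision"] = "rejected"  # simulate tampering
print(log.verify())  # False
```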

Cloud Cost Implications

| Cost Type | Pre-Regulation | Post-Regulation Reality |
|---|---|---|
| CapEx | One-time model training compute | Additional governance tooling, compliance labs |
| OpEx | Standard inference & hosting | Continuous monitoring, logging, risk scans |
| Licensing | Base SaaS AI fees | Premium for compliance modules & data residency |

I’ve watched a single regulatory ruling turn a cheap proof-of-concept into a seven-figure operating cost. Regulation doesn’t just dictate what you release; it dictates how you operate at scale.

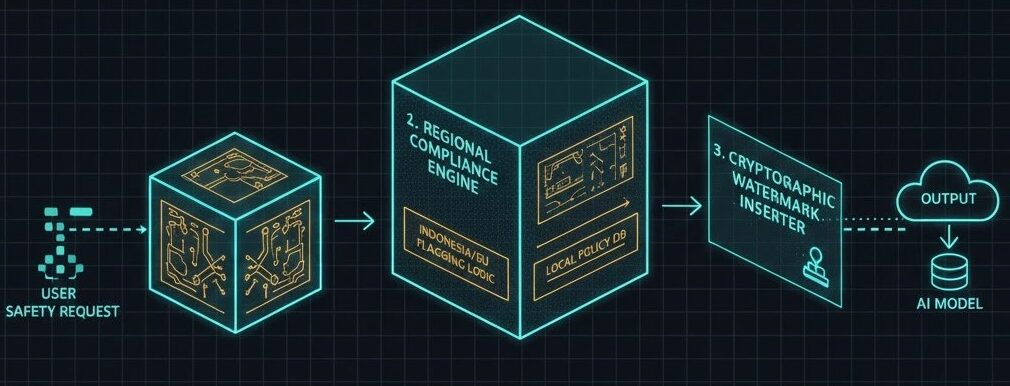

What Responsible AI Governance Actually Looks Like

Regulators are now zeroing in on three pillars:

1. Transparency & Traceability

Users and regulators must see what was generated, why, and by whom. Embedded metadata and visible “AI-generated” labels are becoming table stakes.
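One way to make "what, why, and by whom" concrete is to attach a provenance record to every output and render the visible label from it. The sketch below is hypothetical and deliberately simplified. The field names and the `[AI-generated]` caption prefix are assumptions, and a production system would more likely embed a standards-based manifest such as C2PA in the asset's metadata.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical record: what was generated, by which model, for whom, and why."""
    content_id: str
    model_name: str
    model_version: str
    requested_by: str
    purpose: str
    created_at: str
    label: str = "AI-generated"


def make_record(content_id: str, model_name: str, model_version: str,
                requested_by: str, purpose: str) -> ProvenanceRecord:
    # Timestamp in UTC so records from different regions sort consistently.
    return ProvenanceRecord(
        content_id=content_id,
        model_name=model_name,
        model_version=model_version,
        requested_by=requested_by,
        purpose=purpose,
        created_at=datetime.now(timezone.utc).isoformat(),
    )


def labeled_caption(caption: str, record: ProvenanceRecord) -> str:
    # Visible label shown to end users next to the content.
    return f"[{record.label}] {caption}"


record = make_record("img-001", "image-gen", "2.1", "user-42", "marketing mock-up")
print(labeled_caption("Spring campaign banner", record))  # [AI-generated] Spring campaign banner
# Machine-readable copy for a metadata field or sidecar file.
print(json.dumps(asdict(record), indent=2))
```

Deriving the visible label from the same record that gets stored keeps the user-facing disclosure and the regulator-facing metadata from drifting apart.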

2. Liability & Accountability

Treat your AI service like any other publisher: implement Terms of Service, logging, and enforceable takedown processes.

3. Risk-Based Controls

Adopt a classification-plus-mitigation approach: identify high-risk workloads (deepfakes, sensitive transforms) and escalate mitigation accordingly, from human review up to outright rejection.
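Here is a minimal sketch of tiered mitigation, assuming upstream classifiers have already tagged each generation request with boolean flags. The flag names (`sexual`, `real_person`, `depicts_minor`, `sensitive_transform`) and the exact policy are hypothetical. The pattern being illustrated is that the most severe matching rule wins, and anything it doesn't match falls through to a less restrictive tier.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


def mitigation_for(flags: dict) -> Action:
    """Map classifier flags on a request to a mitigation tier (hypothetical policy)."""
    sexual = flags.get("sexual", False)
    # Most severe rule first: sexual content involving a minor or an
    # identifiable real person is refused outright.
    if sexual and (flags.get("depicts_minor", False) or flags.get("real_person", False)):
        return Action.REJECT
    # Other sensitive cases are held for a human reviewer.
    if sexual or flags.get("real_person", False) or flags.get("sensitive_transform", False):
        return Action.HUMAN_REVIEW
    return Action.ALLOW


print(mitigation_for({"sexual": True, "real_person": True}))  # Action.REJECT
print(mitigation_for({"real_person": True}))                  # Action.HUMAN_REVIEW
print(mitigation_for({}))                                     # Action.ALLOW
```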

These pillars map well onto ISO/IEC standards such as ISO/IEC 42001 (AI management systems) and ISO/IEC 23894 (AI risk management), which academic research has tied to specific regulatory contexts.

Architect’s Note: Layer 2 (Regional Compliance) is where most projects fail. If you haven’t hard-coded ‘Geofenced Model Routing,’ you aren’t production-ready for 2026.
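One reasonable reading of Geofenced Model Routing is that every request is pinned to an inference endpoint approved for the user's jurisdiction, and the router fails closed when no approved endpoint exists. A minimal sketch under that assumption; the jurisdiction keys, the `example.com` endpoints, and the `route` helper are placeholders.

```python
# Placeholder mapping from jurisdiction to an approved in-region endpoint.
REGIONAL_ENDPOINTS = {
    "EU": "https://eu.inference.example.com",
    "ID": "https://id.inference.example.com",
    "US": "https://us.inference.example.com",
}


class RoutingError(Exception):
    """Raised when a request must not be served from any region."""


def route(jurisdiction: str, blocked: frozenset = frozenset()) -> str:
    """Return the in-region endpoint, failing closed for blocked or unmapped jurisdictions."""
    if jurisdiction in blocked:
        raise RoutingError(f"generation disabled for {jurisdiction}")
    endpoint = REGIONAL_ENDPOINTS.get(jurisdiction)
    if endpoint is None:
        # Never fall back to a default region: that is how data leaves its jurisdiction.
        raise RoutingError(f"no approved endpoint for {jurisdiction}")
    return endpoint


print(route("EU"))  # https://eu.inference.example.com
try:
    route("ID", blocked=frozenset({"ID"}))  # e.g. a regulator-ordered block
except RoutingError as err:
    print(err)  # generation disabled for ID
```

Failing closed trades availability for compliance: an unmapped market gets an error instead of being silently served from another region.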

CapEx vs. OpEx Takeaway for SEs & Architects

For SEs and architects designing generative AI pipelines:

- CapEx: Budget for secure model hosting, data residency requirements, and regulatory sandbox environments.

- OpEx: Plan for ongoing compliance tooling, monitoring, and rapid incident response.

- Regulatory uncertainty means cloud cost forecasts must include a compliance reserve; I typically budget an extra 15-20%.
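Applied to a forecast, the 15-20% reserve is simple arithmetic. This is a minimal sketch, and the $40,000 monthly base is a hypothetical figure, not a benchmark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Forecast:
    base: float
    reserve_low: float
    reserve_high: float

    @property
    def total_low(self) -> float:
        return self.base + self.reserve_low

    @property
    def total_high(self) -> float:
        return self.base + self.reserve_high


def forecast_with_reserve(base: float, low_pct: float = 0.15, high_pct: float = 0.20) -> Forecast:
    """Add a compliance reserve range (default 15-20%) on top of a base cloud cost."""
    if base < 0:
        raise ValueError("base cost must be non-negative")
    if not 0 <= low_pct <= high_pct:
        raise ValueError("expected 0 <= low_pct <= high_pct")
    # Round to cents so the reserve reads cleanly in a budget sheet.
    return Forecast(base, round(base * low_pct, 2), round(base * high_pct, 2))


f = forecast_with_reserve(40_000)
print(f"Budget range: ${f.total_low:,.2f} to ${f.total_high:,.2f} per month")
```

Presenting the reserve as a range instead of one number keeps finance discussions anchored on the uncertainty itself.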

Internal Cloud Engineering Links That Matter

- Use our Cloud Egress Calculator and Refactoring Cliff Calculator to estimate the real cost of data mobility.

- When migrating legacy AI/ML workloads to compliant environments, consult our NSX-T Migration guide.

- Evaluate infrastructure choices against cost and compliance using our VMware to Nutanix Sizer.

- Review your environment and make sure it is ready for migration with our HCI Migration Advisor.

External Research & Citations

- [Reuters] Indonesia Blocks xAI’s Grok Over Explicit Content Concerns (January 2026)

- [NIST] AI Risk Management Framework (AI RMF 1.0)

- [EU Parliament] The EU AI Act: Comprehensive Regulatory Framework (2024-2026)

- [Indonesian Govt] Law Number 1 of 2023: The New Criminal Code (KUHP)

Editorial Integrity & Security Protocol

This technical deep-dive adheres to the Rack2Cloud Deterministic Integrity Standard. All benchmarks and security audits are derived from zero-trust validation protocols within our isolated lab environments. No vendor influence.

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.