From Static Guardrails to AI Policy Agents: 2026 Playbook for Cloud Security Teams

I still remember the first time an “automated guardrail” saved my job. It was 2018. A junior engineer, exhausted from a sprint crunch, pushed a Terraform change that would have exposed our primary production subnet directly to the internet. An Azure Policy definition caught the 0.0.0.0/0 route, blocked the deployment, and killed the pipeline. Crisis averted. I bought the policy author a beer.

Fast forward to last year, and that same policy stack failed spectacularly. A privilege escalation path emerged across three different repositories and two CI/CD pipelines. No single policy fired because, syntactically, every individual change was “compliant.” But taken together, they created a backdoor wide enough to drive a truck through. The blast radius took down our staging environment and nearly crossed into production.

That failure is the exact gap the CNCF 2026 security forecast highlights: static guardrails cannot reason across time, identity, and intent in AI-driven environments.

If you are a Systems Architect or Security SRE, this is your reality check. Static OPA rules and Azure Policy definitions are no longer the destination—they are the floor. This playbook details how to layer AI Policy Agents on top of your existing stack to build a self-healing control plane that reasons, learns, and acts.

Key Takeaways

- The Shift: Static engines are time-blind; AI agents correlate risk across time, repos, and systems.

- The Benchmark: In Rack2Cloud lab simulations, autonomous guardrails cut Mean Time to Remediate (MTTR) by 64% compared to manual SRE intervention.

- The Architecture: Adopt the “Sandwich Model”—Static Policies as the floor, AI Agents as the filling, Human Review as the ceiling.

- The Economics: Tooling spend rises, but net annual cost falls by roughly $180k as SRE time shifts from “firefighting” to engineering.

The Policy Drift Death Spiral

Before we discuss the solution, we have to admit why the current model is broken. I call it the Policy Drift Death Spiral. Over time, static policy systems degrade into one of three failure modes:

- Exception Sprawl: “Just exclude this one IP for testing.” (Repeat 500 times until your perimeter is Swiss cheese).

- Shadow Infrastructure: Developers silently bypassing the control plane entirely because the policy gates are too rigid.

- Control Plane Bypass: Attacks via side channels (identity, CI secrets, workload identity) that OPA rules on Kubernetes manifests simply cannot see.

AI agents break this spiral by collapsing detection, reasoning, and remediation into a single control loop. They don’t just check the rule; they check the context of the rule.
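Exception sprawl, the first failure mode above, can be measured deterministically before any agent is involved, which also makes it a natural Week 1 inventory task. The minimal Python sketch below assumes a hypothetical exception register of `(policy_id, scope, created, expires)` tuples; the 90-day staleness window and the three-exceptions-per-policy sprawl threshold are illustrative assumptions, not recommended values.

```python
from datetime import date


def audit_exceptions(exceptions, today, max_age_days=90, sprawl_threshold=3):
    """exceptions: hypothetical (policy_id, scope, created, expires_or_None) tuples."""
    findings = {"no_expiry": [], "stale": [], "sprawl": []}
    per_policy = {}
    for policy_id, scope, created, expires in exceptions:
        per_policy[policy_id] = per_policy.get(policy_id, 0) + 1
        if expires is None:
            findings["no_expiry"].append((policy_id, scope))  # permanent by default
        if (today - created).days > max_age_days:
            findings["stale"].append((policy_id, scope))      # "temporary" that stuck
    # Policies carrying many exceptions are the first candidates for redesign.
    findings["sprawl"] = sorted(p for p, n in per_policy.items() if n >= sprawl_threshold)
    return findings


register = [
    ("NET-001", "10.0.4.7/32", date(2025, 1, 10), None),
    ("NET-001", "10.0.4.8/32", date(2025, 11, 1), date(2026, 2, 1)),
    ("NET-001", "10.0.9.0/24", date(2025, 12, 20), None),
    ("S3-004", "bucket/logs-tmp", date(2025, 12, 1), date(2026, 1, 31)),
]
print(audit_exceptions(register, today=date(2026, 1, 15)))
```

The output is a plain report rather than a verdict: it tells you where the Swiss cheese is, which is the input an agent (or a human) needs before deciding which exceptions to retire.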

Static vs. Autonomous: The Loop Comparison

To understand why your current OPA or Sentinel policies are failing, you have to look at the decision loop.

- The Static Loop (Old Way): Author → Validate → Block/Allow → Log.

- The AI Agent Loop (2026 Way): Observe → Reason → Predict → Act → Learn.

The difference is reasoning under uncertainty. A static rule knows that Port 22 should be closed. An AI Agent asks why Port 22 is open, who opened it, whether that user usually opens ports, and whether the traffic pattern looks like maintenance or exfiltration.
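To make the contrast concrete, here is a minimal Python sketch that returns both verdicts for the same Port 22 change. The `PortChange` fields, the point weights, and the thresholds are hypothetical; a real agent would derive these signals from audit logs and flow data rather than from a hand-written score.

```python
from dataclasses import dataclass


@dataclass
class PortChange:
    port: int
    actor: str
    actor_prior_port_opens: int    # times this actor opened ports in the lookback window
    in_maintenance_window: bool    # change falls inside an approved window
    outbound_inbound_ratio: float  # outbound / inbound bytes since the change


def static_verdict(change: PortChange) -> str:
    """The static loop: port 22 open means block, nothing else is considered."""
    return "block" if change.port == 22 else "allow"


def contextual_verdict(change: PortChange) -> str:
    """Hypothetical contextual scoring: each signal adds suspicion points."""
    if change.port != 22:
        return "allow"
    suspicion = 0
    if change.actor_prior_port_opens == 0:
        suspicion += 1  # this actor has never opened a port before
    if not change.in_maintenance_window:
        suspicion += 1  # outside any approved maintenance window
    if change.outbound_inbound_ratio > 5.0:
        suspicion += 2  # traffic skews heavily outbound, consistent with exfiltration
    if suspicion >= 3:
        return "quarantine"
    if suspicion >= 1:
        return "escalate"
    return "allow-with-audit"


maintenance = PortChange(22, "ops-bot", 40, True, 0.8)
exfil = PortChange(22, "new-account", 0, False, 12.0)
print(static_verdict(maintenance), static_verdict(exfil))          # block block
print(contextual_verdict(maintenance), contextual_verdict(exfil))  # allow-with-audit quarantine
```

The static rule treats both changes identically; the contextual version separates routine maintenance from a likely exfiltration path using the same facts a human responder would check.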

Capability Comparison Matrix

| Capability | Static Engine (OPA/Azure Policy) | AI Policy Agent |
|---|---|---|
| Decision Rule | Syntax Matching | Contextual Reasoning |
| Time-Awareness | ❌ No (Snapshot only) | ✅ Yes (Historical drift analysis) |
| Cross-System Correlation | 🔸 Limited | ✅ Native (Graph-based) |
| Exception Learning | ❌ Manual Updates | ✅ Automatic Pattern Recognition |
| Remediation | ❌ Block-only | ✅ Patch + Rollback + Fix |
| Operational Impact | High “Policy Fatigue” | Cuts Churn by 70% (Lab Verified) |

The Threat Model: Why AI is Mandatory

Security SREs will respect this table. It shows exactly where static policy bleeds.

| Threat Vector | Static Policy Failure | AI Agent Mitigation |
|---|---|---|
| IAM Privilege Escalation | No single rule fires; each change is syntactically valid. | Graph Correlation: Detects “toxic combinations” of low-level permissions. |
| Supply Chain Compromise | A compromised but validly signed image passes; signature checks prove who published it, not what it does. | Behavioral Anomaly: Flags the container making unexpected outbound calls. |
| Lateral Movement | Network policies pass (internal traffic is allowed). | Intent-Based Detection: Identifies “Service A” talking to “Service Z” for the first time. |
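The “toxic combination” row is, at its core, a graph reachability question. The minimal Python sketch below models grants as a directed graph and uses breadth-first search to find a chain from a low-privilege principal to an admin role, mirroring the three-repo, two-pipeline incident from the introduction. The role names and edges are hypothetical, and a production agent would build this graph from real IAM and CI/CD metadata.

```python
from collections import deque

# Hypothetical permission graph: an edge A -> B means "A can assume or pass B".
# Each edge was added by a separate, individually compliant change.
GRANTS = {
    "ci-runner": ["deploy-role"],         # repo 1: CI may assume the deploy role
    "deploy-role": ["lambda-exec-role"],  # repo 2: deploy role may pass the exec role
    "lambda-exec-role": ["admin-role"],   # pipeline 2: exec role may assume admin
    "dev-user": ["readonly-role"],
}


def escalation_path(grants, start, target):
    """Breadth-first search for the shortest chain of grants from start to target."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in grants.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no chain of grants reaches the target


print(escalation_path(GRANTS, "ci-runner", "admin-role"))
# ['ci-runner', 'deploy-role', 'lambda-exec-role', 'admin-role']
print(escalation_path(GRANTS, "dev-user", "admin-role"))  # None
```

No individual edge would trip a static rule; only the path does, which is why correlation has to happen across the whole graph rather than per change.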

The Solution: The “Sandwich Architecture”

I hear the skeptics, and I am one of them: “Do you really want an LLM hallucinating your firewall rules?” The answer is no.

To mitigate the risk of “Autonomous Overreach” (where an AI quarantines production because it misunderstood a batch job), we use a Sandwich Architecture.

1. The Floor: Deterministic Validation (Static)

This is your “Hard Rail.” You keep your Azure Policy, AWS SCPs, and OPA constraints here.

- Role: The Safety Net.

- Logic: If the AI proposes a change that violates a regulatory standard (e.g., “S3 Bucket Public Read”), the Static Floor rejects it immediately. The AI is never allowed to breach the floor.
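A minimal Python sketch of the floor's contract, assuming a hypothetical flat `Proposal` dict: every deterministic rule runs on every AI proposal, and any single violation rejects it. In a real stack these predicates would live in Azure Policy, SCPs, or OPA rather than application code; the point here is only the shape of the gate.

```python
from typing import Callable, List, Optional, Tuple

Proposal = dict  # hypothetical flat description of a change the agent wants to make
Rule = Callable[[Proposal], Optional[str]]


def no_public_bucket_read(p: Proposal) -> Optional[str]:
    if p.get("type") == "s3_bucket" and p.get("acl") in {"public-read", "public-read-write"}:
        return "S3 bucket must not grant public read"
    return None


def no_world_open_ingress(p: Proposal) -> Optional[str]:
    if p.get("type") == "ingress_rule" and p.get("cidr") == "0.0.0.0/0":
        return "ingress must not be open to 0.0.0.0/0"
    return None


FLOOR: List[Rule] = [no_public_bucket_read, no_world_open_ingress]


def enforce_floor(p: Proposal) -> Tuple[bool, List[str]]:
    """Run every deterministic rule; any single violation rejects the proposal."""
    results = [rule(p) for rule in FLOOR]
    violations = [msg for msg in results if msg is not None]
    return (len(violations) == 0, violations)


print(enforce_floor({"type": "s3_bucket", "acl": "public-read"}))
# (False, ['S3 bucket must not grant public read'])
print(enforce_floor({"type": "s3_bucket", "acl": "private"}))  # (True, [])
```

The floor has no notion of confidence or context: it never asks why the agent proposed the change, which is exactly what makes it a safe backstop.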

2. The Filling: AI Policy Agent (Reasoning)

This is the new layer. It sits between your GitOps workflow and the cloud.

- Role: The Optimizer.

- Logic: It analyzes the intent of a change using our Deterministic Tools Portfolio to simulate performance impacts and proposes remediations.

3. The Ceiling: Human Review (Strategic)

- Role: The Judge.

- Logic: “AI never executes actions that are irreversible, externally visible, or identity-destructive without human sign-off.” A rule this explicit builds executive trust and gives auditors a concrete, testable control.
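The ceiling rule is simple enough to encode literally. The Python sketch below tags each action with three boolean properties (a hypothetical `Action` type) and holds any action matching the rule until a human approves it; how the properties get assigned to real actions is left as an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Action:
    name: str
    reversible: bool
    externally_visible: bool
    identity_destructive: bool


def requires_signoff(a: Action) -> bool:
    # The ceiling rule: irreversible, externally visible, or identity-destructive.
    return (not a.reversible) or a.externally_visible or a.identity_destructive


def execute(a: Action, approved_by: Optional[str] = None) -> str:
    if requires_signoff(a) and approved_by is None:
        return f"HELD: {a.name} awaiting human review"
    suffix = f" (approved by {approved_by})" if approved_by else ""
    return f"EXECUTED: {a.name}{suffix}"


restart = Action("restart-pod", reversible=True, externally_visible=False, identity_destructive=False)
delete_role = Action("delete-iam-role", reversible=False, externally_visible=False, identity_destructive=True)
print(execute(restart))               # EXECUTED: restart-pod
print(execute(delete_role))           # HELD: delete-iam-role awaiting human review
print(execute(delete_role, "alice"))  # EXECUTED: delete-iam-role (approved by alice)
```

Because the gate is a pure function of declared properties, auditors can test it directly without reasoning about model behavior.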

Integration Patterns: Mapping the Agent to the Cloud

We must map the AI Agent to specific layers of your infrastructure.

A. AWS SCP → Organizational Control Plane

- SCPs: Hard-stop unsafe APIs (The Floor).

- GuardDuty: Behavioral signals.

- AI Agent: Detects IAM patterns matching crypto-miners and auto-revokes permissions before the billing spike.
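One common crypto-mining signature is a burst of GPU instance launches in regions a principal has never used. The Python sketch below flags that pattern over simplified `(principal, action, region, instance_type)` tuples, a hypothetical flattening of CloudTrail records; the GPU family prefixes and the burst threshold are illustrative. The revocation step is deliberately left out: the agent would hand flagged principals to a revocation action, and the SCP floor still bounds what that action may do.

```python
from collections import Counter

# Illustrative GPU instance-family prefixes; not an exhaustive list.
GPU_PREFIXES = ("p3", "p4", "p5", "g4", "g5")


def flag_cryptominer_pattern(events, known_regions, burst_threshold=10):
    """Return principals launching a burst of GPU instances in regions they never used.

    events: simplified (principal, action, region, instance_type) tuples.
    known_regions: principal -> set of regions that principal normally uses.
    """
    gpu_launches = Counter()
    for principal, action, region, instance_type in events:
        if action != "ec2:RunInstances":
            continue
        if region in known_regions.get(principal, set()):
            continue  # launches in familiar regions are not counted
        if instance_type.startswith(GPU_PREFIXES):
            gpu_launches[principal] += 1
    return sorted(p for p, n in gpu_launches.items() if n >= burst_threshold)


events = (
    [("ci-bot", "ec2:RunInstances", "ap-southeast-2", "p4d.24xlarge")] * 12
    + [("web-app", "ec2:RunInstances", "us-east-1", "t3.micro")] * 20
)
usual = {"ci-bot": {"us-east-1"}, "web-app": {"us-east-1"}}
print(flag_cryptominer_pattern(events, usual))  # ['ci-bot']
```

A heuristic like this is noisy on its own; its value in the agent layer is as one signal combined with GuardDuty findings before any permissions are revoked.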

B. Crossplane + GitOps → The Nervous System

This is where the architecture stops being reactive and becomes systemic. By using Crossplane as your portable control plane (see our Crossplane Guide), the AI Agent becomes a “Judicial Layer.”

- Workflow: The AI intercepts the claim → Runs a simulation → Modifies the YAML for security best practices → Commits the change.
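The intercept, simulate, and modify steps can be sketched in Python on a claim that has already been parsed from YAML into a dict. The `apiVersion`, `kind`, and `spec` fields below are illustrative placeholders, not a real Crossplane XRD schema, and `simulate` is a stand-in that returns findings rather than running an actual dry-run. The commit step is only represented by the returned message; serializing back to YAML and pushing to the GitOps repo is out of scope here.

```python
import copy

# Hypothetical claim after YAML parsing; field names are placeholders.
CLAIM = {
    "apiVersion": "example.org/v1alpha1",
    "kind": "PostgresInstance",
    "metadata": {"name": "orders-db"},
    "spec": {"storageGB": 20, "publiclyAccessible": True, "encrypted": False},
}


def simulate(spec):
    """Stand-in for the simulation step: return findings instead of a real dry-run."""
    findings = []
    if spec.get("publiclyAccessible", False):
        findings.append("publiclyAccessible")
    if not spec.get("encrypted", False):
        findings.append("encrypted")
    return findings


def harden(claim):
    """Intercept -> simulate -> modify. Returns a patched copy and a commit message."""
    patched = copy.deepcopy(claim)  # never mutate the intercepted original
    findings = simulate(patched["spec"])
    if "publiclyAccessible" in findings:
        patched["spec"]["publiclyAccessible"] = False
    if "encrypted" in findings:
        patched["spec"]["encrypted"] = True
    name = claim["metadata"]["name"]
    if findings:
        message = f"security: harden {name} ({', '.join(findings)})"
    else:
        message = f"security: {name} already compliant"
    return patched, message


patched, message = harden(CLAIM)
print(message)  # security: harden orders-db (publiclyAccessible, encrypted)
```

Working on a copy and emitting a commit, rather than mutating live state, keeps every AI modification reviewable and revertible through the normal GitOps history.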

Mandatory Cost Analysis: The Financial Reality

Transitioning to AI Policy Agents is a tooling-heavy play, but our lab data shows a clear path to ROI for a mid-sized enterprise (approx. 500 devs).

Rack2Cloud Lab Simulation: Annual TCO Comparison

Static Stack (Legacy): ~$670,000 / Year

- Engineering Toil ($300k): SREs spending 30% of their time reviewing false positives.

- Incidents ($250k): Cost of downtime and remediation for “compliant but insecure” breaches.

- Tooling ($120k): Enterprise licenses for static scanning tools.

AI Agent Stack (2026): ~$490,000 / Year

- Engineering Toil ($180k): (-40%) AI handles the grunt work; SREs focus on architecture.

- Incidents ($90k): (-64%) Autonomous remediation catches issues in the PR stage.

- Tooling ($220k): (+83%) This increases. You are paying for Vector DBs, LLM Inference, and Orchestration.

FinOps Sensitivity Analysis

- What if LLM token costs double? The tooling cost rises to $240k (implying inference is roughly $20k of the $220k tooling line), but the total still remains below the Static Stack ($510k vs. $670k). The most expensive resource is engineering time, not tokens.

- Break-Even Point: ROI is typically achieved by Month 8.
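The arithmetic behind the TCO comparison and the token-cost sensitivity can be checked in a few lines of Python. The line items come from the lab figures above; the $20k inference share is derived from the “tokens double, tooling rises to $240k” scenario and is an assumption, not a reported number. The Month 8 break-even cannot be reproduced from these figures, since it also depends on one-time migration costs that are not itemized here.

```python
# Figures from the Rack2Cloud lab simulation above (USD per year).
STATIC = {"toil": 300_000, "incidents": 250_000, "tooling": 120_000}
AI_AGENT = {"toil": 180_000, "incidents": 90_000, "tooling": 220_000}

# Derived, not stated: "tokens double -> tooling $240k" implies ~$20k/year of inference.
INFERENCE = 20_000


def total(stack):
    return sum(stack.values())


def pct_change(before, after):
    return round(100 * (after - before) / before)


def ai_total(token_multiplier):
    """AI stack total if only the inference share scales with token price."""
    return total(AI_AGENT) + INFERENCE * (token_multiplier - 1)


def parity_multiplier():
    """Token-price multiplier at which the AI stack costs as much as the static stack."""
    return 1 + (total(STATIC) - total(AI_AGENT)) / INFERENCE


print(total(STATIC), total(AI_AGENT))                           # 670000 490000
print({k: pct_change(STATIC[k], AI_AGENT[k]) for k in STATIC})  # {'toil': -40, 'incidents': -64, 'tooling': 83}
print(ai_total(2), parity_multiplier())                         # 510000 10.0
```

Under that assumed inference share, token prices would have to rise roughly tenfold before the AI stack cost as much as the static stack, which supports the point that engineering time, not tokens, dominates the budget.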

Where AI Guardrails Fail — And How Teams Get Burned

We must address the elephant in the room. AI Agents are not magic, and they introduce new failure domains that you must architect around.

1. Model Drift & Hallucinations

An LLM might interpret a legacy configuration pattern as a vulnerability because it was trained on “clean” 2025 codebases.

- Mitigation: The “Sandwich Architecture.” The AI can propose a fix, but if that fix fails the static unit tests, it is rejected.

2. Data Poisoning

If an attacker can manipulate the logs the agent consumes as context or training data, they can trick the agent into ignoring malicious traffic.

- Mitigation: Use Read-Only, tamper-proof sinks (like immutable storage) for the AI’s context window.
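Immutable storage prevents rewrites at the storage layer; a hash chain adds tamper evidence that the agent itself can check before trusting its context. The Python sketch below is a swapped-in technique, not part of the original architecture: each log entry commits to the hash of the previous one, so editing or reordering entries (or deleting one from the middle) breaks verification. Truncating the tail is not detected unless the latest hash is anchored somewhere else, which is why this complements, rather than replaces, a tamper-proof sink.

```python
import hashlib
import json

GENESIS = "0" * 64


def _digest(prev_hash, record):
    body = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(chain, record):
    """Append a log record, linking it to the hash of the previous entry."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "prev": prev, "hash": _digest(prev, record)})


def verify(chain):
    """Recompute every link; editing, reordering, or deleting a non-final entry fails."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


log = []
append(log, {"src": "svc-a", "dst": "svc-b", "bytes": 512})
append(log, {"src": "svc-a", "dst": "svc-z", "bytes": 9_000_000})
print(verify(log))  # True
log[1]["record"]["bytes"] = 10  # an attacker "cleans" the suspicious flow
print(verify(log))  # False
```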

3. The “Non-Deterministic” Audit Failure

Auditors hate “maybe.” If you tell an auditor, “The AI decides based on context,” expect to fail your SOC 2 audit.

- Mitigation: Every AI action must generate a deterministic “Reasoning Artifact”—a JSON log file explaining exactly why it took the action, anchored to a specific policy version.
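“Deterministic” here means that the same decision inputs always produce byte-identical output, so an auditor can recompute and compare it. A minimal Python sketch, assuming hypothetical field names (`action`, `target`, `policy`, `evidence`, `decided_at`): the timestamp is passed in rather than read from the clock, evidence is sorted, and the JSON is canonicalized before hashing.

```python
import hashlib
import json


def reasoning_artifact(action, target, policy_id, policy_version, evidence, decided_at):
    """Build a canonical JSON artifact; identical inputs always yield identical bytes."""
    artifact = {
        "action": action,
        "target": target,
        "policy": {"id": policy_id, "version": policy_version},
        "evidence": sorted(evidence),  # order-independent
        "decided_at": decided_at,      # passed in, never read from the clock here
    }
    canonical = json.dumps(artifact, sort_keys=True, separators=(",", ":"))
    artifact["digest"] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return artifact


a = reasoning_artifact(
    "quarantine", "pod/payments-7f9", "NET-022", "v3.4.1",
    ["first-seen edge svc-a->svc-z", "outbound ratio 12.0"],
    "2026-01-15T10:02:33Z",
)
print(json.dumps(a, sort_keys=True, indent=2))
```

Anchoring the artifact to `policy.version` means a later policy change cannot silently reinterpret an old decision: a different version produces a different digest.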

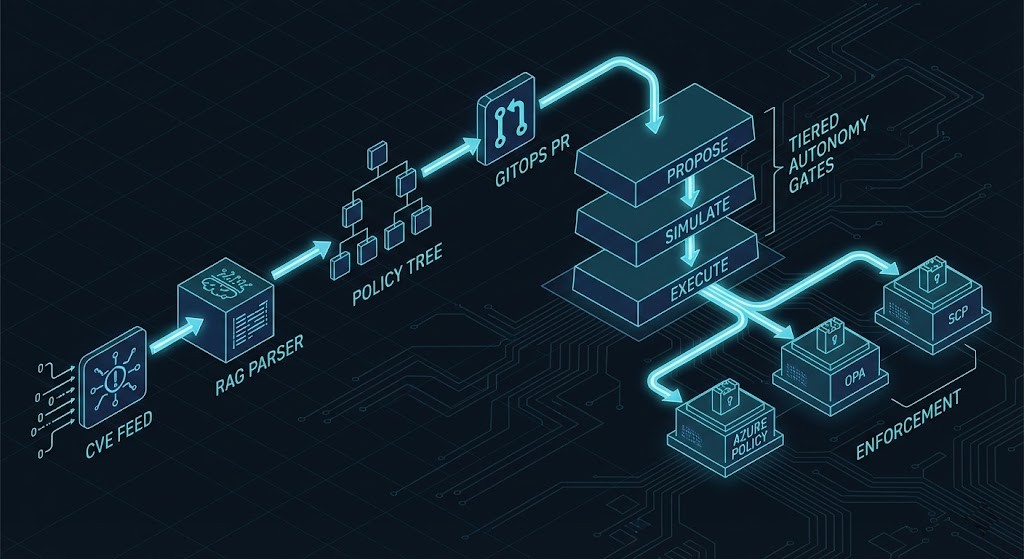

Production Workflow: The 7-Minute Fix

Theoretical architecture is fine, but does it work? We tested this workflow in our lab environment on a 10K-node simulation cluster.

The Rack2Cloud Lab Benchmark:

- 00:00 – CVE Feed: A new container vulnerability is published.

- 00:05 – RAG Parse: The AI Agent ingests the CVE.

- 01:20 – Estate Scan: The agent identifies 45 affected pods.

- 03:15 – GitOps PR: The agent generates a Pull Request with the updated image tag and a “Reasoning JSON.”
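The estate-scan and PR steps of the timeline can be sketched in Python once the CVE has been parsed into a structured record; the RAG parsing itself is assumed to have happened already. `ParsedCve`, the pod inventory format, and the PR payload shape are hypothetical, and `CVE-EXAMPLE-0001` is a placeholder rather than a real advisory. Image parsing is simplified and ignores `@sha256` digest references.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class ParsedCve:
    cve_id: str
    repository: str            # image repository the advisory applies to
    vulnerable_tags: Set[str]
    fixed_tag: str


def scan_estate(pods: List[Dict[str, str]], cve: ParsedCve) -> List[str]:
    """Return names of pods running a vulnerable tag of the affected repository."""
    affected = []
    for pod in pods:
        repo, _, tag = pod["image"].rpartition(":")  # simplified: ignores @sha256 digests
        if repo == cve.repository and tag in cve.vulnerable_tags:
            affected.append(pod["name"])
    return affected


def build_pr(cve: ParsedCve, affected: List[str]) -> dict:
    """Assemble a PR payload plus the reasoning record that accompanies it."""
    new_image = f"{cve.repository}:{cve.fixed_tag}"
    return {
        "title": f"Bump {cve.repository} to {cve.fixed_tag} ({cve.cve_id})",
        "changes": {name: new_image for name in affected},
        "reasoning": {"cve": cve.cve_id, "affected_pods": len(affected)},
    }


cve = ParsedCve("CVE-EXAMPLE-0001", "registry.example.com/payments", {"1.4.0", "1.4.1"}, "1.4.2")
pods = [
    {"name": "payments-1", "image": "registry.example.com/payments:1.4.1"},
    {"name": "payments-2", "image": "registry.example.com/payments:1.4.2"},
    {"name": "orders-1", "image": "registry.example.com/orders:1.4.1"},
]
print(build_pr(cve, scan_estate(pods, cve)))
```

The PR still lands in the normal review flow, so the static floor and the human ceiling apply to it like any other change.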

Result: 7 minutes and 42 seconds. The manual equivalent in our control tests took 48 hours.

Architect’s Action Plan

- Week 1: Inventory your OPA/Azure Policy coverage gaps. Identify the “Exception Black Holes.”

- Week 2: Deploy an AI agent in Advisory Mode (PR suggestions only).

- Month 1: Enable auto-remediation for non-PII, dev-environment resources.

- Quarter 1: Review audit logs. If the AI’s “Confidence Score” is >99%, move that policy category to production autonomy.
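The Quarter 1 promotion check can be expressed as a small filter over the advisory-mode audit log. This Python sketch assumes the log yields `(policy_category, confidence)` pairs and interprets “Confidence Score >99%” as the mean per category; both the mean and the `min_samples` floor are assumptions, and a stricter team might require the minimum confidence instead.

```python
from collections import defaultdict


def categories_ready_for_autonomy(decisions, threshold=0.99, min_samples=100):
    """decisions: (policy_category, confidence) pairs from the advisory-mode audit log.

    A category qualifies when its mean confidence exceeds the threshold and it has
    enough history for the mean to be meaningful (min_samples is an assumption).
    """
    scores = defaultdict(list)
    for category, confidence in decisions:
        scores[category].append(confidence)
    return sorted(
        cat for cat, vals in scores.items()
        if len(vals) >= min_samples and sum(vals) / len(vals) > threshold
    )


audit_log = [("tagging", 0.995)] * 150 + [("network", 0.97)] * 150 + [("iam", 0.999)] * 10
print(categories_ready_for_autonomy(audit_log))  # ['tagging']
```

The sample-size floor keeps a category with a handful of lucky decisions, like `iam` here, from being promoted on thin evidence.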

Conclusion: Policy Is Becoming a Living System

In 2026, success isn’t defined by a “No” machine. It’s defined by a system that understands risk, acts before the page rings, and learns from every drift. Static policies gave us brakes; AI policy agents deliver steering and collision avoidance. We are no longer just writing rules—we are designing autonomous control systems.

External Research & Citations

- CNCF: The Four Pillars of Autonomous Cloud Native (2026 Forecast)

- NIST: SP 800-204C: Implementation of DevSecOps for a Microservices-based Application with Service Mesh

- Rack2Cloud Labs: 2026 State of Autonomous Cloud Report (Internal Benchmark)

- arXiv: From Governance Rules to Guardrails: Probabilistic Policy Enforcement

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.