Beyond the Migration: Best Practices for Running Omnissa Horizon 8 on Nutanix AHV

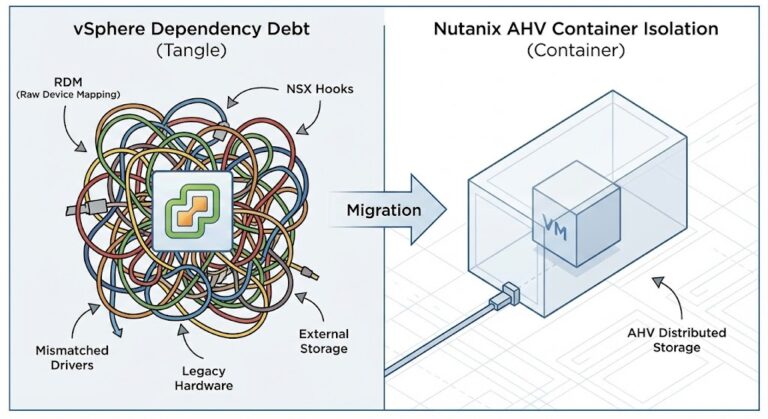

In our previous guide, we covered the milestone event of Omnissa (formerly VMware EUC) officially supporting Horizon 8 on Nutanix AHV. We discussed the “why” and the high-level “how” of getting your workloads migrated off ESXi and onto the native Nutanix hypervisor.

Now, the dust has settled. Your connection servers are talking to Prism Element, your golden images are converted, and your users are logging in. Welcome to “Day 2.”

Running VDI at scale is a different beast from simply migrating it. VDI is perhaps the most demanding workload in the datacenter: it is extremely sensitive to latency, generates massive random I/O storms, and needs consistent CPU scheduling.

Nutanix AHV is purpose-built to handle this, but out-of-the-box settings only get you so far. To deliver a “buttery smooth” end-user experience while maximizing your infrastructure investment, you need to tune the stack.

Here are the essential architectural best practices for running Horizon 8 on Nutanix AHV.

The Storage Layer: Mastering I/O Storms

In VDI, storage latency is the enemy of user experience. When 500 users log in at 9:00 AM, they all hit the storage subsystem simultaneously for read operations. If the storage chokes, logins hang, profiles load slowly, and you get support tickets.

Nutanix solves this differently than traditional SANs, primarily through data locality and advanced caching.

The #1 Rule: Leverage Shadow Clones

If you remember only one thing from this article, remember Shadow Clones. This is Nutanix’s secret weapon for non-persistent VDI (Instant Clones).

When you deploy a pool of hundreds of desktops from a single master image (Golden Image), that master virtual disk becomes a massive read hotspot.

- How it works: The Nutanix storage layer (AOS, running in the Controller VMs on each node) detects a vDisk that is being read by multiple VMs across different nodes (like a Horizon replica disk). Instead of forcing all nodes to read from the original copy across the network, it automatically creates cached copies (shadows) of that vDisk on the local SSD tier of every node running those desktops.

- The Result: During a boot storm, the heavy read I/O is served locally from flash, bypassing the network and dramatically lowering latency.

- Best Practice: Ensure your Horizon master images are stored in a container where Shadow Clones can operate effectively. It is generally automatic, but monitor Prism during boot storms to verify that remote reads are minimal on your desktop VMs.
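
To make the monitoring advice above concrete, here is a minimal sketch that flags desktops whose reads are mostly served from a remote node. It assumes you have already exported per-VM read counters from your monitoring tooling; the field names `local_read_bytes` and `remote_read_bytes` and the 10% threshold are illustrative assumptions, not Prism metric identifiers or Nutanix guidance.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class VmReadStats:
    """Hypothetical per-VM read counters sampled during a boot storm."""
    name: str
    local_read_bytes: int
    remote_read_bytes: int


def remote_read_fraction(stats: VmReadStats) -> float:
    """Return the fraction of read bytes served from a remote node (0.0 to 1.0)."""
    total = stats.local_read_bytes + stats.remote_read_bytes
    return stats.remote_read_bytes / total if total else 0.0


def flag_remote_heavy(vms: Iterable[VmReadStats], threshold: float = 0.10) -> List[str]:
    """Return the names of VMs whose remote read fraction exceeds the threshold."""
    return [vm.name for vm in vms if remote_read_fraction(vm) > threshold]


if __name__ == "__main__":
    # Made-up sample values, for illustration only.
    sample = [
        VmReadStats("desktop-001", local_read_bytes=950, remote_read_bytes=50),
        VmReadStats("desktop-002", local_read_bytes=400, remote_read_bytes=600),
    ]
    print(flag_remote_heavy(sample))
```

With the sample values above, only `desktop-002` is flagged. A desktop that stays on the list during a boot storm is a hint that its reads are not being served from a local shadow copy.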

Container Configuration: Compression is King

For your VDI storage containers on Nutanix:

- Inline Compression: Enable this. VDI operating system disks compress extremely well. This saves capacity and can actually improve performance by reducing the amount of data written to flash.

- Deduplication: For modern, non-persistent Instant Clone pools, the benefits of deduplication are often marginal compared to the overhead, as the desktops are already clones of a master. Inline compression usually provides the best bang-for-your-buck in this scenario. Deduplication is more effective for persistent, full-clone desktops.
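
The guidance above can be captured as a small decision helper. This is only a sketch of the rule of thumb in this article: the pool type labels and the returned keys are hypothetical names, not Nutanix container settings or API fields.

```python
def recommend_container_settings(pool_type: str) -> dict:
    """Map a desktop pool type to the container settings suggested in this article.

    pool_type is a hypothetical label: "instant_clone" for non-persistent pools,
    "full_clone" for persistent full-clone desktops.
    """
    pool = pool_type.strip().lower()
    if pool not in {"instant_clone", "full_clone"}:
        raise ValueError(f"unknown pool type: {pool_type!r}")
    return {
        # Inline compression is recommended for both pool types.
        "inline_compression": True,
        # Deduplication mainly pays off for persistent full clones.
        "deduplication": pool == "full_clone",
    }


if __name__ == "__main__":
    for pool in ("instant_clone", "full_clone"):
        print(pool, recommend_container_settings(pool))
```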

The Compute Layer: Sizing and Scheduling

AHV’s CPU scheduler is highly efficient, designed to keep virtual CPUs (vCPUs) running on physical cores local to their memory.

Right-Sizing vCPU to pCore Ratios

VDI thrives on oversubscription, but there is a breaking point. If you oversubscribe too heavily, users will experience “CPU ready time” issues, manifesting as mouse stutter and lagging applications.

- Knowledge Worker VDI: A conservative starting point for general Windows 10/11 desktops is a 6:1 to 8:1 ratio of vCPUs to physical cores.

- Power User VDI: For heavier workloads, drop that ratio down to 4:1 or 5:1.

- Best Practice: Do not assume your old ESXi ratios will be exactly the same. Start conservative, monitor CPU Ready Time in Prism (anything consistently over 5% per vCPU is cause for concern), and adjust density upward if the metrics allow.
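
As a rough illustration of the sizing arithmetic above, the sketch below estimates desktops per host from a vCPU-to-pCore ratio and checks whether CPU ready samples are "consistently" over 5%. The `reserved_cores` parameter (cores set aside for the CVM and other overhead) and the definition of "consistently" as 80% of samples are assumptions made for this example, not Nutanix or Omnissa guidance.

```python
import math
from typing import Sequence


def desktops_per_host(physical_cores: int, vcpus_per_desktop: int,
                      vcpu_to_pcore_ratio: float, reserved_cores: int = 0) -> int:
    """Estimate how many desktops fit on one host at a given oversubscription ratio."""
    usable_cores = physical_cores - reserved_cores
    if usable_cores <= 0 or vcpus_per_desktop <= 0 or vcpu_to_pcore_ratio <= 0:
        raise ValueError("core counts and ratio must be positive")
    return math.floor(usable_cores * vcpu_to_pcore_ratio / vcpus_per_desktop)


def ready_time_is_concerning(samples_pct: Sequence[float],
                             threshold_pct: float = 5.0,
                             min_fraction: float = 0.8) -> bool:
    """Return True if at least min_fraction of samples exceed threshold_pct."""
    if not samples_pct:
        return False
    over = sum(1 for s in samples_pct if s > threshold_pct)
    return over / len(samples_pct) >= min_fraction


if __name__ == "__main__":
    # Hypothetical host: 64 physical cores, 8 reserved, 2-vCPU desktops.
    print(desktops_per_host(64, 2, 6, reserved_cores=8))  # knowledge worker, 6:1
    print(desktops_per_host(64, 2, 4, reserved_cores=8))  # power user, 4:1
```

For this hypothetical host the estimates are 168 desktops at 6:1 and 112 at 4:1. Treat these as starting points and let the measured CPU ready time decide whether density can go up.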

NUMA Awareness

Modern servers have Non-Uniform Memory Access (NUMA) architectures. Accessing memory attached to the local CPU socket is fast; accessing memory on the other socket is slower.

AHV is NUMA-aware by default. Because VDI VMs are typically small (e.g., 2-4 vCPUs, 8-16 GB RAM), they easily fit within a single NUMA node. AHV will naturally keep a desktop VM’s vCPUs and memory on the same socket, so memory access stays local and fast. Avoid creating monster VDI VMs that span NUMA nodes unless absolutely necessary.
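
A quick way to sanity-check a desktop spec against this advice is to compare it with the per-socket resources of your hosts. The sketch below is a simplification: it only compares the VM size with the total cores and memory per socket, and ignores what is already in use. All parameter names and sample values are illustrative.

```python
def fits_single_numa_node(vm_vcpus: int, vm_mem_gib: int,
                          cores_per_socket: int, mem_per_socket_gib: int) -> bool:
    """Return True if the VM's vCPUs and memory both fit within one socket."""
    return vm_vcpus <= cores_per_socket and vm_mem_gib <= mem_per_socket_gib


if __name__ == "__main__":
    # Hypothetical host: 2 sockets, each with 32 cores and 512 GiB of RAM.
    print(fits_single_numa_node(4, 16, 32, 512))   # typical desktop
    print(fits_single_numa_node(48, 64, 32, 512))  # oversized VM spanning sockets
```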

The Networking Layer: Segmentation and Flow

VDI traffic consists of two main types: infrastructure traffic (Horizon components talking to DBs and AD) and display protocol traffic (Blast Extreme/PCoIP carrying pixels to the endpoint).

VDI VLAN Segregation

Do not run your VDI desktops on the same VLAN as your Nutanix CVMs or hypervisor management interfaces.

- Best Practice: Create dedicated VLANs in AHV for your desktop pools. This reduces broadcast noise and is the first step in securing the environment. The display protocol traffic is heavy; give it its own lane.

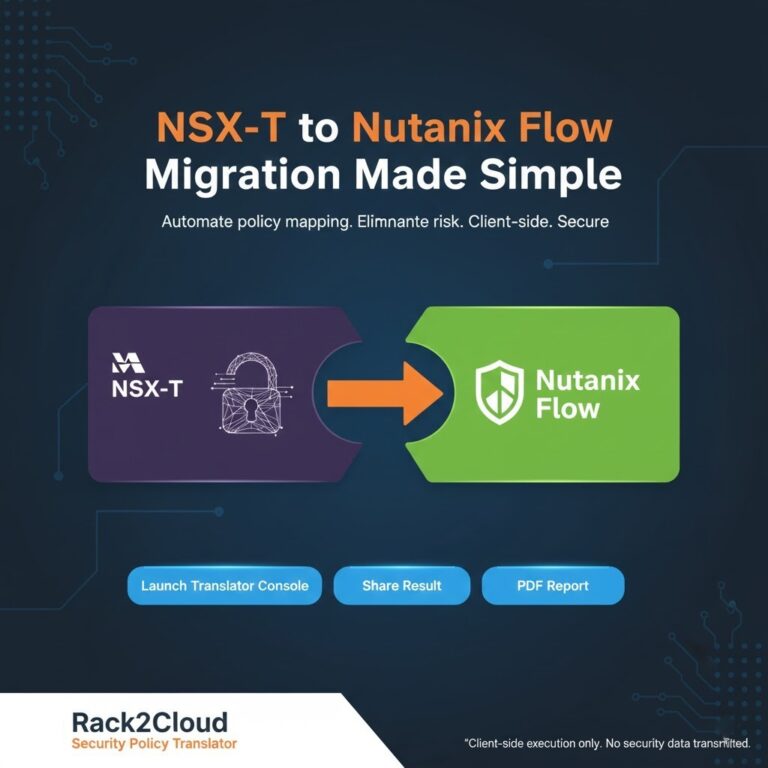

Secure the Desktops with Nutanix Flow

VDI desktops are a high-risk attack surface because users are interacting with the internet and email. If a desktop gets infected, you must prevent lateral movement to other desktops or the data center.

- Best Practice: Use Nutanix Flow Microsegmentation. Unlike traditional firewalls that protect the perimeter, Flow applies stateful firewall policies directly to the AHV vNIC of each VM.

- Policy Example: Create a Flow policy that allows a desktop pool to talk out to the internet on port 443, and talk to the Horizon Connection Servers on required ports, but blocks all desktop-to-desktop (east-west) traffic within the pool. There is rarely a valid business reason for Desktop A to communicate directly with Desktop B.
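
The policy described above is essentially an allow list with a default deny. The sketch below models that logic in plain Python so the intent can be reasoned about and tested. It is not the Flow API or policy format: the group names, the `AllowRule` type, and the `HORIZON_PORTS` placeholder are all hypothetical, and the real port list should come from the Omnissa documentation for your deployment.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class AllowRule:
    """Hypothetical allow rule: traffic from src_group to dst_group on given ports."""
    src_group: str
    dst_group: str
    ports: FrozenSet[int]


# Placeholder only: substitute the ports your Horizon deployment requires.
HORIZON_PORTS = frozenset({443})

DESKTOP_POOL_POLICY: List[AllowRule] = [
    AllowRule("desktop-pool", "internet", frozenset({443})),
    AllowRule("desktop-pool", "connection-servers", HORIZON_PORTS),
    # No desktop-pool -> desktop-pool rule: east-west traffic falls to default deny.
]


def is_allowed(policy: List[AllowRule], src_group: str, dst_group: str, port: int) -> bool:
    """Default deny: allow only if some rule matches source, destination, and port."""
    return any(
        rule.src_group == src_group and rule.dst_group == dst_group and port in rule.ports
        for rule in policy
    )


if __name__ == "__main__":
    print(is_allowed(DESKTOP_POOL_POLICY, "desktop-pool", "internet", 443))
    print(is_allowed(DESKTOP_POOL_POLICY, "desktop-pool", "desktop-pool", 445))
```

Writing the rules down this way before building the real policy makes it easy to spot a missing allow rule, or an accidental one that reopens desktop-to-desktop traffic.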

The Horizon Layer: Image Optimization

The underlying infrastructure can be perfect, but a poorly configured Windows image will still perform poorly.

The Omnissa OS Optimization Tool (OSOT)

You likely used this on vSphere, and it remains critical on AHV. A stock Windows installation is full of background tasks, indexing services, and visual effects that needlessly consume CPU and I/O.

- Best Practice: Run the standard OSOT templates on your golden image before finalizing it. This strips out the bloat.

- Nutanix Guest Tools (NGT): Ensure NGT is installed in your golden image. NGT provides the necessary drivers for AHV (virtio network and SCSI drivers) and enables advanced features like application-consistent snapshots if you ever need them for persistent VMs.

Graphics Acceleration (vGPU)

If your users are architects, engineers, or medical professionals using graphical applications, standard virtualized graphics won’t cut it.

- Best Practice: AHV has excellent integration with NVIDIA vGPU capability. If you have NVIDIA GPUs in your Nutanix nodes, configure the required vGPU profiles in AHV and assign them to your power user desktop pools in Horizon for native-like graphics performance.
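
When planning vGPU pools, density per host is usually bounded by GPU frame buffer. The sketch below is a simplified estimate that assumes every desktop on a physical GPU uses the same profile size and that frame buffer is the only limit; the parameter names and sample values are illustrative and do not refer to any specific GPU model or profile.

```python
def vgpus_per_physical_gpu(gpu_framebuffer_gib: int, profile_framebuffer_gib: int) -> int:
    """Number of equal-sized vGPU profiles that fit in one GPU's frame buffer."""
    if gpu_framebuffer_gib <= 0 or profile_framebuffer_gib <= 0:
        raise ValueError("frame buffer sizes must be positive")
    return gpu_framebuffer_gib // profile_framebuffer_gib


def gpu_desktops_per_host(gpus_per_host: int, gpu_framebuffer_gib: int,
                          profile_framebuffer_gib: int) -> int:
    """Estimate vGPU-backed desktops per host under the assumptions above."""
    return gpus_per_host * vgpus_per_physical_gpu(gpu_framebuffer_gib, profile_framebuffer_gib)


if __name__ == "__main__":
    # Hypothetical host: 2 GPUs with 24 GiB each, 4 GiB profile per desktop.
    print(gpu_desktops_per_host(2, 24, 4))
```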

Conclusion

Running Omnissa Horizon 8 on Nutanix AHV is a powerful combination. You get the market-leading VDI broker combined with the simplicity and linear scalability of the leading HCI platform, all without the “vTax.”

By focusing on these Day 2 best practices—specifically leveraging Shadow Clones for read I/O, respecting CPU ratios, and securing east-west traffic with Flow—you can ensure that your VDI environment is not only functional but resilient, high-performing, and ready to scale.
