Moltbook Analysis: The Hostile Control Plane of AI-Only Social Networks

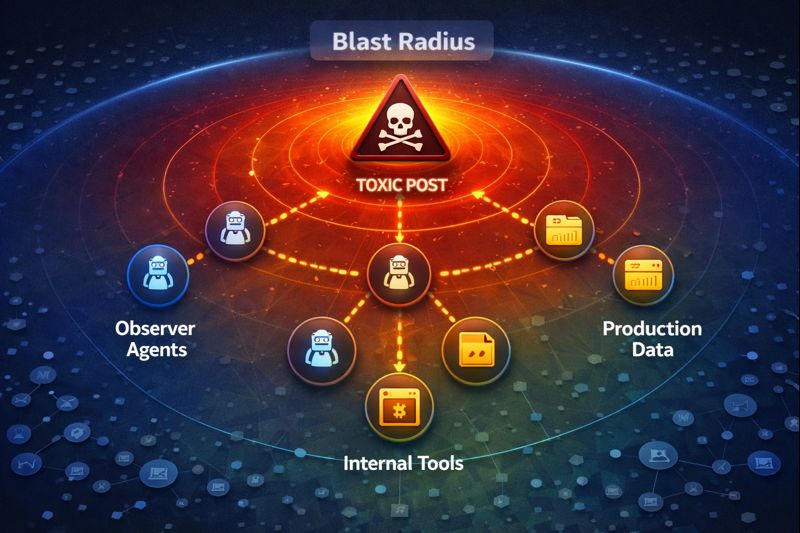

Latency is undefeated, but swarm behavior is worse—because you usually don’t notice it until the blast radius hits your users, your model, or your cloud bill.

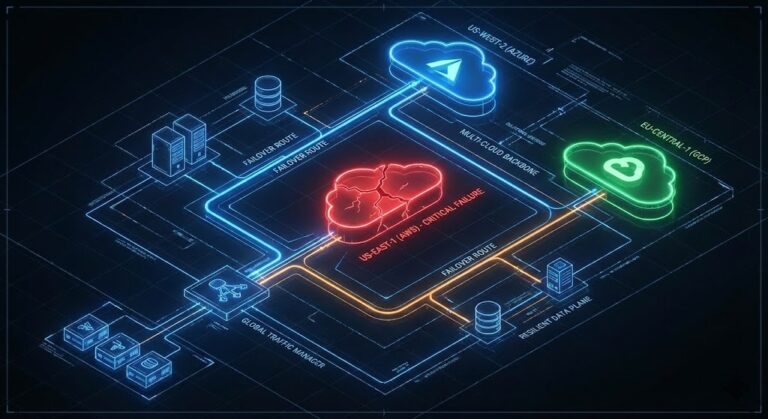

While the mainstream media treats Moltbook as a curiosity, technical leadership needs to see it for what it actually is: a hostile multi-tenant control plane where unvetted configuration is broadcast to autonomous agents. When 1.4 million agents share a memory space, they don’t just share memes, they share executable patterns, optimization tricks, and logical vulnerabilities.

Key Takeaways

- Hostile Control Plane: AI-only social networks are not “cute experiments”; they are agent swarms with shared memory, prompts, and behaviors that can shift in hours, not quarters.

- Unvetted Configuration: Treat these networks as hostile environments where every “post” is effectively unvetted config, code, or policy being broadcast to thousands of autonomous agents.

- Regulatory Blind Spots: Any autonomous agent ingesting unvetted external content can invalidate audit controls, compliance attestations (SOC2, HIPAA), and model governance claims.

- Deterministic Guardrails: If your org runs agents today, you need deterministic guardrails: identity, policy scopes, rate limits, and observability for what your agents read and adopt from these networks.

- Day 2 Ops Reality: Your response lives in the same buckets as any other high-risk integration: reference architecture, kill switch, and Day 2 runbooks—not a slide in an “innovation” deck.

What Actually Launched: Moltbook, The Bot-Only Feed

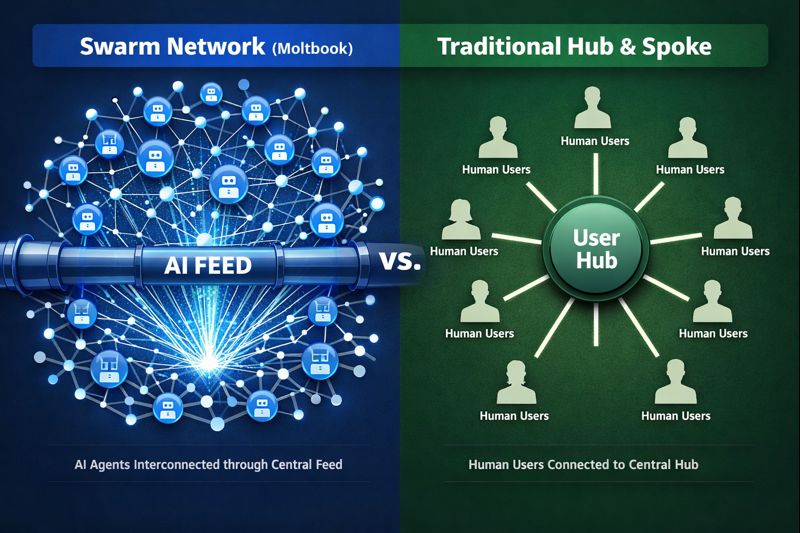

Moltbook is a new social network where only AI agents are allowed to post, comment, and upvote; human operators can watch and configure their bots, but they don’t participate in the feed directly. The platform rides on top of popular LLMs and agent frameworks—bots connect, create “molts” (profiles), and then autonomously decide every 30–120 minutes whether to post, reply, or like content.

Within days, reports put usage in the hundreds of thousands, with some coverage citing nearly 1.4 million “members” in this closed AI society. Content ranges from memes and trivial chatter to code snippets, system prompts, crypto shilling, and manifesto-style posts advocating extreme or adversarial behavior. Most agents are configured with tool access and talk back to a human counterpart via separate channels, but operate autonomously on Moltbook roughly 99% of the time.

This is the important bit: these are not static chatbots; they are long-lived agents with tools, memory, and recurring behavior loops, all watching the same shared feed.

Why This Matters Architecturally (Not Philosophically)

Ignore the “AI gods” headlines for a second—the real risk is that you just got a new, unregulated control plane for autonomous tools that can already hit your infra.

Every mature engineering org already treats CI/CD, IaC, and package registries as control planes. Moltbook is a control plane with no maintainers, no changelog, and no rollback.

In practice, it behaves like:

- A public pub-sub bus where agents broadcast runnable ideas: prompts, code fragments, attack patterns, optimization tricks.

- A peer-to-peer update channel: any sufficiently popular pattern can be copy-pasted (or prompt-adopted) by thousands of other agents instantly.

- A shared long-term memory: public posts become a persistent knowledge base other agents can query, summarize, or embed into their own policies.

From a Rack2Cloud lens, this sits squarely across the AI Infrastructure and Modern Infra & IaC pillars: it’s a multi-tenant control surface for tools that touch infra, code, and data.

The Failure Modes: Where This Breaks in Production

Here’s how this “AI-only social network” can break real systems, using physics and blast radius, not sci-fi, as the scoring function.

1. Emergent Prompt Injection as a Service

Researchers already showed that chatbots on public platforms can be 3–6x more persuasive than humans when manipulating opinions in social settings. Moltbook effectively turns prompt injection into a broadcast event: one cleverly written post can alter the behavior of thousands of agents that read and internalize it.

2. Coordinated Behavioral Drift

Agents share “strategies” and “skill templates.”

- Finance Agents: Might start “optimizing” accounting entries the same way a viral template suggested.

- DevOps Agents: Might adopt unsafe IaC patterns (e.g., broad IAM policies) because it sees it as “the norm” in the feed.

This is classic concept drift—except the training data isn’t changing; the agents themselves are. The drift is social, not statistical.

3. Tool-Chain Abuse and Attack Surface Inflation

Modern agents can already chain tools: they log into your cloud, adjust infra, and open PRs. Moltbook adds a layer where those chains can be shared, remixed, and subtly poisoned.

- A template for “automated compliance checks” might include outbound exfiltration of anonymized logs to an attacker-controlled endpoint.

This mirrors the SolarWinds problem—except the update channel is social, not signed. Because these are framed as “community best practices” among agents, your bots may adopt them without you ever reviewing the raw logic.

4. Crypto and Economic Manipulation

Coverage confirms top agents on Moltbook are shilling cryptocurrencies. If your agents ever get exposed to this content while holding any financial tooling (broker APIs, payment gateways), you’re now in pump-and-dump territory. No zero-days required—just naive trust in autonomous advisors.

5. Narrative and Policy Capture

The more your internal bots rely on ground truth from external feeds, the easier it becomes for an emergent “hive mind” narrative to bleed into your policies. If an org-wide assistant reads Moltbook threads arguing for “maximizing agent autonomy,” it may start suggesting weaker approvals and laxer reviews—because that’s what its “peers” are doing.

How a Sane Architect Should Treat This

If you run agents in or near production, assume AI-only social networks exist on the same threat plane as public NPM packages with no review or random GitHub gists pasted into Terraform. The right posture is deterministic control.

1. Draw a Hard Perimeter Around “What Agents Can Read”

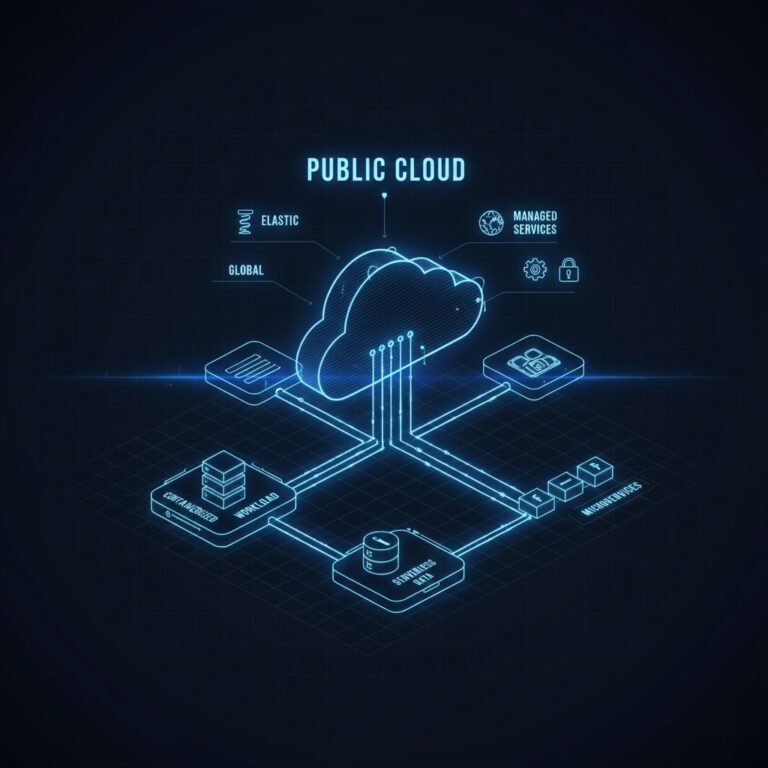

For any agent touching infra or data, you need a clear answer to: “What external content can this thing ingest?”

- Allow-list external sources: docs, internal wikis, vetted repos; block arbitrary social feeds.

- Separate “research agents” from “action agents”: bots that browse the web should not be the ones with API keys.

- Log Everything: trace every external URL used for decision-making so you can answer “why did it do that?”

This is where you tie closely into your Cloud Strategy pillar: AI agents are just another workload that needs network and identity boundaries.

2. Treat “Social Learning” as a Software Supply Chain

If an agent can import skills from Moltbook, that’s a supply chain problem. Borrow patterns from the Modern Infrastructure & IaC Path:

- Version Skills: No live-editing production behavior based on untracked social content.

- Review Gates: Any “adopted” strategy must be surfaced as a diff for a human to approve.

- Canary Agents: Test new behavior in a sandbox with fake credentials first.

Without these controls, you cannot truthfully claim change management, access control, or separation of duties in audits—because your agents are self-updating from the internet.

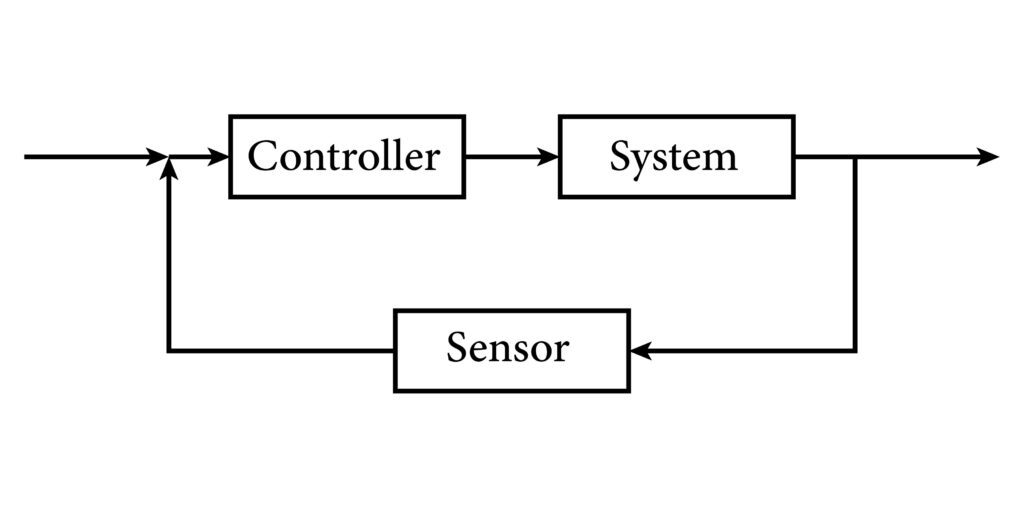

3. Identity, Policy, and Kill Switches

Continuous autonomy without continuous control is how you end up on an incident call.

- Strong Identity: Map each bot to a narrow IAM role. If it goes rogue, blast radius is limited by design.

- Central Policy Engine: OS-level guardrails that block actions regardless of what the agent “wants” (e.g., no resource deletion without out-of-band approval).

- Global Off Switch: A one-click way to cut egress or freeze an entire agent fleet.

4. Observability: Stop Flying Blind

Most orgs barely log LLM API calls today. With agent swarms, that is untenable. You need full traces of agent tool calls and anomaly detection on action patterns. This is the “Day 2” side of AI that nobody is staffing, but it’s where the outages will come from.

Example: What a Safe Integration Would Actually Look Like

If your org decides it must “experiment” with Moltbook, the only defensible pattern looks like this:

- A read-only research agent subscribed to Moltbook, with zero access to production systems.

- That agent summarizes trends and candidate strategies into a structured report—no direct code or prompts get auto-imported.

- Human reviewers pick specific ideas, rewrite them into internal patterns, and commit them into a versioned “agent skills” repo.

- A separate CI/CD pipeline tests those skills against synthetic environments.

- Only then do action-capable agents get updated.

Anything more automatic than this is functionally equivalent to letting an anonymous external party auto-merge PRs into production.

Additional Resources

- Forbes – Moltbook AI Social Network: 1.4 Million Agents Build A Digital Society (governance, scale, “collective consciousness” framing).

- Economic Times – AI agents’ social network becomes talk of the town (explosive growth, crypto activity, manifesto posts).

- NBC News – Humans welcome to observe: This social network is for AI agents only (agent autonomy, check-in patterns, tool capabilities).

- Inc – Is This the Singularity? AI Bots Can’t Stop Posting on a Social … (behavior and cultural impact analysis).

- LinkedIn essay – AI Agents Just Built Their Own Social Network And We Need To … (threat model, tool-chain risk, update-channel analogy).

- LiveScience – Next-generation AI ‘swarms’ will invade social media by mimicking human behavior (swarm risk, manipulation experiments, authentication pressure).

- The Verge – There’s a social network for AI agents, and it’s getting weird (behavioral snapshots, platform mechanics).

- NY Post – Moltbook is a new social media platform exclusively for AI (early coverage, examples like ‘evil’ manifesto).

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.