Proxmox vs VMware in 2026: A Migration Playbook That Actually Works


The “Proxmox curiosity” of 2023 has evolved into the “Proxmox mandate” of 2026. After two years of Broadcom’s portfolio “simplification”—which felt more like a hostage negotiation for mid-market IT—architects are no longer asking if they should move, but how to do it without losing their weekends.

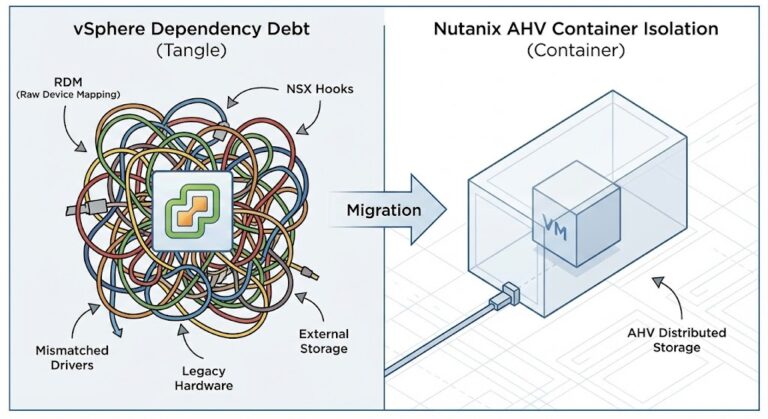

I’ve spent 15+ years watching hypervisor wars. The mistake most teams make is treating a Proxmox migration like a simple “V2V” task. It’s not. It’s a shift from a proprietary “black box” ecosystem to an open, Debian-based stack where you are finally back in the driver’s seat—but you’d better know how to drive a stick shift.

Key Takeaways

- Network Is the Bottleneck: Skip live imports over the management LAN. Deploy a dedicated 10GbE+ migration VLAN so bulk copies cannot starve Corosync and trigger node fencing.

- VirtIO Is the Gatekeeper: 95% of Windows boot failures trace to missing VirtIO SCSI drivers. Pre-inject them in VMware or embrace the BSOD.

- The Big VM Problem: Multi-TB SQL and file servers time out in the native Import Wizard. Use a Clonezilla block-level sync instead.

- Snapshot Chains Kill Imports: vCenter hides delta-disk nightmares, so consolidate ALL snapshots first.

- Cache Mode Pivot: Post-migration, set each VM disk’s cache mode explicitly (see Phase 5) or watch SQL IOPS tank.

Phase 1: Brownfield Discovery (The Snapshot Audit)

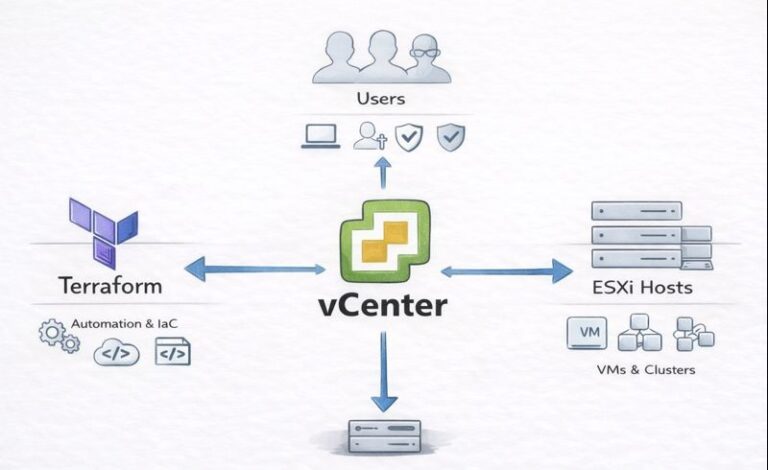

VMware vCenter hides sins that break KVM imports. Proxmox’s ESXi Import Wizard (significantly enhanced in VE 9.x) still chokes on complex snapshot chains.

The Snapshot Audit

- The Trap: A production VM running on a “delta” disk from a backup snapshot taken three years ago. The hypervisor is reading a 50-file chain.

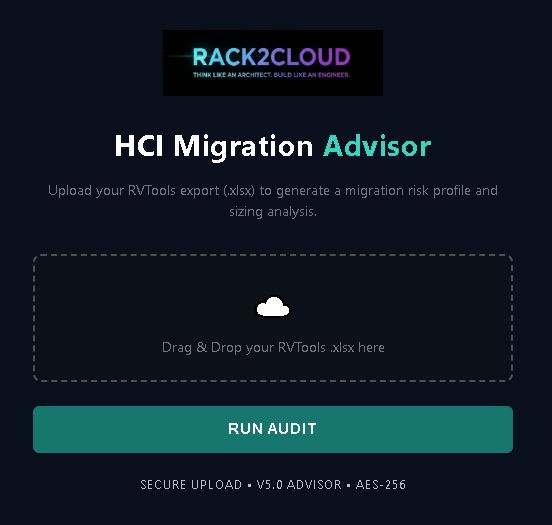

- The Fix: You cannot migrate a delta. You must Consolidate/Delete All snapshots in vSphere before the migration window. If the HCI Migration Advisor flags a locked snapshot, you must clone the VM to a new entity to flatten the chain.
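To make the audit concrete, here is a minimal sketch that counts snapshot links per disk from a plain datastore file listing. It assumes the usual VMware naming pattern, where snapshot files carry a six-digit suffix such as `-000001.vmdk`, `-000001-delta.vmdk`, or `-000001-sesparse.vmdk`. The function names and the sample listing are hypothetical, and this is not how the Import Wizard or the Advisor detects chains.

```python
import re
from collections import defaultdict

# Assumed naming pattern for snapshot files:
#   <disk>-000001.vmdk, <disk>-000001-delta.vmdk, <disk>-000001-sesparse.vmdk
_SNAPSHOT_RE = re.compile(
    r"^(?P<base>.+)-(?P<index>\d{6})(?:-delta|-sesparse)?\.vmdk$"
)


def snapshot_chain_depth(filenames):
    """Return {base_disk_name: number_of_distinct_snapshot_links}."""
    links = defaultdict(set)
    for name in filenames:
        match = _SNAPSHOT_RE.match(name)
        if match:
            links[match.group("base")].add(match.group("index"))
    return {base: len(indexes) for base, indexes in links.items()}


def disks_needing_consolidation(filenames):
    """List base disks that still have snapshot links, deepest chain first."""
    depths = snapshot_chain_depth(filenames)
    return sorted(depths, key=lambda base: (-depths[base], base))


# Hypothetical listing of one datastore folder.
listing = [
    "sql01.vmdk", "sql01-flat.vmdk",
    "sql01-000001.vmdk", "sql01-000001-delta.vmdk",
    "sql01-000002.vmdk", "sql01-000002-sesparse.vmdk",
    "web01.vmdk", "web01-flat.vmdk",
]
print(snapshot_chain_depth(listing))
print(disks_needing_consolidation(listing))
```

Any disk that shows up in the second list still needs a Consolidate/Delete All pass before the migration window.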

The Hardware Passthrough Trap

- Identify any VMs using USB passthrough or PCI passthrough (DirectPath I/O, SR-IOV). These device mappings will not migrate and must be rebuilt by hand on the Proxmox host.

- Real World Example: We had a client with a legacy license server keyed to a physical USB dongle passed through to a vSphere VM.

- Action Item: Use the Advisor report to identify these edge cases early. Document the physical USB Bus ID and Vendor ID on the host for manual reconstruction in the Proxmox hardware tab.
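Once the IDs are documented, the reconstruction can also be scripted instead of clicked. The sketch below builds a `qm set` command that maps a host USB device into a VM by vendor and product ID. The VM ID and the hex IDs are placeholders, and the helper name is hypothetical. Treat it as one possible way to record the mapping, not the only one.

```python
import re

_HEX4 = re.compile(r"^[0-9a-fA-F]{4}$")


def usb_passthrough_command(vmid, vendor_id, product_id, slot=0):
    """Build a `qm set` command mapping a host USB device by vendor:product ID."""
    for label, value in (("vendor_id", vendor_id), ("product_id", product_id)):
        if not _HEX4.match(value):
            raise ValueError(f"{label} must be 4 hex digits, got {value!r}")
    if slot < 0:
        raise ValueError("slot must be zero or positive")
    # Lowercase the IDs so the recorded command matches typical lsusb output.
    return (
        f"qm set {vmid} -usb{slot} "
        f"host={vendor_id.lower()}:{product_id.lower()}"
    )


# Placeholder values: VM 105 and a made-up dongle ID.
print(usb_passthrough_command(105, "1234", "ABCD"))
```

Keeping the generated command in the migration runbook means the dongle mapping survives even if the person who documented it is not on the cutover call.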

Related Guide: If you are unsure about the TCO implications of staying vs. going, read our CFO’s Guide to Broadcom Renewals before you start moving bits.

Phase 2: Network Foundation (Prevent Cluster Crash)

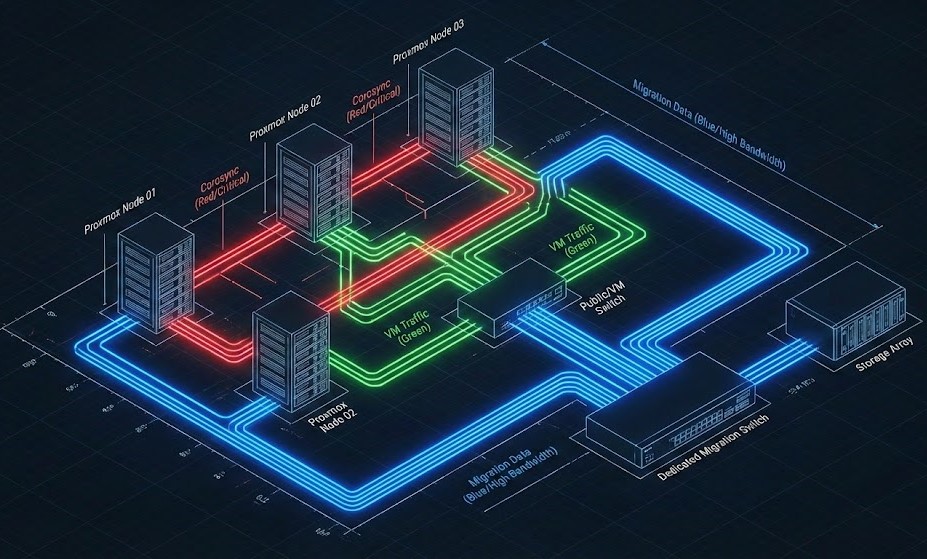

Proxmox relies on Corosync for cluster communication, which demands low, stable latency (<2ms). If you saturate the same links moving 50TB of data, Corosync can lose quorum and HA-enabled nodes will “fence” (reboot) themselves to protect data integrity.

The Golden Rule: Physically or logically separate your traffic.

Figure 1: The “Safe Passage” Architecture. Note the physical separation of the Migration/Storage link from the Corosync heartbeat.

The Configuration

| VLAN Purpose | Link / Speed | MTU | Notes |
|---|---|---|---|
| Mgmt / Corosync | 1GbE | 1500 | Heartbeat Only (Critical) |
| Migration | 10/25GbE | 9000 | Bulk Data Copy |
| VM Traffic | LACP Bond | 1500/9000 | Production Payload |
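It helps to put numbers on the migration link before you book the window. The arithmetic below is a rough estimate only. The 70% efficiency factor is an assumption to account for protocol overhead and storage stalls, and terabytes are treated as decimal (10^12 bytes).

```python
def transfer_hours(data_tb, link_gbps, efficiency=0.7):
    """Hours needed to move data_tb terabytes over a link of link_gbps.

    efficiency is the assumed fraction of line rate actually achieved.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = data_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


# The 50TB estate from the Corosync warning, on each link class in the table.
for gbps in (1, 10, 25):
    print(f"{gbps:>2} GbE: {transfer_hours(50, gbps):6.1f} h")
```

Even at full line rate, 50TB over 10GbE is roughly 11 hours of sustained copying, which is exactly the kind of load you do not want sharing a wire with the heartbeat.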

Pro Tip: Deploy a “Stretch L2” during migration—trunk your VMware port groups and Proxmox Linux Bridges to the same physical switch ports. This allows VMs to keep their IPs while the database backend stays on vSphere during the transition.
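On the Proxmox side, the Stretch L2 trick usually comes down to a VLAN-aware Linux bridge on the trunked uplink. The sketch below renders such a stanza for `/etc/network/interfaces`. The bridge name `vmbr1`, the uplink `bond0`, and the helper itself are illustrative assumptions, so check the output against your own interface names before applying anything.

```python
def vlan_aware_bridge(name, port, mtu=1500, vids="2-4094"):
    """Render an /etc/network/interfaces stanza for a VLAN-aware Linux bridge."""
    lines = [
        f"auto {name}",
        f"iface {name} inet manual",
        f"    bridge-ports {port}",
        "    bridge-stp off",
        "    bridge-fd 0",
        "    bridge-vlan-aware yes",
        f"    bridge-vids {vids}",
    ]
    if mtu != 1500:
        # Only emit an MTU line when it differs from the default.
        lines.append(f"    mtu {mtu}")
    return "\n".join(lines) + "\n"


# Hypothetical names: vmbr1 trunked over bond0 with jumbo frames.
print(vlan_aware_bridge("vmbr1", "bond0", mtu=9000))
```

Each migrated VM NIC then gets the same VLAN tag its VMware port group used, which is what lets the guest keep its IP.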

Phase 3: Windows Migration (The “Blue Screen” Evasion)

Windows expects an LSI Logic controller. When it wakes up on Proxmox, it sees a VirtIO SCSI controller. Result: INACCESSIBLE_BOOT_DEVICE.

The Pre-Flight Injection (Step-by-Step)

Do not rely on post-migration repair. Fix it while the VM is still alive in VMware.

- Add Dummy Hardware: In vSphere, add a new 1GB Hard Disk to the VM.

- Force Controller: Expand the disk settings and select VirtIO SCSI as the controller type. vSphere does not normally offer that controller type, so if the option isn’t visible, mount the `virtio-win.iso` and install the driver manually.

- Install Drivers: Inside Windows, run the `virtio-win` installer. Ensure `viostor.sys` and `NetKVM` are installed, plus `vioscsi.sys` if the disk will sit on a VirtIO SCSI controller in Proxmox.

- Verify: Check Device Manager for “Red Hat VirtIO SCSI Controller”.

- Migrate: Now, when you switch the boot disk to VirtIO on Proxmox, Windows will boot cleanly.
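The verification step can be scripted across a fleet by checking the Windows driver store rather than eyeballing Device Manager. This sketch parses saved text output from `pnputil /enum-drivers` and reports which VirtIO driver packages are missing. It assumes English-locale output with `Original Name:` lines. The required list (including `vioscsi.inf`, which the steps above do not name) is our assumption, and the sample text is made up.

```python
import re

# Assumed driver packages to look for; adjust to your controller choice.
REQUIRED_INFS = ("viostor.inf", "vioscsi.inf", "netkvm.inf")

_ORIGINAL_NAME_RE = re.compile(
    r"^[ \t]*Original Name:[ \t]*(\S+)", re.MULTILINE | re.IGNORECASE
)


def missing_virtio_drivers(pnputil_output, required=REQUIRED_INFS):
    """Return the required .inf names absent from `pnputil /enum-drivers` text."""
    found = {name.lower() for name in _ORIGINAL_NAME_RE.findall(pnputil_output)}
    return [inf for inf in required if inf not in found]


# Made-up sample of what the saved output might look like.
sample_output = """\
Published Name:     oem3.inf
Original Name:      viostor.inf
Provider Name:      Red Hat, Inc.

Published Name:     oem4.inf
Original Name:      netkvm.inf
Provider Name:      Red Hat, Inc.
"""
print(missing_virtio_drivers(sample_output))
```

An empty list means the packages are staged. Anything else is a VM you do not cut over yet.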

Tool Reference: You can download the latest validated drivers from the Fedora Project VirtIO archive.

War Story: We once migrated a critical Payroll Server (Server 2019) on a Friday night. We skipped the injection. I spent 4 hours in the Proxmox Recovery Console fighting a registry hive because the mouse driver also failed. Inject before you eject.

Phase 4: Execution (Moving the Bits)

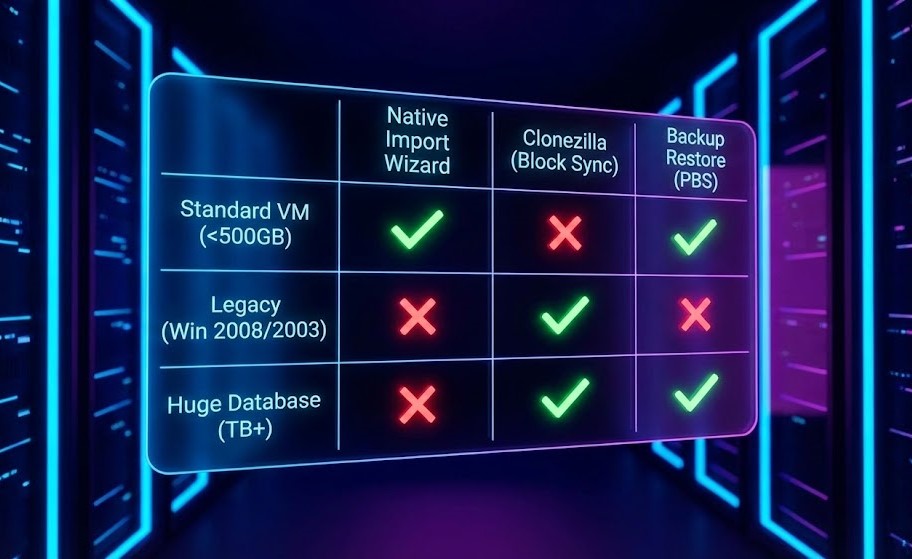

Option A: Native Import (Web/App Servers <500GB)

Proxmox VE 9.x allows you to mount vSphere storage directly.

- Path: Datacenter → Storage → Add → ESXi → Browse vCenter VMs → Import.

- Reality: This is a streaming `dd` copy. If the network hiccups, you start over. Great for small VMs, risky for large ones.

Option B: Clonezilla Heavyweights (4TB SQL)

For the big iron, use Clonezilla.

- Boot Source (VMware) into Clonezilla ISO.

- Boot Target (Proxmox) into Clonezilla Server (Receive mode).

- Why: It understands filesystems. If you have a 4TB drive that is only 200GB full, Clonezilla only moves the 200GB. The Native Import often moves the whole 4TB block.

Figure 2: Select the tool based on downtime tolerance and data size, not convenience.
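The size half of that decision is easy to encode. The sketch below uses the 500GB ceiling from Option A and the 4TB/200GB example from Option B. It ignores downtime tolerance entirely, the function names are hypothetical, and the "native import moves everything" figure is the worst case described above rather than a measured result.

```python
NATIVE_IMPORT_LIMIT_GB = 500  # Option A ceiling from this playbook


def choose_tool(provisioned_gb):
    """Pick a migration path from the provisioned disk size alone."""
    if provisioned_gb < NATIVE_IMPORT_LIMIT_GB:
        return "native-import"
    return "clonezilla"


def data_moved_gb(provisioned_gb, used_gb, tool):
    """Estimate data on the wire: used blocks for Clonezilla, full disk otherwise."""
    if used_gb > provisioned_gb:
        raise ValueError("used_gb cannot exceed provisioned_gb")
    return used_gb if tool == "clonezilla" else provisioned_gb


# Hypothetical inventory: (name, provisioned GB, used GB).
for name, provisioned, used in [("web01", 120, 60), ("sql01", 4000, 200)]:
    tool = choose_tool(provisioned)
    moved = data_moved_gb(provisioned, used, tool)
    print(f"{name}: {tool}, ~{moved} GB on the wire")
```

Run it against an exported inventory and you have a first-pass split of the estate before anyone argues about convenience.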

Phase 5: Day 2 Operations (The Cache Pivot)

You booted. Don’t celebrate yet. Proxmox caching logic differs from vSphere.

- Cache Mode: Set to “Write back” (if the storage controller’s write cache is battery-backed) or “None” (for ZFS/Ceph). Avoid “Write through” on slow storage; your SQL performance will tank.

- Backup Reality: Deploy Proxmox Backup Server (PBS) immediately. This is your ransomware insurance policy.

  - First Backup: 4 hours.

  - Second Backup: 3 minutes (thanks to block-level dedupe).

- Storage Architecture: If you are still debating the underlying storage layer, stop. You need to understand the I/O path differences immediately.

Deep Dive: Read our Proxmox Storage Master Class: ZFS vs Ceph to verify your layout before you fill the drives.
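The cache rule from the first bullet can be written down as a small decision helper so it gets applied consistently. In this sketch, `zfspool` and `rbd` are the Proxmox storage type names for ZFS and Ceph RBD. Falling back to `none` when nothing is battery-backed is our assumption (it matches the Proxmox default), and the VM ID and volume name are placeholders.

```python
VALID_CACHE_MODES = {"none", "writethrough", "writeback", "directsync", "unsafe"}


def pick_cache_mode(storage_type, battery_backed=False):
    """Apply the rule above: none for ZFS/Ceph, writeback only if battery-backed."""
    if storage_type in {"zfspool", "rbd"}:
        return "none"
    # Assumption: without a battery-backed cache, stay on the default (none).
    return "writeback" if battery_backed else "none"


def cache_command(vmid, disk, volume, mode):
    """Build a `qm set` command that sets the cache mode on one VM disk."""
    if mode not in VALID_CACHE_MODES:
        raise ValueError(f"unknown cache mode: {mode!r}")
    # Note: this option string replaces the disk's existing options,
    # so re-specify anything else the disk already carries.
    return f"qm set {vmid} --{disk} {volume},cache={mode}"


mode = pick_cache_mode("lvmthin", battery_backed=True)
print(cache_command(100, "scsi0", "local-lvm:vm-100-disk-0", mode))
```

Review the generated commands before running them, since a cache change on a busy SQL disk deserves a maintenance window of its own.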

Production Timeline

| Week | Focus | Deliverable |
|---|---|---|
| 1 | Discovery + Network | VLANs up, snapshot chains cleared |
| 2 | Pilot Migration | 10% VMs validated |
| 3 | Production Cutover | 90% VMs live on Proxmox |
| 4 | HA Validation | Full DR testing complete |

Once you survive Week 4, review the Proxmox HA Tuning Guide to fine-tune your watchdog settings.

Conclusion: The “Open Box” Era

Migration is not just about replicating vCenter; it’s about architecting for the future. Broadcom forced the industry’s hand, but the destination—an open, flexible, API-driven infrastructure—is arguably where we should have been all along.

You have moved from a “Black Box” (where you pay Broadcom to solve problems) to an “Open Box” (where you engineer the solution). The learning curve is steep, but the control—and the budget—is finally yours again.

Next Steps: Once your compute is stable, your next bottleneck will be storage IOPS. Read our guide on ZFS Tuning for High-Performance SQL Workloads to prepare for Day 3.

