Designing AI-Centric Cloud Architectures in 2026: GPUs, Neoclouds, and the Network Bottleneck

Key Takeaways

- Physics, Not Just Ops: At H100 scale, distributed training is a physics problem. A 5ms latency spike doesn’t just slow you down; it stalls gradient synchronization for the whole cluster, leaving expensive silicon idle.

- The Neocloud Arbitrage: Specialized clouds (Lambda, CoreWeave) are often 40-50% cheaper on compute, but the “egress tax” can wipe out those savings if your data gravity strategy is flawed.

- Failure Modes: Straggler GPUs and stalled NCCL collectives are the new “downtime.” If you can’t see inside the packet flow, you can’t debug the training run.

- Repatriation is Real: For steady-state inference, renting GPUs is financial malpractice. We are seeing a hard pivot back to colo for predictable workloads.

I sat in a boardroom last week with a CTO who was furious. His team had finally secured a reservation for 128 H100s on AWS after a six-month wait. They had the data, they had the model, and they had the budget. But three weeks into fine-tuning, their training efficiency was hovering at 35%.

“We bought the Ferraris,” he told me. “Why are we driving in a school zone?”

The answer wasn’t in the GPU specs. It was in the architecture. They had spread their cluster across three Availability Zones (AZs) to “maximize availability,” inadvertently introducing 2ms of latency between nodes. In web apps, 2ms is a rounding error. In distributed training using NCCL (NVIDIA Collective Communication Library), 2ms is a disaster. It meant their expensive GPUs were spending more time waiting for gradients to sync than actually calculating tensors.

This is the reality of AI architecture in 2026. The challenge isn’t acquiring compute anymore; it’s feeding it without going bankrupt.

The Physics of Failure: Why Latency Kills ROI

Let’s get technical about why the network is your new bottleneck.

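When you train a model across multiple nodes with data parallelism (often combined with pipeline or tensor parallelism), every GPU needs to combine its gradients with every other GPU’s at the end of a step (AllReduce). This is a synchronous operation: no GPU can start the next step until the collective completes.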
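To see why per-hop latency matters so much, here is a rough sketch using the textbook alpha-beta cost model for a ring all-reduce. It is a back-of-the-envelope estimate, not a description of what NCCL actually does internally (NCCL picks between several algorithms and overlaps communication with compute). The 16 nodes, 1 GB gradient payload, and 50 GB/s link speed are assumed figures for illustration; only the 2ms cross-AZ latency comes from the story above.

```python
def ring_allreduce_seconds(num_ranks: int, payload_bytes: float,
                           latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Estimate one ring all-reduce with the alpha-beta cost model."""
    if num_ranks < 2:
        return 0.0
    steps = 2 * (num_ranks - 1)         # reduce-scatter phase + all-gather phase
    chunk = payload_bytes / num_ranks   # bytes each rank sends per step
    # Every step pays the link latency once, plus the time to push one chunk.
    return steps * (latency_s + chunk / bandwidth_bytes_per_s)


# Assumed cluster: 16 nodes, 1 GB of gradients, 50 GB/s per link.
same_fabric = ring_allreduce_seconds(16, 1e9, 10e-6, 50e9)  # ~10 microsecond hops
cross_az = ring_allreduce_seconds(16, 1e9, 2e-3, 50e9)      # 2 ms hops

print(f"same fabric: {same_fabric * 1000:.1f} ms per all-reduce")
print(f"cross-AZ:    {cross_az * 1000:.1f} ms per all-reduce")
```

Under these assumptions the bandwidth term is identical in both cases; the entire difference comes from paying the latency 30 times per collective.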

If GPU #1 finishes its math in 100ms, but GPU #64—stuck behind a noisy neighbor on a shared switch—takes 150ms, the entire cluster waits for GPU #64. This is the Straggler Problem.

- The Operational Reality: Your metering doesn’t stop. You are paying $4.00/hour for every GPU to sit idle, burning power, waiting for a TCP ACK that got dropped in a congested spine switch.

- The Architect’s Fix: You need deterministic network performance. This means RDMA (Remote Direct Memory Access) over Converged Ethernet (RoCEv2) or InfiniBand. If your cloud provider is routing your GPU traffic over standard TCP/IP without an optimized fabric (like AWS EFA), you are lighting money on fire.
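The straggler arithmetic is simple enough to sketch. The snippet below assumes a 64-GPU job where 63 GPUs finish in 100ms and one takes 150ms, priced at the $4.00/hour figure above. It ignores communication time and treats the whole step as compute, so read it as a lower bound on the waste rather than a precise bill.

```python
def straggler_waste(step_times_ms: list[float], price_per_gpu_hour: float):
    """Return (idle fraction, dollars wasted per hour) for a synchronous step."""
    step = max(step_times_ms)                        # everyone waits for the slowest GPU
    idle_ms = sum(step - t for t in step_times_ms)   # total GPU-milliseconds spent waiting
    idle_fraction = idle_ms / (step * len(step_times_ms))
    wasted_per_hour = idle_fraction * len(step_times_ms) * price_per_gpu_hour
    return idle_fraction, wasted_per_hour


times = [100.0] * 63 + [150.0]   # one straggler out of 64 GPUs
idle_fraction, wasted_per_hour = straggler_waste(times, 4.00)

print(f"idle: {idle_fraction:.1%}, wasted: ${wasted_per_hour:.2f}/hour")
```

One slow GPU out of 64 leaves roughly a third of the paid GPU time idle in this scenario.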

Validation Step:

Don’t guess at your latency. Before you sign that capacity commitment, use the Metro Latency Monitor. While designed for storage replication, we use it constantly to benchmark RTT stability between disparate cloud regions. If you can’t guarantee sub-millisecond tail latency between training nodes, you need to redesign your topology.
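Whatever tool collects the samples, the check itself should look at the tail, not the average. The sketch below is a generic nearest-rank percentile check and makes no claim about how the Metro Latency Monitor works; the function names and sample values are made up for illustration.

```python
import math


def percentile(samples_ms: list[float], pct: float) -> float:
    """Nearest-rank percentile of a list of RTT samples."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_tail_budget(samples_ms: list[float], budget_ms: float = 1.0,
                      pct: float = 99.0) -> bool:
    """True when the chosen tail percentile is under the latency budget."""
    return percentile(samples_ms, pct) < budget_ms


# Hypothetical RTT samples: mostly 0.2 ms, with 3% of probes spiking to 2.5 ms.
samples = [0.2] * 97 + [2.5] * 3
mean_ms = sum(samples) / len(samples)
p99_ms = percentile(samples, 99)

print(f"mean={mean_ms:.3f} ms, p99={p99_ms} ms, ok={meets_tail_budget(samples)}")
```

The mean here looks healthy at well under a millisecond, while the p99 fails the budget. That gap is exactly what a synchronous collective feels.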

The Neocloud Decision: Arbitrage vs. Complexity

This year, I’ve moved three clients off hyperscalers (AWS/GCP) and onto Neoclouds (CoreWeave, Lambda). The economics are compelling—often 50% cheaper per FLOP. But this introduces a massive “Fragmentation Tax.”

The War Story (Failure):

A healthcare client moved their training to a Neocloud to save $200k/month. It worked great until they needed to move the trained checkpoints back to their compliant S3 bucket for deployment. They hadn’t modeled the egress fees. The bill for moving petabytes of training data and checkpoints back and forth wiped out 60% of their compute savings in month one.

The Economic Trap:

Neoclouds are cheap on compute, but they lack the data gravity of a hyperscaler, where ingestion is free and your storage already sits next to the compute. You have to treat data movement as a first-class citizen in your TCO model.

| Feature | Hyperscaler (The “Safe” Bet) | Neocloud (The “Raw” Bet) | The Architect’s Trade-off |
| --- | --- | --- | --- |
| Interconnect | Virtualized (EFA/Titan) | Bare Metal InfiniBand | Hyperscalers hide complexity; Neoclouds give you raw speed but require you to manage the fabric. |
| Storage Locality | High (S3/EBS next to compute) | Low (Often disjointed) | Critical Risk: Training on remote storage kills throughput. You need local NVMe caching strategies. |
| Egress Cost | Punitive ($0.09/GB+) | Often Waived / Low | Hyperscalers trap your data. Neoclouds let it flow, but getting it there is the cost. |

The Tool You Need:

Do not commit to a multi-cloud split without running the numbers. Use our Cloud Egress Calculator. Plug in your dataset size and your retraining frequency. If the egress cost exceeds 15% of the compute savings, stay on the hyperscaler.
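The 15% rule above is easy to express in code. This is a minimal sketch of the rule of thumb, not the Cloud Egress Calculator itself. The $0.09/GB rate comes from the table and the $200k/month savings from the war story; the 500 TB dataset and one full transfer per month are assumptions.

```python
def egress_decision(dataset_gb: float, transfers_per_month: float,
                    egress_usd_per_gb: float, monthly_compute_savings_usd: float,
                    threshold: float = 0.15):
    """Apply the rule: if egress exceeds `threshold` of compute savings, stay put."""
    egress_cost = dataset_gb * transfers_per_month * egress_usd_per_gb
    if monthly_compute_savings_usd <= 0:
        return egress_cost, float("inf"), "stay on hyperscaler"
    ratio = egress_cost / monthly_compute_savings_usd
    verdict = "stay on hyperscaler" if ratio > threshold else "split is viable"
    return egress_cost, ratio, verdict


# Assumed: 500 TB moved out once per month at $0.09/GB, against $200k/month savings.
cost, ratio, verdict = egress_decision(500_000, 1, 0.09, 200_000)
print(f"egress ${cost:,.0f}/month = {ratio:.1%} of savings -> {verdict}")
```

With these inputs egress eats about 22.5% of the savings, so the rule says stay. Shrink the transferred volume by 10x and the same client clears the bar comfortably.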

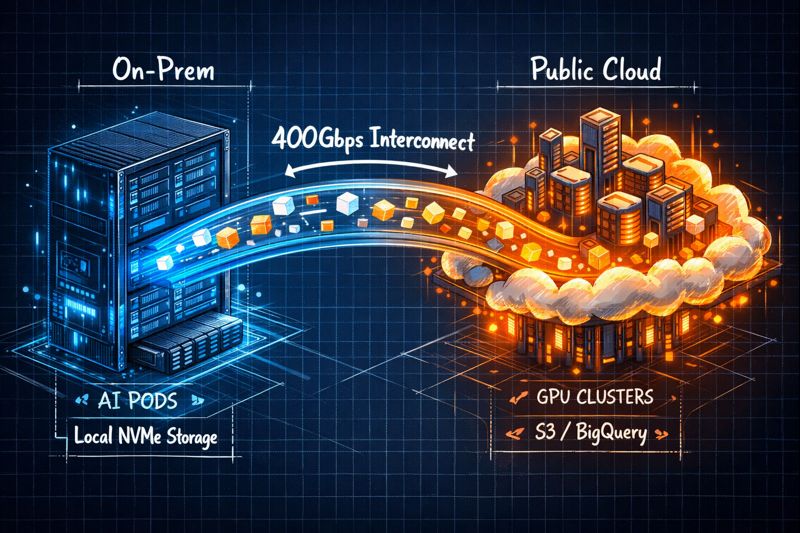

The Win: The “Just-in-Time” Hybrid Architecture

To balance the ledger, let’s look at a client who got this right. I worked with a LegalTech firm fine-tuning LLMs on sensitive contract data. They were priced out of Azure GPU quotas but were terrified of moving regulated data to a “startup” cloud.

The Architecture:

We built a “Hub-and-Spoke” model using Just-in-Time (JIT) Hydration.

- The Hub (Azure): Hosted the “Golden Copy” of the dataset in a secure, compliant Data Lake.

- The Spoke (Neocloud): We spun up a bare-metal cluster only for the duration of the training run.

- The Trick: Instead of mirroring the full 2PB lake, we used a high-performance parallel file system client to hydrate only the specific shards needed for the current training epoch directly into the local NVMe of the GPU nodes.
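The hydration pattern can be sketched in a few lines. To be clear about the assumptions: the real system used a parallel file system client, while this toy version uses plain file copies between two local directories to stand in for the hub and the NVMe cache. The function names and shard names are hypothetical.

```python
import shutil
import tempfile
from pathlib import Path


def hydrate_epoch(hub_dir: Path, cache_dir: Path, shard_names: list[str]) -> list[Path]:
    """Copy only the shards needed for this epoch into the local cache."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    local_paths = []
    for name in shard_names:
        target = cache_dir / name
        if not target.exists():              # skip shards already hydrated
            shutil.copy2(hub_dir / name, target)
        local_paths.append(target)
    return local_paths


def wipe_cache(cache_dir: Path) -> None:
    """Remove every cached shard once the training run is finished."""
    shutil.rmtree(cache_dir, ignore_errors=True)


# Demo with temporary directories standing in for the hub and local NVMe.
with tempfile.TemporaryDirectory() as tmp:
    hub = Path(tmp) / "hub"
    hub.mkdir()
    for i in range(4):
        (hub / f"shard-{i}.bin").write_bytes(b"x")

    cache = Path(tmp) / "nvme"
    hydrate_epoch(hub, cache, ["shard-1.bin", "shard-3.bin"])
    cached_names = sorted(p.name for p in cache.iterdir())

    wipe_cache(cache)
    cache_exists_after_wipe = cache.exists()

print(cached_names, cache_exists_after_wipe)
```

Only two of the four shards ever leave the hub, and nothing survives the wipe. Those two properties are what drive the egress and security results.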

The Result:

- Security: Data never “rested” permanently on the Neocloud storage; it lived in ephemeral NVMe cache and was wiped post-training.

- Performance: By serving training reads from local NVMe, we kept the GPUs fed at 98% utilization and kept remote storage off the critical path of every training step.

- Cost: They saved 45% on compute compared to Azure, and because they only transferred active shards, the egress fees were manageable.

This worked because they didn’t treat the cloud as a single place. They treated it as a supply chain: Azure for storage, Neocloud for manufacturing.

What Failure Looks Like in Production

When these architectures fail, they don’t crash with a 404. They “brown out.”

- The “Stalled” Collective: You check your logs and see NCCL timeouts. The training hasn’t stopped, but it’s progressing at 10% speed. This is usually East-West packet loss.

- The Compliance Breach: In a rush to leverage cheap GPUs in a new region, a junior DevOps engineer spins up a cluster in a non-compliant zone.

- Remediation: We use the Sovereign Drift Auditor to scan Terraform plans against our data sovereignty whitelist before apply. It catches that rogue GPU instance in a region that violates GDPR before it boots.

- The “Day 2” Hangover: You picked a bleeding-edge AI service from Azure, only to realize it has zero Terraform support. Now your team is clicking through the portal manually, breaking your CI/CD pipeline.

- Prevention: Check the Terraform Lag Tracker. If the feature lag is >3 months, don’t build your core production workflow on it yet.
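For the pre-apply sovereignty scan mentioned in the Remediation bullet above, the core idea fits in a short function. This sketch is not the Sovereign Drift Auditor. It walks the `resource_changes` list that `terraform show -json` emits for a saved plan and flags new resources whose `location` or `region` attribute falls outside an allowlist. The allowlist values and resource addresses are made up, and resources that inherit their region from the provider block would need extra handling.

```python
ALLOWED_LOCATIONS = {"westeurope", "northeurope"}  # hypothetical allowlist


def find_violations(plan: dict, allowed: set[str]) -> list[str]:
    """Return 'address: location' for each planned create outside the allowlist."""
    violations = []
    for rc in plan.get("resource_changes", []):
        change = rc.get("change", {})
        if "create" not in change.get("actions", []):
            continue                          # only inspect resources being created
        after = change.get("after") or {}     # "after" is null for deletes
        location = after.get("location") or after.get("region")
        if location and location.lower() not in allowed:
            violations.append(f"{rc.get('address')}: {location}")
    return violations


# Trimmed-down example of a plan document with one compliant and one rogue VM.
plan = {
    "resource_changes": [
        {"address": "azurerm_linux_virtual_machine.gpu_a",
         "change": {"actions": ["create"], "after": {"location": "westeurope"}}},
        {"address": "azurerm_linux_virtual_machine.gpu_b",
         "change": {"actions": ["create"], "after": {"location": "eastus"}}},
    ]
}
violations = find_violations(plan, ALLOWED_LOCATIONS)
print(violations)
```

In a pipeline you would load the dict from the JSON file produced by `terraform show -json` and fail the job whenever the returned list is non-empty.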

Repatriation: The “Inference at the Edge” Pattern

Here is the controversial take: Stop doing inference in the public cloud.

For bursty training, the cloud is perfect. But for steady-state inference (e.g., analyzing video feeds 24/7), the OpEx model is predatory. I recently helped a logistics company build “AI Pods” (standard racks with 4x L40S GPUs) deployed in their distribution centers.

The Math:

- Public Cloud Inference: $18,000/month per node (On-Demand).

- On-Prem Pod (CapEx amortized): $4,200/month per node over 3 years.

That is roughly a 4.3x difference. Plus, the latency dropped from 120ms (cloud round trip) to 5ms (local LAN).
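Spelled out, the comparison looks like this. The two monthly figures and the 3-year term come from the numbers above. Whether the amortized figure also covers power, space, and staff is not stated, so treat the savings as an upper bound.

```python
def repatriation_summary(cloud_monthly_usd: float, onprem_monthly_usd: float,
                         months: int):
    """Return (cost ratio, total savings per node over the amortization term)."""
    ratio = cloud_monthly_usd / onprem_monthly_usd
    savings = (cloud_monthly_usd - onprem_monthly_usd) * months
    return ratio, savings


cost_ratio, savings_per_node = repatriation_summary(18_000, 4_200, 36)
print(f"{cost_ratio:.2f}x cheaper, ${savings_per_node:,.0f} saved per node over 3 years")
```

Multiply the per-node figure by the number of pods and the repatriation case usually makes itself.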

However, on-prem requires a stack. You can’t just install Ubuntu and hope for the best. You need a virtualization or orchestration layer. If you are looking at VMware Cloud Foundation (VCF) for these AI nodes, be careful. Broadcom’s core-based pricing on high-density GPU servers is astronomical.

Architectural Check:

Use the VMware Core Calculator to verify the licensing costs. We often find that for dedicated AI clusters, a KVM-based alternative or bare-metal Kubernetes (Harvester/OpenShift) offers a far better ROI.

Furthermore, if you are deciding whether to refactor your inference code for serverless (AWS Lambda/Cloud Run) or keep it on persistent VMs, use the Refactoring Cliff Calculator. There is a specific requests-per-second volume above which serverless becomes more expensive than a dedicated cluster. Find that cliff before you code.
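The cliff is just a break-even point between a per-request price and a flat monthly cost. The sketch below is not the Refactoring Cliff Calculator, and the $0.0002 per-request price is a made-up placeholder; the $4,200/month dedicated cost is borrowed from the pod math above. It also ignores cold starts, free tiers, and the capacity ceiling of the dedicated cluster.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # 2,592,000 seconds in a 30-day month


def serverless_monthly_usd(requests_per_second: float, usd_per_request: float) -> float:
    """Monthly serverless bill at a constant request rate."""
    return requests_per_second * SECONDS_PER_MONTH * usd_per_request


def breakeven_rps(dedicated_monthly_usd: float, usd_per_request: float) -> float:
    """Request rate above which serverless costs more than the dedicated cluster."""
    return dedicated_monthly_usd / (usd_per_request * SECONDS_PER_MONTH)


cliff = breakeven_rps(4_200, 0.0002)   # hypothetical per-request price
print(f"serverless is cheaper below ~{cliff:.1f} requests/second")
```

If your sustained load sits well above the cliff, the refactor buys you a bigger bill.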

Conclusion: The “Go/No-Go” Decision Gate

We need to move from “Can we build it?” to “Should we build it this way?” Here is the decision framework I force my teams to use in 2026:

Gate 1: The Data Gravity Test

- Is the training dataset >50TB?

- Yes: Compute must come to the data. Do not move the data to a Neocloud unless the compute savings are >40%.

- No: You are free to chase the cheapest GPUs.

Gate 2: The Latency Mandate

- Does the workload require multi-node training (e.g., >8 GPUs)?

- Yes: Mandatory RDMA support (InfiniBand or RoCEv2). Plain TCP/IP over standard Ethernet is a No-Go.

- No: Standard instances are acceptable.

Gate 3: The Sovereignty Audit

- Does the data contain PII/Regulated info?

- Yes: Mandatory Sovereign Drift check. Disqualify any provider without specific region guarantees.

Gate 4: The Inference Tipping Point

- Is the inference load consistent 24/7?

- Yes: Mandatory Repatriation/Edge analysis. Cloud inference is likely a budget leak.
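The four gates translate naturally into a checklist function you can run in a design review. This is one possible encoding of the thresholds above; the `Workload` fields and the wording of the findings are my own naming, not part of any tool.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    dataset_tb: float
    neocloud_savings_pct: float
    gpu_count: int
    regulated_data: bool
    steady_inference: bool


def evaluate_gates(w: Workload) -> list[str]:
    """Walk the four gates and collect the resulting requirements."""
    findings = []
    # Gate 1: data gravity
    if w.dataset_tb > 50 and w.neocloud_savings_pct <= 40:
        findings.append("Gate 1: keep compute next to the data")
    elif w.dataset_tb > 50:
        findings.append("Gate 1: data move allowed, savings exceed 40%")
    else:
        findings.append("Gate 1: free to chase the cheapest GPUs")
    # Gate 2: latency mandate
    if w.gpu_count > 8:
        findings.append("Gate 2: RDMA fabric required")
    else:
        findings.append("Gate 2: standard instances acceptable")
    # Gate 3: sovereignty audit
    if w.regulated_data:
        findings.append("Gate 3: sovereignty check and region guarantees required")
    # Gate 4: inference tipping point
    if w.steady_inference:
        findings.append("Gate 4: run repatriation/edge analysis")
    return findings


# Roughly the LegalTech case: 2 PB lake, 45% savings, regulated data, training only.
legaltech = Workload(2000, 45, 128, True, False)
findings = evaluate_gates(legaltech)
for line in findings:
    print(line)
```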

Designing for AI isn’t about magic; it’s about ruthlessly optimizing for the physical constraints of the hardware. The code is soft, but the silicon—and the network connecting it—is hard. Respect the physics.

Additional Resources:

- State of AI Compute 2026: NVIDIA Data Center Documentation – Verified specs for H100/Blackwell architecture.

- Cloud Pricing Benchmarks: Vantage Cloud Cost Report – Reference for egress and compute pricing trends.

- Distributed Training Patterns: Meta Engineering Blog – Real-world examples of RDMA implementation.
