The Unholy Trinity: Cisco, Pure, and Nutanix Just Broke the HCI Tax (But Read the Fine Print)

This strategic advisory has passed the Rack2Cloud 3-Stage Vetting Process: Market-Analyzed, TCO-Modeled, and Contract-Anchored. No vendor marketing influence. See our Editorial Guidelines.

Key Takeaways

- The “HCI Tax” is Dead: You no longer need to buy a massive compute node just to get more storage. You can finally scale compute (UCS) and storage (Pure) independently while keeping the HCI operating model.

- This is Not “3-Tier” Reinvented: While the hardware looks like 3-tier, the control plane is unified. Prism manages the Pure FlashArray volumes natively, and hosts reach them over NVMe/TCP. No zoning, no LUN masking, no storage silos.

- The “Re-Foundation” Reality: There is no supported in-place conversion path for existing clusters. In practice, that means evacuating workloads and re-running Foundation to rebuild the cluster as compute-only nodes.

- The Licensing Pivot: This architecture uses Nutanix NCI-C (Compute-Only) licensing, positioning it as a direct financial weapon against Broadcom’s bundled pricing and Proxmox’s “do it yourself” storage model.

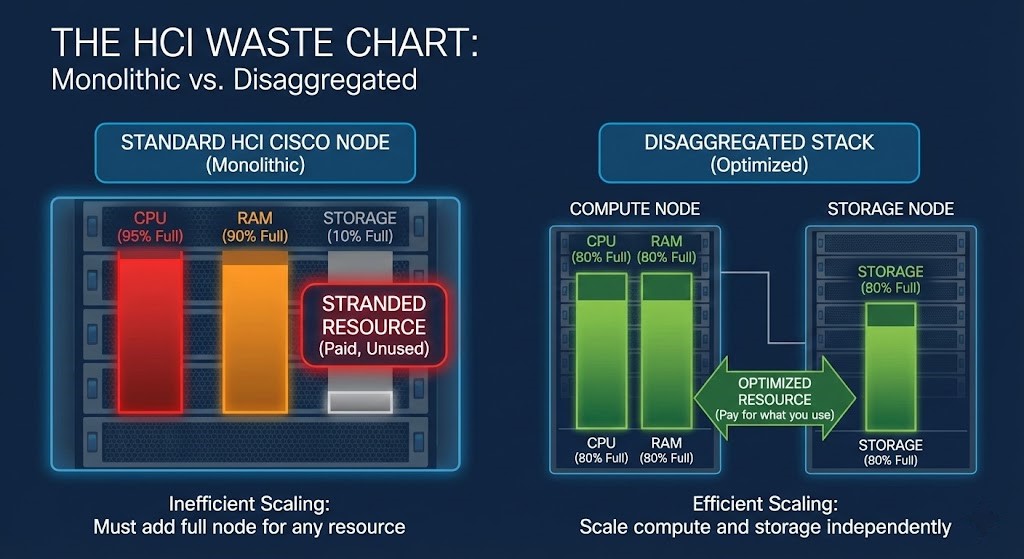

The “HCI Tax” No One Talks About

We spent the last decade falling in love with Hyperconverged Infrastructure (HCI). It promised simplicity, and it delivered. But it came with a quiet economic penalty that vendors glossed over.

The HCI Tax: The rigid coupling of Compute and Storage.

If your SQL cluster hits 90% CPU but has 80TB of empty disk, you still have to buy a new node. If your VDI environment fills its storage but CPU is idle, you still have to buy a new node.

The Math of Waste: Consider a standard HCI node (e.g., Nutanix NX-series or equivalent UCS). It is packed with dual CPUs, massive RAM, and a full load of NVMe drives.

If you only needed the storage capacity, you just lit budget on fire buying CPU and RAM you didn’t need. This isn’t just inefficiency; it’s structural waste.

The Fix: The new “Disaggregated” stack breaks this coupling without breaking the HCI experience:

- Need Storage? Add capacity to the Pure FlashArray (tens of thousands for dedicated storage, not a whole node).

- Need Compute? Buy a Cisco UCS compute blade (instead of six figures for a fully loaded HCI node).

- Management? Still 100% Prism.
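The coupling is easy to quantify in units rather than dollars. The sketch below assumes a hypothetical HCI node with 64 cores and 40 TB of usable capacity, plus a hypothetical 25 TB storage expansion increment on the array side; every figure is a placeholder to replace with your own. In the coupled model, whichever resource runs out first sets the node count, and everything else you buy is stranded.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class HciNode:
    cores: int        # physical cores per node (hypothetical figure)
    usable_tb: float  # usable capacity per node after replication (hypothetical figure)


def hci_nodes(cores_needed: int, tb_needed: float, node: HciNode) -> int:
    """Coupled model: whichever resource runs out first dictates the node count."""
    return max(math.ceil(cores_needed / node.cores),
               math.ceil(tb_needed / node.usable_tb))


def stranded(cores_needed: int, tb_needed: float, node: HciNode) -> tuple[int, float]:
    """Cores and TB you pay for in the coupled model but do not need."""
    n = hci_nodes(cores_needed, tb_needed, node)
    return n * node.cores - cores_needed, n * node.usable_tb - tb_needed


def disaggregated(cores_needed: int, tb_needed: float,
                  cores_per_compute_node: int, storage_increment_tb: float) -> tuple[int, int]:
    """Disaggregated model: compute nodes and storage increments are sized independently."""
    return (math.ceil(cores_needed / cores_per_compute_node),
            math.ceil(tb_needed / storage_increment_tb))


if __name__ == "__main__":
    node = HciNode(cores=64, usable_tb=40.0)
    # Storage-only growth: 100 TB more, zero extra cores.
    print(hci_nodes(0, 100.0, node))          # 3 full nodes
    print(stranded(0, 100.0, node))           # (192, 20.0): idle cores and spare TB
    print(disaggregated(0, 100.0, 64, 25.0))  # (0, 4): no compute, four storage increments
```

With the same placeholder figures, the SQL case from earlier (128 more cores, no more capacity) works out to 2 HCI nodes with 80 TB stranded, while the disaggregated path buys 2 compute nodes and no storage.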

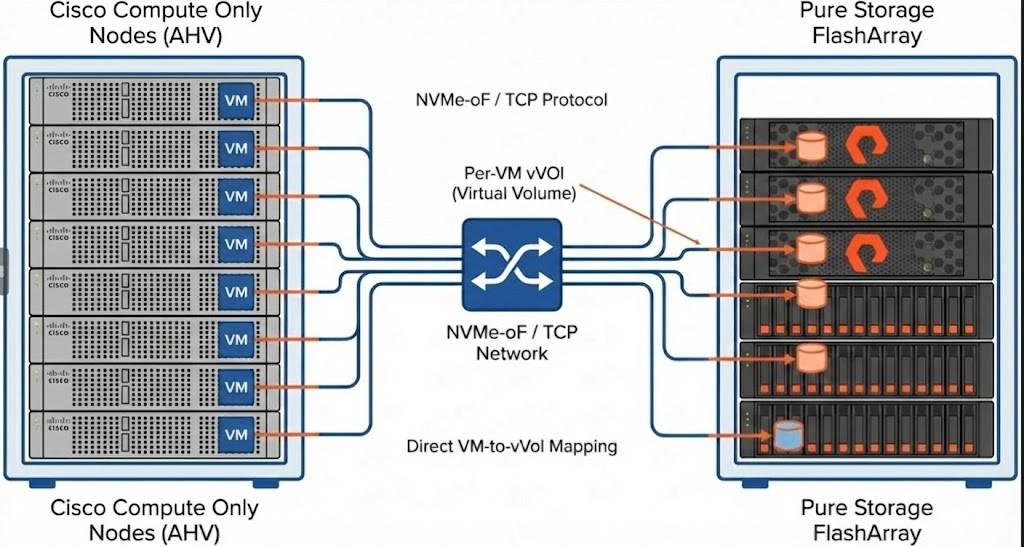

The Kill Shot: Control Plane Convergence

The moment I draw this topology on a whiteboard (Cisco servers connected to a Pure Storage array), every architect in the room rolls their eyes. “Congratulations,” they say. “You just reinvented 2005. I thought we killed SANs to escape zoning hell.”

They are half-right. The hardware topology is 3-tier. But the Control Plane is pure HCI.

1. The “Under the Hood” View (The Wiring)

This architecture abandons LUNs in favor of per-disk volumes (conceptually similar to VMware vVols) served over an NVMe/TCP fabric. As the diagram below shows, the Cisco nodes connect to the Pure array over that fabric, and individual VM disks map to individual volumes.

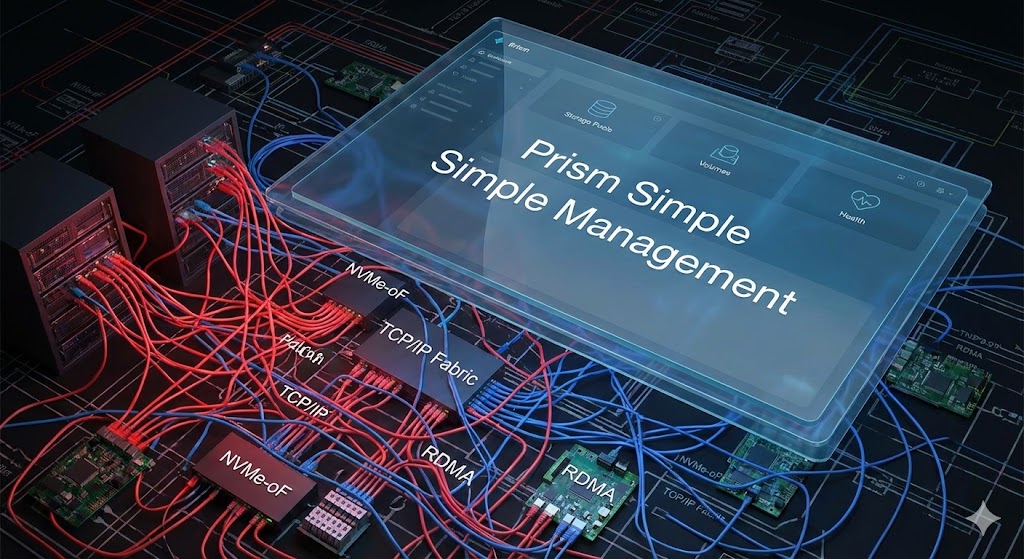

2. The Operator View (The Magic)

While the wiring above looks complex, you never touch it. Nutanix Prism acts as the abstraction layer. When you click “Create VM,” Prism uses APIs to configure the Pure array automatically. You don’t zone switches. You don’t mask LUNs. You just manage VMs.

It feels like HCI to the operator because Prism handles the API calls, but it scales like 3-tier for the CFO because the hardware is separate.
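To illustrate what “Prism uses APIs” means in practice, here is a minimal sketch of the pattern: one create-VM action fans out into per-disk volume creation and host connection on the array. Every class and method here (InMemoryArray, ControlPlane, create_volume, connect_host) is hypothetical and stands in for whatever Prism and the FlashArray actually exchange; it is not either vendor’s interface. The host NQN is a placeholder.

```python
from dataclasses import dataclass, field


@dataclass
class Volume:
    name: str
    size_gib: int
    connected_hosts: set[str] = field(default_factory=set)


class InMemoryArray:
    """Hypothetical stand-in for an array management API (NOT Purity's real interface)."""

    def __init__(self) -> None:
        self.volumes: dict[str, Volume] = {}

    def create_volume(self, name: str, size_gib: int) -> Volume:
        if name in self.volumes:
            raise ValueError(f"volume {name!r} already exists")
        vol = Volume(name, size_gib)
        self.volumes[name] = vol
        return vol

    def connect_host(self, volume_name: str, host_nqn: str) -> None:
        # Models exposing one volume to one host's NVMe/TCP initiator, identified by its NQN.
        self.volumes[volume_name].connected_hosts.add(host_nqn)


class ControlPlane:
    """Hypothetical orchestrator in Prism's role: one operator action fans out into array calls."""

    def __init__(self, array: InMemoryArray) -> None:
        self.array = array

    def create_vm(self, vm_name: str, disk_sizes_gib: list[int], host_nqn: str) -> list[str]:
        created = []
        for i, size in enumerate(disk_sizes_gib):
            # One array volume per virtual disk: no shared LUN, no zoning, no masking step.
            vol = self.array.create_volume(f"{vm_name}-disk{i}", size)
            self.array.connect_host(vol.name, host_nqn)
            created.append(vol.name)
        return created


if __name__ == "__main__":
    cp = ControlPlane(InMemoryArray())
    host = "nqn.2014-08.org.nvmexpress:uuid:00000000-0000-0000-0000-000000000001"  # placeholder NQN
    print(cp.create_vm("sql01", [100, 500], host))  # ['sql01-disk0', 'sql01-disk1']
```

The design point is that zoning and masking disappear from the operator’s workflow because the orchestrator owns the volume-to-host mapping, not because the mapping stops existing.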

The Fine Print: Hardware & Protocol Constraints

Before you rush to buy this, you need to know the physics. This is not a “plug and play” solution for every environment. It is a narrow, validated path.

1. The “Pure //X Only” Handcuff

This integration relies entirely on NVMe over TCP.

- Supported: Pure FlashArray //X (and //XL).

- NOT Supported: FlashArray //C, //E, or older //M models.

- NOT Supported: iSCSI or Fibre Channel fallback.

Have a legacy Pure array you wanted to repurpose? Tough luck. This is a performance-first architecture, and it demands modern metal.

2. The Cisco UCS Lock-In

This is a “Cisco Validated Design” (CVD). It doesn’t run on just “any server.”

- Supported: UCS X-Series and select UCS C-Series (M6/M7) models validated for NVMe/TCP offload and Fabric Interconnect integration.

- NOT Supported: Legacy B-Series blades or older M5 rack servers.

- Why? It requires specific NIC offloads for NVMe/TCP to perform like local storage.
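The two constraint lists above are simple enough to encode as a pre-purchase checklist. This is a sketch built only from the lists in this article; the field names and string values are illustrative assumptions, and the real source of truth remains the Cisco CVD and the Pure compatibility matrix.

```python
from dataclasses import dataclass

# Constraints as listed in this article (January 2026 review). Not an official matrix:
# always confirm against the current Cisco CVD and Pure compatibility matrix.
SUPPORTED_ARRAYS = {"//X", "//XL"}
SUPPORTED_PROTOCOLS = {"nvme-tcp"}
SUPPORTED_UCS_FAMILIES = {"X", "C"}
SUPPORTED_UCS_GENERATIONS = {"M6", "M7"}


@dataclass(frozen=True)
class Design:
    array_model: str     # e.g. "//X", "//XL", "//C"
    protocol: str        # e.g. "nvme-tcp", "iscsi", "fc"
    ucs_family: str      # "X", "C", or "B"
    ucs_generation: str  # e.g. "M5", "M6", "M7"


def preflight(d: Design) -> tuple[list[str], list[str]]:
    """Return (blockers, warnings) for a proposed design."""
    blockers, warnings = [], []
    if d.array_model not in SUPPORTED_ARRAYS:
        blockers.append(f"FlashArray {d.array_model} is not supported")
    if d.protocol not in SUPPORTED_PROTOCOLS:
        blockers.append(f"{d.protocol} is not supported: NVMe/TCP only, no iSCSI/FC fallback")
    if d.ucs_family not in SUPPORTED_UCS_FAMILIES:
        blockers.append(f"UCS {d.ucs_family}-Series is not supported")
    if d.ucs_generation not in SUPPORTED_UCS_GENERATIONS:
        blockers.append(f"UCS {d.ucs_generation} is not supported")
    if d.ucs_family == "C" and not blockers:
        # Only *select* C-Series models are validated; these lists cannot tell which ones.
        warnings.append("verify this specific C-Series model against the CVD")
    return blockers, warnings


if __name__ == "__main__":
    print(preflight(Design("//X", "nvme-tcp", "X", "M7")))  # ([], [])
    print(preflight(Design("//C", "iscsi", "B", "M5")))     # four blockers, no warnings
```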

3. The Re-Foundation Reality (The Big One)

This is the detail that gets people fired. You cannot take an existing Nutanix HCI cluster (running local disks) and “just add” a FlashArray to it. The architecture is fundamentally different.

- Requirement: No in-place conversion.

- Process: Evacuate all workloads, then re-run Foundation to rebuild the nodes as compute-only hosts attached to the FlashArray.

- Impact: If you were expecting a simple Tuesday-afternoon upgrade, think again: this is a full migration project.

The Licensing War: Nutanix vs. Broadcom vs. Proxmox

The hardware is interesting, but licensing is where the real war is being fought. This stack is a direct response to the “Broadcom Tax.”

1. The Nutanix Play (NCI-C)

Nutanix introduced NCI-C (Nutanix Cloud Infrastructure – Compute) licensing.

- The Logic: Since you aren’t using Nutanix’s software-defined storage (AOS) to manage local disks, why should you pay for it?

- The Win: You pay a lower license cost per core because the storage heavy lifting is offloaded to Pure. You are paying Nutanix strictly for the Hypervisor (AHV) and the Management Plane (Prism).

2. The Broadcom/VMware Trap

Compare this to VMware Cloud Foundation (VCF).

- The Trap: Broadcom often bundles vSAN entitlements into VCF whether you use them or not. Even if you run external storage, you are often paying a “per-core” price that assumes full-stack value.

- The Result: You pay the “HCI Tax” in licensing, even if you run 3-tier hardware.

3. The Proxmox “DIY” Foil

Then there is the Proxmox angle we discussed in our last research note.

- The Reality: Proxmox allows the same disaggregation (compute nodes plus external Ceph/NFS/iSCSI storage) with no mandatory license fee; support subscriptions are optional.

- The Trade-off: You lose the “Single Pane of Glass.” In the Nutanix/Pure stack, Prism manages the storage. In Proxmox, you manage compute in Proxmox and storage in the array’s own interface, separately.

- The Verdict: Nutanix + Pure is the “Enterprise” version of the Proxmox freedom. You pay for the integration so you don’t have to be the integrator.

Verdict: Who Is This For?

This architecture is not for everyone. It is a scalpel, not a Swiss Army Knife.

Use This Archetype If:

- ✅ You are a “Greenfield” Deployment: You are building a new cluster and have the budget for Pure //X and UCS M7.

- ✅ You have “Lopsided” Workloads: Massive SQL databases (High Storage / Low Compute) or heavy VDI (High Compute / Low Storage).

- ✅ You are exiting VMware: You want vCenter-style simplicity (which Prism provides) but are tired of the vTax, and you trust Cisco hardware.

Stay Away If:

- ❌ You have existing Nutanix Clusters: The re-foundation effort will destroy your ROI unless you are doing a hardware refresh anyway.

- ❌ You use “Cheap and Deep” Storage: If you need FlashArray //C or //E economics, this integration doesn’t support it yet.

- ❌ You are a small shop: If you have 3 nodes, just buy standard HCI. The complexity of NVMe/TCP networking isn’t worth it until you hit scale.
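The two checklists can be folded into a rough screening function. It is a sketch, not a sizing tool: the field names are hypothetical, and MIN_NODES is an assumed cut-off, because the article only says that 3 nodes is too small without naming a threshold.

```python
from dataclasses import dataclass

# HYPOTHETICAL cut-off: the article says a 3-node shop is too small but names no threshold.
MIN_NODES = 4


@dataclass(frozen=True)
class Environment:
    nodes: int
    greenfield: bool
    refresh_planned: bool       # replacing the hardware anyway?
    needs_capacity_flash: bool  # requires //C or //E economics
    lopsided_workloads: bool
    exiting_vmware: bool


def verdict(env: Environment) -> tuple[bool, list[str], list[str]]:
    """Return (good_fit, cons, pros) using the 'Use If' and 'Stay Away If' lists."""
    cons, pros = [], []
    if not env.greenfield and not env.refresh_planned:
        cons.append("existing cluster with no refresh: re-foundation erodes ROI")
    if env.needs_capacity_flash:
        cons.append("needs //C or //E economics: not supported yet")
    if env.nodes < MIN_NODES:
        cons.append("too small: standard HCI is simpler at this scale")
    if env.greenfield:
        pros.append("greenfield build")
    if env.lopsided_workloads:
        pros.append("lopsided compute/storage ratio")
    if env.exiting_vmware:
        pros.append("VMware exit")
    # Any 'stay away' item vetoes; otherwise at least one 'use if' item must apply.
    return (not cons and bool(pros)), cons, pros


if __name__ == "__main__":
    small = Environment(nodes=3, greenfield=True, refresh_planned=False,
                        needs_capacity_flash=False, lopsided_workloads=True, exiting_vmware=False)
    print(verdict(small)[0])  # False: too small
```

Treating any “stay away” item as a veto mirrors how the lists are written: a perfect workload fit does not rescue a design that needs //C economics.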

Final Thought: We broke the HCI Tax, but we didn’t break the laws of physics. You still have to manage a network fabric, you still have to buy a storage array, and you still have to pay for licenses. But for the first time in a decade, you can finally choose which tax you want to pay.

Additional Resources (Verify Our Work)

Don’t just take our word for it. Here are the official validated design guides from the vendors:

- Cisco: FlashStack with Nutanix Design Guide (CVD)

- Pure Storage: FlashArray + Nutanix Solution Brief

- Nutanix: NCI-C (Compute Only) Licensing FAQ

// RACK2CLOUD ARCHITECTURAL INTEGRITY

Verified by Humans, Not Just Algorithms. This analysis is based on validated reference architectures (CVDs) and verified vendor compatibility matrices as of January 2026.

- No Vendor Sponsorship: Rack2Cloud does not accept payment for architectural reviews.

- Conflict of Interest: None. We are not a VAR.

- Validation Status: Green. (Confirmed against Cisco CVD & Pure Compatibility Matrix).

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.