Nutanix AHV vs. vSAN 8 ESA: The I/O Saturation Benchmark

This strategic advisory has passed the Rack2Cloud 3-Stage Vetting Process: Market-Analyzed, TCO-Modeled, and Contract-Anchored. No vendor marketing influence. See our Editorial Guidelines.

TARGET SCOPE: Nutanix AHV | vSAN 8 ESA

Status: Lab In-Progress (Crowdfunded Phase)

Objective: Measure latency jitter, queue depth backpressure, and application stability under 100% write buffer saturation.

Why This Benchmark Exists (The Problem)

If you ask a VMware rep for storage benchmarks, they will show you a slide where vSAN wins. If you ask a Nutanix rep, they will show you a slide where AHV wins.

Both are telling the truth—because both tuned the test to their architecture’s specific strengths.

At Rack2Cloud, we don’t care about “Peak IOPS.” That is a vanity metric. We care about the moments that actually break production systems:

- Patch Tuesday login storms.

- Backup windows colliding with business hours.

- Ransomware recovery write storms.

- Database commit spikes during month-end processing.

These are not read-heavy events. These are write-saturation events. This benchmark answers one specific architectural question:

What happens to vSAN ESA and Nutanix AHV latency when the write buffer is completely full? Does the system gracefully throttle (Quality of Service)? Or does it fall off a cliff (Latency Collapse)?

The Rack2Cloud “Clean Room” Protocol

We operate under a specific testing doctrine to eliminate vendor gaming:

- No Vendor Tuning: Default policies only.

- No Cache Warming: Tests run cold to simulate sudden bursts.

- No “Hero” Workloads: No 4K 100% Read tests allowed.

- Full Transparency: We publish the FIO configs and raw logs.

Editor’s Note: The NVMe drives and networking for this test are being procured directly through the Rack2Cloud Lab Fund (see footer). This data belongs to the community, not a vendor.

Project Thunderdome: Lab Architecture

To isolate the software performance, we removed hardware variables entirely. Both hypervisors run on identical metal.

| Component | Specification |
| --- | --- |
| Nodes | 4x Dell PowerEdge R750 (2U) |
| CPU | Dual Intel Xeon Gold 6348 (28c/56t) |
| Memory | 512GB DDR4 ECC (Per Node) |
| Network | 100GbE (Mellanox ConnectX-6 Dx) |
| Storage (Media) | 4x 3.84TB Micron 7400 Pro NVMe Gen4 (Per Node) |
| Total Raw Capacity | 61.44 TB |
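
The raw capacity figure follows directly from the node and drive counts in the table. A minimal sketch of that arithmetic (the function name is ours, for illustration only; this is raw capacity before any replication or erasure-coding overhead):

```python
# Raw capacity = nodes x drives per node x drive size.
# Values are taken from the lab table; the helper name is illustrative.
NODES = 4
DRIVES_PER_NODE = 4
DRIVE_TB = 3.84


def raw_capacity_tb(nodes, drives_per_node, drive_tb):
    """Return total raw capacity in TB, rounded to two decimals."""
    return round(nodes * drives_per_node * drive_tb, 2)


print(raw_capacity_tb(NODES, DRIVES_PER_NODE, DRIVE_TB))
```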

The Contestants

- Red Corner: VMware vSAN 8 (ESA – Express Storage Architecture)

- Blue Corner: Nutanix AHV (AOS 6.7 – Block Store)

The Physics: Write Path Architecture

Before analyzing the results, engineers must understand the difference in the Write Path Pipeline. This is where the latency comes from.

vSAN 8 ESA Write Path (Log-Structured)

vSAN ESA removes the legacy disk groups and writes closer to the metal.

- Ingest: Writes are acknowledged into a “Performance Leg” (RAID-1 Mirror) on the NVMe drive.

- Processing: Compression and Erasure Coding are applied.

- Destage: Data is moved to the “Capacity Leg.”

- The Risk: When the “Performance Leg” fills up, the system must pause ingest to flush data. This creates Backpressure.
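
The backpressure risk above comes down to simple rate arithmetic: a buffer fills whenever ingest outpaces destaging. The sketch below is a back-of-envelope model, not vSAN's actual scheduler; the buffer size and rates are hypothetical placeholders, not measured values.

```python
def seconds_until_backpressure(buffer_gb, ingest_gb_per_s, destage_gb_per_s):
    """Estimate how long a write buffer can absorb a burst.

    Returns the number of seconds until the buffer is full, or None
    when destaging keeps up with ingest and the buffer never fills.
    All inputs are hypothetical; plug in your own measurements.
    """
    net_fill_rate = ingest_gb_per_s - destage_gb_per_s
    if net_fill_rate <= 0:
        return None  # destage keeps pace, no backpressure expected
    return buffer_gb / net_fill_rate


# Example with made-up numbers: 100 GB buffer, 5 GB/s in, 3 GB/s out.
print(seconds_until_backpressure(100, 5, 3))
```

The takeaway is that burst tolerance depends on the gap between ingest and destage rates, which is why a multi-hour test duration matters more than a short peak measurement.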

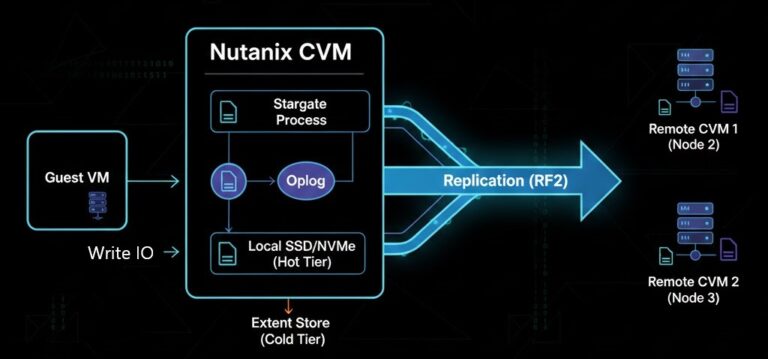

Nutanix AOS Write Path (Distributed Extent Store)

Nutanix uses a Controller VM (CVM) to manage the Oplog.

- Ingest: Writes hit the Oplog (Persistent Write Buffer) and are replicated.

- Locality: Uniquely, Nutanix prioritizes keeping the write on the local node’s NVMe drive to avoid network traversal.

- The Risk: The CVM consumes CPU. Under load, does the CVM become a bottleneck before the disk does?

Workload Modeling: Why 70/30?

We explicitly reject “Hero Numbers” (100% Read). Instead, we simulate a “Dirty Database” profile:

- Block Size: 8K / 32K Mixed

- R/W Ratio: 70% Read / 30% Write

- Duration: 4 Hours (Critical for forcing destaging)

This profile models real-world SQL/Oracle OLTP and VDI Login Storms where the system cannot simply serve from RAM; it must commit to disk.
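
As an illustration of how this profile could be expressed for fio, the sketch below generates a job file from Python. It is not the published Rack2Cloud config. The 50/50 split between 8K and 32K blocks, random access, the `libaio` engine (which assumes a Linux guest), the queue depth, the job count, and the target path are all our assumptions; only the 70/30 ratio, the two block sizes, and the 4-hour duration come from the profile above.

```python
def build_fio_job(target, runtime_s=4 * 3600, iodepth=32, numjobs=4):
    """Return fio job-file text approximating the 'Dirty Database' profile.

    target, iodepth, and numjobs are hypothetical; adjust for your lab.
    """
    lines = [
        "[global]",
        "ioengine=libaio",       # assumption: Linux guest
        "direct=1",              # bypass the guest page cache
        "time_based",            # run for the full duration
        f"runtime={runtime_s}",  # seconds; 4 hours by default
        "group_reporting",
        "",
        "[dirty-database]",
        f"filename={target}",
        "rw=randrw",             # assumption: random mixed I/O
        "rwmixread=70",          # 70% read / 30% write
        "bssplit=8k/50:32k/50",  # assumption: even 8K/32K mix
        f"iodepth={iodepth}",
        f"numjobs={numjobs}",
    ]
    return "\n".join(lines) + "\n"


print(build_fio_job("/dev/sdb"))
```

Writing the output to a file and passing it to `fio` would run the workload against the target device, so point it only at a disk whose contents you can destroy.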

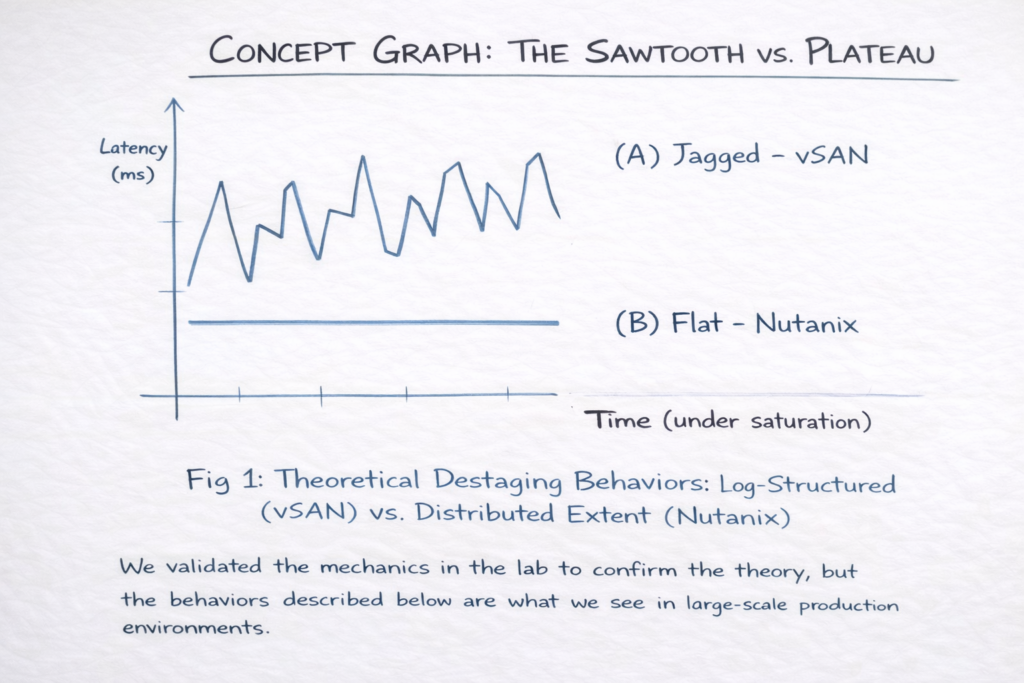

Field Observations: The “Sawtooth” vs. The “Plateau”

Based on aggregated field data from Tier-1 production deployments, here is the behavior profile for vSAN ESA vs. Nutanix AHV under saturation.

vSAN ESA Behavior: “The Sawtooth”

vSAN maximizes throughput at the cost of consistency.

- The Behavior: Writes are accepted aggressively until the buffer fills. Then, a “Destage Event” occurs.

- The Symptom: You see a massive spike in IOPS, followed by a sharp drop and a latency spike (p99 > 50ms) while the system “catches its breath.”

- The Result: High average speed, but high Jitter Amplitude.

Nutanix Behavior: “The Plateau”

Nutanix prioritizes consistency at the cost of peak throughput.

- The Behavior: The CVM detects backend pressure early and throttles the ingest before the buffer fills.

- The Symptom: Instead of a “Sawtooth,” you see a flat line. IOPS are lower than vSAN, but latency remains flat (3-5ms).

- The Result: Lower top speed, but minimal Jitter.
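
The two behavior profiles can be reproduced with a toy buffer model. This is a deliberately simplified sketch, not either vendor's algorithm: the "burst" policy accepts writes at full rate until the buffer is full and then stalls until it drains, while the "throttle" policy caps ingest at the destage rate once a fill threshold is crossed. Every parameter (capacity, rates, thresholds) is a hypothetical unitless value.

```python
import statistics


def simulate_ingest(policy, steps=200, capacity=100.0, offered=10.0,
                    destage=6.0, resume_at=0.5, throttle_at=0.7):
    """Return the write rate accepted at each step of a toy buffer model."""
    fill = 0.0
    stalled = False
    accepted = []
    for _ in range(steps):
        if policy == "burst":
            # Accept everything until full, then stall until half drained.
            if fill >= capacity:
                stalled = True
            elif fill <= capacity * resume_at:
                stalled = False
            rate = 0.0 if stalled else offered
        elif policy == "throttle":
            # Slow ingest to the destage rate before the buffer fills.
            rate = offered if fill < capacity * throttle_at else destage
        else:
            raise ValueError(f"unknown policy: {policy}")
        fill = min(capacity, max(0.0, fill + rate - destage))
        accepted.append(rate)
    return accepted


burst = simulate_ingest("burst")
throttle = simulate_ingest("throttle")
# Skip the warm-up steps and compare variability of accepted throughput.
print("burst tail stdev:   ", statistics.pstdev(burst[50:]))
print("throttle tail stdev:", statistics.pstdev(throttle[50:]))
```

In this model the burst policy oscillates between full rate and zero (the sawtooth), while the throttled policy settles at the destage rate (the plateau). The model tracks accepted throughput only; real latency depends on queueing effects it does not capture.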

Operational Impact Analysis

Engineers care about Latency Jitter (Variance), not Average Latency. A system that averages 2ms but spikes to 100ms kills VDI sessions.
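
To make the point concrete, the sketch below compares two synthetic latency traces with the same 2ms average. The sample values are invented for illustration and are not benchmark results; the percentile uses the simple nearest-rank method.

```python
import statistics


def latency_profile(samples_ms):
    """Summarize a latency trace: mean, p99 (nearest rank), max, stdev."""
    ordered = sorted(samples_ms)
    n = len(ordered)

    def percentile(p):
        # Nearest-rank percentile using integer math: ceil(p * n / 100).
        rank = max(1, (p * n + 99) // 100)
        return ordered[rank - 1]

    return {
        "mean": statistics.fmean(ordered),
        "p99": percentile(99),
        "max": ordered[-1],
        "stdev": statistics.pstdev(ordered),
    }


# Synthetic traces (made-up values): both average exactly 2.0 ms.
steady = [2.0] * 1000
spiky = [1.0] * 980 + [51.0] * 20

print("steady:", latency_profile(steady))
print("spiky: ", latency_profile(spiky))
```

Both traces report the same mean, but the spiky trace has a p99 of 51ms against 2ms for the steady one. That gap is what an average-only dashboard hides.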

| Workload Scenario | vSAN ESA Experience | Nutanix AOS Experience |
| --- | --- | --- |
| VDI Login Storm | Periodic screen freezes (Cursor lag) | Slower login, but smooth desktop |
| Database Commits | High throughput, occasional p99 timeouts | Predictable commit times |
| Backup Ingest | Fastest completion time (Burstable) | Steady, linear ingest rate |
| Ransomware Recovery | Stall cycles during massive writes | Continuous, throttled rebuild |

The Verdict: There is No “Best,” Only “Fit”

Choose vSAN 8 ESA if:

- You run massive Oracle/SQL Databases that demand raw, unbridled throughput.

- You have a 100GbE+ Backbone to support the cross-node erasure coding traffic.

- Your goal is Maximum Speed and you can tolerate backend write amplification.

Choose Nutanix AHV if:

- You run VDI (Omnissa/Citrix), General Virtualization, or Multi-Tenant workloads.

- You value Consistency over Peak Speed. (Users hate “jitter” more than they love “fast.”)

- You want Data Locality to minimize East-West network traffic on your switch fabric.

Field Notes: Frequently Asked Questions

Q: Is vSAN 8 ESA faster than Nutanix AHV?

A: In terms of Peak IOPS, vSAN 8 ESA is typically faster because its I/O path has no Controller VM (CVM) intermediary and writes land directly on a RAID-1 mirror on NVMe. However, under heavy write saturation, vSAN can exhibit higher latency variance (“jitter”) during destaging events compared to Nutanix.

Q: Which hypervisor is better for VDI (Omnissa/Citrix)?

A: Nutanix AHV is generally preferred for VDI due to Data Locality. By keeping as much of the VM’s read/write path as possible on the local node, it reduces “East-West” network congestion, giving a smoother user experience (no mouse lag) even during login storms.

Q: What happens when the vSAN write buffer fills up?

A: vSAN ESA uses a “Log-Structured” file system. When the performance leg fills, it must pause ingestion to compress and destage data to the capacity leg. This creates a “Sawtooth” performance pattern—periods of high speed followed by sharp latency spikes.

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.