Proxmox isn’t “Free” vSphere: The Hidden Physics of ZFS and Ceph

Key Takeaways

This strategic advisory has passed the Rack2Cloud 3-Stage Vetting Process: Market-Analyzed, TCO-Modeled, and Contract-Anchored. No vendor marketing influence. See our Editorial Guidelines.

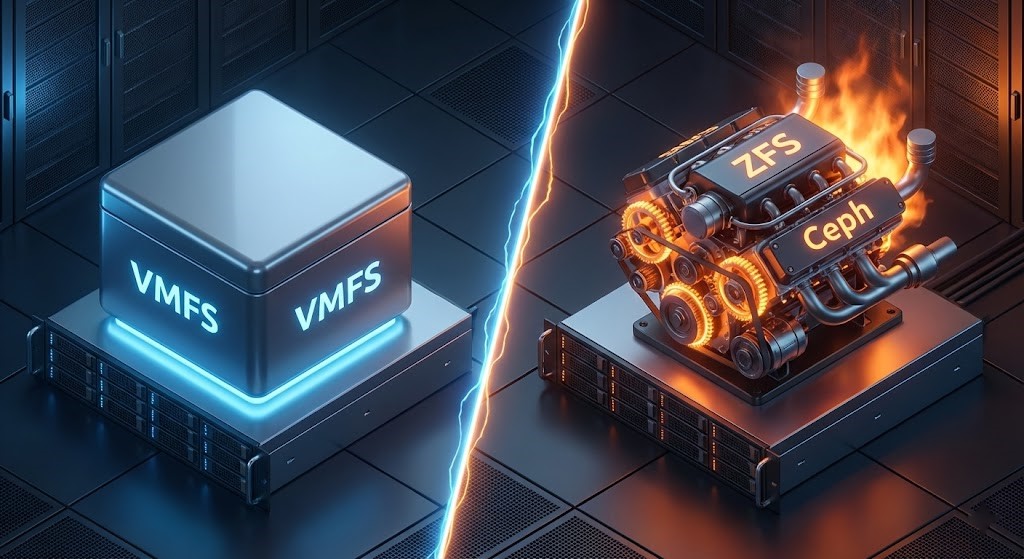

- The Philosophy Shift: Moving to Proxmox is not a hypervisor swap; it is a storage philosophy change. VMFS abstracted physics; ZFS and Ceph expose them.

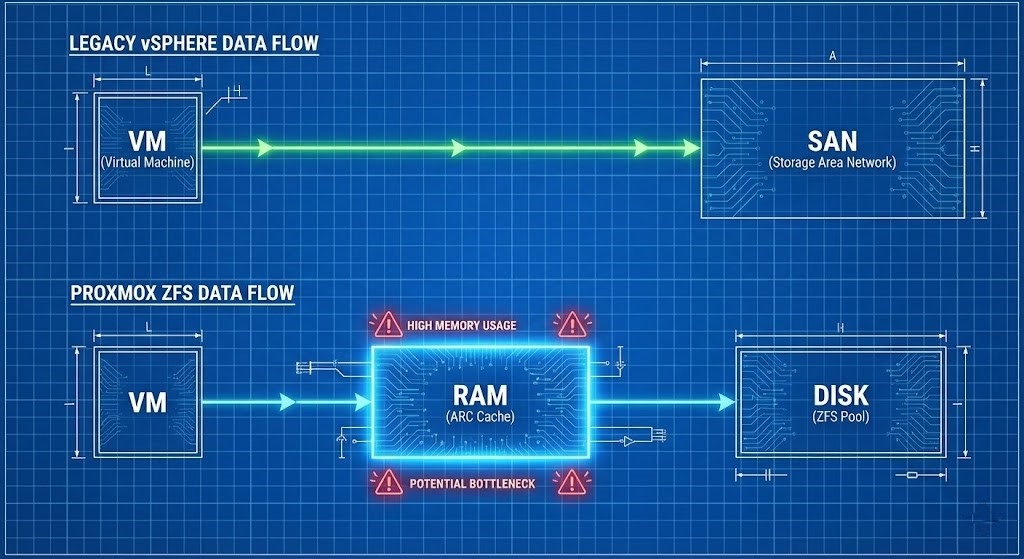

- The ZFS “RAM Tax”: ZFS delivers data integrity, but an untuned ARC will claim a large share of host RAM (around half of system memory on the long-standing OpenZFS-on-Linux default), causing “phantom” OOM crashes on right-sized VMs.

- The Ceph “Network Floor”: Ceph treats 10GbE dedicated to storage as a floor, not a luxury. Running HCI on 1GbE is a recipe for a “cluster freeze” during a rebuild.

- The Migration Trap: Using `qemu-img` without checking for block alignment or snapshot debt will silently cut your IOPS in half.

Broadcom’s acquisition of VMware forced thousands of teams to ask a dangerous question: “Why not just move everything to Proxmox? It’s free.”

On paper, Proxmox VE (PVE) is the perfect escape hatch. It is open-source, capable, and battle-tested. Management hears “free hypervisor” and assumes the migration is a simple file transfer. A VM is a VM, right?

That assumption is how storage outages happen.

vSphere spent 15+ years shielding admins from storage physics. VMFS (VMware File System) hid the ugly details—locking, block alignment, multipathing, and write amplification—behind a single, magical abstraction.

Proxmox does not coddle you. It hands you the raw tools—ZFS, Ceph, or NFS—and expects you to understand the tradeoffs.

- If you treat ZFS like VMFS, your databases will crawl.

- If you run Ceph on 1GbE, your cluster will freeze during a rebuild.

- If you ignore block alignment, your IOPS will silently drop by 50%.

VMware trained you to think in “datastores.” Proxmox forces you to think in IO paths, latency domains, and failure behavior.

The “VMFS Hangover”

In the vSphere era, storage was conceptually simple: you carved a LUN, formatted it as VMFS, and every host saw it. vMotion and HA “just worked.” The SAN controller handled the heavy lifting of RAID, caching, and tiering.

Proxmox does not ship with a VMFS-style clustered filesystem that magically abstracts locks, metadata, and shared access. You must choose a side:

| Option | What You Get | What You Lose |
| --- | --- | --- |
| Local ZFS | Exceptional performance, data integrity, simplicity. | No shared storage (no live migration without replication). |
| Ceph (RBD) | True HCI, shared storage, HA, live migration. | Requires operational maturity and a massive network. |
| NFS / iSCSI | Familiar “Legacy” SAN model. | Loses the “Hyperconverged” value proposition. |

There is no “default safe path.” Each option encodes a new set of risks.

The ZFS Trap: The “RAM Eater”

ZFS is a Copy-on-Write (CoW) filesystem. It offers data integrity that VMFS can only dream of (end-to-end checksumming), but it pays for that with RAM.

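The ARC: The Cache That Will Eat Your Host

By default, ZFS aggressively consumes RAM for its Adaptive Replacement Cache (ARC). With the long-standing OpenZFS default on Linux, the ARC is allowed to grow to 50% of physical memory to speed up reads. Installs created with the Proxmox VE 8.1 or later installer ship with a lower cap (10% of RAM, at most 16 GiB), but hosts upgraded from older releases or built by hand keep whatever limit they already had.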

War Story: We recently audited a failed migration where a team moved a 64GB SQL VM onto a host with 128GB of RAM. They assumed they had plenty of headroom.

The VM started swapping and eventually crashed. Why? ZFS had silently consumed ~64GB for ARC. The ARC and the database were competing for the same physical RAM, with nothing left over for the hypervisor itself.

The Fix: Do not let ZFS guess. Explicitly cap the ARC size. As a starting point, many shops cap ARC to roughly 25–30% of host RAM on mixed workloads, then tune up or down based on real hit ratios.
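As a rough sketch of the arithmetic behind that cap: `zfs_arc_max` is the OpenZFS module parameter (a value in bytes), and `/etc/modprobe.d/zfs.conf` is the usual place to persist it on a Proxmox host. The helper names, the 25% fraction, and the 128 GiB host below are illustrative assumptions, not a recommendation for your workload.

```python
GIB = 1024 ** 3  # bytes per GiB


def arc_max_bytes(host_ram_gib: float, fraction: float = 0.25) -> int:
    """Return an ARC cap in bytes for a given share of host RAM."""
    if not 0 < fraction < 1:
        raise ValueError("fraction must be between 0 and 1")
    return int(host_ram_gib * GIB * fraction)


def modprobe_line(host_ram_gib: float, fraction: float = 0.25) -> str:
    """Build the line that would go into /etc/modprobe.d/zfs.conf."""
    return f"options zfs zfs_arc_max={arc_max_bytes(host_ram_gib, fraction)}"


if __name__ == "__main__":
    # Hypothetical 128 GiB host capped at 25% (32 GiB of ARC).
    print(modprobe_line(128, 0.25))
```

The same byte value can also be written to `/sys/module/zfs/parameters/zfs_arc_max` to apply the cap at runtime. If the host boots from ZFS, the modprobe change typically needs an `update-initramfs -u` before it survives a reboot.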

The “Write Cliff”

Because ZFS is CoW, every overwrite becomes a new block allocation plus a metadata update. The result is write amplification. If you run sync-heavy workloads on consumer-grade QLC SSDs without a dedicated SLOG device for the ZIL (ZFS Intent Log), you can burn through the drive’s endurance in months, not years. You will also hit a “Write Cliff” once the drive’s fast write cache is exhausted, where latency jumps from around 1ms to 200ms.
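To see why “months, not years” is plausible, here is a back-of-the-envelope endurance estimate. It is only a sketch: the TBW rating, daily write volume, and amplification factor are made-up inputs, and real amplification depends on record size, sync behavior, and pool layout.

```python
def drive_lifetime_years(
    tbw_rating_tb: float,
    guest_writes_tb_per_day: float,
    write_amplification: float,
) -> float:
    """Estimate years until the drive's rated endurance (TBW) is used up.

    write_amplification is the assumed ratio of data written to the
    device versus data the guests asked to write.
    """
    device_writes_per_day = guest_writes_tb_per_day * write_amplification
    return tbw_rating_tb / device_writes_per_day / 365


if __name__ == "__main__":
    # Hypothetical consumer drive rated for 360 TBW, guests writing 0.5 TB/day.
    for wa in (1, 2, 4):
        years = drive_lifetime_years(360, 0.5, wa)
        print(f"amplification {wa}x -> about {years:.2f} years")
```

With these illustrative numbers, a 4x amplification factor shrinks the drive's life from roughly two years to about six months.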

The Ceph Trap: The “Network Killer”

Ceph is distributed object storage magic, but it is fundamentally constrained by network latency and bandwidth. Every write involves a placement calculation (CRUSH) and synchronous replication across the network: the primary OSD acknowledges a write only after the replica OSDs have committed it. Ceph does not care how fast your CPUs are if your network is slow.

The “10GbE Lie”

Can you run Ceph on 1GbE? Technically, yes. Operationally, no.

When a drive fails, Ceph starts recovery. It copies terabytes of data across the network to restore redundancy. On a 1GbE link, that recovery traffic saturates the network and starves client IO.

- Result: Your VMs freeze. The cluster feels “down” even though it is technically “up.”

- The Rule: Treat 10GbE, dedicated to Ceph, as the absolute floor. 25GbE is the new normal if you plan to survive rebuilds without user-visible pain.
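The rule above follows from simple transfer arithmetic. The sketch below estimates the best-case time to move a given amount of data over a single link. The 4 TB figure is an illustrative assumption, and the model ignores protocol overhead, recovery throttling, and the fact that real recovery is spread across several nodes.

```python
def transfer_hours(data_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Best-case hours to move data_tb terabytes over a link_gbps link.

    efficiency is the assumed usable share of line rate (1.0 = perfect).
    """
    bits = data_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    # Hypothetical failed OSD holding 4 TB of data.
    for gbps in (1, 10, 25):
        print(f"{gbps:>2} GbE: {transfer_hours(4, gbps):.2f} h at line rate")
```

Even in this idealized model, 4 TB takes close to nine hours on 1GbE, under an hour on 10GbE, and about twenty minutes on 25GbE. On a shared link, client IO competes with that traffic for the whole window.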

The “2-Node Fantasy”

We see this constantly: “I’ll just buy two beefy servers and run Ceph.” Ceph monitors need a majority to form quorum, and two nodes cannot provide one once either node fails. A 2-node cluster is not High Availability; it is a split-brain generator. Even if you add a ‘Witness’ or Tie-Breaker vote, the failure state is grim: every read and write lands on the single surviving node, and there is no third node to re-replicate onto. You need 3 nodes minimum (and realistically 5+) for operational safety.
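The quorum math is short enough to write down. This sketch assumes one monitor vote per node and a strict-majority rule; the function names are illustrative.

```python
def monitor_quorum(voters: int) -> int:
    """Smallest strict majority of the given number of voters."""
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    """How many voters can fail while a majority still exists."""
    return voters - monitor_quorum(voters)


if __name__ == "__main__":
    for n in (2, 3, 5):
        print(f"{n} voters: quorum={monitor_quorum(n)}, "
              f"tolerated failures={tolerated_failures(n)}")
```

Two voters tolerate zero failures, three tolerate one, and five tolerate two, which is why three is the floor and five is the comfortable choice.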

The Migration Trap: Block Alignment

This is where most migrations silently fail. The standard tool, `qemu-img convert`, converts your .vmdk files to the .qcow2 or .raw formats that Proxmox uses.

The Risk: If the blocks are not aligned properly during conversion (especially when moving from legacy 512-byte sectors to 4K sectors), you get sector misalignment.

- The Consequence: A misaligned logical write straddles two physical sectors, so one logical write turns into two physical operations, each a Read-Modify-Write.

- The Symptom: Your IOPS are cut in half, but you see no errors. You just think “Proxmox is slow.”
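The misalignment penalty can be illustrated with a few lines of arithmetic. This is a minimal sketch assuming 512-byte logical sectors on 4096-byte physical sectors; start sector 63 is the classic legacy partition offset and 2048 is the common modern one. The function names are illustrative.

```python
def is_aligned(start_lba: int, logical_sector: int = 512, physical_sector: int = 4096) -> bool:
    """True if a partition starting at start_lba begins on a physical sector boundary."""
    return (start_lba * logical_sector) % physical_sector == 0


def physical_sectors_touched(offset_bytes: int, length_bytes: int, physical_sector: int = 4096) -> int:
    """Count the physical sectors a write of length_bytes at offset_bytes overlaps."""
    first = offset_bytes // physical_sector
    last = (offset_bytes + length_bytes - 1) // physical_sector
    return last - first + 1


if __name__ == "__main__":
    for start_lba in (63, 2048):
        offset = start_lba * 512  # first byte of the partition on disk
        print(f"start LBA {start_lba}: aligned={is_aligned(start_lba)}, "
              f"4 KiB write touches {physical_sectors_touched(offset, 4096)} physical sector(s)")
```

A 4 KiB write at the start of a partition beginning at sector 63 overlaps two physical sectors, while the same write on a partition beginning at sector 2048 overlaps one.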

The Solution: Audit your VMs before you move them.

- Check for Snapshots: Moving a VM with a 2-year-old snapshot chain onto a CoW filesystem like ZFS is a performance death sentence. Use the HCI Migration Advisor to flag “Dirty Data” before you start `qemu-img`.

- Align the Disk: Use `virt-v2v` or careful `qemu-img` flags to ensure 4k alignment.

Validate the Result: Run the same simple fio test on a freshly created Proxmox-native disk and on a migrated disk. If latency doubles with the same test pattern, you likely have an alignment or stack problem—not a “Proxmox is slow” problem.
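One way to make that comparison repeatable is to run fio with `--output-format=json` on both disks and compare mean write latency. The sketch below assumes the JSON layout used by fio 3.x (`jobs[0].write.clat_ns.mean`); the file names and the 2x threshold are hypothetical.

```python
import json


def load_fio_json(path: str) -> dict:
    """Load a result file produced by fio --output-format=json."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def write_latency_ms(fio_result: dict) -> float:
    """Mean write completion latency of the first job, in milliseconds."""
    job = fio_result["jobs"][0]
    return job["write"]["clat_ns"]["mean"] / 1e6


def latency_ratio(native: dict, migrated: dict) -> float:
    """How many times slower the migrated disk is than the native one."""
    return write_latency_ms(migrated) / write_latency_ms(native)


if __name__ == "__main__":
    # Hypothetical result files from identical fio runs on each disk.
    native = load_fio_json("native-disk.json")
    migrated = load_fio_json("migrated-disk.json")
    ratio = latency_ratio(native, migrated)
    verdict = "investigate alignment" if ratio >= 2.0 else "looks comparable"
    print(f"migrated/native latency ratio: {ratio:.2f} ({verdict})")
```

Keeping the job file identical between the two runs matters more than the exact parameters; the ratio is only meaningful if block size, queue depth, and sync settings match.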

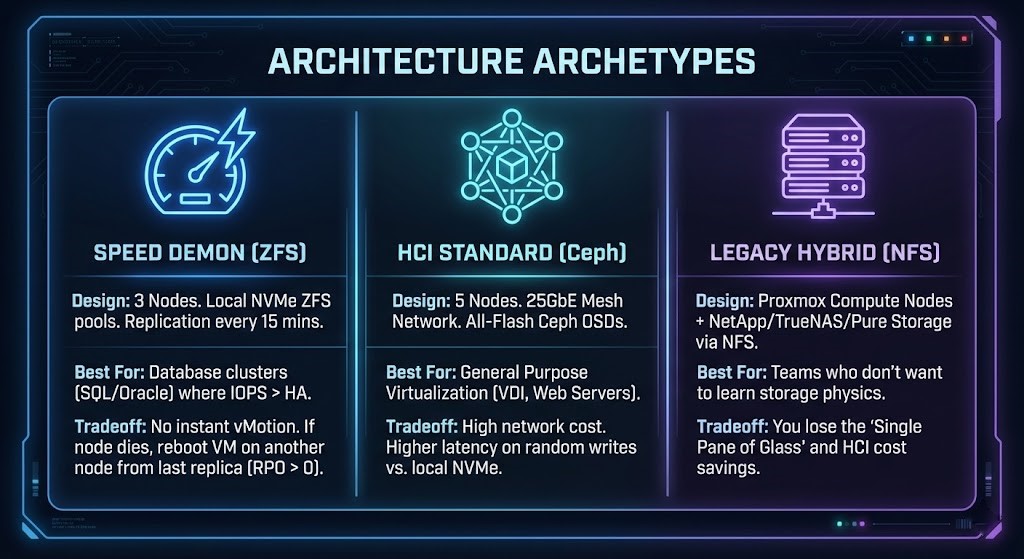

Architecture Archetypes: Which One Are You?

To survive the move, pick one of these valid patterns. Do not invent your own.

Archetype A: The “Speed Demon” (Local ZFS)

- Design: 3 Nodes. Local NVMe ZFS pools. Replication every 15 mins.

- Best For: Database clusters (SQL/Oracle) where IOPS matter more than HA.

- Tradeoff: No instant vMotion. If a node dies, you reboot the VM on another node from the last replica (RPO > 0).

Archetype B: The “HCI Standard” (Ceph)

- Design: 5 Nodes. 25GbE Mesh Network. All-Flash Ceph OSDs.

- Best For: General Purpose Virtualization (VDI, Web Servers).

- Tradeoff: High network cost. Higher latency on random writes compared to local NVMe.

Archetype C: The “Legacy Hybrid” (External NFS)

- Design: Proxmox Compute Nodes + NetApp/TrueNAS/Pure Storage via NFS.

- Best For: Teams that want Proxmox at the hypervisor layer but are not ready to operate distributed storage yet.

- Tradeoff: You lose the “Single Pane of Glass” and HCI cost savings.

Conclusion: Pick Your Poison Intentionally

Proxmox is enterprise-ready when you respect the physics. It is not a “black box” like vSphere.

- If you want “Set and Forget,” buy a SAN.

- If you want “Performance,” tune ZFS and buy RAM.

- If you want “HCI,” build a 25GbE network and commit to Ceph.

Next Steps: Before you migrate a single VM, profile your current IOPS and “Dirty Data.”

Additional Resources:

- HCI Migration Advisor: Audit for snapshots and zombies.

- Egress Calculator: If you are replicating ZFS over the WAN, model the bandwidth cost first.

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.