The 2-Node Trap: Why Your Proxmox “HA” Will Fail When You Need It Most (and How to Fix It)

This technical deep-dive has passed the Rack2Cloud 3-Stage Vetting Process: Lab-Validated, Peer-Challenged, and Document-Anchored. No vendor marketing influence. See our Editorial Guidelines.

I built my first Proxmox cluster on a Friday night. Two beefy nodes. Shared storage. HA enabled. I shut the laptop feeling smug—I had just replaced a six-figure VMware stack with two commodity servers and some Linux magic.

Saturday morning, a power blip hit the rack. Both nodes came back online. No VMs came back.

Three hours later, after console spelunking, log archaeology, and a crash course in Corosync internals, I learned the most expensive free lesson of my career: Two nodes ≠ High Availability. They equal a mathematically guaranteed outage when one fails.

This isn’t just a “homelab” problem. I’ve seen this exact failure pattern in 2-node retail edge clusters, “temporary” production clusters that somehow became permanent, and even 6-node enterprise clusters deployed in isolated pairs.

Let’s fix it—properly.

Key Takeaways

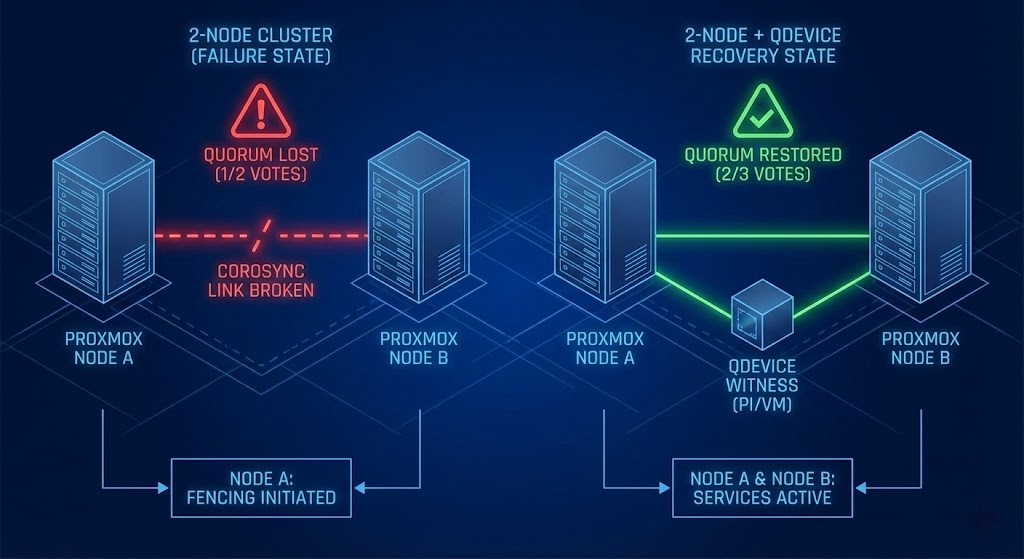

- The Math Trap: Corosync requires $>50\%$ of the votes. With 2 nodes, losing one leaves exactly 50%, which is a tie. No majority = cluster freeze.

- The Failure Mode: When Node 1 dies, Node 2 cannot confirm it has a majority, so it fences itself to prevent data corruption.

- The 15-Minute Fix: A QDevice (external witness) restores the math. $2 + 1 = 3$.

- Enterprise Reality: QDevice is for edge cases. True enterprise HA starts at 3 nodes with hardware fencing (STONITH).

The Lie: “Two Proxmox Nodes = vSphere HA”

I hear this constantly from clients migrating off Broadcom: “We only need two servers. VMware let us do it, so Proxmox should too.”

Here is the cold reality: VMware didn’t eliminate quorum physics—it just hid them behind expensive proprietary locking, vCenter arbitration, and license-enforced constraints. Proxmox doesn’t hide physics. It respects them.

Corosync (the engine driving Proxmox HA) requires a strict majority of votes to run workloads safely. With two nodes, you have 2 votes. If one fails, you have 1 vote.

1 vote out of 2 is exactly 50%, and 50% is not more than 50%.

There is no tie-breaker. So the system does the only safe thing: it fences everything to prevent Split-Brain. That’s not a bug. That’s integrity.

The Quorum Physics Trap (Math That Bites)

Here is the exact math that kills your cluster on a Saturday morning:

| Scenario | Node A | Node B | Total Votes | Required for Quorum (>50%) | Result |
| --- | --- | --- | --- | --- | --- |
| Healthy | 1 | 1 | 2 | 2 | ✅ Running |
| Node B Dies | 1 | 0 | 1 | 2 | ❌ FENCE ALL |
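The table above boils down to one integer formula. Here is a minimal sketch in plain Bash, assuming one vote per node; the function names `quorum_threshold` and `has_quorum` are made up for illustration and are not part of Proxmox or Corosync.

```bash
#!/usr/bin/env bash
# Minimum votes for a strict majority: floor(total / 2) + 1.
quorum_threshold() {
  local total=$1
  echo $(( total / 2 + 1 ))
}

# Succeeds (exit 0) when the votes still present reach the threshold.
has_quorum() {
  local present=$1 total=$2
  (( present >= $(quorum_threshold "$total") ))
}

# Two-node cluster: first healthy, then with one node lost.
for present in 2 1; do
  if has_quorum "$present" 2; then
    echo "$present/2 votes: quorate"
  else
    echo "$present/2 votes: NOT quorate"
  fi
done
```

Swap the `2` for a `3` and the one-failure case flips to quorate, which is the whole argument of this article in two lines of arithmetic.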

What You Actually See

When this happens, your logs will scream at you.

```bash
pvecm status
# Abridged output on the surviving node:
# Quorate:          No
# Expected votes:   2
# Total votes:      1
# Quorum:           2 Activity blocked
```
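If you want monitoring to catch this state before a human does, the check can be scripted. The sketch below assumes `pvecm status` prints a `Quorate:` line followed by `Yes` or `No`; verify that against your own version's output. The helper `is_quorate` and the sample text are hypothetical stand-ins so the snippet runs anywhere.

```bash
#!/usr/bin/env bash
# Reads `pvecm status` text on stdin; exits 0 only if it reports "Quorate: Yes".
is_quorate() {
  grep -Eq '^Quorate:[[:space:]]+Yes'
}

# Sample text standing in for real output (assumed layout, abridged).
sample_blocked=$'Quorate:          No\nExpected votes:   2\nTotal votes:      1\nQuorum:           2 Activity blocked'

if printf '%s\n' "$sample_blocked" | is_quorate; then
  echo "cluster is quorate"
else
  echo "cluster is NOT quorate: HA will not start workloads"
fi

# On a real node (not run here): pvecm status | is_quorate
```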

Symptoms:

- All VMs stuck in `fenced` or `stopped`.

- The surviving node is healthy, accessible via SSH, but refuses to start workloads.

- Your “HA cluster” is now mathematically forbidden from working. Welcome to Friday night hell.

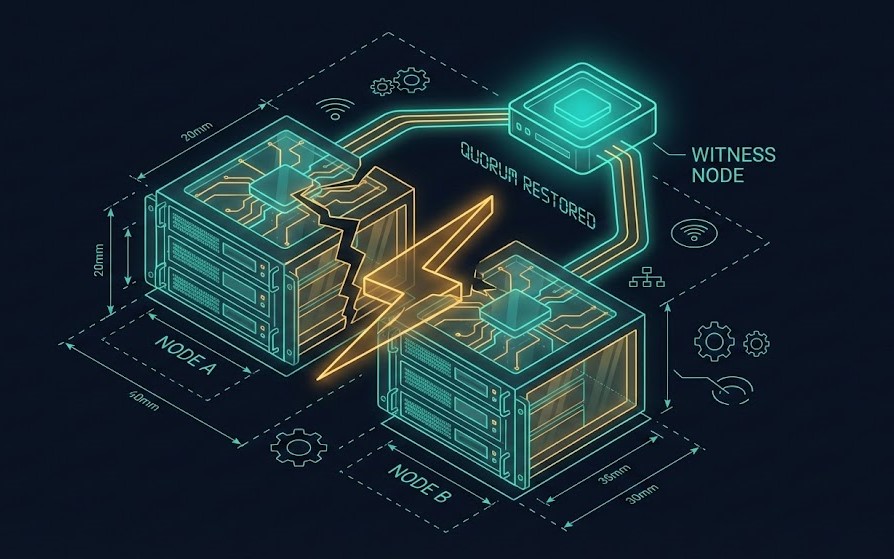

The 2-Node Death Spiral (Step-by-Step)

I once watched payroll batch processing halt at 2:00 AM because a single PSU died in a 2-node cluster.

- State: Both nodes healthy. (2/2 votes).

- Event: Node B power supply fails. Heartbeat lost.

- Reaction: Node A asks, “Did Node B crash, or did the network partition?”

- Calculation: Node A checks votes. It has 1. It needs 2.

- Decision: “No majority. I cannot guarantee I am the only writer. To save the filesystem, I must stop.”

- Outcome: Node A fences itself. The entire data center is dark.

The CIO’s takeaway from that night? “Our HA failed when we needed it most.” That sentence should terrify you.

The Physics Fix: Add a QDevice (Witness Vote)

The fix isn’t complicated. It’s mathematical. You need an External Witness—a third vote that lives outside the blast radius of your two nodes.

The Correct Math:

2 Nodes (1 vote each) + 1 QDevice (1 vote) = 3 Total Votes

If Node B dies: Node A + QDevice = 2 Votes.

2 Votes ≥ 2 (Majority)

Result: Quorum achieved. VMs auto-start on Node A.
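Written as a formula, the same arithmetic looks like this. The symbols $Q$ (votes required) and $N$ (total expected votes) are shorthand introduced here, not Corosync terminology.

$$
Q(N) = \left\lfloor \frac{N}{2} \right\rfloor + 1
$$

$$
Q(2) = 2 \;\Rightarrow\; 1 < 2 \text{ after one failure (no quorum)}, \qquad Q(3) = 2 \;\Rightarrow\; 2 \ge 2 \text{ after one failure (quorum held)}
$$

Going from 2 to 3 votes does not raise the threshold. It only adds the spare vote that lets you lose one.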

Hardware Options (Pick Your Poison)

| Option | CapEx Cost | Reliability | Use Case |
| --- | --- | --- | --- |
| Raspberry Pi 4 | ~$35 | High | The Homelab King. Ethernet connected, low power. |
| AWS t3.nano | ~$5/mo | Very High | Excellent for remote/edge clusters. |
| Spare Linux VM | $0 | Medium | Good if it lives on a separate hypervisor. |
| Cluster Node | N/A | ❌ FAIL | Never put the witness on the cluster itself. |

Deploying a QDevice (Lab-Validated)

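Pre-requisite: Ensure you have root SSH access to both Proxmox nodes and to your target QDevice host (Debian/Ubuntu). `pvecm qdevice setup` logs in to the QDevice host as root to exchange keys and certificates, so key-based root login (or temporarily enabled password login) must work.

1. On the QDevice Host (Pi/VM):

```bash
# Install the external vote daemon (qnetd). This is the only package the witness needs.
apt update && apt install -y corosync-qnetd
```

2. On the Main Proxmox Cluster:

```bash
# Install the QDevice client. Run this on EVERY cluster node, not just Node 1.
apt update && apt install -y corosync-qdevice

# Then, from one node only, register the QDevice (IP of your Pi/VM)
pvecm qdevice setup 192.168.1.100
```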

3. Verify The Math:

```bash
pvecm status
# Look for (abridged):
# Quorate:          Yes
# Expected votes:   3
# Total votes:      3   <-- SUCCESS: 2 nodes + 1 QDevice
# Quorum:           2
# Flags:            Quorate Qdevice
```

Time: 15 minutes. Cost: <$50. Outcome: Your cluster stops triggering self-fencing during minor failures.
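For the curious, the setup command records the witness in the `quorum` section of `/etc/pve/corosync.conf`. The fragment below is an illustrative sketch of what that section typically looks like, reusing the example IP from the steps above. Treat the exact keys and ordering as an assumption and compare against your own file rather than pasting this in.

```
quorum {
  provider: corosync_votequorum
  device {
    model: net
    votes: 1
    net {
      host: 192.168.1.100
      algorithm: ffsplit
      tls: on
    }
  }
}
```

If the `device` block is missing after setup, the QDevice was not registered, and the cluster is still doing 2-vote math.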

When 2+1 Still Fails (Production Reality Check)

If you are running a Homelab or a small SMB stack, stop reading here. You are safe.

But if you are an Enterprise Architect, we need to talk about STONITH and Scale.

A QDevice fixes Quorum physics, but it does not fix Capacity or Storage physics. I’ve seen 2+1 clusters survive the network partition only to die immediately because the single surviving node couldn’t handle the RAM/CPU pressure of 200 re-starting VMs.
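The capacity problem is just as easy to check on paper before it bites. A rough sketch, looking at RAM only and assuming the worst case is losing your largest node; the function name `survives_node_loss` and the 180 GB VM footprint are hypothetical numbers for illustration.

```bash
#!/usr/bin/env bash
# Succeeds if the nodes left after losing the LARGEST node can hold all VM RAM.
# Usage: survives_node_loss <total_vm_ram_gb> <node_ram_gb>...
survives_node_loss() {
  local vm_ram=$1; shift
  local total=0 largest=0 ram
  for ram in "$@"; do
    total=$(( total + ram ))
    if (( ram > largest )); then largest=$ram; fi
  done
  (( total - largest >= vm_ram ))
}

# Hypothetical footprint: 180 GB of VM RAM on 128 GB nodes.
if survives_node_loss 180 128 128; then
  echo "2 nodes: survivor can absorb the load"
else
  echo "2 nodes: survivor CANNOT absorb the load"
fi

if survives_node_loss 180 128 128 128; then
  echo "3 nodes: survivors can absorb the load"
else
  echo "3 nodes: survivors CANNOT absorb the load"
fi
```

A real audit would also account for CPU, storage IOPS, and hypervisor overhead, so treat a pass here as necessary, not sufficient.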

Enterprise Constraints:

- Ceph Storage: Ceph needs its own quorum. A 2-node Proxmox + QDevice setup cannot run Ceph safely. You strictly need 3 full nodes for Ceph monitors.

- Rack Awareness: If you have 5 nodes, but 3 are in Rack A and 2 in Rack B… losing Rack A kills your quorum (2/5).

- Fencing (STONITH): In high-stakes environments, software quorum isn’t enough. You need hardware fencing (IPMI/PDU) to physically cut power to a rogue node before recovering its workloads elsewhere.
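The rack-awareness constraint above can be tested the same way. This sketch assumes one vote per node and no witness; `survives_any_rack_loss` is a hypothetical helper that takes the node count of each rack and checks whether losing any single rack still leaves a majority.

```bash
#!/usr/bin/env bash
# Succeeds only if the cluster keeps quorum after losing ANY one rack.
# Usage: survives_any_rack_loss <nodes_in_rack>...
survives_any_rack_loss() {
  local total=0 n
  for n in "$@"; do
    total=$(( total + n ))
  done
  local needed=$(( total / 2 + 1 ))
  for n in "$@"; do
    if (( total - n < needed )); then
      return 1
    fi
  done
  return 0
}

# 5 nodes split 3 + 2: losing the rack of 3 leaves 2 votes, but 3 are needed.
if survives_any_rack_loss 3 2; then
  echo "3+2 layout: survives any rack loss"
else
  echo "3+2 layout: loses quorum if the larger rack fails"
fi

# Same 5 nodes spread 2 + 2 + 1 across three racks.
if survives_any_rack_loss 2 2 1; then
  echo "2+2+1 layout: survives any rack loss"
else
  echo "2+2+1 layout: loses quorum on some rack failure"
fi
```

The takeaway: with only two failure domains, one of them always holds the majority, so rack-level survival needs at least three.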

Architecture Archetypes (Pick Your Scale)

Archetype A: The “Homelab Escape Hatch”

- Structure: 2 Beefy Nodes + Raspberry Pi QDevice.

- Pros: Cheap ($100 HA fix), survives single node failure.

- Cons: No live migration (unless shared storage), not payroll-safe.

- Verdict: Perfect for learning or non-critical edge.

Archetype B: SMB Production Ready

- Structure: 3 Identical Nodes (e.g., 16c/128GB each).

- Pros: Live migration, no QDevice dependency, true N+1 redundancy.

- Verdict: The minimum viable product for business.

Archetype C: Enterprise Standard

- Structure: 5+ Nodes + Ceph + Redundant Corosync Links.

- Pros: Survives drive failures, node failures, and even rack failures (if architected correctly).

- Verdict: Required for 100+ VMs or mission-critical SLAs.

Validation Tests (Prove Your HA Works)

If you haven’t tested failure, you don’t have HA—you have hope. And hope is not a clustering strategy. Nor is blind optimism.

- The Pull-the-Plug Test: Physically yank the power from Node 2. Does Node 1 take over?

  - Without QDevice: NO.

  - With QDevice: YES.

- The Network Slice: Unplug the LAN cables from Node 2. `pvecm status` on Node 1 should show `2/3`.

- The QDevice Death: Stop the `corosync-qnetd` service on your Pi. Cluster should remain Green (2/3 votes active).

Verdict: Fix It Before Disaster Teaches You

The 2-node trap kills more Proxmox clusters than hardware failure ever will.

- ❌ `pvecm status = 1/2` → You are in the trap.

- ✅ `pvecm status = 2/3` → Escape hatch deployed.

- ✅ `3+ Nodes` → Production reality.

Your Weekend Project:

- Run `pvecm status` right now. Confirm your doom.

- If you see `1/2`, order a Raspberry Pi or spin up a t3.nano.

- Run the validation tests. Prove the physics work.

- Before you scale further: Use our HCI Migration Advisor to audit your cluster health and zombie snapshots.

Two servers ≠ HA. Two servers + QDevice = survival. Three servers = reality.

I wish someone had told me this in Week 1. Now you know.

Additional Resources

- Proxmox Corosync & Quorum Docs: https://pve.proxmox.com/wiki/Cluster_Manager

- Corosync QDevice Architecture: https://corosync.github.io/corosync-qdevice/

- Proxmox HA Manager Internals: https://pve.proxmox.com/wiki/High_Availability

- Split-Brain & Fencing Concepts: https://clusterlabs.org/pacemaker/doc/en-US/Pacemaker/2.0/html-single/Pacemaker_Explained/

This architectural deep-dive contains affiliate links to hardware and software tools validated in our lab. If you make a purchase through these links, we may earn a commission at no additional cost to you. This support allows us to maintain our independent testing environment and continue producing ad-free strategic research. See our Full Policy.